Solves #2247. Note that the only test I added checks for the

BeautifulSoup behaviour change. Happy to add a test for

`DirectoryLoader` if deemed necessary.

This makes it easy to run the tests locally. Some tests may not be able

to run in `Windows` environments, hence the need for a `Dockerfile`.

The new `Dockerfile` sets up a multi-stage build to install Poetry and

dependencies, and then copies the project code to a final image for

tests.

The `Makefile` has been updated to include a new 'docker_tests' target

that builds the Docker image and runs the unit tests inside a

container.

It would be beneficial to offer a local testing environment for

developers by enabling them to run a Docker image on their local

machines with the required dependencies, particularly for integration

tests. While this is not included in the current PR, it would be

straightforward to add in the future.

At the moment, this pull request does not include documentation of the
changes made.

I'm using Deeplake as a vector store for a Q&A application. When several

questions are being processed at the same time for the same dataset, the

2nd one triggers the following error:

> LockedException: This dataset cannot be open for writing as it is locked by another machine. Try loading the dataset with `read_only=True`.

Answering questions doesn't require writing new embeddings so it's ok to

open the dataset in read-only mode at that time.

This pull request thus adds the `read_only` option to the Deeplake

constructor and to its subsequent `deeplake.load()` call.

The related Deeplake documentation is

[here](https://docs.deeplake.ai/en/latest/deeplake.html#deeplake.load).
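
The forwarding pattern described above can be sketched as follows. This is only an illustration under stated assumptions: `fake_load` and `VectorStoreSketch` are hypothetical stand-ins, not the real `deeplake.load()` or the actual vector store class, and the dataset path is made up.

```python
from typing import Any, Callable, Dict


def fake_load(path: str, read_only: bool = False) -> Dict[str, Any]:
    """Hypothetical stand-in for a dataset loader; records how the dataset was opened."""
    return {"path": path, "read_only": read_only}


class VectorStoreSketch:
    """Hypothetical wrapper that forwards `read_only` to its loader."""

    def __init__(
        self,
        dataset_path: str,
        read_only: bool = False,
        loader: Callable[..., Dict[str, Any]] = fake_load,
    ) -> None:
        # Forward the flag unchanged so a query-only process can open the
        # dataset without asking for write access.
        self.ds = loader(dataset_path, read_only=read_only)


# A Q&A process that only reads embeddings opts in explicitly.
qa_store = VectorStoreSketch("./my_dataset", read_only=True)
print(qa_store.ds["read_only"])
```

Defaulting `read_only` to `False` in the sketch keeps existing callers unchanged, which is one plausible way to make such an option backward compatible.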

I've tested this update on my local dev environment. I don't know if an

integration test and/or additional documentation are expected, however.

Let me know if they are, ideally with some guidance as I'm not particularly

experienced in Python.

This merge request proposes changes to the TextLoader class to make it

more flexible and robust when handling text files with different

encodings. The current implementation of TextLoader does not provide a

way to specify the encoding of the text file being read. As a result, it

might lead to incorrect handling of files with non-default encodings,

causing issues with loading the content.

Benefits:

- The proposed changes will make the TextLoader class more flexible,

allowing it to handle text files with different encodings.

- The changes maintain backward compatibility, as the encoding parameter

is optional.
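
As a rough illustration of the idea, a loader with an optional encoding parameter could look like the sketch below. `TextLoaderSketch` is a hypothetical simplified class, not the actual `TextLoader`: it returns a plain string rather than document objects, and the file name is made up.

```python
import tempfile
from pathlib import Path
from typing import Optional


class TextLoaderSketch:
    """Hypothetical simplified loader with an optional encoding."""

    def __init__(self, file_path: str, encoding: Optional[str] = None) -> None:
        self.file_path = file_path
        # None keeps the platform default, so existing callers are unaffected.
        self.encoding = encoding

    def load(self) -> str:
        with open(self.file_path, encoding=self.encoding) as f:
            return f.read()


def demo() -> str:
    """Write a Latin-1 file and read it back with an explicit encoding."""
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "latin1.txt"
        path.write_bytes("café".encode("latin-1"))
        return TextLoaderSketch(str(path), encoding="latin-1").load()


print(demo())
```

Without the explicit `encoding="latin-1"`, reading the same bytes as UTF-8 would fail to decode, which is the kind of problem the optional parameter is meant to avoid.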

# What does this PR do?

This PR adds the `__version__` variable in the main `__init__.py` to

easily retrieve the version, e.g., for debugging purposes or when a user

wants to open an issue and provide information.

Usage

```python
>>> import langchain
>>> langchain.__version__
'0.0.127'
```

When downloading a Google Doc, if the document is not a Google Doc type
(for example, a .DOCX file uploaded to your Google Drive), the error you
get is not informative at all. I added an error handler that prints the
exact error that occurred while downloading the document from Google
Docs.

### Summary

Adds a new document loader for processing e-publications. Works with

`unstructured>=0.5.4`. You need to have

[`pandoc`](https://pandoc.org/installing.html) installed for this loader

to work.

### Testing

```python
from langchain.document_loaders import UnstructuredEPubLoader

loader = UnstructuredEPubLoader("winter-sports.epub", mode="elements")
data = loader.load()
data[0]
```

The upstream Wikipedia page loading still seems to have issues. This PR
is a compromise: it does an exact-match search rather than a search for
the completion.

See previous PR: https://github.com/hwchase17/langchain/pull/2169

Creating a page using the title causes a Wikipedia search with

autocomplete set to true. This frequently causes the summaries to be

unrelated to the actual page found.

See:

1554943e8a/wikipedia/wikipedia.py (L254-L280)

`predict_and_parse` exists, and it's a nice abstraction to allow for

applying output parsers to LLM generations. And async is very useful.

As an aside, the difference between `call/acall`, `predict/apredict` and

`generate/agenerate` isn't entirely

clear to me other than they all call into the LLM in slightly different

ways.

Is there some documentation or a good way to think about these

differences?

One thought:

output parsers should just work magically for all those LLM calls. If

the `output_parser` arg is set on the prompt, the LLM has access, so it

seems like extra work on the user's end to have to call

`output_parser.parse`.

If this sounds reasonable, happy to throw something together. @hwchase17

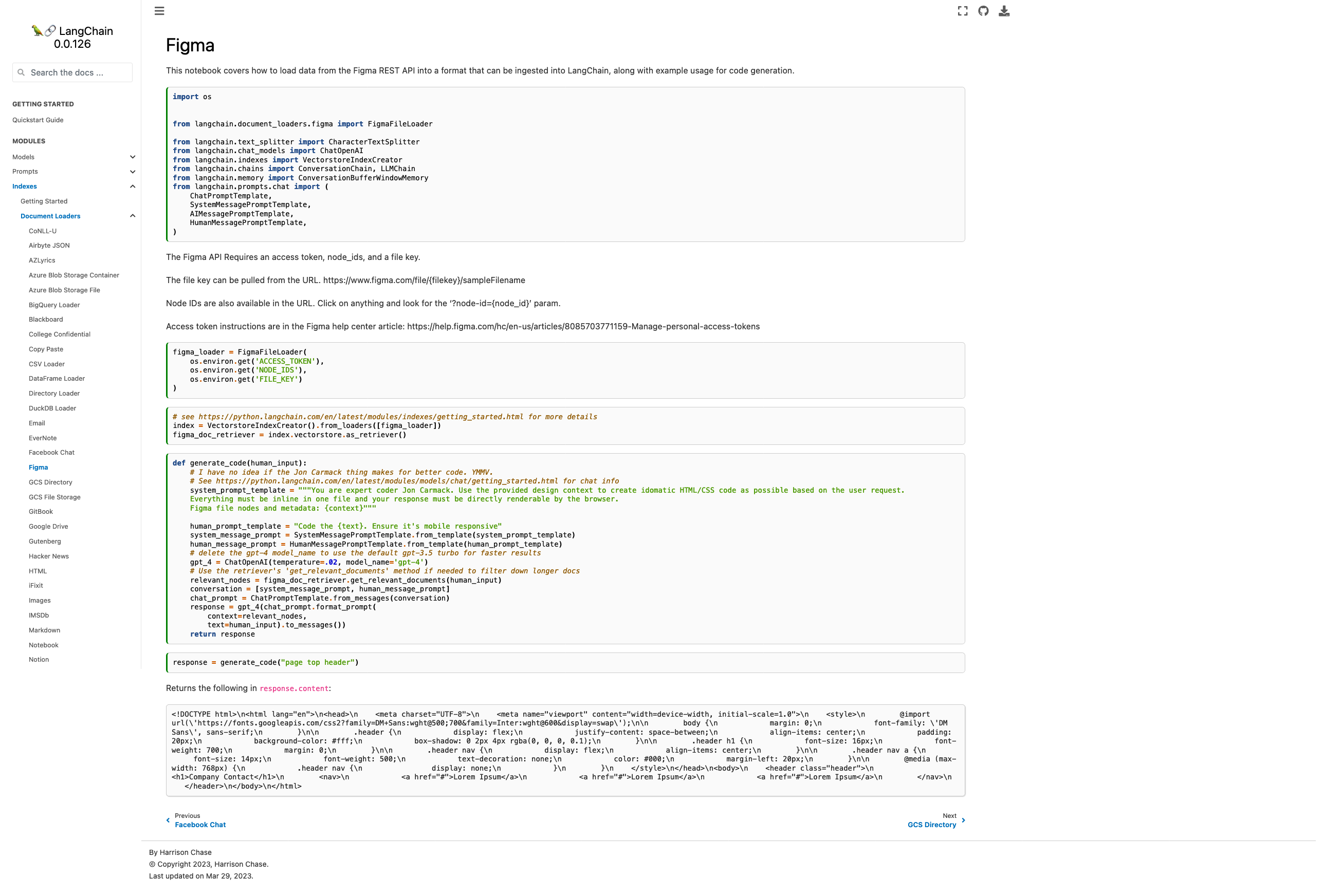

- Fixed the docs, which were pointing to the wrong module

- Added some explanation on how to find the necessary parameters

- Added chat-based codegen example w/ retrievers

Picture of the new page:

Please let me know if you'd like any tweaks! I wasn't sure if the

example was too heavy for the page or not but decided "hey, I probably

would want to see it" and so included it.

Co-authored-by: maxtheman <max@maxs-mbp.lan>

The new functionality of Redis backend for chat message history

([see](https://github.com/hwchase17/langchain/pull/2122)) uses the Redis

list object to store messages and then uses `lrange()` to retrieve

the list of messages

([see](https://github.com/hwchase17/langchain/blob/master/langchain/memory/chat_message_histories/redis.py#L50)).

Unfortunately this retrieves the messages as a list sorted in the

opposite order of how they were inserted - meaning the last inserted

message will be first in the retrieved list - which is not what we want.

This PR fixes that by changing the order to match the order of
insertion.
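
The ordering problem can be reproduced without a Redis server. The sketch below assumes messages are pushed at the head of the list (LPUSH-style); `FakeRedisList` is a hypothetical in-memory stand-in that only supports the index forms used here, and reversing the result is just one possible way to restore insertion order, not necessarily how this PR does it.

```python
from typing import List


class FakeRedisList:
    """Hypothetical in-memory stand-in for a Redis list (head insertion only)."""

    def __init__(self) -> None:
        self._items: List[str] = []

    def lpush(self, value: str) -> None:
        # Head insertion: the newest item ends up at index 0.
        self._items.insert(0, value)

    def lrange(self, start: int, end: int) -> List[str]:
        # The end index is inclusive; this sketch only handles end == -1
        # (meaning "through the last item") or a non-negative end.
        stop = None if end == -1 else end + 1
        return self._items[start:stop]


def messages_in_insertion_order(store: FakeRedisList) -> List[str]:
    # Reverse the newest-first result so the oldest message comes first.
    return store.lrange(0, -1)[::-1]


store = FakeRedisList()
for message in ["first", "second", "third"]:
    store.lpush(message)

print(store.lrange(0, -1))
print(messages_in_insertion_order(store))
```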

Currently, if a tool is set to verbose, an agent can override it by

passing in its own verbose flag. This is not ideal if we want to stream

back responses from agents, as we want the LLM and tools to be sending

back events but nothing else. This also makes the behavior consistent

with the TypeScript version.
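
A minimal sketch of the intended behavior, assuming each tool keeps its own verbose flag and the agent never passes its flag down. `ToolSketch` and `AgentSketch` are hypothetical classes for illustration only, not the actual agent or tool implementations.

```python
from typing import Dict, List


class ToolSketch:
    """Hypothetical tool that records events only when its own flag is set."""

    def __init__(self, name: str, verbose: bool = False) -> None:
        self.name = name
        self.verbose = verbose
        self.events: List[str] = []

    def run(self, tool_input: str) -> str:
        # Only the tool's own flag decides whether it emits events.
        if self.verbose:
            self.events.append(f"{self.name} started with {tool_input!r}")
        return tool_input.upper()


class AgentSketch:
    """Hypothetical agent whose verbose flag covers its own logging only."""

    def __init__(self, tools: List[ToolSketch], verbose: bool = False) -> None:
        self.tools: Dict[str, ToolSketch] = {tool.name: tool for tool in tools}
        self.verbose = verbose

    def use_tool(self, name: str, tool_input: str) -> str:
        # The agent's verbose flag is deliberately not passed down to the tool.
        return self.tools[name].run(tool_input)


loud = ToolSketch("shout", verbose=True)
quiet = ToolSketch("whisper", verbose=False)
agent = AgentSketch([loud, quiet], verbose=False)
agent.use_tool("shout", "hi")
agent.use_tool("whisper", "hi")
print(loud.events, quiet.events)
```

With this arrangement a non-verbose agent can still stream events from a verbose tool, while a quiet tool stays quiet.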

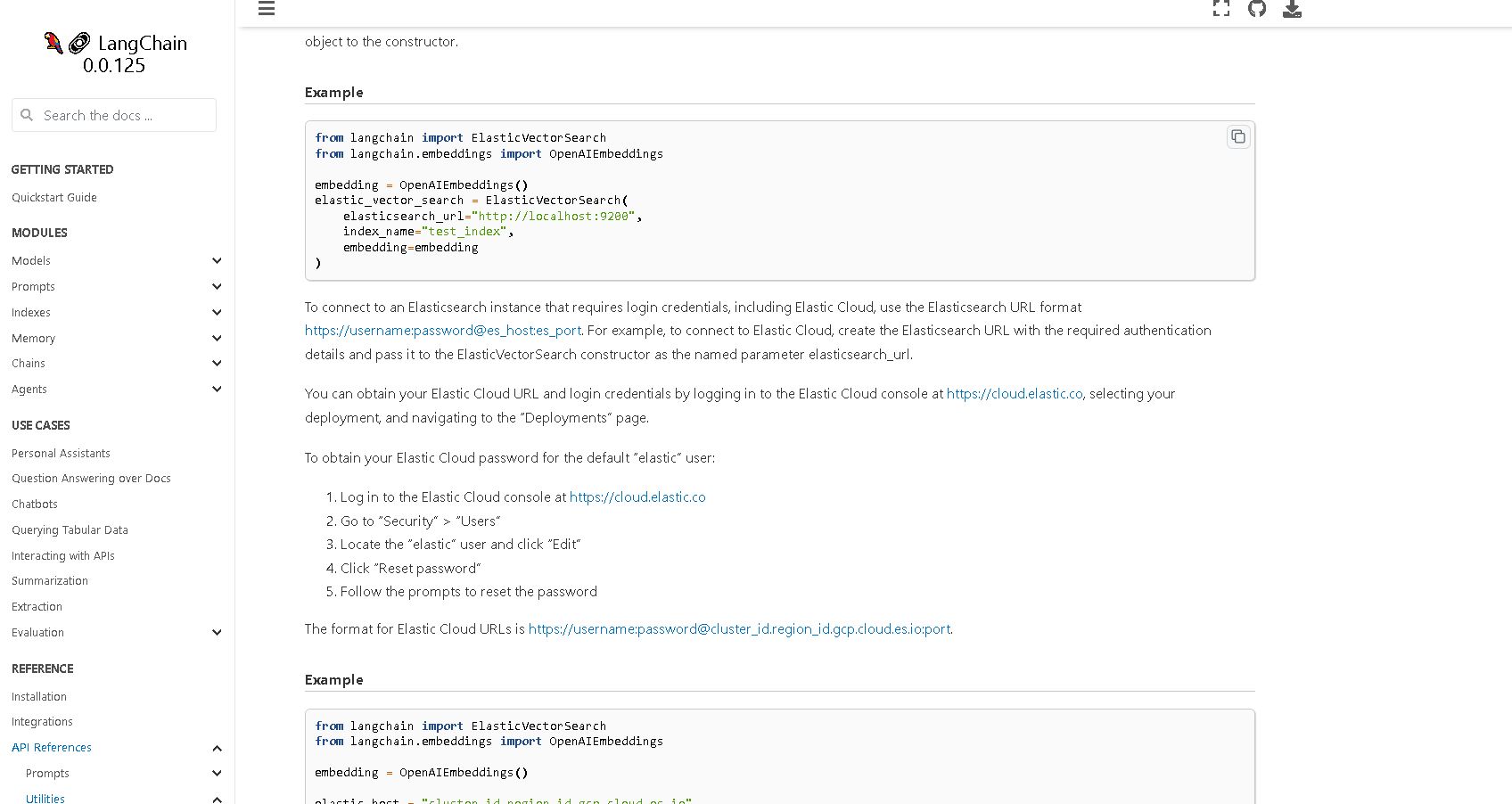

This merge includes updated comments in the ElasticVectorSearch class to

provide information on how to connect to `Elasticsearch` instances that

require login credentials, including Elastic Cloud, without any

functional changes.

The `ElasticVectorSearch` class now inherits from the `ABC` abstract

base class, which does not break or change any functionality. This

allows for easy subclassing and creation of custom implementations in

the future or for any users, especially for me 😄
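
To illustrate why inheriting from `ABC` is non-breaking: a class that derives from `ABC` but declares no abstract methods can still be instantiated directly, and subclasses can override behavior as usual. The classes, URL, and index name below are hypothetical and only loosely modeled on the description above.

```python
from abc import ABC


class VectorSearchSketch(ABC):
    """Hypothetical base class; no abstract methods, so it stays instantiable."""

    def __init__(self, url: str, index_name: str) -> None:
        self.url = url
        self.index_name = index_name

    def describe(self) -> str:
        return f"{self.index_name} @ {self.url}"


class CustomSearch(VectorSearchSketch):
    """Hypothetical custom implementation built on the base class."""

    def describe(self) -> str:
        return "custom: " + super().describe()


# Direct instantiation still works because nothing is marked abstract.
base = VectorSearchSketch("http://localhost:9200", "docs")
custom = CustomSearch("http://localhost:9200", "docs")
print(base.describe())
print(custom.describe())
```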

I confirm that before pushing these changes, I ran:

```bash
make format && make lint
```

To ensure that the new documentation is rendered correctly, I ran:

```bash
make docs_build
```

To ensure that the new documentation has no broken links, I ran a check:

```bash
make docs_linkcheck
```

Also take a look at https://github.com/hwchase17/langchain/issues/1865

P.S. Sorry for spamming you with force-pushes. In the future, I will be

smarter.