mirror of

https://github.com/hwchase17/langchain

synced 2024-11-11 19:11:02 +00:00

* **Description:** Adds a simple LLM implementation for interacting with [llamafile](https://github.com/Mozilla-Ocho/llamafile)-based models. * **Dependencies:** N/A * **Issue:** N/A **Detail** [llamafile](https://github.com/Mozilla-Ocho/llamafile) lets you run LLMs locally from a single file on most computers without installing any dependencies. To use the llamafile LLM implementation, the user needs to: 1. Download a llamafile e.g. https://huggingface.co/jartine/TinyLlama-1.1B-Chat-v1.0-GGUF/resolve/main/TinyLlama-1.1B-Chat-v1.0.Q5_K_M.llamafile?download=true 2. Make the file executable. 3. Run the llamafile in 'server mode'. (All llamafiles come packaged with a lightweight server; by default, the server listens at `http://localhost:8080`.) ```bash wget https://url/of/model.llamafile chmod +x model.llamafile ./model.llamafile --server --nobrowser ``` Now, the user can invoke the LLM via the LangChain client: ```python from langchain_community.llms.llamafile import Llamafile llm = Llamafile() llm.invoke("Tell me a joke.") ``` |

||

|---|---|---|

| .. | ||

| langchain_community | ||

| scripts | ||

| tests | ||

| Makefile | ||

| poetry.lock | ||

| pyproject.toml | ||

| README.md | ||

🦜️🧑🤝🧑 LangChain Community

Quick Install

pip install langchain-community

What is it?

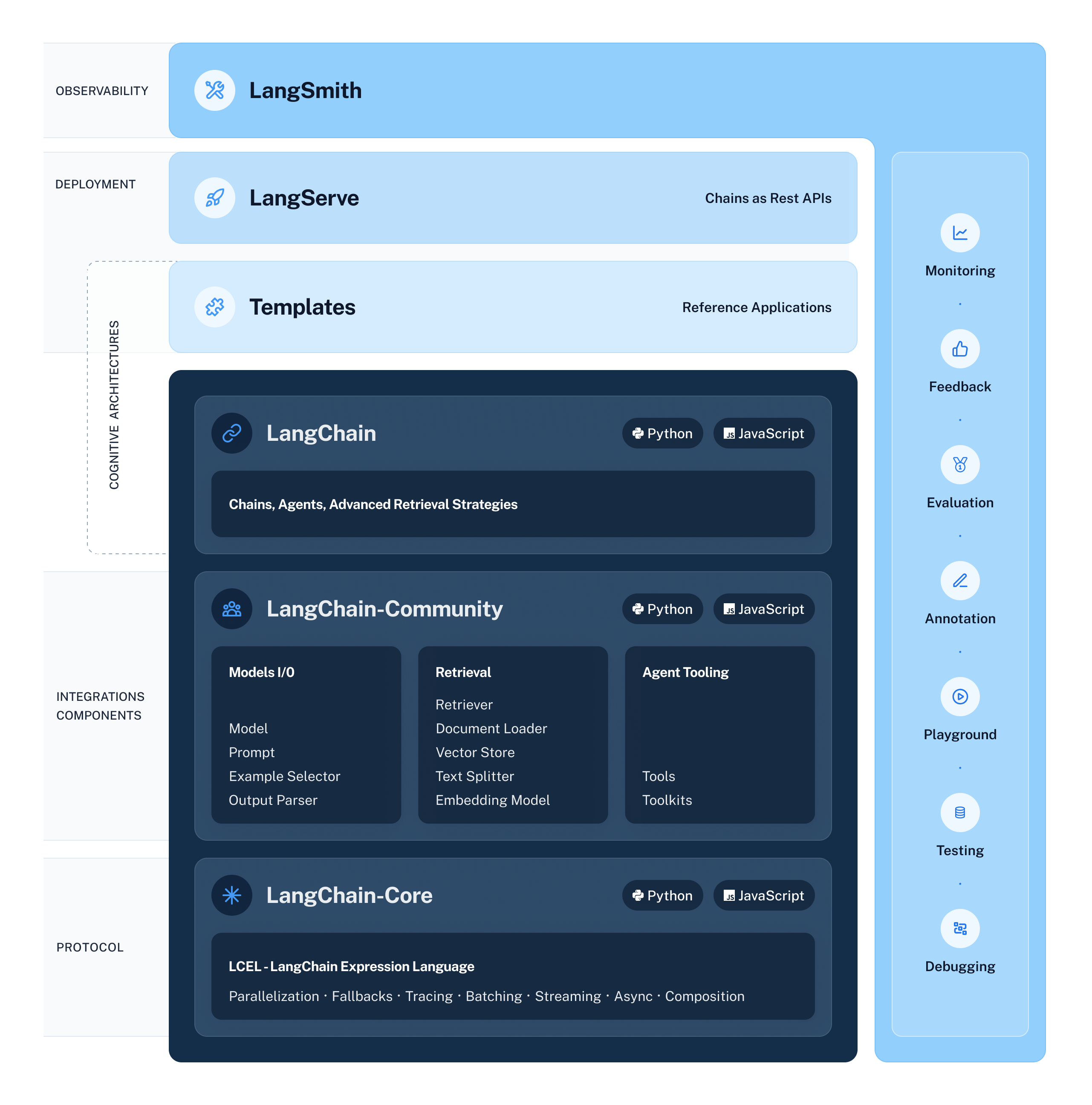

LangChain Community contains third-party integrations that implement the base interfaces defined in LangChain Core, making them ready-to-use in any LangChain application.

For full documentation see the API reference.

📕 Releases & Versioning

langchain-community is currently on version 0.0.x

All changes will be accompanied by a patch version increase.

💁 Contributing

As an open-source project in a rapidly developing field, we are extremely open to contributions, whether it be in the form of a new feature, improved infrastructure, or better documentation.

For detailed information on how to contribute, see the Contributing Guide.