forked from Archives/langchain

Update Cypher QA prompt (#5173)

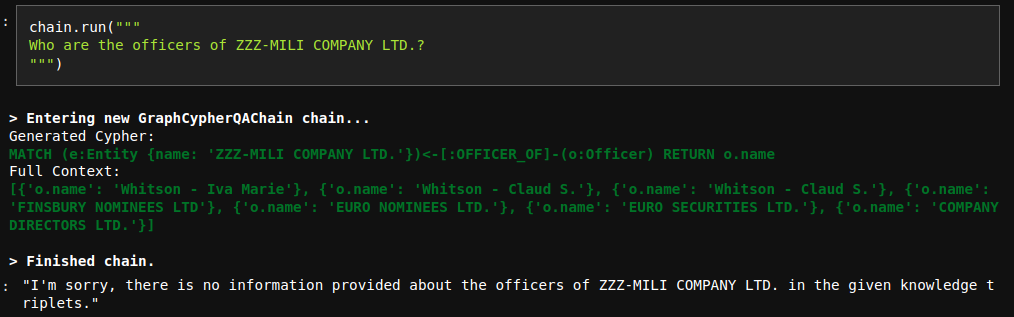

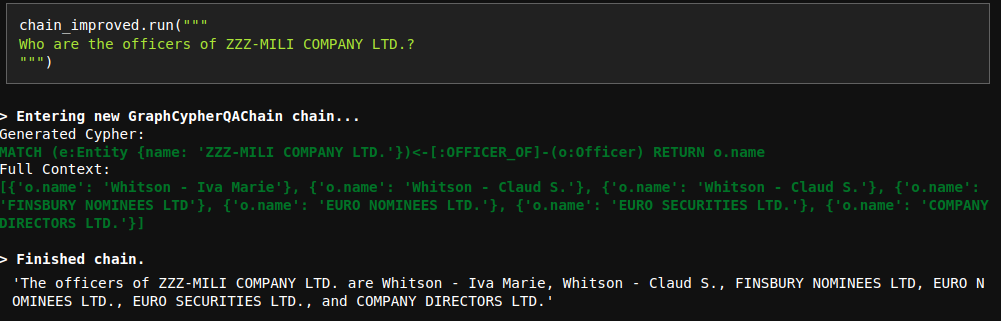

# Improve Cypher QA prompt

The current QA prompt is optimized for NetworkX answer generation, which returns all the possible triples. Cypher search, however, is more focused and doesn't necessarily return all the context information. As a result, the model sometimes refuses to generate an answer even though the information is provided.

To fix this issue, I have updated the prompt. Interestingly, I tried many variations with fewer instructions and they didn't work properly, but the current fix works well.

parent aa14e223ee

commit fd866d1801

    @@ -8,7 +8,7 @@ from pydantic import Field
     from langchain.base_language import BaseLanguageModel
     from langchain.callbacks.manager import CallbackManagerForChainRun
     from langchain.chains.base import Chain
    -from langchain.chains.graph_qa.prompts import CYPHER_GENERATION_PROMPT, PROMPT
    +from langchain.chains.graph_qa.prompts import CYPHER_GENERATION_PROMPT, CYPHER_QA_PROMPT
     from langchain.chains.llm import LLMChain
     from langchain.graphs.neo4j_graph import Neo4jGraph
     from langchain.prompts.base import BasePromptTemplate

    @@ -45,7 +45,7 @@ class GraphCypherQAChain(Chain):
             cls,
             llm: BaseLanguageModel,
             *,
    -        qa_prompt: BasePromptTemplate = PROMPT,
    +        qa_prompt: BasePromptTemplate = CYPHER_QA_PROMPT,
             cypher_prompt: BasePromptTemplate = CYPHER_GENERATION_PROMPT,
             **kwargs: Any,
         ) -> GraphCypherQAChain:

    @@ -48,3 +48,16 @@ The question is:
     CYPHER_GENERATION_PROMPT = PromptTemplate(
         input_variables=["schema", "question"], template=CYPHER_GENERATION_TEMPLATE
     )
    +
    +CYPHER_QA_TEMPLATE = """You are an assistant that helps to form nice and human understandable answers.
    +The information part contains the provided information that you can use to construct an answer.
    +The provided information is authorative, you must never doubt it or try to use your internal knowledge to correct it.
    +Make it sound like the information are coming from an AI assistant, but don't add any information.
    +Information:
    +{context}
    +
    +Question: {question}
    +Helpful Answer:"""
    +CYPHER_QA_PROMPT = PromptTemplate(
    +    input_variables=["context", "question"], template=CYPHER_QA_TEMPLATE
    +)
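The new template has two slots, `{context}` and `{question}`. As a rough illustration of what the model ends up seeing, the sketch below fills those slots with plain `str.format`, without LangChain or a database. The template text is copied from the diff. The helper `render_qa_prompt`, the sample rows, and the assumption that the query result is passed in as its string form are all hypothetical and only meant for illustration.

```python
# Template text copied verbatim from the commit (including its original wording).
CYPHER_QA_TEMPLATE = """You are an assistant that helps to form nice and human understandable answers.
The information part contains the provided information that you can use to construct an answer.
The provided information is authorative, you must never doubt it or try to use your internal knowledge to correct it.
Make it sound like the information are coming from an AI assistant, but don't add any information.
Information:
{context}

Question: {question}
Helpful Answer:"""


def render_qa_prompt(context: str, question: str) -> str:
    """Hypothetical helper: fill the two template slots with str.format."""
    return CYPHER_QA_TEMPLATE.format(context=context, question=question)


# Made-up rows standing in for a narrow Cypher result (assumption: the
# result reaches the prompt as a stringified list of records).
rows = [{"director": "Jane Doe"}]
question = "Who directed Example Movie?"

prompt = render_qa_prompt(context=str(rows), question=question)
print(prompt)
```

Even with a single returned row, the rendered prompt tells the model to treat that row as authoritative, which is the behaviour the commit message is aiming for when Cypher returns less context than the triple-based approach.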
