Tutorial 14 - Virtual Memory Part 2: MMIO Remap

tl;dr

- We introduce a first set of changes which is eventually needed for separating kernel and user address spaces.
- The memory mapping strategy gets more sophisticated as we do away with identity mapping the whole of the board's address space.
- Instead, only ranges that are actually needed are mapped:
  - The kernel binary stays identity mapped for now.
  - Device MMIO regions are remapped lazily (to a special reserved virtual address region).

Introduction

This tutorial is a first step of many needed for enabling userspace applications (which we

hopefully will have some day in the very distant future).

For this, one of the features we want is a clean separation of kernel and user address spaces.

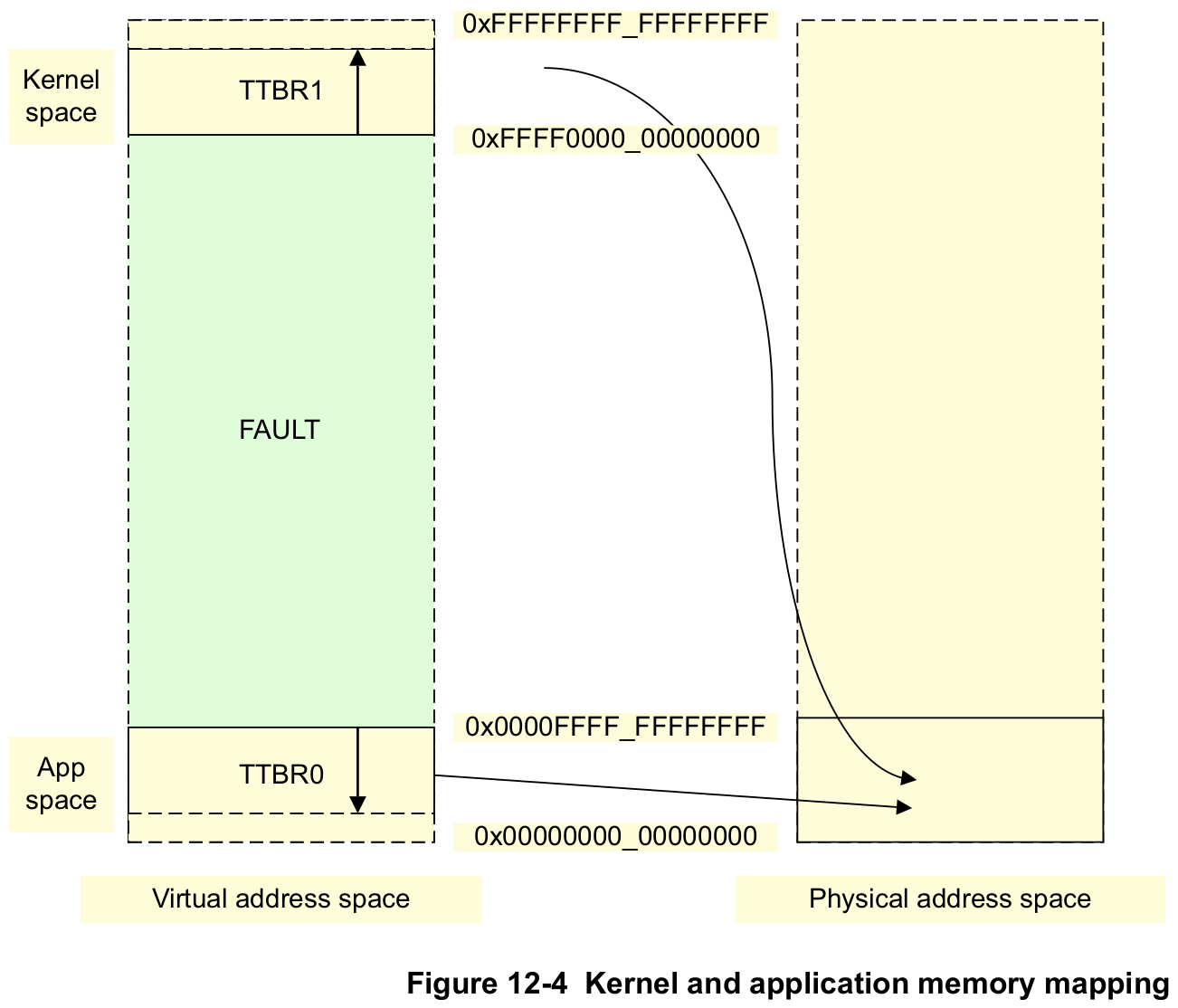

Fortunately, ARMv8 has convenient architecture support to realize this. The following text and
picture give some more motivation and technical information. They are quoted from the ARM Cortex-A
Series Programmer’s Guide for ARMv8-A, Chapter 12.2, Separation of kernel and application Virtual
Address spaces:

Operating systems typically have a number of applications or tasks running concurrently. Each of these has its own unique set of translation tables and the kernel switches from one to another as part of the process of switching context between one task and another. However, much of the memory system is used only by the kernel and has fixed virtual to Physical Address mappings where the translation table entries rarely change. The ARMv8 architecture provides a number of features to efficiently handle this requirement.

The table base addresses are specified in the Translation Table Base Registers TTBR0_EL1 and TTBR1_EL1. The translation table pointed to by TTBR0 is selected when the upper bits of the VA are all 0. TTBR1 is selected when the upper bits of the VA are all set to 1. [...]

Figure 12-4 shows how the kernel space can be mapped to the most significant area of memory and the Virtual Address space associated with each application mapped to the least significant area of memory. However, both of these are mapped to a much smaller Physical Address space.

This approach is also sometimes called a "higher half kernel". To eventually achieve this separation, this tutorial makes a start by changing the following things:

- Instead of bulk-identity mapping the whole of the board's address space, only the particular parts that are needed will be mapped.
- For now, the kernel binary stays identity mapped. This will be changed in the coming tutorials as it is a quite difficult and peculiar exercise to remap the kernel.
- Device MMIO regions are lazily remapped during device driver bringup (using the new DriverManager function instantiate_drivers()).
  - A dedicated region of virtual addresses that we reserve using BSP code and the linker script is used for this.
- We keep using TTBR0 for the kernel translation tables for now. This will be changed when we remap the kernel binary in the coming tutorials.
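To make the TTBR0/TTBR1 selection rule from the quoted text more tangible, here is a minimal sketch in plain Rust. The names TableSelect and select_table() are hypothetical and not part of the kernel. The sketch also assumes that both halves of the address space use the same size, and it ignores address tagging (top-byte-ignore).

```rust
/// Which translation table base register a virtual address would select.
#[derive(Debug, PartialEq, Eq)]
pub enum TableSelect {
    /// Upper bits are all 0: lower half, translated via TTBR0.
    Ttbr0,
    /// Upper bits are all 1: upper half, translated via TTBR1.
    Ttbr1,
    /// Upper bits are mixed: the address belongs to neither half.
    Fault,
}

/// Decide which table a virtual address selects.
///
/// `va_bits` is an assumption of this sketch: the number of address bits
/// translated in each half (for example 48).
pub fn select_table(va: u64, va_bits: u32) -> TableSelect {
    assert!(va_bits > 0 && va_bits < 64);

    // Everything above the translated bits must be uniformly 0 or 1.
    let upper = va >> va_bits;
    let all_ones = u64::MAX >> va_bits;

    if upper == 0 {
        TableSelect::Ttbr0
    } else if upper == all_ones {
        TableSelect::Ttbr1
    } else {
        TableSelect::Fault
    }
}
```

Since this tutorial keeps everything in the lower half, every kernel address currently falls into the TTBR0 case.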

Implementation

Until now, the whole address space of the board was identity mapped at once. The architecture

(src/_arch/_/memory/**) and bsp (src/bsp/_/memory/**) parts of the kernel worked

together directly while setting up the translation tables, without any indirection through generic

kernel code (src/memory/**).

The way it worked was that the architectural MMU code would query the bsp code about the start
and end of the physical address space, and any special regions in this space that need a mapping
that is not normal cacheable DRAM. It would then go ahead and map the whole address space at once
and never touch the translation tables again during runtime.

Starting with this tutorial, architecture and bsp code no longer create the virtual memory mappings autonomously. Instead, this is now orchestrated by the kernel's generic MMU subsystem code.

A New Mapping API in src/memory/mmu/translation_table.rs

First, we define an interface for operating on translation tables:

/// Translation table operations.
pub trait TranslationTable {
    /// Anything that needs to run before any of the other provided functions can be used.
    ///
    /// # Safety
    ///
    /// - Implementor must ensure that this function can run only once or is harmless if invoked
    ///   multiple times.
    fn init(&mut self);

    /// The translation table's base address to be used for programming the MMU.
    fn phys_base_address(&self) -> Address<Physical>;

    /// Map the given virtual memory region to the given physical memory region.
    unsafe fn map_at(
        &mut self,
        virt_region: &MemoryRegion<Virtual>,
        phys_region: &MemoryRegion<Physical>,
        attr: &AttributeFields,
    ) -> Result<(), &'static str>;
}
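For illustration, here is a toy implementation of a simplified version of this interface. It is only a sketch under loud assumptions: PageRange, ToyTranslationTable and FlatTable are hypothetical names, regions are plain page numbers instead of MemoryRegion<ATYPE>, attributes are left out, and the table is a single flat array rather than the multi-level tables of the real architectural code.

```rust
/// Hypothetical stand-in for `MemoryRegion<ATYPE>`: a run of pages.
#[derive(Clone, Copy, Debug)]
pub struct PageRange {
    pub first_page: usize,
    pub num_pages: usize,
}

/// Simplified flavor of the interface above (safe functions, no attributes).
pub trait ToyTranslationTable {
    fn init(&mut self);
    fn map_at(&mut self, virt: PageRange, phys: PageRange) -> Result<(), &'static str>;
}

/// Flat table: slot `i` holds the physical page mapped at virtual page `i`.
pub struct FlatTable<const N: usize> {
    entries: [Option<usize>; N],
    initialized: bool,
}

impl<const N: usize> FlatTable<N> {
    pub const fn new() -> Self {
        Self { entries: [None; N], initialized: false }
    }

    /// Look up the physical page for a virtual page, if it is mapped.
    pub fn translate(&self, virt_page: usize) -> Option<usize> {
        self.entries.get(virt_page).copied().flatten()
    }
}

impl<const N: usize> ToyTranslationTable for FlatTable<N> {
    fn init(&mut self) {
        // Harmless if invoked multiple times.
        self.initialized = true;
    }

    fn map_at(&mut self, virt: PageRange, phys: PageRange) -> Result<(), &'static str> {
        // This sketch reports a missing init() as an error instead of panicking.
        if !self.initialized {
            return Err("Translation tables not initialized");
        }
        if virt.num_pages != phys.num_pages {
            return Err("Tried to map memory regions with unequal sizes");
        }
        let end = virt
            .first_page
            .checked_add(virt.num_pages)
            .filter(|&end| end <= N)
            .ok_or("Virtual page is out of bounds of translation table")?;

        // Check all slots first so that a failing call leaves the table untouched.
        let slots = &mut self.entries[virt.first_page..end];
        if slots.iter().any(Option::is_some) {
            return Err("Virtual page is already mapped");
        }
        for (i, slot) in slots.iter_mut().enumerate() {
            *slot = Some(phys.first_page + i);
        }
        Ok(())
    }
}
```

The important property carried over from the real interface is that map_at() refuses to override an already valid mapping.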

In order to enable the generic kernel code to manipulate the kernel's translation tables, they must
first be made accessible. Until now, they were just a "hidden" struct in the architectural MMU
driver (src/_arch/.../memory/mmu.rs). This made sense because the MMU driver code was the only code
that needed to be concerned with the table data structure, so having it accessible locally
simplified things.

Since the tables now need to be exposed to the rest of the kernel code, it makes sense to move them
to BSP code, because ultimately it is the BSP that defines the translation tables' properties,
such as the size of the virtual address space that the tables need to cover.

They are now defined in the global instances region of src/bsp/.../memory/mmu.rs. To control

access, they are guarded by an InitStateLock.

//--------------------------------------------------------------------------------------------------
// Global instances
//--------------------------------------------------------------------------------------------------

/// The kernel translation tables.
static KERNEL_TABLES: InitStateLock<KernelTranslationTable> =
    InitStateLock::new(KernelTranslationTable::new());

The struct KernelTranslationTable is a type alias defined in the same file, which in turn gets its

definition from an associated type of type KernelVirtAddrSpace, which itself is a type alias of

memory::mmu::AddressSpace. I know this sounds horribly complicated, but in the end this is just

some layers of const generics whose implementation is scattered between generic and arch code.

This is done to (1) ensure a sane compile-time definition of the translation table struct (by doing

various bounds checks), and (2) to separate concerns between generic MMU code and specializations

that come from the architectural part.

In the end, these tables can be accessed by calling bsp::memory::mmu::kernel_translation_tables():

/// Return a reference to the kernel's translation tables.
pub fn kernel_translation_tables() -> &'static InitStateLock<KernelTranslationTable> {
    &KERNEL_TABLES
}

Finally, the generic kernel code (src/memory/mmu.rs) now provides a couple of memory mapping

functions that access and manipulate this instance. They are exported for the rest of the kernel to

use:

/// Raw mapping of a virtual to physical region in the kernel translation tables.
///
/// Prevents mapping into the MMIO range of the tables.
pub unsafe fn kernel_map_at(
    name: &'static str,
    virt_region: &MemoryRegion<Virtual>,
    phys_region: &MemoryRegion<Physical>,
    attr: &AttributeFields,
) -> Result<(), &'static str>;

/// MMIO remapping in the kernel translation tables.
///
/// Typically used by device drivers.
pub unsafe fn kernel_map_mmio(
    name: &'static str,
    mmio_descriptor: &MMIODescriptor,
) -> Result<Address<Virtual>, &'static str>;

/// Map the kernel's binary. Returns the translation table's base address.
pub unsafe fn kernel_map_binary() -> Result<Address<Physical>, &'static str>;

/// Enable the MMU and data + instruction caching.
pub unsafe fn enable_mmu_and_caching(
    phys_tables_base_addr: Address<Physical>,
) -> Result<(), MMUEnableError>;

The new APIs in action

kernel_map_binary() and enable_mmu_and_caching() are used early in kernel_init() to set up

virtual memory:

let phys_kernel_tables_base_addr = match memory::mmu::kernel_map_binary() {
    Err(string) => panic!("Error mapping kernel binary: {}", string),
    Ok(addr) => addr,
};

if let Err(e) = memory::mmu::enable_mmu_and_caching(phys_kernel_tables_base_addr) {
    panic!("Enabling MMU failed: {}", e);
}

Both functions internally use bsp and arch specific code to achieve their goals. For example,

memory::mmu::kernel_map_binary() itself wraps around a bsp function of the same name

(bsp::memory::mmu::kernel_map_binary()):

/// Map the kernel binary.
pub unsafe fn kernel_map_binary() -> Result<(), &'static str> {
    generic_mmu::kernel_map_at(
        "Kernel boot-core stack",
        // omitted for brevity.
    )?;

    generic_mmu::kernel_map_at(
        "Kernel code and RO data",
        &virt_code_region(),
        &kernel_virt_to_phys_region(virt_code_region()),
        &AttributeFields {
            mem_attributes: MemAttributes::CacheableDRAM,
            acc_perms: AccessPermissions::ReadOnly,
            execute_never: false,
        },
    )?;

    generic_mmu::kernel_map_at(
        "Kernel data and bss",
        // omitted for brevity.
    )?;

    Ok(())
}

Another user of the new APIs is the driver subsystem. As has been said in the introduction, the

goal is to remap the MMIO regions of the drivers. To achieve this in a seamless way, some changes

to the architecture of the driver subsystem were needed.

Until now, the drivers were static instances which had their MMIO addresses statically set in

the constructor. This was fine, because even if virtual memory was activated, only identity mapping was used, so the hardcoded addresses would be valid with and without the MMU being active.

With remapped MMIO addresses, this is not possible anymore, since the remapping will only happen

at runtime. Therefore, the new approach is to defer the whole instantiation of the drivers until the

remapped addresses are known. To achieve this, in src/bsp/raspberrypi/drivers.rs, the static

driver instances are now wrapped into a MaybeUninit (and are also mut now):

static mut PL011_UART: MaybeUninit<device_driver::PL011Uart> = MaybeUninit::uninit();
static mut GPIO: MaybeUninit<device_driver::GPIO> = MaybeUninit::uninit();

#[cfg(feature = "bsp_rpi3")]
static mut INTERRUPT_CONTROLLER: MaybeUninit<device_driver::InterruptController> =
    MaybeUninit::uninit();

#[cfg(feature = "bsp_rpi4")]
static mut INTERRUPT_CONTROLLER: MaybeUninit<device_driver::GICv2> = MaybeUninit::uninit();
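The deferred-instantiation pattern can be tried out in isolation. The following is a minimal sketch, assuming a hypothetical ToyUart type and hypothetical helper functions that are not part of the kernel. Unlike the BSP code, it goes through raw pointers so that no reference to the static mut is created before the value has been written.

```rust
use core::mem::MaybeUninit;
use core::ptr::{addr_of, addr_of_mut};

/// Hypothetical stand-in for a device driver that needs a runtime address.
pub struct ToyUart {
    mmio_start_addr: usize,
}

impl ToyUart {
    pub const fn new(mmio_start_addr: usize) -> Self {
        Self { mmio_start_addr }
    }

    pub fn mmio_start_addr(&self) -> usize {
        self.mmio_start_addr
    }
}

/// Reserved at compile time, but left uninitialized until the address is known.
static mut TOY_UART: MaybeUninit<ToyUart> = MaybeUninit::uninit();

/// Construct the driver in place once the remapped address is known.
///
/// # Safety
///
/// - Must be called exactly once, before any call to `toy_uart()`, and
///   without concurrent access to `TOY_UART`.
pub unsafe fn instantiate_toy_uart(virt_addr: usize) {
    // `MaybeUninit<T>` has the same layout as `T`, so we can write through a
    // raw pointer to the storage.
    unsafe {
        addr_of_mut!(TOY_UART)
            .cast::<ToyUart>()
            .write(ToyUart::new(virt_addr));
    }
}

/// Access the instantiated driver.
///
/// # Safety
///
/// - `instantiate_toy_uart()` must have completed before.
pub unsafe fn toy_uart() -> &'static ToyUart {
    unsafe { &*addr_of!(TOY_UART).cast::<ToyUart>() }
}
```

The storage for the driver is reserved at compile time, while the value itself is only constructed once the runtime address is available.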

BSPDriverManager implements the new instantiate_drivers() interface function, which will be
called early during kernel_init(), shortly after virtual memory has been activated:

unsafe fn instantiate_drivers(&self) -> Result<(), &'static str> {
    if self.init_done.load(Ordering::Relaxed) {
        return Err("Drivers already instantiated");
    }

    self.instantiate_uart()?;
    self.instantiate_gpio()?;
    self.instantiate_interrupt_controller()?;

    self.register_drivers();

    self.init_done.store(true, Ordering::Relaxed);
    Ok(())
}

As can be seen, for each driver, this BSP code calls a dedicated instantiation function. In this

tutorial text, only the UART will be discussed in detail:

unsafe fn instantiate_uart(&self) -> Result<(), &'static str> {
    let mmio_descriptor = MMIODescriptor::new(mmio::PL011_UART_START, mmio::PL011_UART_SIZE);
    let virt_addr =
        memory::mmu::kernel_map_mmio(device_driver::PL011Uart::COMPATIBLE, &mmio_descriptor)?;

    // This is safe to do, because it is only called from the init'ed instance itself.
    fn uart_post_init() {
        console::register_console(unsafe { PL011_UART.assume_init_ref() });
    }

    PL011_UART.write(device_driver::PL011Uart::new(
        virt_addr,
        exception::asynchronous::irq_map::PL011_UART,
        uart_post_init,
    ));

    Ok(())
}

A couple of things are happening here. First, an MMIODescriptor is created and then used to remap

the MMIO region using memory::mmu::kernel_map_mmio(). This function will be discussed in detail in

the next chapter. What's important for now is that it returns the new Virtual Address of the

remapped MMIO region. The constructor of the UART driver now also expects a virtual address.
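Device registers usually do not start on a page boundary, so a remap has to cover whole pages and then hand the driver an address with the right offset into the first page. The sketch below illustrates that arithmetic. It is an assumption about what such a step can look like, not the kernel's actual code: RemapPlan, plan_mmio_remap() and driver_virt_addr() are hypothetical names, and the page size is assumed to be 64 KiB.

```rust
/// Page size assumed by this sketch (64 KiB).
pub const PAGE_SIZE: usize = 64 * 1024;

/// Which physical pages to map, and where the registers sit in the first page.
#[derive(Debug, PartialEq, Eq)]
pub struct RemapPlan {
    pub phys_start_page_addr: usize,
    pub num_pages: usize,
    pub offset_into_start_page: usize,
}

/// Turn an MMIO start address and size into a page-aligned mapping plan.
pub fn plan_mmio_remap(mmio_start: usize, mmio_size: usize) -> Result<RemapPlan, &'static str> {
    if mmio_size == 0 {
        return Err("MMIO region must not be empty");
    }
    let end_exclusive = mmio_start
        .checked_add(mmio_size)
        .ok_or("MMIO region overflows the address space")?;

    // Align the start down and the end up to page boundaries.
    let start_page = mmio_start & !(PAGE_SIZE - 1);
    let end_page = end_exclusive
        .checked_add(PAGE_SIZE - 1)
        .ok_or("MMIO region overflows the address space")?
        & !(PAGE_SIZE - 1);

    Ok(RemapPlan {
        phys_start_page_addr: start_page,
        num_pages: (end_page - start_page) / PAGE_SIZE,
        offset_into_start_page: mmio_start - start_page,
    })
}

/// The virtual address a driver would use, given where the pages were mapped.
pub fn driver_virt_addr(virt_start_page_addr: usize, plan: &RemapPlan) -> usize {
    virt_start_page_addr + plan.offset_into_start_page
}
```

For example, a device at the (assumed) physical address 0x3F20_1000 whose page gets mapped at virtual address 0xF_0000 would be accessed by its driver at 0xF_1000.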

Next, a new instance of the PL011Uart driver is created, and written into the PL011_UART global

variable (remember, it is defined as MaybeUninit<device_driver::PL011Uart> = MaybeUninit::uninit()). Meaning, after this line of code, PL011_UART is properly initialized.

Another new feature is the function uart_post_init(), which is supplied to the UART constructor.

This is a callback that will be called by the UART driver when it concludes its init() function

(remember that the driver's init functions are called from kernel_init()). This callback, in turn,

registers the PL011_UART as the new console of the kernel.

A look into src/console.rs should make clear what is happening. The classic console::console()

now dynamically points to whoever registered itself using the console::register_console()

function, instead of using a hardcoded static reference (from the BSP). This has been introduced

to accommodate the run-time instantiation of the device drivers (the same feature has been

implemented for the IRQManager as well). Until console::register_console() has been called for

the first time, an instance of the newly introduced NullConsole is used as the default.

NullConsole implements all the console traits, but does nothing. It discards outputs, and returns
dummy input. For example, should one of the printing macros be called before the UART driver has
been instantiated and registered, the kernel does not need to crash just because the driver has not
been brought up yet. Instead, it can simply discard the output. With this new scheme of things, it is
possible to safely switch global references like the UART or the IRQ Manager at runtime.
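The registration scheme can be sketched in a few lines of hosted Rust. Note the assumptions: this version uses std::sync::RwLock instead of the kernel's own lock types, the Console trait is reduced to a single hypothetical write_str() method, and CountingConsole is a made-up stand-in for the UART driver.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::RwLock;

/// Heavily reduced console interface (hypothetical, for this sketch only).
pub trait Console: Sync {
    /// Output a string and return the number of bytes actually written.
    fn write_str(&self, s: &str) -> usize;
}

/// Default console: accepts everything, outputs nothing.
pub struct NullConsole;

impl Console for NullConsole {
    fn write_str(&self, _s: &str) -> usize {
        0
    }
}

static NULL_CONSOLE: NullConsole = NullConsole;

/// Whoever registered last; starts out pointing at the null console.
static CUR_CONSOLE: RwLock<&'static dyn Console> = RwLock::new(&NULL_CONSOLE);

/// Register a new console.
pub fn register_console(new_console: &'static dyn Console) {
    *CUR_CONSOLE.write().unwrap() = new_console;
}

/// Return a reference to the currently registered console.
pub fn console() -> &'static dyn Console {
    *CUR_CONSOLE.read().unwrap()
}

/// Stand-in for a real UART driver: it only counts the bytes it is given.
pub struct CountingConsole {
    bytes: AtomicUsize,
}

impl CountingConsole {
    pub const fn new() -> Self {
        Self { bytes: AtomicUsize::new(0) }
    }

    pub fn bytes_written(&self) -> usize {
        self.bytes.load(Ordering::Relaxed)
    }
}

impl Console for CountingConsole {
    fn write_str(&self, s: &str) -> usize {
        self.bytes.fetch_add(s.len(), Ordering::Relaxed);
        s.len()
    }
}

pub static TOY_UART: CountingConsole = CountingConsole::new();
```

Calling console().write_str() before register_console(&TOY_UART) silently drops the text; afterwards, the same call site reaches the registered driver.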

All the post-driver-init work has been moved to callbacks so that a driver is fully enabled as soon
as it has concluded its init() function. Previously, the post-init code was only called after all
the drivers had been init'ed. In our example, printing through the UART is now available before
the interrupt controller driver runs its init function.
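The effect on ordering can be shown with a small hosted sketch. Everything here is hypothetical (ToyDriver, the EVENTS log, and both callbacks), and a std::sync::Mutex stands in for the kernel's own synchronization; the point is only that each callback runs right at the end of its own driver's init().

```rust
use std::sync::Mutex;

/// Chronological log of what happened during bring-up.
static EVENTS: Mutex<Vec<&'static str>> = Mutex::new(Vec::new());

/// Hypothetical driver that carries its own post-init callback.
pub struct ToyDriver {
    init_event: &'static str,
    post_init_callback: fn(),
}

impl ToyDriver {
    pub const fn new(init_event: &'static str, post_init_callback: fn()) -> Self {
        Self { init_event, post_init_callback }
    }

    /// Bring up the (imaginary) hardware, then run the callback right away.
    pub fn init(&self) -> Result<(), &'static str> {
        EVENTS.lock().unwrap().push(self.init_event);
        (self.post_init_callback)();
        Ok(())
    }
}

fn uart_post_init() {
    EVENTS.lock().unwrap().push("console registered");
}

fn irq_post_init() {
    EVENTS.lock().unwrap().push("IRQ manager registered");
}

/// Init both drivers in order and return the resulting event log.
pub fn bring_up() -> Result<Vec<&'static str>, &'static str> {
    EVENTS.lock().unwrap().clear();

    let drivers = [
        ToyDriver::new("UART init()", uart_post_init),
        ToyDriver::new("interrupt controller init()", irq_post_init),
    ];
    for driver in &drivers {
        driver.init()?;
    }

    let events = EVENTS.lock().unwrap().clone();
    Ok(events)
}
```

In the returned log, "console registered" appears before "interrupt controller init()", which mirrors the behavior described above.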

MMIO Virtual Address Allocation

Getting back to the remapping part, let's peek inside memory::mmu::kernel_map_mmio(). We can see

that a virtual address region is obtained from an allocator before remapping:

pub unsafe fn kernel_map_mmio(
    name: &'static str,
    mmio_descriptor: &MMIODescriptor,
) -> Result<Address<Virtual>, &'static str> {
    // omitted

    let virt_region =
        page_alloc::kernel_mmio_va_allocator().lock(|allocator| allocator.alloc(num_pages))?;

    kernel_map_at_unchecked(
        name,
        &virt_region,
        &phys_region,
        &AttributeFields {
            mem_attributes: MemAttributes::Device,
            acc_perms: AccessPermissions::ReadWrite,
            execute_never: true,
        },
    )?;

    // omitted
}

This allocator is defined and implemented in the added file src/memory/mmu/page_alloc.rs. Like
other parts of the mapping code, its implementation makes use of the newly introduced
PageAddress<ATYPE> and MemoryRegion<ATYPE> types (in src/memory/mmu/types.rs), but apart from
that it is rather straightforward. Therefore, it won't be covered in detail here.
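To give a rough idea of how little is needed, here is a minimal sketch of such an allocator. It is not the code from page_alloc.rs: VirtRegion is a hypothetical stand-in for MemoryRegion<Virtual>, the page size is assumed to be 64 KiB, and pages are simply handed out from the front of the pool without any way of freeing them.

```rust
/// Page size assumed by this sketch (64 KiB).
pub const PAGE_SIZE: usize = 64 * 1024;

/// Hypothetical stand-in for `MemoryRegion<Virtual>`.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct VirtRegion {
    pub start: usize,
    pub end_exclusive: usize,
}

/// Hands out pages from the front of a pool; nothing is ever freed.
pub struct PageAllocator {
    pool: Option<VirtRegion>,
}

impl PageAllocator {
    /// Create an instance without a pool.
    pub const fn new() -> Self {
        Self { pool: None }
    }

    /// Provide the pool of virtual addresses to allocate from.
    pub fn initialize(&mut self, pool: VirtRegion) {
        self.pool = Some(pool);
    }

    /// Allocate a number of consecutive pages.
    pub fn alloc(&mut self, num_pages: usize) -> Result<VirtRegion, &'static str> {
        let pool = self.pool.as_mut().ok_or("Allocator not initialized")?;

        if num_pages == 0 {
            return Err("Cannot allocate zero pages");
        }
        let size = num_pages
            .checked_mul(PAGE_SIZE)
            .ok_or("Allocation size overflows")?;
        if size > pool.end_exclusive - pool.start {
            return Err("Not enough free pages");
        }

        // Take the pages from the front and shrink the pool accordingly.
        let allocation = VirtRegion {
            start: pool.start,
            end_exclusive: pool.start + size,
        };
        pool.start = allocation.end_exclusive;

        Ok(allocation)
    }
}
```

Two consecutive single-page allocations from a pool starting at 0xF_0000 yield 0xF_0000 and 0x10_0000.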

The more interesting question is: How does the allocator get to learn which VAs it can use?

This is happening in the following function, which gets called as part of

memory::mmu::post_enable_init(), which in turn gets called in kernel_init() after the MMU has

been turned on.

/// Query the BSP for the reserved virtual addresses for MMIO remapping and initialize the kernel's
/// MMIO VA allocator with it.
fn kernel_init_mmio_va_allocator() {
    let region = bsp::memory::mmu::virt_mmio_remap_region();

    page_alloc::kernel_mmio_va_allocator().lock(|allocator| allocator.initialize(region));
}

Again, it is the BSP that provides the information. The BSP itself indirectly gets it from the

linker script. In it, we have defined an 8 MiB region right after the .data segment:

__data_end_exclusive = .;

/***********************************************************************************************
* MMIO Remap Reserved
***********************************************************************************************/
__mmio_remap_start = .;
. += 8 * 1024 * 1024;
__mmio_remap_end_exclusive = .;

ASSERT((. & PAGE_MASK) == 0, "MMIO remap reservation is not page aligned")

The two symbols __mmio_remap_start and __mmio_remap_end_exclusive are used by the BSP to learn

the VA range.
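As a quick sanity check of the numbers, the following sketch mirrors the linker script's alignment assertion and computes how many pages the reservation provides. The function name is hypothetical, the two addresses are passed in as plain integers instead of being read from linker symbols, and the sketch assumes a 64 KiB page size with PAGE_MASK being PAGE_SIZE - 1.

```rust
/// Page size assumed by this sketch (64 KiB).
pub const PAGE_SIZE: usize = 64 * 1024;

/// Assumed to match the linker script's mask for the bits below a page boundary.
pub const PAGE_MASK: usize = PAGE_SIZE - 1;

/// Number of pages between the two (page-aligned) boundary addresses.
pub fn mmio_remap_num_pages(start: usize, end_exclusive: usize) -> Result<usize, &'static str> {
    // Same condition as the ASSERT in the linker script, applied to both ends.
    if (start & PAGE_MASK) != 0 || (end_exclusive & PAGE_MASK) != 0 {
        return Err("MMIO remap reservation is not page aligned");
    }
    if end_exclusive < start {
        return Err("MMIO remap reservation ends before it starts");
    }

    Ok((end_exclusive - start) / PAGE_SIZE)
}
```

Under these assumptions, the 8 MiB reservation corresponds to 128 pages of 64 KiB each.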

Supporting Changes

There are a couple more changes not covered in this tutorial text, but the reader should ideally skim through them:

- src/memory.rs and src/memory/mmu/types.rs introduce supporting types, like Address<ATYPE>, PageAddress<ATYPE> and MemoryRegion<ATYPE>. It is worth reading their implementations.
- src/memory/mmu/mapping_record.rs provides the generic kernel code's way of tracking previous memory mappings for use cases such as reusing existing mappings (in case of drivers that have their MMIO ranges in the same 64 KiB page) or printing mappings statistics.
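The reuse case can be illustrated with a small sketch of a mapping record. This is not the implementation from mapping_record.rs: the type and method names are assumptions, addresses are plain integers, and a Vec is used for brevity even though it would not be available in the kernel without an allocator.

```rust
/// One tracked mapping and everyone who uses it.
#[derive(Debug, Clone)]
pub struct MappingRecordEntry {
    pub users: Vec<&'static str>,
    pub phys_start_page_addr: usize,
    pub virt_start_page_addr: usize,
    pub num_pages: usize,
}

/// Keeps track of all mappings done so far.
#[derive(Default)]
pub struct MappingRecord {
    entries: Vec<MappingRecordEntry>,
}

impl MappingRecord {
    /// Track a freshly created mapping.
    pub fn add(&mut self, name: &'static str, phys: usize, virt: usize, num_pages: usize) {
        self.entries.push(MappingRecordEntry {
            users: vec![name],
            phys_start_page_addr: phys,
            virt_start_page_addr: virt,
            num_pages,
        });
    }

    /// If the same physical pages are already mapped, register `name` as an
    /// additional user and return the existing virtual start address.
    pub fn try_reuse(&mut self, name: &'static str, phys: usize, num_pages: usize) -> Option<usize> {
        let entry = self
            .entries
            .iter_mut()
            .find(|e| e.phys_start_page_addr == phys && e.num_pages == num_pages)?;

        entry.users.push(name);
        Some(entry.virt_start_page_addr)
    }

    /// All users of the mapping that starts at the given virtual address.
    pub fn users_of(&self, virt: usize) -> Option<&[&'static str]> {
        self.entries
            .iter()
            .find(|e| e.virt_start_page_addr == virt)
            .map(|e| e.users.as_slice())
    }
}
```

With such a record, a second driver whose registers live in an already mapped page gets the existing virtual address back instead of a new mapping.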

Test it

When you load the kernel, you can now see that the drivers' MMIO virtual addresses start right after
the .data section:

Raspberry Pi 3:

$ make chainboot

[...]

Minipush 1.0

[MP] ⏳ Waiting for /dev/ttyUSB0

[MP] ✅ Serial connected

[MP] 🔌 Please power the target now

__ __ _ _ _ _

| \/ (_)_ _ (_) | ___ __ _ __| |

| |\/| | | ' \| | |__/ _ \/ _` / _` |

|_| |_|_|_||_|_|____\___/\__,_\__,_|

Raspberry Pi 3

[ML] Requesting binary

[MP] ⏩ Pushing 65 KiB =========================================🦀 100% 0 KiB/s Time: 00:00:00

[ML] Loaded! Executing the payload now

[ 0.740694] mingo version 0.14.0

[ 0.740902] Booting on: Raspberry Pi 3

[ 0.741357] MMU online:

[ 0.741649] -------------------------------------------------------------------------------------------------------------------------------------------
[ 0.743393] Virtual                                          Physical                         Size      Attr        Entity
[ 0.745138] -------------------------------------------------------------------------------------------------------------------------------------------
[ 0.746883] 0x0000_0000_0000_0000..0x0000_0000_0007_ffff --> 0x00_0000_0000..0x00_0007_ffff | 512 KiB | C   RW XN | Kernel boot-core stack
[ 0.748486] 0x0000_0000_0008_0000..0x0000_0000_0008_ffff --> 0x00_0008_0000..0x00_0008_ffff |  64 KiB | C   RO X  | Kernel code and RO data
[ 0.750099] 0x0000_0000_0009_0000..0x0000_0000_000e_ffff --> 0x00_0009_0000..0x00_000e_ffff | 384 KiB | C   RW XN | Kernel data and bss
[ 0.751670] 0x0000_0000_000f_0000..0x0000_0000_000f_ffff --> 0x00_3f20_0000..0x00_3f20_ffff |  64 KiB | Dev RW XN | BCM PL011 UART
[ 0.753187]                                                                                                       | BCM GPIO
[ 0.754638] 0x0000_0000_0010_0000..0x0000_0000_0010_ffff --> 0x00_3f00_0000..0x00_3f00_ffff |  64 KiB | Dev RW XN | BCM Interrupt Controller
[ 0.756264] -------------------------------------------------------------------------------------------------------------------------------------------

Raspberry Pi 4:

$ BSP=rpi4 make chainboot

[...]

Minipush 1.0

[MP] ⏳ Waiting for /dev/ttyUSB0

[MP] ✅ Serial connected

[MP] 🔌 Please power the target now

__ __ _ _ _ _

| \/ (_)_ _ (_) | ___ __ _ __| |

| |\/| | | ' \| | |__/ _ \/ _` / _` |

|_| |_|_|_||_|_|____\___/\__,_\__,_|

Raspberry Pi 4

[ML] Requesting binary

[MP] ⏩ Pushing 65 KiB =========================================🦀 100% 0 KiB/s Time: 00:00:00

[ML] Loaded! Executing the payload now

[ 0.736136] mingo version 0.14.0

[ 0.736170] Booting on: Raspberry Pi 4

[ 0.736625] MMU online:

[ 0.736918] -------------------------------------------------------------------------------------------------------------------------------------------
[ 0.738662] Virtual                                          Physical                         Size      Attr        Entity
[ 0.740406] -------------------------------------------------------------------------------------------------------------------------------------------
[ 0.742151] 0x0000_0000_0000_0000..0x0000_0000_0007_ffff --> 0x00_0000_0000..0x00_0007_ffff | 512 KiB | C   RW XN | Kernel boot-core stack
[ 0.743754] 0x0000_0000_0008_0000..0x0000_0000_0008_ffff --> 0x00_0008_0000..0x00_0008_ffff |  64 KiB | C   RO X  | Kernel code and RO data
[ 0.745368] 0x0000_0000_0009_0000..0x0000_0000_000d_ffff --> 0x00_0009_0000..0x00_000d_ffff | 320 KiB | C   RW XN | Kernel data and bss
[ 0.746938] 0x0000_0000_000e_0000..0x0000_0000_000e_ffff --> 0x00_fe20_0000..0x00_fe20_ffff |  64 KiB | Dev RW XN | BCM PL011 UART
[ 0.748455]                                                                                                       | BCM GPIO
[ 0.749907] 0x0000_0000_000f_0000..0x0000_0000_000f_ffff --> 0x00_ff84_0000..0x00_ff84_ffff |  64 KiB | Dev RW XN | GICv2 GICD
[ 0.751380]                                                                                                       | GICV2 GICC
[ 0.752853] -------------------------------------------------------------------------------------------------------------------------------------------

Diff to previous

diff -uNr 13_exceptions_part2_peripheral_IRQs/kernel/Cargo.toml 14_virtual_mem_part2_mmio_remap/kernel/Cargo.toml

--- 13_exceptions_part2_peripheral_IRQs/kernel/Cargo.toml

+++ 14_virtual_mem_part2_mmio_remap/kernel/Cargo.toml

@@ -1,6 +1,6 @@

[package]

name = "mingo"

-version = "0.13.0"

+version = "0.14.0"

authors = ["Andre Richter <andre.o.richter@gmail.com>"]

edition = "2021"

diff -uNr 13_exceptions_part2_peripheral_IRQs/kernel/src/_arch/aarch64/memory/mmu/translation_table.rs 14_virtual_mem_part2_mmio_remap/kernel/src/_arch/aarch64/memory/mmu/translation_table.rs

--- 13_exceptions_part2_peripheral_IRQs/kernel/src/_arch/aarch64/memory/mmu/translation_table.rs

+++ 14_virtual_mem_part2_mmio_remap/kernel/src/_arch/aarch64/memory/mmu/translation_table.rs

@@ -14,10 +14,14 @@

//! crate::memory::mmu::translation_table::arch_translation_table

use crate::{

- bsp, memory,

- memory::mmu::{

- arch_mmu::{Granule512MiB, Granule64KiB},

- AccessPermissions, AttributeFields, MemAttributes,

+ bsp,

+ memory::{

+ self,

+ mmu::{

+ arch_mmu::{Granule512MiB, Granule64KiB},

+ AccessPermissions, AttributeFields, MemAttributes, MemoryRegion, PageAddress,

+ },

+ Address, Physical, Virtual,

},

};

use core::convert;

@@ -121,12 +125,9 @@

}

trait StartAddr {

- fn phys_start_addr_u64(&self) -> u64;

- fn phys_start_addr_usize(&self) -> usize;

+ fn phys_start_addr(&self) -> Address<Physical>;

}

-const NUM_LVL2_TABLES: usize = bsp::memory::mmu::KernelAddrSpace::SIZE >> Granule512MiB::SHIFT;

-

//--------------------------------------------------------------------------------------------------

// Public Definitions

//--------------------------------------------------------------------------------------------------

@@ -141,10 +142,10 @@

/// Table descriptors, covering 512 MiB windows.

lvl2: [TableDescriptor; NUM_TABLES],

-}

-/// A translation table type for the kernel space.

-pub type KernelTranslationTable = FixedSizeTranslationTable<NUM_LVL2_TABLES>;

+ /// Have the tables been initialized?

+ initialized: bool,

+}

//--------------------------------------------------------------------------------------------------

// Private Code

@@ -152,12 +153,8 @@

// The binary is still identity mapped, so we don't need to convert here.

impl<T, const N: usize> StartAddr for [T; N] {

- fn phys_start_addr_u64(&self) -> u64 {

- self as *const T as u64

- }

-

- fn phys_start_addr_usize(&self) -> usize {

- self as *const _ as usize

+ fn phys_start_addr(&self) -> Address<Physical> {

+ Address::new(self as *const _ as usize)

}

}

@@ -170,10 +167,10 @@

}

/// Create an instance pointing to the supplied address.

- pub fn from_next_lvl_table_addr(phys_next_lvl_table_addr: usize) -> Self {

+ pub fn from_next_lvl_table_addr(phys_next_lvl_table_addr: Address<Physical>) -> Self {

let val = InMemoryRegister::<u64, STAGE1_TABLE_DESCRIPTOR::Register>::new(0);

- let shifted = phys_next_lvl_table_addr >> Granule64KiB::SHIFT;

+ let shifted = phys_next_lvl_table_addr.as_usize() >> Granule64KiB::SHIFT;

val.write(

STAGE1_TABLE_DESCRIPTOR::NEXT_LEVEL_TABLE_ADDR_64KiB.val(shifted as u64)

+ STAGE1_TABLE_DESCRIPTOR::TYPE::Table

@@ -230,12 +227,15 @@

}

/// Create an instance.

- pub fn from_output_addr(phys_output_addr: usize, attribute_fields: &AttributeFields) -> Self {

+ pub fn from_output_page_addr(

+ phys_output_page_addr: PageAddress<Physical>,

+ attribute_fields: &AttributeFields,

+ ) -> Self {

let val = InMemoryRegister::<u64, STAGE1_PAGE_DESCRIPTOR::Register>::new(0);

- let shifted = phys_output_addr as u64 >> Granule64KiB::SHIFT;

+ let shifted = phys_output_page_addr.into_inner().as_usize() >> Granule64KiB::SHIFT;

val.write(

- STAGE1_PAGE_DESCRIPTOR::OUTPUT_ADDR_64KiB.val(shifted)

+ STAGE1_PAGE_DESCRIPTOR::OUTPUT_ADDR_64KiB.val(shifted as u64)

+ STAGE1_PAGE_DESCRIPTOR::AF::True

+ STAGE1_PAGE_DESCRIPTOR::TYPE::Page

+ STAGE1_PAGE_DESCRIPTOR::VALID::True

@@ -244,50 +244,133 @@

Self { value: val.get() }

}

+

+ /// Returns the valid bit.

+ fn is_valid(&self) -> bool {

+ InMemoryRegister::<u64, STAGE1_PAGE_DESCRIPTOR::Register>::new(self.value)

+ .is_set(STAGE1_PAGE_DESCRIPTOR::VALID)

+ }

}

//--------------------------------------------------------------------------------------------------

// Public Code

//--------------------------------------------------------------------------------------------------

+impl<const AS_SIZE: usize> memory::mmu::AssociatedTranslationTable

+ for memory::mmu::AddressSpace<AS_SIZE>

+where

+ [u8; Self::SIZE >> Granule512MiB::SHIFT]: Sized,

+{

+ type TableStartFromBottom = FixedSizeTranslationTable<{ Self::SIZE >> Granule512MiB::SHIFT }>;

+}

+

impl<const NUM_TABLES: usize> FixedSizeTranslationTable<NUM_TABLES> {

/// Create an instance.

+ #[allow(clippy::assertions_on_constants)]

pub const fn new() -> Self {

+ assert!(bsp::memory::mmu::KernelGranule::SIZE == Granule64KiB::SIZE);

+

// Can't have a zero-sized address space.

assert!(NUM_TABLES > 0);

Self {

lvl3: [[PageDescriptor::new_zeroed(); 8192]; NUM_TABLES],

lvl2: [TableDescriptor::new_zeroed(); NUM_TABLES],

+ initialized: false,

}

}

- /// Iterates over all static translation table entries and fills them at once.

- ///

- /// # Safety

- ///

- /// - Modifies a `static mut`. Ensure it only happens from here.

- pub unsafe fn populate_tt_entries(&mut self) -> Result<(), &'static str> {

- for (l2_nr, l2_entry) in self.lvl2.iter_mut().enumerate() {

- *l2_entry =

- TableDescriptor::from_next_lvl_table_addr(self.lvl3[l2_nr].phys_start_addr_usize());

+ /// Helper to calculate the lvl2 and lvl3 indices from an address.

+ #[inline(always)]

+ fn lvl2_lvl3_index_from_page_addr(

+ &self,

+ virt_page_addr: PageAddress<Virtual>,

+ ) -> Result<(usize, usize), &'static str> {

+ let addr = virt_page_addr.into_inner().as_usize();

+ let lvl2_index = addr >> Granule512MiB::SHIFT;

+ let lvl3_index = (addr & Granule512MiB::MASK) >> Granule64KiB::SHIFT;

- for (l3_nr, l3_entry) in self.lvl3[l2_nr].iter_mut().enumerate() {

- let virt_addr = (l2_nr << Granule512MiB::SHIFT) + (l3_nr << Granule64KiB::SHIFT);

+ if lvl2_index > (NUM_TABLES - 1) {

+ return Err("Virtual page is out of bounds of translation table");

+ }

- let (phys_output_addr, attribute_fields) =

- bsp::memory::mmu::virt_mem_layout().virt_addr_properties(virt_addr)?;

+ Ok((lvl2_index, lvl3_index))

+ }

- *l3_entry = PageDescriptor::from_output_addr(phys_output_addr, &attribute_fields);

- }

+ /// Sets the PageDescriptor corresponding to the supplied page address.

+ ///

+ /// Doesn't allow overriding an already valid page.

+ #[inline(always)]

+ fn set_page_descriptor_from_page_addr(

+ &mut self,

+ virt_page_addr: PageAddress<Virtual>,

+ new_desc: &PageDescriptor,

+ ) -> Result<(), &'static str> {

+ let (lvl2_index, lvl3_index) = self.lvl2_lvl3_index_from_page_addr(virt_page_addr)?;

+ let desc = &mut self.lvl3[lvl2_index][lvl3_index];

+

+ if desc.is_valid() {

+ return Err("Virtual page is already mapped");

}

+ *desc = *new_desc;

Ok(())

}

+}

- /// The translation table's base address to be used for programming the MMU.

- pub fn phys_base_address(&self) -> u64 {

- self.lvl2.phys_start_addr_u64()

+//------------------------------------------------------------------------------

+// OS Interface Code

+//------------------------------------------------------------------------------

+

+impl<const NUM_TABLES: usize> memory::mmu::translation_table::interface::TranslationTable

+ for FixedSizeTranslationTable<NUM_TABLES>

+{

+ fn init(&mut self) {

+ if self.initialized {

+ return;

+ }

+

+ // Populate the l2 entries.

+ for (lvl2_nr, lvl2_entry) in self.lvl2.iter_mut().enumerate() {

+ let phys_table_addr = self.lvl3[lvl2_nr].phys_start_addr();

+

+ let new_desc = TableDescriptor::from_next_lvl_table_addr(phys_table_addr);

+ *lvl2_entry = new_desc;

+ }

+

+ self.initialized = true;

+ }

+

+ fn phys_base_address(&self) -> Address<Physical> {

+ self.lvl2.phys_start_addr()

+ }

+

+ unsafe fn map_at(

+ &mut self,

+ virt_region: &MemoryRegion<Virtual>,

+ phys_region: &MemoryRegion<Physical>,

+ attr: &AttributeFields,

+ ) -> Result<(), &'static str> {

+ assert!(self.initialized, "Translation tables not initialized");

+

+ if virt_region.size() != phys_region.size() {

+ return Err("Tried to map memory regions with unequal sizes");

+ }

+

+ if phys_region.end_exclusive_page_addr() > bsp::memory::phys_addr_space_end_exclusive_addr()

+ {

+ return Err("Tried to map outside of physical address space");

+ }

+

+ let iter = phys_region.into_iter().zip(virt_region.into_iter());

+ for (phys_page_addr, virt_page_addr) in iter {

+ let new_desc = PageDescriptor::from_output_page_addr(phys_page_addr, attr);

+ let virt_page = virt_page_addr;

+

+ self.set_page_descriptor_from_page_addr(virt_page, &new_desc)?;

+ }

+

+ Ok(())

}

}

@@ -296,6 +379,9 @@

//--------------------------------------------------------------------------------------------------

#[cfg(test)]

+pub type MinSizeTranslationTable = FixedSizeTranslationTable<1>;

+

+#[cfg(test)]

mod tests {

use super::*;

use test_macros::kernel_test;

diff -uNr 13_exceptions_part2_peripheral_IRQs/kernel/src/_arch/aarch64/memory/mmu.rs 14_virtual_mem_part2_mmio_remap/kernel/src/_arch/aarch64/memory/mmu.rs

--- 13_exceptions_part2_peripheral_IRQs/kernel/src/_arch/aarch64/memory/mmu.rs

+++ 14_virtual_mem_part2_mmio_remap/kernel/src/_arch/aarch64/memory/mmu.rs

@@ -15,7 +15,7 @@

use crate::{

bsp, memory,

- memory::mmu::{translation_table::KernelTranslationTable, TranslationGranule},

+ memory::{mmu::TranslationGranule, Address, Physical},

};

use core::intrinsics::unlikely;

use cortex_a::{asm::barrier, registers::*};

@@ -46,13 +46,6 @@

// Global instances

//--------------------------------------------------------------------------------------------------

-/// The kernel translation tables.

-///

-/// # Safety

-///

-/// - Supposed to land in `.bss`. Therefore, ensure that all initial member values boil down to "0".

-static mut KERNEL_TABLES: KernelTranslationTable = KernelTranslationTable::new();

-

static MMU: MemoryManagementUnit = MemoryManagementUnit;

//--------------------------------------------------------------------------------------------------

@@ -87,7 +80,7 @@

/// Configure various settings of stage 1 of the EL1 translation regime.

fn configure_translation_control(&self) {

- let t0sz = (64 - bsp::memory::mmu::KernelAddrSpace::SIZE_SHIFT) as u64;

+ let t0sz = (64 - bsp::memory::mmu::KernelVirtAddrSpace::SIZE_SHIFT) as u64;

TCR_EL1.write(

TCR_EL1::TBI0::Used

@@ -119,7 +112,10 @@

use memory::mmu::MMUEnableError;

impl memory::mmu::interface::MMU for MemoryManagementUnit {

- unsafe fn enable_mmu_and_caching(&self) -> Result<(), MMUEnableError> {

+ unsafe fn enable_mmu_and_caching(

+ &self,

+ phys_tables_base_addr: Address<Physical>,

+ ) -> Result<(), MMUEnableError> {

if unlikely(self.is_enabled()) {

return Err(MMUEnableError::AlreadyEnabled);

}

@@ -134,13 +130,8 @@

// Prepare the memory attribute indirection register.

self.set_up_mair();

- // Populate translation tables.

- KERNEL_TABLES

- .populate_tt_entries()

- .map_err(MMUEnableError::Other)?;

-

// Set the "Translation Table Base Register".

- TTBR0_EL1.set_baddr(KERNEL_TABLES.phys_base_address());

+ TTBR0_EL1.set_baddr(phys_tables_base_addr.as_usize() as u64);

self.configure_translation_control();

@@ -163,33 +154,3 @@

SCTLR_EL1.matches_all(SCTLR_EL1::M::Enable)

}

}

-

-//--------------------------------------------------------------------------------------------------

-// Testing

-//--------------------------------------------------------------------------------------------------

-

-#[cfg(test)]

-mod tests {

- use super::*;

- use core::{cell::UnsafeCell, ops::Range};

- use test_macros::kernel_test;

-

- /// Check if KERNEL_TABLES is in .bss.

- #[kernel_test]

- fn kernel_tables_in_bss() {

- extern "Rust" {

- static __bss_start: UnsafeCell<u64>;

- static __bss_end_exclusive: UnsafeCell<u64>;

- }

-

- let bss_range = unsafe {

- Range {

- start: __bss_start.get(),

- end: __bss_end_exclusive.get(),

- }

- };

- let kernel_tables_addr = unsafe { &KERNEL_TABLES as *const _ as usize as *mut u64 };

-

- assert!(bss_range.contains(&kernel_tables_addr));

- }

-}

diff -uNr 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/device_driver/arm/gicv2/gicc.rs 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/device_driver/arm/gicv2/gicc.rs

--- 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/device_driver/arm/gicv2/gicc.rs

+++ 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/device_driver/arm/gicv2/gicc.rs

@@ -4,7 +4,11 @@

//! GICC Driver - GIC CPU interface.

-use crate::{bsp::device_driver::common::MMIODerefWrapper, exception};

+use crate::{

+ bsp::device_driver::common::MMIODerefWrapper,

+ exception,

+ memory::{Address, Virtual},

+};

use tock_registers::{

interfaces::{Readable, Writeable},

register_bitfields, register_structs,

@@ -73,7 +77,7 @@

/// # Safety

///

/// - The user must ensure to provide a correct MMIO start address.

- pub const unsafe fn new(mmio_start_addr: usize) -> Self {

+ pub const unsafe fn new(mmio_start_addr: Address<Virtual>) -> Self {

Self {

registers: Registers::new(mmio_start_addr),

}

diff -uNr 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/device_driver/arm/gicv2/gicd.rs 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/device_driver/arm/gicv2/gicd.rs

--- 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/device_driver/arm/gicv2/gicd.rs

+++ 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/device_driver/arm/gicv2/gicd.rs

@@ -8,7 +8,9 @@

//! - SPI - Shared Peripheral Interrupt.

use crate::{

- bsp::device_driver::common::MMIODerefWrapper, state, synchronization,

+ bsp::device_driver::common::MMIODerefWrapper,

+ memory::{Address, Virtual},

+ state, synchronization,

synchronization::IRQSafeNullLock,

};

use tock_registers::{

@@ -128,7 +130,7 @@

/// # Safety

///

/// - The user must ensure to provide a correct MMIO start address.

- pub const unsafe fn new(mmio_start_addr: usize) -> Self {

+ pub const unsafe fn new(mmio_start_addr: Address<Virtual>) -> Self {

Self {

shared_registers: IRQSafeNullLock::new(SharedRegisters::new(mmio_start_addr)),

banked_registers: BankedRegisters::new(mmio_start_addr),

diff -uNr 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/device_driver/arm/gicv2.rs 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/device_driver/arm/gicv2.rs

--- 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/device_driver/arm/gicv2.rs

+++ 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/device_driver/arm/gicv2.rs

@@ -79,7 +79,12 @@

mod gicc;

mod gicd;

-use crate::{bsp, cpu, driver, exception, synchronization, synchronization::InitStateLock};

+use crate::{

+ bsp, cpu, driver, exception,

+ memory::{Address, Virtual},

+ synchronization,

+ synchronization::InitStateLock,

+};

//--------------------------------------------------------------------------------------------------

// Private Definitions

@@ -104,6 +109,9 @@

/// Stores registered IRQ handlers. Writable only during kernel init. RO afterwards.

handler_table: InitStateLock<HandlerTable>,

+

+ /// Callback to be invoked after successful init.

+ post_init_callback: fn(),

}

//--------------------------------------------------------------------------------------------------

@@ -121,11 +129,16 @@

/// # Safety

///

/// - The user must ensure to provide a correct MMIO start address.

- pub const unsafe fn new(gicd_mmio_start_addr: usize, gicc_mmio_start_addr: usize) -> Self {

+ pub const unsafe fn new(

+ gicd_mmio_start_addr: Address<Virtual>,

+ gicc_mmio_start_addr: Address<Virtual>,

+ post_init_callback: fn(),

+ ) -> Self {

Self {

gicd: gicd::GICD::new(gicd_mmio_start_addr),

gicc: gicc::GICC::new(gicc_mmio_start_addr),

handler_table: InitStateLock::new([None; Self::NUM_IRQS]),

+ post_init_callback,

}

}

}

@@ -148,6 +161,8 @@

self.gicc.priority_accept_all();

self.gicc.enable();

+ (self.post_init_callback)();

+

Ok(())

}

}

diff -uNr 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/device_driver/bcm/bcm2xxx_gpio.rs 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/device_driver/bcm/bcm2xxx_gpio.rs

--- 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/device_driver/bcm/bcm2xxx_gpio.rs

+++ 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/device_driver/bcm/bcm2xxx_gpio.rs

@@ -5,7 +5,10 @@

//! GPIO Driver.

use crate::{

- bsp::device_driver::common::MMIODerefWrapper, driver, synchronization,

+ bsp::device_driver::common::MMIODerefWrapper,

+ driver,

+ memory::{Address, Virtual},

+ synchronization,

synchronization::IRQSafeNullLock,

};

use tock_registers::{

@@ -119,6 +122,7 @@

/// Representation of the GPIO HW.

pub struct GPIO {

inner: IRQSafeNullLock<GPIOInner>,

+ post_init_callback: fn(),

}

//--------------------------------------------------------------------------------------------------

@@ -131,7 +135,7 @@

/// # Safety

///

/// - The user must ensure to provide a correct MMIO start address.

- pub const unsafe fn new(mmio_start_addr: usize) -> Self {

+ pub const unsafe fn new(mmio_start_addr: Address<Virtual>) -> Self {

Self {

registers: Registers::new(mmio_start_addr),

}

@@ -198,9 +202,10 @@

/// # Safety

///

/// - The user must ensure to provide a correct MMIO start address.

- pub const unsafe fn new(mmio_start_addr: usize) -> Self {

+ pub const unsafe fn new(mmio_start_addr: Address<Virtual>, post_init_callback: fn()) -> Self {

Self {

inner: IRQSafeNullLock::new(GPIOInner::new(mmio_start_addr)),

+ post_init_callback,

}

}

@@ -219,4 +224,10 @@

fn compatible(&self) -> &'static str {

Self::COMPATIBLE

}

+

+ unsafe fn init(&self) -> Result<(), &'static str> {

+ (self.post_init_callback)();

+

+ Ok(())

+ }

}

diff -uNr 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/device_driver/bcm/bcm2xxx_interrupt_controller/peripheral_ic.rs 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/device_driver/bcm/bcm2xxx_interrupt_controller/peripheral_ic.rs

--- 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/device_driver/bcm/bcm2xxx_interrupt_controller/peripheral_ic.rs

+++ 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/device_driver/bcm/bcm2xxx_interrupt_controller/peripheral_ic.rs

@@ -7,7 +7,9 @@

use super::{InterruptController, PendingIRQs, PeripheralIRQ};

use crate::{

bsp::device_driver::common::MMIODerefWrapper,

- exception, synchronization,

+ exception,

+ memory::{Address, Virtual},

+ synchronization,

synchronization::{IRQSafeNullLock, InitStateLock},

};

use tock_registers::{

@@ -75,7 +77,7 @@

/// # Safety

///

/// - The user must ensure to provide a correct MMIO start address.

- pub const unsafe fn new(mmio_start_addr: usize) -> Self {

+ pub const unsafe fn new(mmio_start_addr: Address<Virtual>) -> Self {

Self {

wo_registers: IRQSafeNullLock::new(WriteOnlyRegisters::new(mmio_start_addr)),

ro_registers: ReadOnlyRegisters::new(mmio_start_addr),

diff -uNr 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/device_driver/bcm/bcm2xxx_interrupt_controller.rs 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/device_driver/bcm/bcm2xxx_interrupt_controller.rs

--- 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/device_driver/bcm/bcm2xxx_interrupt_controller.rs

+++ 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/device_driver/bcm/bcm2xxx_interrupt_controller.rs

@@ -6,7 +6,10 @@

mod peripheral_ic;

-use crate::{driver, exception};

+use crate::{

+ driver, exception,

+ memory::{Address, Virtual},

+};

//--------------------------------------------------------------------------------------------------

// Private Definitions

@@ -37,6 +40,7 @@

/// Representation of the Interrupt Controller.

pub struct InterruptController {

periph: peripheral_ic::PeripheralIC,

+ post_init_callback: fn(),

}

//--------------------------------------------------------------------------------------------------

@@ -82,9 +86,13 @@

/// # Safety

///

/// - The user must ensure to provide a correct MMIO start address.

- pub const unsafe fn new(periph_mmio_start_addr: usize) -> Self {

+ pub const unsafe fn new(

+ periph_mmio_start_addr: Address<Virtual>,

+ post_init_callback: fn(),

+ ) -> Self {

Self {

periph: peripheral_ic::PeripheralIC::new(periph_mmio_start_addr),

+ post_init_callback,

}

}

}

@@ -97,6 +105,12 @@

fn compatible(&self) -> &'static str {

Self::COMPATIBLE

}

+

+ unsafe fn init(&self) -> Result<(), &'static str> {

+ (self.post_init_callback)();

+

+ Ok(())

+ }

}

impl exception::asynchronous::interface::IRQManager for InterruptController {

diff -uNr 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/device_driver/bcm/bcm2xxx_pl011_uart.rs 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/device_driver/bcm/bcm2xxx_pl011_uart.rs

--- 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/device_driver/bcm/bcm2xxx_pl011_uart.rs

+++ 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/device_driver/bcm/bcm2xxx_pl011_uart.rs

@@ -10,8 +10,12 @@

//! - <https://developer.arm.com/documentation/ddi0183/latest>

use crate::{

- bsp, bsp::device_driver::common::MMIODerefWrapper, console, cpu, driver, exception,

- synchronization, synchronization::IRQSafeNullLock,

+ bsp,

+ bsp::device_driver::common::MMIODerefWrapper,

+ console, cpu, driver, exception,

+ memory::{Address, Virtual},

+ synchronization,

+ synchronization::IRQSafeNullLock,

};

use core::fmt;

use tock_registers::{

@@ -230,6 +234,7 @@

pub struct PL011Uart {

inner: IRQSafeNullLock<PL011UartInner>,

irq_number: bsp::device_driver::IRQNumber,

+ post_init_callback: fn(),

}

//--------------------------------------------------------------------------------------------------

@@ -242,7 +247,7 @@

/// # Safety

///

/// - The user must ensure to provide a correct MMIO start address.

- pub const unsafe fn new(mmio_start_addr: usize) -> Self {

+ pub const unsafe fn new(mmio_start_addr: Address<Virtual>) -> Self {

Self {

registers: Registers::new(mmio_start_addr),

chars_written: 0,

@@ -393,13 +398,16 @@

/// # Safety

///

/// - The user must ensure to provide a correct MMIO start address.

+ /// - The user must ensure to provide correct IRQ numbers.

pub const unsafe fn new(

- mmio_start_addr: usize,

+ mmio_start_addr: Address<Virtual>,

irq_number: bsp::device_driver::IRQNumber,

+ post_init_callback: fn(),

) -> Self {

Self {

inner: IRQSafeNullLock::new(PL011UartInner::new(mmio_start_addr)),

irq_number,

+ post_init_callback,

}

}

}

@@ -416,6 +424,7 @@

unsafe fn init(&self) -> Result<(), &'static str> {

self.inner.lock(|inner| inner.init());

+ (self.post_init_callback)();

Ok(())

}

diff -uNr 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/device_driver/common.rs 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/device_driver/common.rs

--- 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/device_driver/common.rs

+++ 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/device_driver/common.rs

@@ -4,6 +4,7 @@

//! Common device driver code.

+use crate::memory::{Address, Virtual};

use core::{marker::PhantomData, ops};

//--------------------------------------------------------------------------------------------------

@@ -11,7 +12,7 @@

//--------------------------------------------------------------------------------------------------

pub struct MMIODerefWrapper<T> {

- start_addr: usize,

+ start_addr: Address<Virtual>,

phantom: PhantomData<fn() -> T>,

}

@@ -21,7 +22,7 @@

impl<T> MMIODerefWrapper<T> {

/// Create an instance.

- pub const unsafe fn new(start_addr: usize) -> Self {

+ pub const unsafe fn new(start_addr: Address<Virtual>) -> Self {

Self {

start_addr,

phantom: PhantomData,

@@ -33,6 +34,6 @@

type Target = T;

fn deref(&self) -> &Self::Target {

- unsafe { &*(self.start_addr as *const _) }

+ unsafe { &*(self.start_addr.as_usize() as *const _) }

}

}

diff -uNr 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/raspberrypi/console.rs 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/raspberrypi/console.rs

--- 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/raspberrypi/console.rs

+++ 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/raspberrypi/console.rs

@@ -1,16 +0,0 @@

-// SPDX-License-Identifier: MIT OR Apache-2.0

-//

-// Copyright (c) 2018-2022 Andre Richter <andre.o.richter@gmail.com>

-

-//! BSP console facilities.

-

-use crate::console;

-

-//--------------------------------------------------------------------------------------------------

-// Public Code

-//--------------------------------------------------------------------------------------------------

-

-/// Return a reference to the console.

-pub fn console() -> &'static dyn console::interface::All {

- &super::driver::PL011_UART

-}

diff -uNr 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/raspberrypi/driver.rs 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/raspberrypi/driver.rs

--- 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/raspberrypi/driver.rs

+++ 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/raspberrypi/driver.rs

@@ -5,7 +5,16 @@

//! BSP driver support.

use super::{exception, memory::map::mmio};

-use crate::{bsp::device_driver, driver};

+use crate::{

+ bsp::device_driver,

+ console, driver, exception as generic_exception, memory,

+ memory::mmu::MMIODescriptor,

+ synchronization::{interface::ReadWriteEx, InitStateLock},

+};

+use core::{

+ mem::MaybeUninit,

+ sync::atomic::{AtomicBool, Ordering},

+};

pub use device_driver::IRQNumber;

@@ -15,35 +24,133 @@

/// Device Driver Manager type.

struct BSPDriverManager {

- device_drivers: [&'static (dyn DeviceDriver + Sync); 3],

+ device_drivers: InitStateLock<[Option<&'static (dyn DeviceDriver + Sync)>; NUM_DRIVERS]>,

+ init_done: AtomicBool,

}

//--------------------------------------------------------------------------------------------------

-// Global instances

+// Public Definitions

//--------------------------------------------------------------------------------------------------

-pub(super) static PL011_UART: device_driver::PL011Uart = unsafe {

- device_driver::PL011Uart::new(

- mmio::PL011_UART_START,

- exception::asynchronous::irq_map::PL011_UART,

- )

-};

+/// The number of active drivers provided by this BSP.

+pub const NUM_DRIVERS: usize = 3;

+

+//--------------------------------------------------------------------------------------------------

+// Global instances

+//--------------------------------------------------------------------------------------------------

-static GPIO: device_driver::GPIO = unsafe { device_driver::GPIO::new(mmio::GPIO_START) };

+static mut PL011_UART: MaybeUninit<device_driver::PL011Uart> = MaybeUninit::uninit();

+static mut GPIO: MaybeUninit<device_driver::GPIO> = MaybeUninit::uninit();

#[cfg(feature = "bsp_rpi3")]

-pub(super) static INTERRUPT_CONTROLLER: device_driver::InterruptController =

- unsafe { device_driver::InterruptController::new(mmio::PERIPHERAL_INTERRUPT_CONTROLLER_START) };

+static mut INTERRUPT_CONTROLLER: MaybeUninit<device_driver::InterruptController> =

+ MaybeUninit::uninit();

#[cfg(feature = "bsp_rpi4")]

-pub(super) static INTERRUPT_CONTROLLER: device_driver::GICv2 =

- unsafe { device_driver::GICv2::new(mmio::GICD_START, mmio::GICC_START) };

+static mut INTERRUPT_CONTROLLER: MaybeUninit<device_driver::GICv2> = MaybeUninit::uninit();

static BSP_DRIVER_MANAGER: BSPDriverManager = BSPDriverManager {

- device_drivers: [&PL011_UART, &GPIO, &INTERRUPT_CONTROLLER],

+ device_drivers: InitStateLock::new([None; NUM_DRIVERS]),

+ init_done: AtomicBool::new(false),

};

//--------------------------------------------------------------------------------------------------

+// Private Code

+//--------------------------------------------------------------------------------------------------

+

+impl BSPDriverManager {

+ unsafe fn instantiate_uart(&self) -> Result<(), &'static str> {

+ let mmio_descriptor = MMIODescriptor::new(mmio::PL011_UART_START, mmio::PL011_UART_SIZE);

+ let virt_addr =

+ memory::mmu::kernel_map_mmio(device_driver::PL011Uart::COMPATIBLE, &mmio_descriptor)?;

+

+ // This is safe to do, because it is only called from the init'ed instance itself.

+ fn uart_post_init() {

+ console::register_console(unsafe { PL011_UART.assume_init_ref() });

+ }

+

+ PL011_UART.write(device_driver::PL011Uart::new(

+ virt_addr,

+ exception::asynchronous::irq_map::PL011_UART,

+ uart_post_init,

+ ));

+

+ Ok(())

+ }

+

+ unsafe fn instantiate_gpio(&self) -> Result<(), &'static str> {

+ let mmio_descriptor = MMIODescriptor::new(mmio::GPIO_START, mmio::GPIO_SIZE);

+ let virt_addr =

+ memory::mmu::kernel_map_mmio(device_driver::GPIO::COMPATIBLE, &mmio_descriptor)?;

+

+ // This is safe to do, because it is only called from the init'ed instance itself.

+ fn gpio_post_init() {

+ unsafe { GPIO.assume_init_ref().map_pl011_uart() };

+ }

+

+ GPIO.write(device_driver::GPIO::new(virt_addr, gpio_post_init));

+

+ Ok(())

+ }

+

+ #[cfg(feature = "bsp_rpi3")]

+ unsafe fn instantiate_interrupt_controller(&self) -> Result<(), &'static str> {

+ let periph_mmio_descriptor =

+ MMIODescriptor::new(mmio::PERIPHERAL_IC_START, mmio::PERIPHERAL_IC_SIZE);

+ let periph_virt_addr = memory::mmu::kernel_map_mmio(

+ device_driver::InterruptController::COMPATIBLE,

+ &periph_mmio_descriptor,

+ )?;

+

+ // This is safe to do, because it is only called from the init'ed instance itself.

+ fn interrupt_controller_post_init() {

+ generic_exception::asynchronous::register_irq_manager(unsafe {

+ INTERRUPT_CONTROLLER.assume_init_ref()

+ });

+ }

+

+ INTERRUPT_CONTROLLER.write(device_driver::InterruptController::new(

+ periph_virt_addr,

+ interrupt_controller_post_init,

+ ));

+

+ Ok(())

+ }

+

+ #[cfg(feature = "bsp_rpi4")]

+ unsafe fn instantiate_interrupt_controller(&self) -> Result<(), &'static str> {

+ let gicd_mmio_descriptor = MMIODescriptor::new(mmio::GICD_START, mmio::GICD_SIZE);

+ let gicd_virt_addr = memory::mmu::kernel_map_mmio("GICv2 GICD", &gicd_mmio_descriptor)?;

+

+ let gicc_mmio_descriptor = MMIODescriptor::new(mmio::GICC_START, mmio::GICC_SIZE);

+ let gicc_virt_addr = memory::mmu::kernel_map_mmio("GICv2 GICC", &gicc_mmio_descriptor)?;

+

+ // This is safe to do, because it is only called from the init'ed instance itself.

+ fn interrupt_controller_post_init() {

+ generic_exception::asynchronous::register_irq_manager(unsafe {

+ INTERRUPT_CONTROLLER.assume_init_ref()

+ });

+ }

+

+ INTERRUPT_CONTROLLER.write(device_driver::GICv2::new(

+ gicd_virt_addr,

+ gicc_virt_addr,

+ interrupt_controller_post_init,

+ ));

+

+ Ok(())

+ }

+

+ unsafe fn register_drivers(&self) {

+ self.device_drivers.write(|drivers| {

+ drivers[0] = Some(PL011_UART.assume_init_ref());

+ drivers[1] = Some(GPIO.assume_init_ref());

+ drivers[2] = Some(INTERRUPT_CONTROLLER.assume_init_ref());

+ });

+ }

+}

+

+//--------------------------------------------------------------------------------------------------

// Public Code

//--------------------------------------------------------------------------------------------------

@@ -58,15 +165,34 @@

use driver::interface::DeviceDriver;

impl driver::interface::DriverManager for BSPDriverManager {

- fn all_device_drivers(&self) -> &[&'static (dyn DeviceDriver + Sync)] {

- &self.device_drivers[..]

+ unsafe fn instantiate_drivers(&self) -> Result<(), &'static str> {

+ if self.init_done.load(Ordering::Relaxed) {

+ return Err("Drivers already instantiated");

+ }

+

+ self.instantiate_uart()?;

+ self.instantiate_gpio()?;

+ self.instantiate_interrupt_controller()?;

+

+ self.register_drivers();

+

+ self.init_done.store(true, Ordering::Relaxed);

+ Ok(())

}

- fn post_device_driver_init(&self) {

- // Configure PL011Uart's output pins.

- GPIO.map_pl011_uart();

+ fn all_device_drivers(&self) -> [&(dyn DeviceDriver + Sync); NUM_DRIVERS] {

+ self.device_drivers

+ .read(|drivers| drivers.map(|drivers| drivers.unwrap()))

}

#[cfg(feature = "test_build")]

- fn qemu_bring_up_console(&self) {}

+ fn qemu_bring_up_console(&self) {

+ use crate::cpu;

+

+ unsafe {

+ self.instantiate_uart()

+ .unwrap_or_else(|_| cpu::qemu_exit_failure());

+ console::register_console(PL011_UART.assume_init_ref());

+ };

+ }

}

diff -uNr 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/raspberrypi/exception/asynchronous.rs 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/raspberrypi/exception/asynchronous.rs

--- 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/raspberrypi/exception/asynchronous.rs

+++ 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/raspberrypi/exception/asynchronous.rs

@@ -4,7 +4,7 @@

//! BSP asynchronous exception handling.

-use crate::{bsp, bsp::driver, exception};

+use crate::bsp;

//--------------------------------------------------------------------------------------------------

// Public Definitions

@@ -23,14 +23,3 @@

pub const PL011_UART: IRQNumber = IRQNumber::new(153);

}

-

-//--------------------------------------------------------------------------------------------------

-// Public Code

-//--------------------------------------------------------------------------------------------------

-

-/// Return a reference to the IRQ manager.

-pub fn irq_manager() -> &'static impl exception::asynchronous::interface::IRQManager<

- IRQNumberType = bsp::device_driver::IRQNumber,

-> {

- &driver::INTERRUPT_CONTROLLER

-}

diff -uNr 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/raspberrypi/kernel.ld 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/raspberrypi/kernel.ld

--- 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/raspberrypi/kernel.ld

+++ 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/raspberrypi/kernel.ld

@@ -38,7 +38,7 @@

***********************************************************************************************/

.boot_core_stack (NOLOAD) :

{

- /* ^ */

+ __boot_core_stack_start = .; /* ^ */

/* | stack */

. += __rpi_phys_binary_load_addr; /* | growth */

/* | direction */

@@ -68,6 +68,7 @@

/***********************************************************************************************

* Data + BSS

***********************************************************************************************/

+ __data_start = .;

.data : { *(.data*) } :segment_data

/* Section is zeroed in pairs of u64. Align start and end to 16 bytes */

@@ -78,4 +79,16 @@

. = ALIGN(16);

__bss_end_exclusive = .;

} :segment_data

+

+ . = ALIGN(PAGE_SIZE);

+ __data_end_exclusive = .;

+

+ /***********************************************************************************************

+ * MMIO Remap Reserved

+ ***********************************************************************************************/

+ __mmio_remap_start = .;

+ . += 8 * 1024 * 1024;

+ __mmio_remap_end_exclusive = .;

+

+ ASSERT((. & PAGE_MASK) == 0, "MMIO remap reservation is not page aligned")

}

diff -uNr 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/raspberrypi/memory/mmu.rs 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/raspberrypi/memory/mmu.rs

--- 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/raspberrypi/memory/mmu.rs

+++ 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/raspberrypi/memory/mmu.rs

@@ -4,70 +4,163 @@

//! BSP Memory Management Unit.

-use super::map as memory_map;

-use crate::memory::mmu::*;

-use core::ops::RangeInclusive;

+use crate::{

+ memory::{

+ mmu::{

+ self as generic_mmu, AccessPermissions, AddressSpace, AssociatedTranslationTable,

+ AttributeFields, MemAttributes, MemoryRegion, PageAddress, TranslationGranule,

+ },

+ Physical, Virtual,

+ },

+ synchronization::InitStateLock,

+};

+

+//--------------------------------------------------------------------------------------------------

+// Private Definitions

+//--------------------------------------------------------------------------------------------------

+

+type KernelTranslationTable =

+ <KernelVirtAddrSpace as AssociatedTranslationTable>::TableStartFromBottom;

//--------------------------------------------------------------------------------------------------

// Public Definitions

//--------------------------------------------------------------------------------------------------

-/// The kernel's address space defined by this BSP.

-pub type KernelAddrSpace = AddressSpace<{ memory_map::END_INCLUSIVE + 1 }>;

+/// The translation granule chosen by this BSP. This will be used everywhere else in the kernel to

+/// derive respective data structures and their sizes. For example, the `crate::memory::mmu::Page`.

+pub type KernelGranule = TranslationGranule<{ 64 * 1024 }>;

+

+/// The kernel's virtual address space defined by this BSP.

+pub type KernelVirtAddrSpace = AddressSpace<{ 1024 * 1024 * 1024 }>;

-const NUM_MEM_RANGES: usize = 2;

+//--------------------------------------------------------------------------------------------------

+// Global instances

+//--------------------------------------------------------------------------------------------------

-/// The virtual memory layout.

+/// The kernel translation tables.

///

-/// The layout must contain only special ranges, aka anything that is _not_ normal cacheable DRAM.

-/// It is agnostic of the paging granularity that the architecture's MMU will use.

-pub static LAYOUT: KernelVirtualLayout<NUM_MEM_RANGES> = KernelVirtualLayout::new(

- memory_map::END_INCLUSIVE,

- [

- TranslationDescriptor {

- name: "Kernel code and RO data",

- virtual_range: code_range_inclusive,

- physical_range_translation: Translation::Identity,

- attribute_fields: AttributeFields {

- mem_attributes: MemAttributes::CacheableDRAM,

- acc_perms: AccessPermissions::ReadOnly,

- execute_never: false,

- },

- },

- TranslationDescriptor {

- name: "Device MMIO",

- virtual_range: mmio_range_inclusive,

- physical_range_translation: Translation::Identity,

- attribute_fields: AttributeFields {

- mem_attributes: MemAttributes::Device,

- acc_perms: AccessPermissions::ReadWrite,

- execute_never: true,

- },

- },

- ],

-);

+/// It is mandatory that InitStateLock is transparent.

+///

+/// That is, `size_of(InitStateLock<KernelTranslationTable>) == size_of(KernelTranslationTable)`.

+/// There is a unit test that checks this property.

+static KERNEL_TABLES: InitStateLock<KernelTranslationTable> =

+ InitStateLock::new(KernelTranslationTable::new());

//--------------------------------------------------------------------------------------------------

// Private Code

//--------------------------------------------------------------------------------------------------

-fn code_range_inclusive() -> RangeInclusive<usize> {

- // Notice the subtraction to turn the exclusive end into an inclusive end.

- #[allow(clippy::range_minus_one)]

- RangeInclusive::new(super::code_start(), super::code_end_exclusive() - 1)

+/// Helper function for calculating the number of pages the given parameter spans.

+const fn size_to_num_pages(size: usize) -> usize {

+ assert!(size > 0);

+ assert!(size % KernelGranule::SIZE == 0);

+

+ size >> KernelGranule::SHIFT

+}

+

+/// The code pages of the kernel binary.

+fn virt_code_region() -> MemoryRegion<Virtual> {

+ let num_pages = size_to_num_pages(super::code_size());

+

+ let start_page_addr = super::virt_code_start();

+ let end_exclusive_page_addr = start_page_addr.checked_offset(num_pages as isize).unwrap();

+

+ MemoryRegion::new(start_page_addr, end_exclusive_page_addr)

+}

+

+/// The data pages of the kernel binary.

+fn virt_data_region() -> MemoryRegion<Virtual> {

+ let num_pages = size_to_num_pages(super::data_size());

+

+ let start_page_addr = super::virt_data_start();

+ let end_exclusive_page_addr = start_page_addr.checked_offset(num_pages as isize).unwrap();

+

+ MemoryRegion::new(start_page_addr, end_exclusive_page_addr)

+}

+

+/// The boot core stack pages.

+fn virt_boot_core_stack_region() -> MemoryRegion<Virtual> {

+ let num_pages = size_to_num_pages(super::boot_core_stack_size());

+

+ let start_page_addr = super::virt_boot_core_stack_start();

+ let end_exclusive_page_addr = start_page_addr.checked_offset(num_pages as isize).unwrap();

+

+ MemoryRegion::new(start_page_addr, end_exclusive_page_addr)

}

-fn mmio_range_inclusive() -> RangeInclusive<usize> {

- RangeInclusive::new(memory_map::mmio::START, memory_map::mmio::END_INCLUSIVE)

+// The binary is still identity mapped, so use this trivial conversion function for mapping below.

+

+fn kernel_virt_to_phys_region(virt_region: MemoryRegion<Virtual>) -> MemoryRegion<Physical> {

+ MemoryRegion::new(

+ PageAddress::from(virt_region.start_page_addr().into_inner().as_usize()),

+ PageAddress::from(

+ virt_region

+ .end_exclusive_page_addr()

+ .into_inner()

+ .as_usize(),

+ ),

+ )

}

//--------------------------------------------------------------------------------------------------

// Public Code

//--------------------------------------------------------------------------------------------------

-/// Return a reference to the virtual memory layout.

-pub fn virt_mem_layout() -> &'static KernelVirtualLayout<NUM_MEM_RANGES> {

- &LAYOUT

+/// Return a reference to the kernel's translation tables.

+pub fn kernel_translation_tables() -> &'static InitStateLock<KernelTranslationTable> {

+ &KERNEL_TABLES

+}

+

+/// The MMIO remap pages.

+pub fn virt_mmio_remap_region() -> MemoryRegion<Virtual> {

+ let num_pages = size_to_num_pages(super::mmio_remap_size());

+

+ let start_page_addr = super::virt_mmio_remap_start();

+ let end_exclusive_page_addr = start_page_addr.checked_offset(num_pages as isize).unwrap();

+

+ MemoryRegion::new(start_page_addr, end_exclusive_page_addr)

+}

+

+/// Map the kernel binary.

+///

+/// # Safety

+///

+/// - Any miscalculation or attribute error will likely be fatal. Needs careful manual checking.

+pub unsafe fn kernel_map_binary() -> Result<(), &'static str> {

+ generic_mmu::kernel_map_at(

+ "Kernel boot-core stack",

+ &virt_boot_core_stack_region(),

+ &kernel_virt_to_phys_region(virt_boot_core_stack_region()),

+ &AttributeFields {

+ mem_attributes: MemAttributes::CacheableDRAM,

+ acc_perms: AccessPermissions::ReadWrite,

+ execute_never: true,

+ },

+ )?;

+

+ generic_mmu::kernel_map_at(

+ "Kernel code and RO data",

+ &virt_code_region(),

+ &kernel_virt_to_phys_region(virt_code_region()),

+ &AttributeFields {

+ mem_attributes: MemAttributes::CacheableDRAM,

+ acc_perms: AccessPermissions::ReadOnly,

+ execute_never: false,

+ },

+ )?;

+

+ generic_mmu::kernel_map_at(

+ "Kernel data and bss",

+ &virt_data_region(),

+ &kernel_virt_to_phys_region(virt_data_region()),

+ &AttributeFields {

+ mem_attributes: MemAttributes::CacheableDRAM,

+ acc_perms: AccessPermissions::ReadWrite,

+ execute_never: true,

+ },

+ )?;

+

+ Ok(())

}

//--------------------------------------------------------------------------------------------------

@@ -77,38 +170,60 @@

#[cfg(test)]

mod tests {

use super::*;

+ use core::{cell::UnsafeCell, ops::Range};

use test_macros::kernel_test;

/// Check alignment of the kernel's virtual memory layout sections.

#[kernel_test]

fn virt_mem_layout_sections_are_64KiB_aligned() {

- const SIXTYFOUR_KIB: usize = 65536;

-

- for i in LAYOUT.inner().iter() {

- let start: usize = *(i.virtual_range)().start();

- let end: usize = *(i.virtual_range)().end() + 1;

-

- assert_eq!(start % SIXTYFOUR_KIB, 0);

- assert_eq!(end % SIXTYFOUR_KIB, 0);

- assert!(end >= start);

+ for i in [

+ virt_boot_core_stack_region,

+ virt_code_region,

+ virt_data_region,

+ ]

+ .iter()

+ {

+ let start = i().start_page_addr().into_inner();

+ let end_exclusive = i().end_exclusive_page_addr().into_inner();

+

+ assert!(start.is_page_aligned());

+ assert!(end_exclusive.is_page_aligned());

+ assert!(end_exclusive >= start);

}

}

/// Ensure the kernel's virtual memory layout is free of overlaps.

#[kernel_test]

fn virt_mem_layout_has_no_overlaps() {

- let layout = virt_mem_layout().inner();

-

- for (i, first) in layout.iter().enumerate() {

- for second in layout.iter().skip(i + 1) {

- let first_range = first.virtual_range;

- let second_range = second.virtual_range;

-

- assert!(!first_range().contains(second_range().start()));

- assert!(!first_range().contains(second_range().end()));

- assert!(!second_range().contains(first_range().start()));

- assert!(!second_range().contains(first_range().end()));

+ let layout = [

+ virt_boot_core_stack_region(),

+ virt_code_region(),

+ virt_data_region(),

+ ];

+

+ for (i, first_range) in layout.iter().enumerate() {

+ for second_range in layout.iter().skip(i + 1) {

+ assert!(!first_range.overlaps(second_range))

}

}

}

+

+ /// Check if KERNEL_TABLES is in .bss.

+ #[kernel_test]

+ fn kernel_tables_in_bss() {

+ extern "Rust" {

+ static __bss_start: UnsafeCell<u64>;

+ static __bss_end_exclusive: UnsafeCell<u64>;

+ }

+

+ let bss_range = unsafe {

+ Range {

+ start: __bss_start.get(),

+ end: __bss_end_exclusive.get(),

+ }

+ };

+ let kernel_tables_addr = &KERNEL_TABLES as *const _ as usize as *mut u64;

+

+ assert!(bss_range.contains(&kernel_tables_addr));

+ }

}

diff -uNr 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/raspberrypi/memory.rs 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/raspberrypi/memory.rs

--- 13_exceptions_part2_peripheral_IRQs/kernel/src/bsp/raspberrypi/memory.rs

+++ 14_virtual_mem_part2_mmio_remap/kernel/src/bsp/raspberrypi/memory.rs

@@ -10,27 +10,59 @@

//! as the boot core's stack.

//!

//! +---------------------------------------+

-//! | | 0x0

+//! | | boot_core_stack_start @ 0x0

//! | | ^

//! | Boot-core Stack | | stack

//! | | | growth

//! | | | direction

//! +---------------------------------------+

-//! | | code_start @ 0x8_0000

+//! | | code_start @ 0x8_0000 == boot_core_stack_end_exclusive

//! | .text |

//! | .rodata |

//! | .got |

//! | |

//! +---------------------------------------+

-//! | | code_end_exclusive

+//! | | data_start == code_end_exclusive

//! | .data |

//! | .bss |

//! | |

//! +---------------------------------------+

+//! | | data_end_exclusive

//! | |

+//!

+//!

+//!

+//!

+//!

+//! The virtual memory layout is as follows:

+//!

+//! +---------------------------------------+

+//! | | boot_core_stack_start @ 0x0

+//! | | ^

+//! | Boot-core Stack | | stack

+//! | | | growth

+//! | | | direction

+//! +---------------------------------------+

+//! | | code_start @ 0x8_0000 == boot_core_stack_end_exclusive

+//! | .text |

+//! | .rodata |

+//! | .got |

+//! | |

+//! +---------------------------------------+

+//! | | data_start == code_end_exclusive

+//! | .data |

+//! | .bss |

+//! | |

+//! +---------------------------------------+

+//! | | mmio_remap_start == data_end_exclusive

+//! | VA region for MMIO remapping |

+//! | |

+//! +---------------------------------------+

+//! | | mmio_remap_end_exclusive

//! | |

pub mod mmu;

+use crate::memory::{mmu::PageAddress, Address, Physical, Virtual};

use core::cell::UnsafeCell;

//--------------------------------------------------------------------------------------------------

@@ -41,6 +73,15 @@

extern "Rust" {

static __code_start: UnsafeCell<()>;

static __code_end_exclusive: UnsafeCell<()>;

+

+ static __data_start: UnsafeCell<()>;

+ static __data_end_exclusive: UnsafeCell<()>;

+

+ static __mmio_remap_start: UnsafeCell<()>;

+ static __mmio_remap_end_exclusive: UnsafeCell<()>;

+

+ static __boot_core_stack_start: UnsafeCell<()>;

+ static __boot_core_stack_end_exclusive: UnsafeCell<()>;

}

//--------------------------------------------------------------------------------------------------

@@ -50,34 +91,23 @@

/// The board's physical memory map.

#[rustfmt::skip]

pub(super) mod map {

- /// The inclusive end address of the memory map.

- ///

- /// End address + 1 must be power of two.

- ///

- /// # Note

- ///

- /// RPi3 and RPi4 boards can have different amounts of RAM. To make our code lean for

- /// educational purposes, we set the max size of the address space to 4 GiB regardless of board.

- /// This way, we can map the entire range that we need (end of MMIO for RPi4) in one take.

- ///

- /// However, making this trade-off has the downside of making it possible for the CPU to assert a

- /// physical address that is not backed by any DRAM (e.g. accessing an address close to 4 GiB on

- /// an RPi3 that comes with 1 GiB of RAM). This would result in a crash or other kind of error.

- pub const END_INCLUSIVE: usize = 0xFFFF_FFFF;

-

- pub const GPIO_OFFSET: usize = 0x0020_0000;

- pub const UART_OFFSET: usize = 0x0020_1000;

+ use super::*;

/// Physical devices.

#[cfg(feature = "bsp_rpi3")]

pub mod mmio {

use super::*;

- pub const START: usize = 0x3F00_0000;

- pub const PERIPHERAL_INTERRUPT_CONTROLLER_START: usize = START + 0x0000_B200;

- pub const GPIO_START: usize = START + GPIO_OFFSET;

- pub const PL011_UART_START: usize = START + UART_OFFSET;

- pub const END_INCLUSIVE: usize = 0x4000_FFFF;

+ pub const PERIPHERAL_IC_START: Address<Physical> = Address::new(0x3F00_B200);

+ pub const PERIPHERAL_IC_SIZE: usize = 0x24;

+

+ pub const GPIO_START: Address<Physical> = Address::new(0x3F20_0000);

+ pub const GPIO_SIZE: usize = 0xA0;

+

+ pub const PL011_UART_START: Address<Physical> = Address::new(0x3F20_1000);

+ pub const PL011_UART_SIZE: usize = 0x48;

+

+ pub const END: Address<Physical> = Address::new(0x4001_0000);

}

/// Physical devices.

@@ -85,13 +115,22 @@

pub mod mmio {

use super::*;

- pub const START: usize = 0xFE00_0000;

- pub const GPIO_START: usize = START + GPIO_OFFSET;

- pub const PL011_UART_START: usize = START + UART_OFFSET;

- pub const GICD_START: usize = 0xFF84_1000;

- pub const GICC_START: usize = 0xFF84_2000;

- pub const END_INCLUSIVE: usize = 0xFF84_FFFF;

+ pub const GPIO_START: Address<Physical> = Address::new(0xFE20_0000);

+ pub const GPIO_SIZE: usize = 0xA0;

+

+ pub const PL011_UART_START: Address<Physical> = Address::new(0xFE20_1000);

+ pub const PL011_UART_SIZE: usize = 0x48;

+

+ pub const GICD_START: Address<Physical> = Address::new(0xFF84_1000);