Mirror of https://github.com/hwchase17/langchain, synced 2024-11-06 03:20:49 +00:00

Add support for multimodal conversation in DashScope. You can now use the multimodal language models "qwen-vl-v1", "qwen-vl-chat-v1", and "qwen-audio-turbo" to process images and audio. :)

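The commit treats three model names as multimodal, separate from the text-only Qwen models. The sketch below shows how a client could branch on the model name. It is only a sketch: the `MULTIMODAL_MODELS` set and the `endpoint_kind` helper are hypothetical names chosen here, and this is not `ChatTongyi`'s actual routing code.

```python
# Hypothetical sketch: decide which kind of DashScope call a model name needs.
# The model names come from this commit message. The routing logic is an
# illustration only, not ChatTongyi's implementation.
MULTIMODAL_MODELS = frozenset({"qwen-vl-v1", "qwen-vl-chat-v1", "qwen-audio-turbo"})


def endpoint_kind(model_name: str) -> str:
    """Return "multimodal" for the listed vision/audio models, else "text"."""
    return "multimodal" if model_name in MULTIMODAL_MODELS else "text"


print(endpoint_kind("qwen-vl-v1"))  # multimodal
print(endpoint_kind("qwen-turbo"))  # text
```

A frozen set keeps membership checks constant-time and stops the list from being mutated at runtime.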
- [ ] **PR title**: "community: add multimodal conversation support in dashscope"
- [ ] **PR message**: ***Delete this entire checklist*** and replace with
    - **Description:** add multimodal conversation support in dashscope
    - **Issue:**
    - **Dependencies:** dashscope≥1.18.0
    - **Twitter handle:** none :)

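- [ ] **How to use it?**:

```python
import os

from langchain_community.chat_models.tongyi import ChatTongyi

# Assumption: the DashScope API key is stored in the DASHSCOPE_API_KEY
# environment variable.
api_key = os.environ["DASHSCOPE_API_KEY"]

tongyi_chat = ChatTongyi(
    top_p=0.5,
    dashscope_api_key=api_key,
    model="qwen-vl-v1",  # a multimodal (vision-language) model
)

response = tongyi_chat.invoke(
    input=[
        {
            "role": "user",
            "content": [
                # DashScope multimodal format: an image entry, then a text prompt
                {"image": "https://dashscope.oss-cn-beijing.aliyuncs.com/images/dog_and_girl.jpeg"},
                {"text": "What is this?"},
            ],
        }
    ]
)
print(response.content)
```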
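The `content` list in the example above pairs media entries with a text prompt. The helper below builds such a user turn for either an image or an audio input. It is a minimal sketch: `build_multimodal_message` is a hypothetical helper, not part of `langchain_community`. The `"audio"` key for "qwen-audio-turbo" is an assumption made by analogy with the `"image"` key.

```python
from typing import Any, Dict, List, Optional


def build_multimodal_message(
    text: str,
    image_url: Optional[str] = None,
    audio_url: Optional[str] = None,
) -> Dict[str, Any]:
    """Hypothetical helper: build one user turn in the DashScope-style
    multimodal format (media entries first, then the text prompt)."""
    content: List[Dict[str, str]] = []
    if image_url is not None:
        content.append({"image": image_url})
    if audio_url is not None:
        # Assumption: audio inputs use an "audio" key, by analogy with "image".
        content.append({"audio": audio_url})
    content.append({"text": text})
    return {"role": "user", "content": content}


messages = [
    build_multimodal_message(
        "What is this?",
        image_url="https://dashscope.oss-cn-beijing.aliyuncs.com/images/dog_and_girl.jpeg",
    )
]
# This list has the same shape as the `input=` passed to ChatTongyi.invoke above.
print(messages)
```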

---------

Co-authored-by: Bagatur <baskaryan@gmail.com>

| Name |
|---|
| langchain_community |
| scripts |
| tests |
| Makefile |
| poetry.lock |
| pyproject.toml |
| README.md |

🦜️🧑‍🤝‍🧑 LangChain Community

Quick Install

```bash
pip install langchain-community
```
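You can confirm the install from Python with the standard-library `importlib.metadata` module. In this sketch, `installed_version` is a hypothetical helper name, not part of `langchain-community`.

```python
from importlib import metadata
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


# Prints a version string, or None if langchain-community is not installed.
print(installed_version("langchain-community"))
```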

What is it?

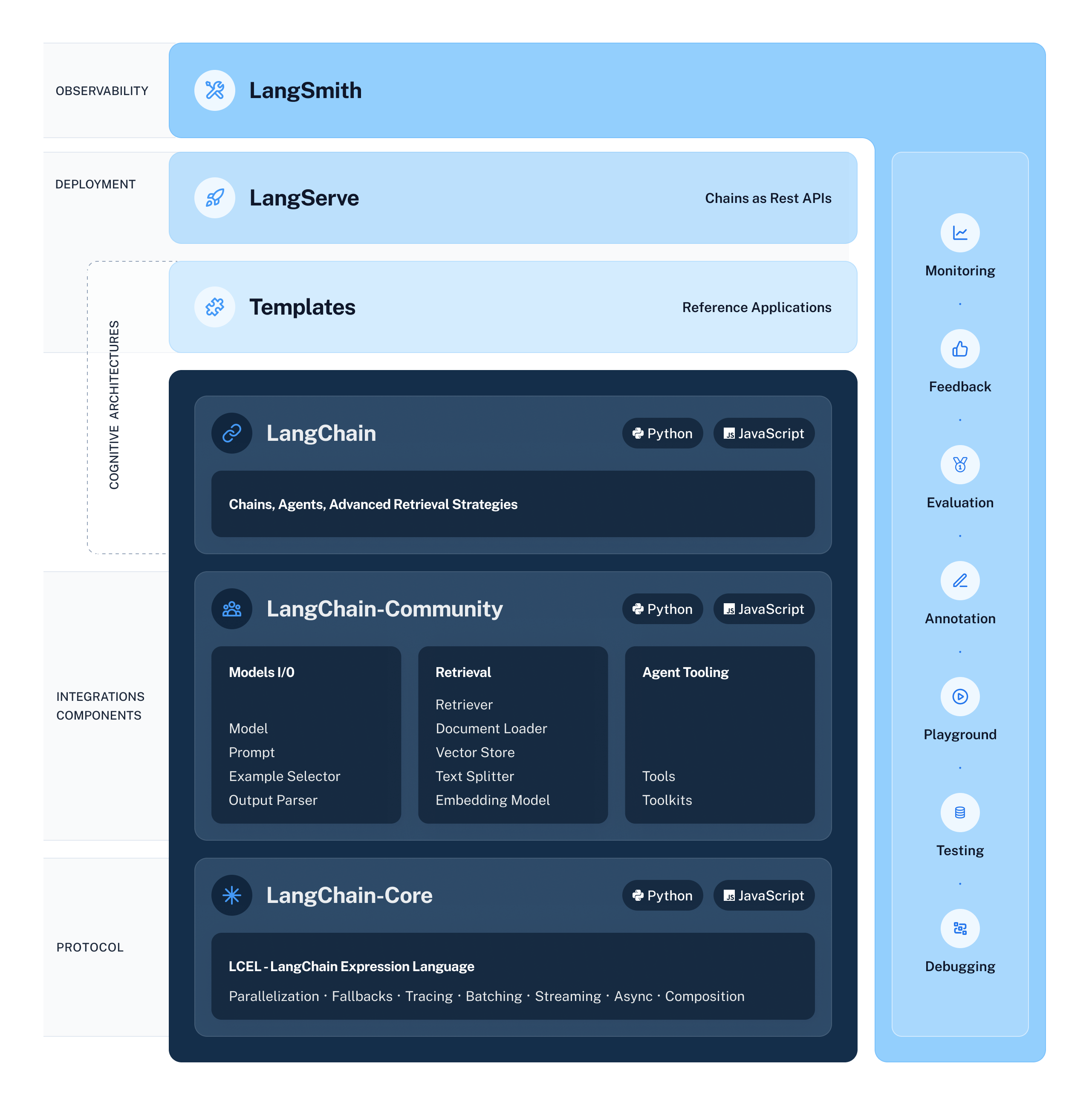

LangChain Community contains third-party integrations that implement the base interfaces defined in LangChain Core, making them ready-to-use in any LangChain application.
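The split between core interfaces and community integrations can be illustrated with a small stdlib-only sketch. `ChatInterface`, `EchoIntegration`, and `run` are hypothetical names chosen for illustration. The real base classes in LangChain Core, such as `BaseChatModel`, have much richer signatures.

```python
from abc import ABC, abstractmethod


class ChatInterface(ABC):
    """Stand-in for a base interface that a core package would define."""

    @abstractmethod
    def invoke(self, prompt: str) -> str:
        """Return the model's reply to a prompt."""


class EchoIntegration(ChatInterface):
    """Stand-in for a third-party integration that implements the interface."""

    def invoke(self, prompt: str) -> str:
        return f"echo: {prompt}"


def run(model: ChatInterface, prompt: str) -> str:
    # Application code depends only on the interface, so any integration
    # that implements it can be swapped in without changing this function.
    return model.invoke(prompt)


print(run(EchoIntegration(), "hello"))  # echo: hello
```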

For full documentation, see the API reference.

📕 Releases & Versioning

langchain-community is currently on version 0.0.x

All changes will be accompanied by a patch version increase.
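Under this policy, each release on the 0.0.x line changes only the last number. The sketch below shows that bump. It assumes plain MAJOR.MINOR.PATCH strings with no pre-release or build suffixes, and `bump_patch` is a hypothetical helper, not a project script.

```python
def bump_patch(version: str) -> str:
    """Increment the patch component of a MAJOR.MINOR.PATCH version string."""
    # Parse components as integers so that 9 -> 10 compares numerically.
    major, minor, patch = (int(part) for part in version.split("."))
    return f"{major}.{minor}.{patch + 1}"


print(bump_patch("0.0.9"))  # 0.0.10
```

Parsing the components as integers matters because "0.0.10" sorts before "0.0.9" as a plain string.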

💁 Contributing

As an open-source project in a rapidly developing field, we are extremely open to contributions, whether it be in the form of a new feature, improved infrastructure, or better documentation.

For detailed information on how to contribute, see the Contributing Guide.