Mirror of https://github.com/hwchase17/langchain (synced 2024-11-06 03:20:49 +00:00)

**Description:** [IPEX-LLM](https://github.com/intel-analytics/ipex-llm) is a PyTorch library for running LLM on Intel CPU and GPU (e.g., local PC with iGPU, discrete GPU such as Arc, Flex and Max) with very low latency. This PR adds ipex-llm integrations to langchain for BGE embedding support on both Intel CPU and GPU.

**Dependencies:** `ipex-llm`, `sentence-transformers`

**Contribution maintainer**: @Oscilloscope98

**tests and docs**:

- langchain/docs/docs/integrations/text_embedding/ipex_llm.ipynb
- langchain/docs/docs/integrations/text_embedding/ipex_llm_gpu.ipynb
- langchain/libs/community/tests/integration_tests/embeddings/test_ipex_llm.py

Co-authored-by: Shengsheng Huang <shannie.huang@gmail.com>


🦜️🧑‍🤝‍🧑 LangChain Community

Quick Install

pip install langchain-community

What is it?

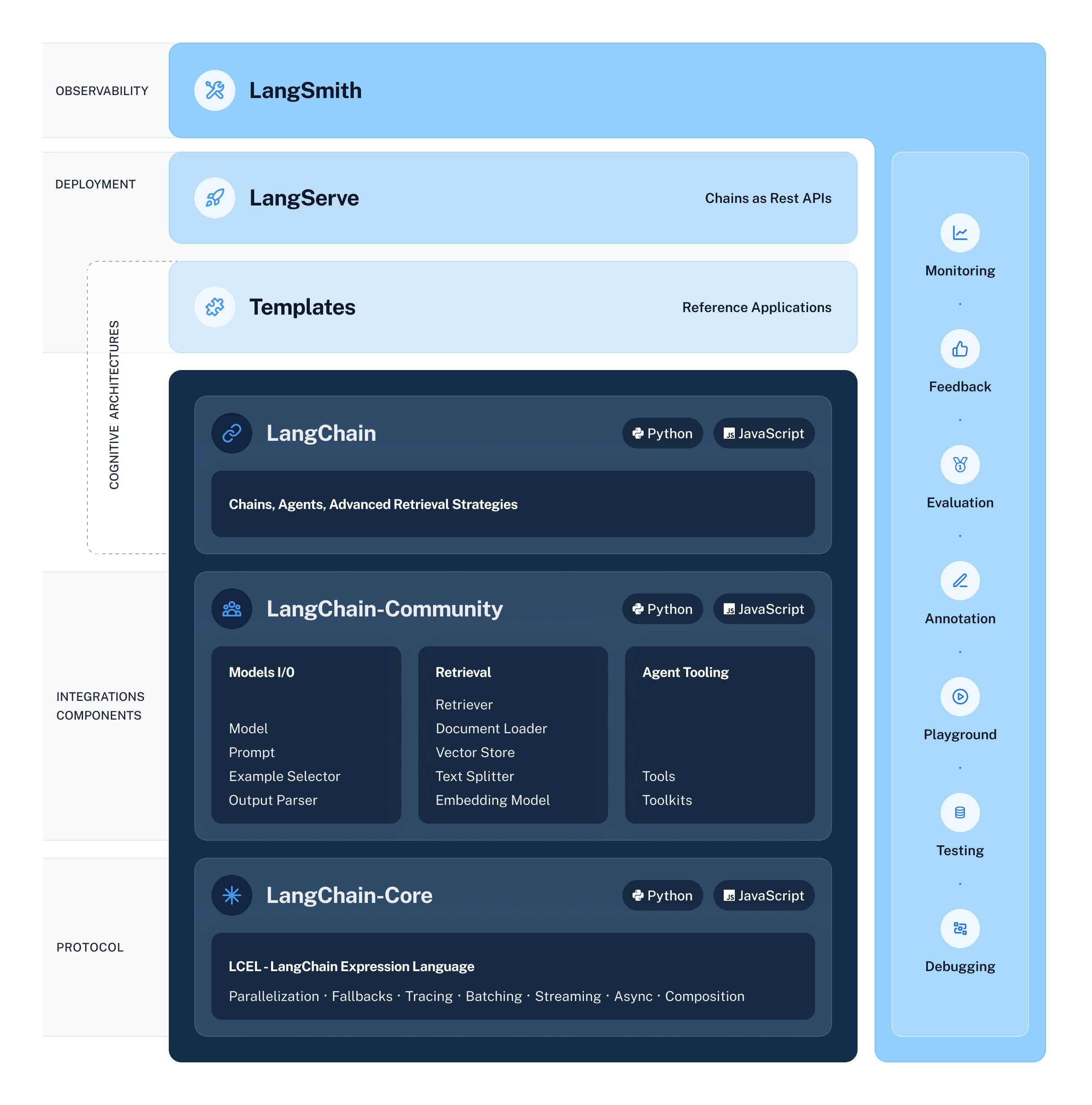

LangChain Community contains third-party integrations that implement the base interfaces defined in LangChain Core, making them ready-to-use in any LangChain application.

For full documentation see the API reference.
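To illustrate what "implementing a base interface" means in practice, here is a minimal sketch of the pattern. It runs on the standard library alone and does not import LangChain. `BaseEmbeddings`, `CharCountEmbeddings`, and `embed_corpus` are hypothetical names invented for this example. They are stand-ins shaped like an embeddings interface, not the actual LangChain Core classes.

```python
from abc import ABC, abstractmethod
from typing import List


class BaseEmbeddings(ABC):
    """Hypothetical stand-in for a core interface (not the real LangChain class)."""

    @abstractmethod
    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        """Return one vector per input text."""

    @abstractmethod
    def embed_query(self, text: str) -> List[float]:
        """Return a single vector for a query string."""


class CharCountEmbeddings(BaseEmbeddings):
    """Toy 'third-party integration': embeds text as [length, vowel count]."""

    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        return [self.embed_query(t) for t in texts]

    def embed_query(self, text: str) -> List[float]:
        vowels = sum(ch in "aeiou" for ch in text.lower())
        return [float(len(text)), float(vowels)]


def embed_corpus(embedder: BaseEmbeddings, corpus: List[str]) -> List[List[float]]:
    # Application code depends only on the interface, so any
    # implementation of BaseEmbeddings can be swapped in here.
    return embedder.embed_documents(corpus)


vectors = embed_corpus(CharCountEmbeddings(), ["hello", "LangChain"])
print(vectors)
```

Because `embed_corpus` only relies on the abstract methods, replacing `CharCountEmbeddings` with another implementation requires no change to the calling code. That substitutability is the benefit the description above attributes to shared base interfaces.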

📕 Releases & Versioning

`langchain-community` is currently on version `0.0.x`.

All changes will be accompanied by a patch version increase.

💁 Contributing

As an open-source project in a rapidly developing field, we are extremely open to contributions, whether it be in the form of a new feature, improved infrastructure, or better documentation.

For detailed information on how to contribute, see the Contributing Guide.