LangChain Templates

LangChain Templates are the easiest and fastest way to build a production-ready LLM application. These templates serve as a set of reference architectures for a wide variety of popular LLM use cases. They all share a standard format, which makes it easy to deploy them with LangServe.

🚩 We will be releasing a hosted version of LangServe for one-click deployments of LangChain applications. Sign up here to get on the waitlist.

Quick Start

To use, first install the LangChain CLI.

pip install -U langchain-cli

Next, create a new LangChain project:

langchain app new my-app

This will create a new directory called my-app with two folders:

- app: This is where LangServe code will live
- packages: This is where your chains or agents will live
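As a quick sanity check after creating the project, the layout described above can be verified with a few lines of Python. This is only a sketch: `missing_project_dirs` is a hypothetical helper, not part of the LangChain CLI, and it checks nothing beyond the two folder names mentioned here.

```python
from pathlib import Path


def missing_project_dirs(project_root: str) -> list[str]:
    """Return the expected top-level folders that are absent from project_root."""
    # The two folders this guide says `langchain app new` creates.
    expected = ["app", "packages"]
    root = Path(project_root)
    return [name for name in expected if not (root / name).is_dir()]
```

Calling `missing_project_dirs("my-app")` from the directory where you ran the command should return an empty list if both folders exist.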

To pull in an existing template as a package, you first need to go into your new project:

cd my-app

You can then add a template as a project.

In this getting started guide, we will add a simple pirate-speak project.

All this project does is convert user input into pirate speak.

langchain app add pirate-speak

This will pull the specified template into packages/pirate-speak.

You will then be prompted if you want to install it.

This is the equivalent of running pip install -e packages/pirate-speak.

You should generally accept this (or run that same command afterwards).

We install it with -e so that any changes you make to the template (which you likely will) take effect without reinstalling.

After that, it will ask you if you want to generate route code for this project. This is code you need to add to your app to start using this chain. If we accept, we will see the following code generated:

from pirate_speak.chain import chain as pirate_speak_chain

add_routes(app, pirate_speak_chain, path="/pirate-speak")
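The generated code follows a visible naming pattern: hyphens in the template name become underscores in the module name, and the URL path reuses the template name. The sketch below reproduces that pattern for illustration only. `route_snippet` is a hypothetical helper, not the CLI's implementation, and it assumes other templates follow the same convention as pirate-speak, which this guide does not guarantee.

```python
def route_snippet(template_name: str) -> str:
    """Build route code for a template, mirroring the pirate-speak example."""
    # Assumption: the importable module is the template name with
    # hyphens replaced by underscores (pirate-speak -> pirate_speak).
    module = template_name.replace("-", "_")
    alias = f"{module}_chain"
    return (
        f"from {module}.chain import chain as {alias}\n"
        "\n"
        f'add_routes(app, {alias}, path="/{template_name}")'
    )


print(route_snippet("pirate-speak"))
```

For `"pirate-speak"` this prints the same two statements shown above.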

You can now edit the template you pulled down.

You can change the code files in packages/pirate-speak to use a different model, a different prompt, or different logic.

Note that the above code snippet always expects the final chain to be importable as from pirate_speak.chain import chain, so you should either keep the structure of the package similar enough to respect that or be prepared to update that code snippet.
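After restructuring the package, one way to confirm that the import in the route code still resolves is a small check like the following. It is a minimal sketch using only the standard library; `chain_is_importable` is a hypothetical helper, and the default module and attribute names are simply the ones from the snippet above.

```python
import importlib


def chain_is_importable(
    module_name: str = "pirate_speak.chain", attr: str = "chain"
) -> bool:
    """Roughly check whether `from <module_name> import <attr>` would succeed."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:
        # The module (or one of its own imports) could not be found.
        return False
    return hasattr(module, attr)
```

Importing the module runs its top-level code, so any other error raised at import time (for example, from a model that fails to initialize) will propagate rather than return False.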

Once you have done as much of that as you want, you need to modify app/server.py in order to have LangServe use this project.

Specifically, you should add the above code snippet to app/server.py so that the file looks like:

from fastapi import FastAPI

from langserve import add_routes

from pirate_speak.chain import chain as pirate_speak_chain

app = FastAPI()

add_routes(app, pirate_speak_chain, path="/pirate-speak")

(Optional) Let's now configure LangSmith. LangSmith will help us trace, monitor, and debug LangChain applications. LangSmith is currently in private beta; you can sign up here. If you don't have access, you can skip this section.

export LANGCHAIN_TRACING_V2=true

export LANGCHAIN_API_KEY=<your-api-key>

export LANGCHAIN_PROJECT=<your-project> # if not specified, defaults to "default"
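Before starting the server, it can help to confirm that the tracing variables are actually set in the current environment. The sketch below is one way to do that. `missing_langsmith_vars` is a hypothetical helper, and treating only the first two variables as required is an assumption based on the comment above that the project name has a default.

```python
import os
from typing import Mapping, Optional


def missing_langsmith_vars(env: Optional[Mapping[str, str]] = None) -> list[str]:
    """Return the LangSmith variables from this guide that are unset or empty."""
    env = os.environ if env is None else env
    # LANGCHAIN_PROJECT is left out because it falls back to "default".
    required = ["LANGCHAIN_TRACING_V2", "LANGCHAIN_API_KEY"]
    return [name for name in required if not env.get(name)]
```

An empty list from `missing_langsmith_vars()` means both variables are present; it does not verify that the API key itself is valid.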

For this particular application, we will use OpenAI as the LLM, so we need to export our OpenAI API key:

export OPENAI_API_KEY=sk-...

You can then spin up production-ready endpoints, along with a playground, by running:

langchain serve

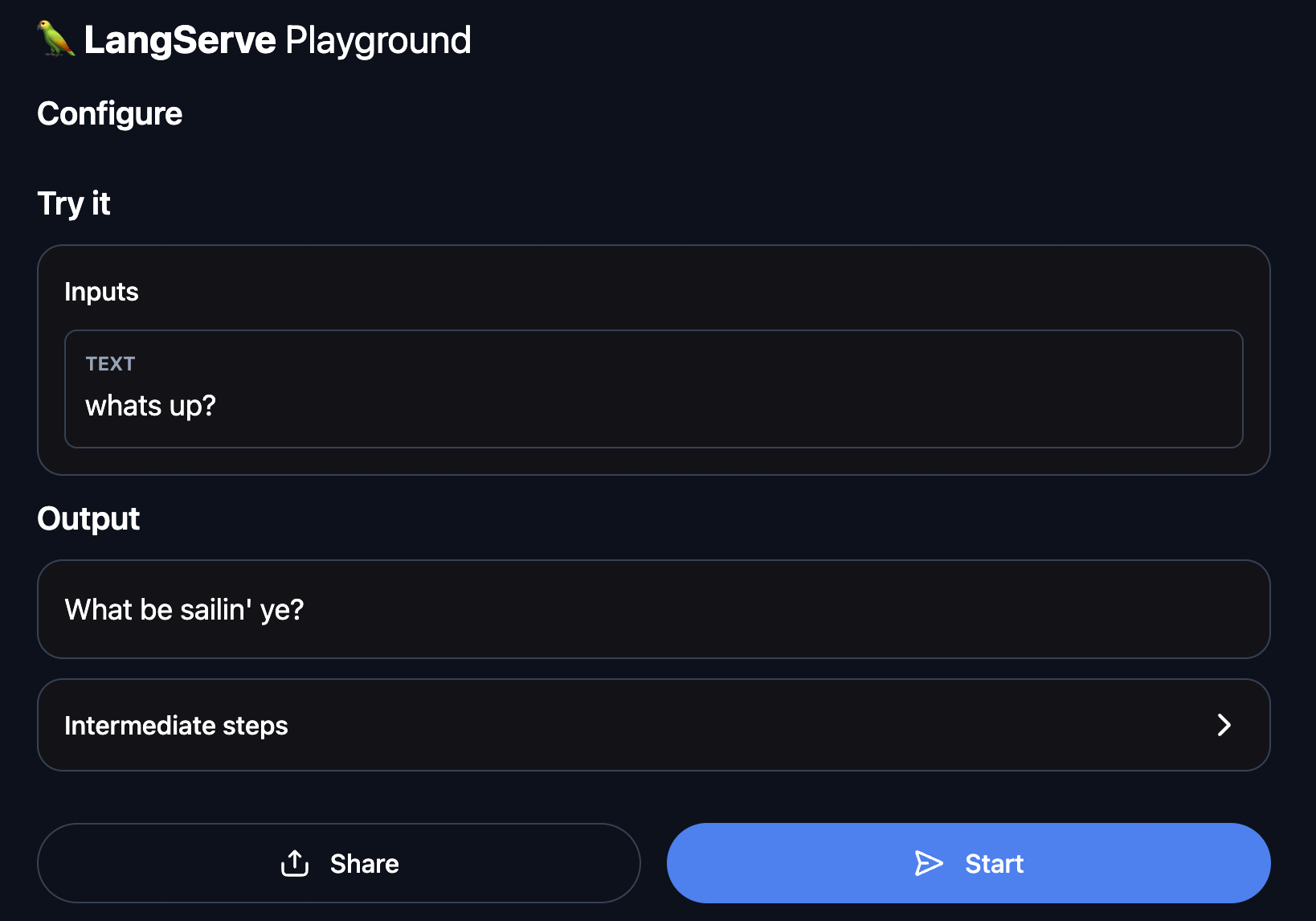

This now gives a fully deployed LangServe application. For example, you get a playground out-of-the-box at http://127.0.0.1:8000/pirate-speak/playground/:

Access API documentation at http://127.0.0.1:8000/docs

Use the LangServe Python or JS SDK to interact with the API as if it were a regular Runnable.

from langserve import RemoteRunnable

api = RemoteRunnable("http://127.0.0.1:8000/pirate-speak")

api.invoke({"text": "hi"})
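If you would rather not depend on the SDK, the same call can be made over plain HTTP. The sketch below uses only the standard library and assumes the usual LangServe REST convention: a POST to the route's /invoke endpoint with the payload under an "input" key, and the result returned under "output". Treat that request and response shape as an assumption and confirm it against the API documentation at http://127.0.0.1:8000/docs. Both function names are hypothetical.

```python
import json
import urllib.request


def build_invoke_request(base_url: str, payload: dict) -> urllib.request.Request:
    """Build a POST request for a LangServe route's /invoke endpoint."""
    # Assumption: the server expects the chain input under the "input" key.
    body = json.dumps({"input": payload}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/invoke",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def invoke(base_url: str, payload: dict):
    """Send the request and return the "output" field of the JSON response."""
    request = build_invoke_request(base_url, payload)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))["output"]
```

With the server from `langchain serve` running, `invoke("http://127.0.0.1:8000/pirate-speak", {"text": "hi"})` would be the counterpart of the `api.invoke` call above.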

That's it for the quick start! You have successfully downloaded your first template and deployed it with LangServe.

Additional Resources

Index of Templates

Explore the many templates available to use - from advanced RAG to agents.

Contributing

Want to contribute your own template? It's pretty easy! These instructions walk through how to do that.

Launching LangServe from a Package

You can also launch LangServe from a package directly (without having to create a new project). These instructions cover how to do that.