mirror of

https://github.com/hwchase17/langchain

synced 2024-11-18 09:25:54 +00:00

ElasticVectorSearch: Add in vector search backed by Elastic (#67)

woo! Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

This commit is contained in:

parent

efbc03bda8

commit

e48e562ea5

27

README.md

27

README.md

@ -47,6 +47,11 @@ The following use cases require specific installs and api keys:

|

|||||||

- Install requirements with `pip install playwright`

|

- Install requirements with `pip install playwright`

|

||||||

- _Wikipedia_:

|

- _Wikipedia_:

|

||||||

- Install requirements with `pip install wikipedia`

|

- Install requirements with `pip install wikipedia`

|

||||||

|

- _Elasticsearch_:

|

||||||

|

- Install requirements with `pip install elasticsearch`

|

||||||

|

- Set up Elasticsearch backend. If you want to do locally, [this](https://www.elastic.co/guide/en/elasticsearch/reference/7.17/getting-started.html) is a good guide.

|

||||||

|

- _FAISS_:

|

||||||

|

- Install requirements with `pip install faiss` for Python 3.7 and `pip install faiss-cpu` for Python 3.10+.

|

||||||

|

|

||||||

## 🚀 What can I do with this

|

## 🚀 What can I do with this

|

||||||

|

|

||||||

@ -98,6 +103,28 @@ question = "What NFL team won the Super Bowl in the year Justin Beiber was born?

|

|||||||

llm_chain.predict(question=question)

|

llm_chain.predict(question=question)

|

||||||

```

|

```

|

||||||

|

|

||||||

|

**Embed & Search Documents**

|

||||||

|

|

||||||

|

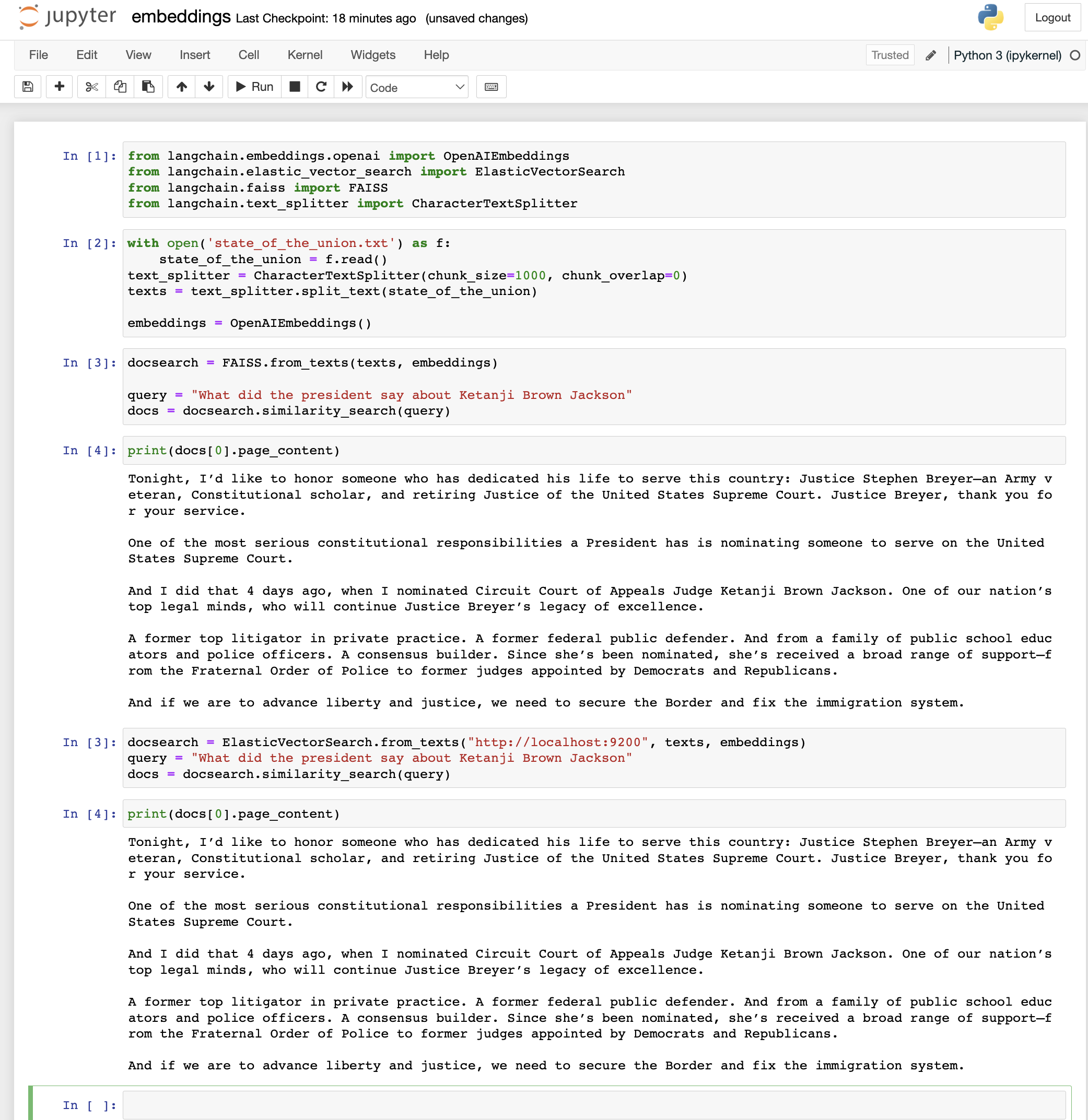

We support two vector databases to store and search embeddings -- FAISS and Elasticsearch. Here's a code snippet showing how to use FAISS to store embeddings and search for text similar to a query. Both database backends are featured in this [example notebook] (https://github.com/hwchase17/langchain/blob/master/notebooks/examples/embeddings.ipynb).

|

||||||

|

|

||||||

|

```

|

||||||

|

from langchain.embeddings.openai import OpenAIEmbeddings

|

||||||

|

from langchain.faiss import FAISS

|

||||||

|

from langchain.text_splitter import CharacterTextSplitter

|

||||||

|

|

||||||

|

with open('state_of_the_union.txt') as f:

|

||||||

|

state_of_the_union = f.read()

|

||||||

|

text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)

|

||||||

|

texts = text_splitter.split_text(state_of_the_union)

|

||||||

|

|

||||||

|

embeddings = OpenAIEmbeddings()

|

||||||

|

|

||||||

|

docsearch = FAISS.from_texts(texts, embeddings)

|

||||||

|

|

||||||

|

query = "What did the president say about Ketanji Brown Jackson"

|

||||||

|

docs = docsearch.similarity_search(query)

|

||||||

|

```

|

||||||

|

|

||||||

## 📖 Documentation

|

## 📖 Documentation

|

||||||

|

|

||||||

The above examples are probably the most user friendly documentation that exists,

|

The above examples are probably the most user friendly documentation that exists,

|

||||||

|

|||||||

@ -8,6 +8,7 @@

|

|||||||

"outputs": [],

|

"outputs": [],

|

||||||

"source": [

|

"source": [

|

||||||

"from langchain.embeddings.openai import OpenAIEmbeddings\n",

|

"from langchain.embeddings.openai import OpenAIEmbeddings\n",

|

||||||

|

"from langchain.elastic_vector_search import ElasticVectorSearch\n",

|

||||||

"from langchain.faiss import FAISS\n",

|

"from langchain.faiss import FAISS\n",

|

||||||

"from langchain.text_splitter import CharacterTextSplitter"

|

"from langchain.text_splitter import CharacterTextSplitter"

|

||||||

]

|

]

|

||||||

@ -24,8 +25,7 @@

|

|||||||

"text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)\n",

|

"text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=0)\n",

|

||||||

"texts = text_splitter.split_text(state_of_the_union)\n",

|

"texts = text_splitter.split_text(state_of_the_union)\n",

|

||||||

"\n",

|

"\n",

|

||||||

"embeddings = OpenAIEmbeddings()\n",

|

"embeddings = OpenAIEmbeddings()"

|

||||||

"docsearch = FAISS.from_texts(texts, embeddings)"

|

|

||||||

]

|

]

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

@ -35,6 +35,8 @@

|

|||||||

"metadata": {},

|

"metadata": {},

|

||||||

"outputs": [],

|

"outputs": [],

|

||||||

"source": [

|

"source": [

|

||||||

|

"docsearch = FAISS.from_texts(texts, embeddings)\n",

|

||||||

|

"\n",

|

||||||

"query = \"What did the president say about Ketanji Brown Jackson\"\n",

|

"query = \"What did the president say about Ketanji Brown Jackson\"\n",

|

||||||

"docs = docsearch.similarity_search(query)"

|

"docs = docsearch.similarity_search(query)"

|

||||||

]

|

]

|

||||||

@ -65,10 +67,49 @@

|

|||||||

"print(docs[0].page_content)"

|

"print(docs[0].page_content)"

|

||||||

]

|

]

|

||||||

},

|

},

|

||||||

|

{

|

||||||

|

"cell_type": "code",

|

||||||

|

"execution_count": 3,

|

||||||

|

"id": "4906b8a3",

|

||||||

|

"metadata": {},

|

||||||

|

"outputs": [],

|

||||||

|

"source": [

|

||||||

|

"docsearch = ElasticVectorSearch.from_texts(\"http://localhost:9200\", texts, embeddings)\n",

|

||||||

|

"\n",

|

||||||

|

"query = \"What did the president say about Ketanji Brown Jackson\"\n",

|

||||||

|

"docs = docsearch.similarity_search(query)"

|

||||||

|

]

|

||||||

|

},

|

||||||

|

{

|

||||||

|

"cell_type": "code",

|

||||||

|

"execution_count": 4,

|

||||||

|

"id": "95f9eee9",

|

||||||

|

"metadata": {},

|

||||||

|

"outputs": [

|

||||||

|

{

|

||||||

|

"name": "stdout",

|

||||||

|

"output_type": "stream",

|

||||||

|

"text": [

|

||||||

|

"Tonight, I’d like to honor someone who has dedicated his life to serve this country: Justice Stephen Breyer—an Army veteran, Constitutional scholar, and retiring Justice of the United States Supreme Court. Justice Breyer, thank you for your service. \n",

|

||||||

|

"\n",

|

||||||

|

"One of the most serious constitutional responsibilities a President has is nominating someone to serve on the United States Supreme Court. \n",

|

||||||

|

"\n",

|

||||||

|

"And I did that 4 days ago, when I nominated Circuit Court of Appeals Judge Ketanji Brown Jackson. One of our nation’s top legal minds, who will continue Justice Breyer’s legacy of excellence. \n",

|

||||||

|

"\n",

|

||||||

|

"A former top litigator in private practice. A former federal public defender. And from a family of public school educators and police officers. A consensus builder. Since she’s been nominated, she’s received a broad range of support—from the Fraternal Order of Police to former judges appointed by Democrats and Republicans. \n",

|

||||||

|

"\n",

|

||||||

|

"And if we are to advance liberty and justice, we need to secure the Border and fix the immigration system. \n"

|

||||||

|

]

|

||||||

|

}

|

||||||

|

],

|

||||||

|

"source": [

|

||||||

|

"print(docs[0].page_content)"

|

||||||

|

]

|

||||||

|

},

|

||||||

{

|

{

|

||||||

"cell_type": "code",

|

"cell_type": "code",

|

||||||

"execution_count": null,

|

"execution_count": null,

|

||||||

"id": "25500fa6",

|

"id": "70a253c4",

|

||||||

"metadata": {},

|

"metadata": {},

|

||||||

"outputs": [],

|

"outputs": [],

|

||||||

"source": []

|

"source": []

|

||||||

@ -90,7 +131,7 @@

|

|||||||

"name": "python",

|

"name": "python",

|

||||||

"nbconvert_exporter": "python",

|

"nbconvert_exporter": "python",

|

||||||

"pygments_lexer": "ipython3",

|

"pygments_lexer": "ipython3",

|

||||||

"version": "3.7.6"

|

"version": "3.10.4"

|

||||||

}

|

}

|

||||||

},

|

},

|

||||||

"nbformat": 4,

|

"nbformat": 4,

|

||||||

|

|||||||

@ -16,6 +16,7 @@ from langchain.chains import (

|

|||||||

SQLDatabaseChain,

|

SQLDatabaseChain,

|

||||||

)

|

)

|

||||||

from langchain.docstore import Wikipedia

|

from langchain.docstore import Wikipedia

|

||||||

|

from langchain.elastic_vector_search import ElasticVectorSearch

|

||||||

from langchain.faiss import FAISS

|

from langchain.faiss import FAISS

|

||||||

from langchain.llms import Cohere, HuggingFaceHub, OpenAI

|

from langchain.llms import Cohere, HuggingFaceHub, OpenAI

|

||||||

from langchain.prompts import BasePrompt, DynamicPrompt, Prompt

|

from langchain.prompts import BasePrompt, DynamicPrompt, Prompt

|

||||||

@ -39,4 +40,5 @@ __all__ = [

|

|||||||

"SQLDatabaseChain",

|

"SQLDatabaseChain",

|

||||||

"FAISS",

|

"FAISS",

|

||||||

"MRKLChain",

|

"MRKLChain",

|

||||||

|

"ElasticVectorSearch",

|

||||||

]

|

]

|

||||||

|

|||||||

146

langchain/elastic_vector_search.py

Normal file

146

langchain/elastic_vector_search.py

Normal file

@ -0,0 +1,146 @@

|

|||||||

|

"""Wrapper around Elasticsearch vector database."""

|

||||||

|

import uuid

|

||||||

|

from typing import Callable, Dict, List

|

||||||

|

|

||||||

|

from langchain.docstore.document import Document

|

||||||

|

from langchain.embeddings.base import Embeddings

|

||||||

|

|

||||||

|

|

||||||

|

def _default_text_mapping(dim: int) -> Dict:

|

||||||

|

return {

|

||||||

|

"properties": {

|

||||||

|

"text": {"type": "text"},

|

||||||

|

"vector": {"type": "dense_vector", "dims": dim},

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

|

||||||

|

def _default_script_query(query_vector: List[int]) -> Dict:

|

||||||

|

return {

|

||||||

|

"script_score": {

|

||||||

|

"query": {"match_all": {}},

|

||||||

|

"script": {

|

||||||

|

"source": "cosineSimilarity(params.query_vector, 'vector') + 1.0",

|

||||||

|

"params": {"query_vector": query_vector},

|

||||||

|

},

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

|

||||||

|

class ElasticVectorSearch:

|

||||||

|

"""Wrapper around Elasticsearch as a vector database.

|

||||||

|

|

||||||

|

Example:

|

||||||

|

.. code-block:: python

|

||||||

|

|

||||||

|

from langchain import ElasticVectorSearch

|

||||||

|

elastic_vector_search = ElasticVectorSearch(

|

||||||

|

"http://localhost:9200",

|

||||||

|

"embeddings",

|

||||||

|

mapping,

|

||||||

|

embedding_function

|

||||||

|

)

|

||||||

|

|

||||||

|

"""

|

||||||

|

|

||||||

|

def __init__(

|

||||||

|

self,

|

||||||

|

elastic_url: str,

|

||||||

|

index_name: str,

|

||||||

|

mapping: Dict,

|

||||||

|

embedding_function: Callable,

|

||||||

|

):

|

||||||

|

"""Initialize with necessary components."""

|

||||||

|

try:

|

||||||

|

import elasticsearch

|

||||||

|

except ImportError:

|

||||||

|

raise ValueError(

|

||||||

|

"Could not import elasticsearch python packge. "

|

||||||

|

"Please install it with `pip install elasticearch`."

|

||||||

|

)

|

||||||

|

self.embedding_function = embedding_function

|

||||||

|

self.index_name = index_name

|

||||||

|

try:

|

||||||

|

es_client = elasticsearch.Elasticsearch(elastic_url) # noqa

|

||||||

|

except ValueError as e:

|

||||||

|

raise ValueError(

|

||||||

|

"Your elasticsearch client string is misformatted. " f"Got error: {e} "

|

||||||

|

)

|

||||||

|

self.client = es_client

|

||||||

|

self.mapping = mapping

|

||||||

|

|

||||||

|

def similarity_search(self, query: str, k: int = 4) -> List[Document]:

|

||||||

|

"""Return docs most similar to query.

|

||||||

|

|

||||||

|

Args:

|

||||||

|

query: Text to look up documents similar to.

|

||||||

|

k: Number of Documents to return. Defaults to 4.

|

||||||

|

|

||||||

|

Returns:

|

||||||

|

List of Documents most similar to the query.

|

||||||

|

"""

|

||||||

|

embedding = self.embedding_function(query)

|

||||||

|

script_query = _default_script_query(embedding)

|

||||||

|

response = self.client.search(index=self.index_name, query=script_query)

|

||||||

|

texts = [hit["_source"]["text"] for hit in response["hits"]["hits"][:k]]

|

||||||

|

documents = [Document(page_content=text) for text in texts]

|

||||||

|

return documents

|

||||||

|

|

||||||

|

@classmethod

|

||||||

|

def from_texts(

|

||||||

|

cls, elastic_url: str, texts: List[str], embedding: Embeddings

|

||||||

|

) -> "ElasticVectorSearch":

|

||||||

|

"""Construct ElasticVectorSearch wrapper from raw documents.

|

||||||

|

|

||||||

|

This is a user friendly interface that:

|

||||||

|

1. Embeds documents.

|

||||||

|

2. Creates a new index for the embeddings in the Elasticsearch instance.

|

||||||

|

3. Adds the documents to the newly created Elasticsearch index.

|

||||||

|

|

||||||

|

This is intended to be a quick way to get started.

|

||||||

|

|

||||||

|

Example:

|

||||||

|

.. code-block:: python

|

||||||

|

|

||||||

|

from langchain import ElasticVectorSearch

|

||||||

|

from langchain.embeddings import OpenAIEmbeddings

|

||||||

|

embeddings = OpenAIEmbeddings()

|

||||||

|

elastic_vector_search = ElasticVectorSearch.from_texts(

|

||||||

|

"http://localhost:9200",

|

||||||

|

texts,

|

||||||

|

embeddings

|

||||||

|

)

|

||||||

|

"""

|

||||||

|

try:

|

||||||

|

import elasticsearch

|

||||||

|

from elasticsearch.helpers import bulk

|

||||||

|

except ImportError:

|

||||||

|

raise ValueError(

|

||||||

|

"Could not import elasticsearch python packge. "

|

||||||

|

"Please install it with `pip install elasticearch`."

|

||||||

|

)

|

||||||

|

try:

|

||||||

|

client = elasticsearch.Elasticsearch(elastic_url)

|

||||||

|

except ValueError as e:

|

||||||

|

raise ValueError(

|

||||||

|

"Your elasticsearch client string is misformatted. " f"Got error: {e} "

|

||||||

|

)

|

||||||

|

index_name = uuid.uuid4().hex

|

||||||

|

embeddings = embedding.embed_documents(texts)

|

||||||

|

dim = len(embeddings[0])

|

||||||

|

mapping = _default_text_mapping(dim)

|

||||||

|

# TODO would be nice to create index before embedding,

|

||||||

|

# just to save expensive steps for last

|

||||||

|

client.indices.create(index=index_name, mappings=mapping)

|

||||||

|

requests = []

|

||||||

|

for i, text in enumerate(texts):

|

||||||

|

request = {

|

||||||

|

"_op_type": "index",

|

||||||

|

"_index": index_name,

|

||||||

|

"vector": embeddings[i],

|

||||||

|

"text": text,

|

||||||

|

}

|

||||||

|

requests.append(request)

|

||||||

|

bulk(client, requests)

|

||||||

|

client.indices.refresh(index=index_name)

|

||||||

|

return cls(elastic_url, index_name, mapping, embedding.embed_query)

|

||||||

@ -1,6 +1,7 @@

|

|||||||

-r test_requirements.txt

|

-r test_requirements.txt

|

||||||

# For integrations

|

# For integrations

|

||||||

cohere

|

cohere

|

||||||

|

elasticsearch

|

||||||

openai

|

openai

|

||||||

google-search-results

|

google-search-results

|

||||||

nlpcloud

|

nlpcloud

|

||||||

|

|||||||

Loading…

Reference in New Issue

Block a user