@eyurtsev

When a Confluence document contains attachments whose content is not in English, the text extracted from those attachments is garbled.

This happens mainly because no `lang` parameter is passed to pytesseract, which supports only English by default.

So I added an `ocr_languages` parameter to `ConfluenceLoader.load()` to support multiple languages.
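As a rough illustration of the cause, the sketch below forwards a language setting to pytesseract. `pytesseract.image_to_string` accepts a `lang` argument, and Tesseract combines several languages with `+`. The helper names `build_lang_arg` and `ocr_attachment` are hypothetical and are not the loader's actual code.

```python
from typing import Optional, Sequence


def build_lang_arg(languages: Optional[Sequence[str]]) -> Optional[str]:
    """Join Tesseract language codes with '+', e.g. ['eng', 'chi_sim'] -> 'eng+chi_sim'."""
    if not languages:
        return None  # let Tesseract fall back to its default (English)
    return "+".join(languages)


def ocr_attachment(image, languages: Optional[Sequence[str]] = None) -> str:
    """Run OCR on a PIL image, forwarding the language setting when given."""
    import pytesseract  # imported lazily; also requires the Tesseract binary

    return pytesseract.image_to_string(image, lang=build_lang_arg(languages))
```

The matching Tesseract language data (for example `chi_sim`) has to be installed for a non-English `lang` value to work.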

Fixes a (previously unreported) error that can occur in some cases in `RecursiveCharacterTextSplitter`.

An empty `new_separators` list (`[]`) falls into the `else` branch of the condition below and is passed to a function that expects it to be non-empty.

```python
if new_separators is None:
    ...
else:
    # _split_text() expects this list to be non-empty!
    other_info = self._split_text(s, new_separators)
```

resulting in an `IndexError`:

```python
    def _split_text(self, text: str, separators: List[str]) -> List[str]:
        """Split incoming text and return chunks."""
        final_chunks = []
        # Get appropriate separator to use
>       separator = separators[-1]
E       IndexError: list index out of range

langchain/text_splitter.py:425: IndexError
```
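The failure mode can be reproduced outside the library with a minimal sketch. The function names below are hypothetical, and treating `[]` the same as `None` is an assumption about one reasonable guard, not necessarily the exact change made in this PR.

```python
from typing import List, Optional


def pick_separator(separators: List[str]) -> str:
    """Mimics the failing line: indexing the last element of the list."""
    return separators[-1]


def split_further(text: str, new_separators: Optional[List[str]]) -> List[str]:
    """Only split again when there is at least one separator left to try."""
    if not new_separators:  # covers both None and []
        return [text]
    separator = pick_separator(new_separators)
    # str.split("") raises ValueError, so fall back to single characters
    return text.split(separator) if separator else list(text)
```

Checking `if not new_separators` instead of `is None` keeps the empty list out of the branch that indexes into it.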

#### Who can review?

@hwchase17 @eyurtsev

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

This fixes a token limit bug in the `SentenceTransformersTokenTextSplitter`. Previously, the token limit was taken from the tokenizer used by the model. However, for some models the token limit of the tokenizer (from `AutoTokenizer.from_pretrained`) does not equal the token limit of the model, so that assumption was false. The text splitter now takes its token limit from the sentence transformers model itself.

Twitter: @plasmajens

#### Who can review?

@hwchase17 and/or @dev2049

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

This PR updates the Vectara integration (@hwchase17):

* Adds reuse of requests.session to improve efficiency and speed.
* Uses Vectara's low-level API (instead of the standard API) to better match the user's specific chunking with LangChain.
* `add_texts` now puts all the texts into a single Vectara document, so indexing is much faster.
* Renames the variable `alpha` to `lambda_val` (to be consistent with the Vectara docs) and adds `n_context_sentence` so it is available if needed.
* Updates documentation and tests.

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

# Unstructured XML Loader

Adds an `UnstructuredXMLLoader` class for .xml files. Works with

unstructured>=0.6.7. A plain text representation of the text with the

XML tags will be available under the `page_content` attribute in the

doc.

### Testing

```python
from langchain.document_loaders import UnstructuredXMLLoader

loader = UnstructuredXMLLoader(
    "example_data/factbook.xml",
)
docs = loader.load()
```

## Who can review?

@hwchase17

@eyurtsev

Added the AwaDB vector store, a wrapper over AwaDB that can be used as vector storage and has efficient similarity search.

Added integration tests for the vector store.

Added a Jupyter notebook with an example.

Deleted an unneeded empty file and resolved the conflict (https://github.com/hwchase17/langchain/pull/5886).

Please check, thanks!

@dev2049

@hwchase17

---------

Co-authored-by: ljeagle <vincent_jieli@yeah.net>

Co-authored-by: vincent <awadb.vincent@gmail.com>

Inspired by the SQL chain, the following three parameters are added to the Graph Cypher Chain:

- top_k: Limits the number of results from the database to be used as context
- return_direct: Returns database results without transforming them to natural language
- return_intermediate_steps: Returns intermediate steps
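To make the effect of the three options concrete, here is a minimal standalone sketch. `run_graph_qa`, the `summarize` callback, the default `top_k=10`, and the shape of the returned dictionary are all assumptions for illustration. This is not the chain's actual implementation.

```python
from typing import Any, Callable, Dict, List


def run_graph_qa(
    rows: List[Dict[str, Any]],
    summarize: Callable[[List[Dict[str, Any]]], str],
    top_k: int = 10,
    return_direct: bool = False,
    return_intermediate_steps: bool = False,
) -> Dict[str, Any]:
    """Illustrates how the three options could shape the chain output."""
    context = rows[:top_k]  # top_k: cap the rows used as context
    # return_direct: hand back the raw rows instead of a natural-language answer
    result = context if return_direct else summarize(context)
    output: Dict[str, Any] = {"result": result}
    if return_intermediate_steps:
        output["intermediate_steps"] = [{"context": context}]
    return output
```

Here `rows` stands in for the database results of the generated Cypher query, and `summarize` stands in for the LLM call that turns them into prose.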

Hi,

This is a follow-up fix for https://github.com/hwchase17/langchain/pull/5014. That PR did not add the ability to self-correct the `ValueError(f"Could not parse LLM output: {llm_output}")` error in `_atake_next_step`.

Fixed a simple typo on
https://python.langchain.com/en/latest/modules/indexes/retrievers/examples/vectorstore.html
where the word "use" was missing.

**Fix SnowflakeLoader's Behavior of Returning Empty Documents**

**Description:**

This PR addresses the issue where the SnowflakeLoader was consistently

returning empty documents. After investigation, it was found that the

query method within the SnowflakeLoader was not properly fetching and

processing the data.

**Changes:**

1. Modified the query method in SnowflakeLoader to handle data fetch and

processing more accurately.

2. Enhanced error handling within the SnowflakeLoader to catch and log

potential issues that may arise during data loading.

**Impact:**

This fix will ensure the SnowflakeLoader reliably returns the expected

documents instead of empty ones, improving the efficiency and

reliability of data processing tasks in the LangChain project.

Before Fix:

`[

Document(page_content='', metadata={}),

Document(page_content='', metadata={}),

Document(page_content='', metadata={}),

Document(page_content='', metadata={}),

Document(page_content='', metadata={}),

Document(page_content='', metadata={}),

Document(page_content='', metadata={}),

Document(page_content='', metadata={}),

Document(page_content='', metadata={}),

Document(page_content='', metadata={})

]`

After Fix:

`[Document(page_content='CUSTOMER_ID: 1\nFIRST_NAME: John\nLAST_NAME:

Doe\nEMAIL: john.doe@example.com\nPHONE: 555-123-4567\nADDRESS: 123 Elm

St, San Francisco, CA 94102', metadata={}),

Document(page_content='CUSTOMER_ID: 2\nFIRST_NAME: Jane\nLAST_NAME:

Doe\nEMAIL: jane.doe@example.com\nPHONE: 555-987-6543\nADDRESS: 456 Oak

St, San Francisco, CA 94103', metadata={}),

Document(page_content='CUSTOMER_ID: 3\nFIRST_NAME: Michael\nLAST_NAME:

Smith\nEMAIL: michael.smith@example.com\nPHONE: 555-234-5678\nADDRESS:

789 Pine St, San Francisco, CA 94104', metadata={}),

Document(page_content='CUSTOMER_ID: 4\nFIRST_NAME: Emily\nLAST_NAME:

Johnson\nEMAIL: emily.johnson@example.com\nPHONE: 555-345-6789\nADDRESS:

321 Maple St, San Francisco, CA 94105', metadata={}),

Document(page_content='CUSTOMER_ID: 5\nFIRST_NAME: David\nLAST_NAME:

Williams\nEMAIL: david.williams@example.com\nPHONE:

555-456-7890\nADDRESS: 654 Birch St, San Francisco, CA 94106',

metadata={}), Document(page_content='CUSTOMER_ID: 6\nFIRST_NAME:

Emma\nLAST_NAME: Jones\nEMAIL: emma.jones@example.com\nPHONE:

555-567-8901\nADDRESS: 987 Cedar St, San Francisco, CA 94107',

metadata={}), Document(page_content='CUSTOMER_ID: 7\nFIRST_NAME:

Oliver\nLAST_NAME: Brown\nEMAIL: oliver.brown@example.com\nPHONE:

555-678-9012\nADDRESS: 147 Cherry St, San Francisco, CA 94108',

metadata={}), Document(page_content='CUSTOMER_ID: 8\nFIRST_NAME:

Sophia\nLAST_NAME: Davis\nEMAIL: sophia.davis@example.com\nPHONE:

555-789-0123\nADDRESS: 369 Walnut St, San Francisco, CA 94109',

metadata={}), Document(page_content='CUSTOMER_ID: 9\nFIRST_NAME:

James\nLAST_NAME: Taylor\nEMAIL: james.taylor@example.com\nPHONE:

555-890-1234\nADDRESS: 258 Hawthorn St, San Francisco, CA 94110',

metadata={}), Document(page_content='CUSTOMER_ID: 10\nFIRST_NAME:

Isabella\nLAST_NAME: Wilson\nEMAIL: isabella.wilson@example.com\nPHONE:

555-901-2345\nADDRESS: 963 Aspen St, San Francisco, CA 94111',

metadata={})]

`

**Tests:**

All unit and integration tests have been run and passed successfully.

Additional tests were added to validate the new behavior of the

SnowflakeLoader.

**Checklist:**

- [x] Code changes are covered by tests

- [x] Code passes `make format` and `make lint`

- [x] This PR does not introduce any breaking changes

Please review and let me know if any changes are required.

"One Retriever to merge them all, One Retriever to expose them, One Retriever to bring them all in and process them with Document formatters."

Hi @dev2049! Here bothering people again!

I'm using this simple idea to merge the output of several retrievers into one.

I'm aware of DocumentCompressorPipeline and ContextualCompressionRetriever, but I don't think they allow us to do something like this. I also had trouble getting the pipeline working. Please correct me if I'm wrong.

This allows some sort of "retrieval" preprocessing, after which the curated results can be used anywhere you could use a retriever.

My use case is to generate different indexes with different embeddings and sources for more colorful results, then filter them with one or more document formatters.
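The merging idea can be sketched without any LangChain classes. Below, a "retriever" is modeled as a plain callable from a query to a list of strings. Interleaving the results and dropping duplicates are assumptions about one sensible merge policy, and `merge_retrievers` is a hypothetical name.

```python
from itertools import zip_longest
from typing import Callable, List, Sequence

Retriever = Callable[[str], List[str]]  # stand-in for a retriever: query -> documents


def merge_retrievers(query: str, retrievers: Sequence[Retriever]) -> List[str]:
    """Interleave the results of several retrievers, dropping duplicates."""
    results = [retriever(query) for retriever in retrievers]
    merged: List[str] = []
    seen = set()
    missing = object()  # sentinel for retrievers that returned fewer documents
    for group in zip_longest(*results, fillvalue=missing):
        for doc in group:
            if doc is not missing and doc not in seen:
                seen.add(doc)
                merged.append(doc)
    return merged
```

Interleaving keeps the top hit of every retriever near the front, so a later filtering step sees a mix of sources rather than one retriever's results first.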

I saw some people looking for something like this, here:

https://github.com/hwchase17/langchain/issues/3991

and something similar here:

https://github.com/hwchase17/langchain/issues/5555

This is just a proposal; I know I'm missing tests, etc. If you think this is a worthwhile idea, I can work on tests and anything you want to change.

Let me know!

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

# Expose full params in Qdrant

There were many questions about supporting additional parameters in the Qdrant integration. Qdrant supports many vector search optimizations that previously could not be used directly through the `Qdrant` class.

That includes:

1. The ability to set collection parameters when using `Qdrant.from_texts`. The PR allows setting things such as quantization, HNSW config, optimizers config, etc. That makes it consistent with the raw `QdrantClient`.
2. Extended options while searching, including HNSW options, exact search, score threshold filtering, and read consistency in distributed mode.

After this PR is merged, #4858 might also be closed.

## Who can review?

VectorStores / Retrievers / Memory

@dev2049 @hwchase17

This PR adds the possibility of specifying the endpoint URL to AWS in

the DynamoDBChatMessageHistory, so that it is possible to target not

only the AWS cloud services, but also a local installation.

Specifying the endpoint URL, which is normally not done when addressing

the cloud services, is very helpful when targeting a local instance

(like [Localstack](https://localstack.cloud/)) when running local tests.
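For context, boto3 accepts an `endpoint_url` keyword when creating a resource, which is what makes a local target possible. The sketch below shows the general pattern. The helper names are hypothetical, and how `DynamoDBChatMessageHistory` wires the value through internally is not shown in this description.

```python
from typing import Any, Dict, Optional


def dynamodb_resource_kwargs(endpoint_url: Optional[str] = None) -> Dict[str, Any]:
    """Build keyword arguments for boto3.resource('dynamodb', ...)."""
    kwargs: Dict[str, Any] = {}
    if endpoint_url:
        # Only override the endpoint when targeting a non-AWS host
        kwargs["endpoint_url"] = endpoint_url
    return kwargs


def get_table(table_name: str, endpoint_url: Optional[str] = None):
    """Return a DynamoDB Table handle for AWS or for a local endpoint."""
    import boto3  # imported lazily so the helper above has no dependencies

    resource = boto3.resource("dynamodb", **dynamodb_resource_kwargs(endpoint_url))
    return resource.Table(table_name)
```

With LocalStack running on its default edge port, a call such as `get_table("SessionTable", endpoint_url="http://localhost:4566")` would target the local instance. The table name here is only an example.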

Fixes #5835

#### Who can review?

Tag maintainers/contributors who might be interested: @dev2049

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

Fixes proxy error.

Since openai does not parse proxy parameters and uses openai.proxy

directly, the proxy method needs to be modified.

7610c5adfa/openai/api_requestor.py (LL90)

#### Who can review?

@hwchase17 - project lead

Models

- @hwchase17

- @agola11

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

#### Add start index to metadata in TextSplitter

- Modified the `create_documents` method to track the start position of each chunk

- The `start_index` is included in the metadata if the `add_start_index`

parameter in the class constructor is set to `True`

This enables referencing back to the original document, particularly

useful when a specific chunk is retrieved.
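One way to compute such offsets is to search for each chunk starting just past the previous match. The sketch below is a standalone illustration with plain dictionaries standing in for documents. `chunks_with_start_index` is a hypothetical name, and the approach assumes chunks appear in the text in order.

```python
from typing import Dict, List


def chunks_with_start_index(text: str, chunks: List[str]) -> List[Dict[str, object]]:
    """Attach the character offset of each chunk within the original text."""
    documents = []
    index = -1
    for chunk in chunks:
        # Search from just after the previous match so repeated chunks
        # resolve to successive positions instead of the first occurrence.
        index = text.find(chunk, index + 1)
        documents.append({"page_content": chunk, "metadata": {"start_index": index}})
    return documents
```

A chunk that cannot be found (for example because the splitter stripped whitespace) gets `start_index` of `-1`, the value `str.find` returns on failure.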

#### Who can review?

Tag maintainers/contributors who might be interested:

@eyurtsev @agola11

This PR adds a Baseten integration. I've done my best to follow the

contributor's guidelines and add docs, an example notebook, and an

integration test modeled after similar integrations' test.

Please let me know if there is anything I can do to improve the PR. When

it is merged, please tag https://twitter.com/basetenco and

https://twitter.com/philip_kiely as contributors (the note on the PR

template said to include Twitter accounts)

+ this private attribute is referenced as `arxiv_search` in internal

usage and is set when verifying the environment

twitter: @spazm

#### Who can review?

Any of @hwchase17, @leo-gan, or @bongsang might be interested in

reviewing.

+ Mismatch between `arxiv_client` attribute vs `arxiv_search` in

validation and usage is present in the initial commit by @hwchase17.

+ @leo-gan has made most of the edits.

+ @bongsang implemented pdf download.

---------

Co-authored-by: rlm <pexpresss31@gmail.com>

Fixes the documentation page opening both search and Mendable when Ctrl+K is pressed.

I have changed the shortcut for Mendable to Ctrl+J.

#### Who can review?

@hwchase17

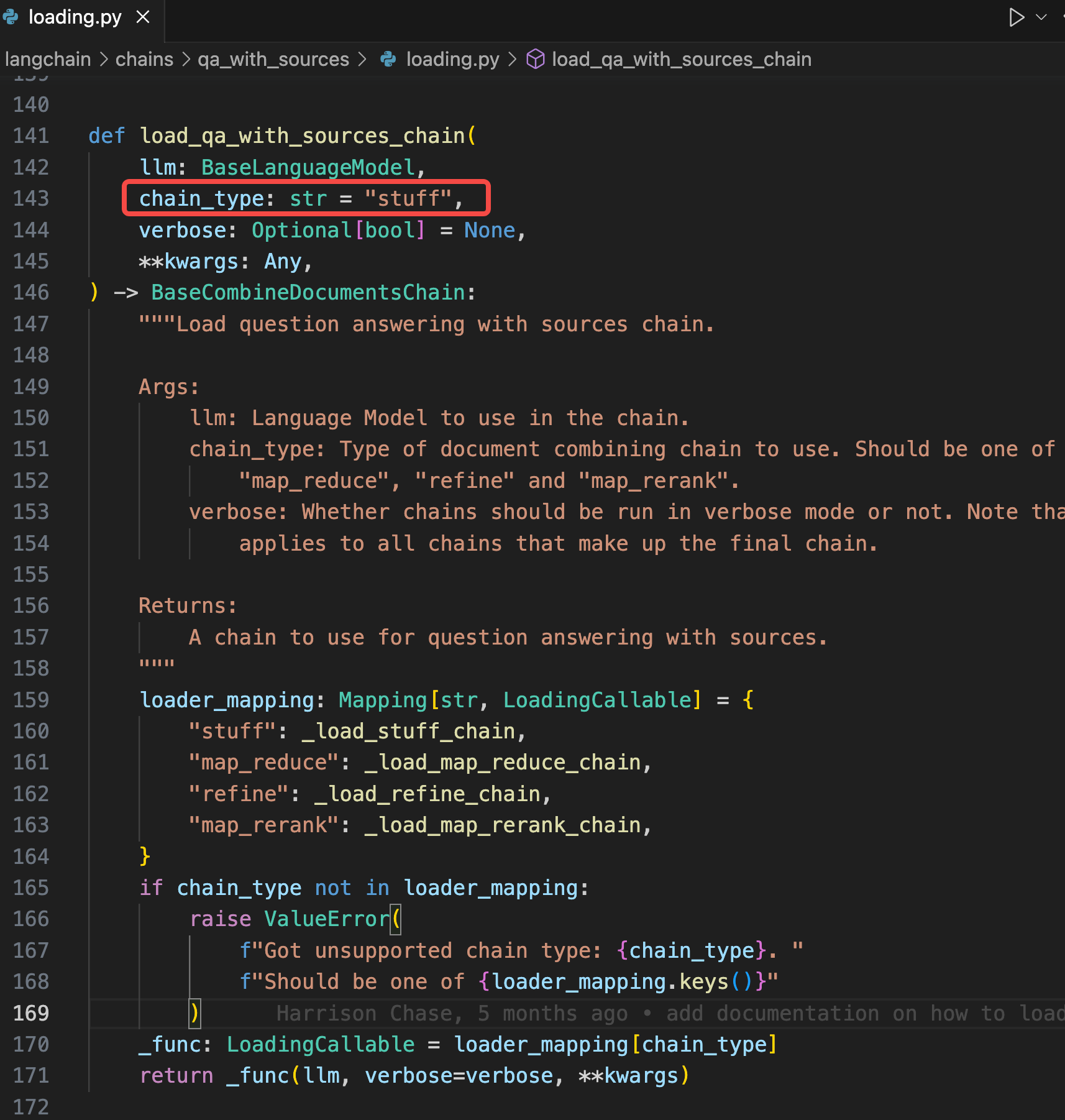

The `load_qa_with_sources_chain` method already supports four chain types, including `map_rerank`. This updates the documentation to prevent any misunderstanding 😀.

No issue is fixed; this only updates the documentation.

#### Who can review?

@hwchase17

Fixes #3983

Mimicking what we do for saving and loading the VectorDBQA chain, I added the logic for the RetrievalQA chain.

Also added a unit test. I did not find how we test other chains for

their saving and loading functionality, so I just added a file with one

test case. Let me know if there are recommended ways to test it.

#### Who can review?

Tag maintainers/contributors who might be interested:

@dev2049

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

- Added the `SingleStoreDB` vector store, a wrapper over the SingleStore DB database that can be used as vector storage and has efficient similarity search.
- Added integration tests for the vector store.
- Added a Jupyter notebook with an example.

@dev2049

---------

Co-authored-by: Volodymyr Tkachuk <vtkachuk-ua@singlestore.com>

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

# Allow callbacks to monitor ConversationalRetrievalChain

I ran into an issue where load_qa_chain was not passing the callbacks

down to the child LLM chains, and so made sure that callbacks are

propagated. There are probably more improvements to do here but this

seemed like a good place to stop.

Note that I saw a lot of references to callbacks_manager, which seems to

be deprecated. I left that code alone for now.

## Who can review?

Community members can review the PR once tests pass. Tag

maintainers/contributors who might be interested:

@agola11

Added two arguments to the `ElasticKnnSearch` class that were not properly exposed.

`knn_search` added:

- `vector_query_field: Optional[str] = 'vector'`
  - Field name to use in the kNN search if not the default `'vector'`

`knn_hybrid_search` added:

- `vector_query_field: Optional[str] = 'vector'`
  - Field name to use in the kNN search if not the default `'vector'`
- `query_field: Optional[str] = 'text'`
  - Field name to use in search if not the default `'text'`
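To show where these field names end up, here is a sketch that builds the two parts of an Elasticsearch request body as plain dictionaries. The `knn` keys (`field`, `query_vector`, `k`, `num_candidates`) and the `match` query follow the Elasticsearch search API. The builder functions and the defaults for `k` and `num_candidates` are hypothetical and are not taken from `ElasticKnnSearch`.

```python
from typing import Any, Dict, List


def build_knn_query(
    query_vector: List[float],
    k: int = 10,
    num_candidates: int = 100,
    vector_query_field: str = "vector",
) -> Dict[str, Any]:
    """Build the `knn` section of an Elasticsearch search request."""
    return {
        "field": vector_query_field,  # which dense_vector field to search
        "query_vector": query_vector,
        "k": k,
        "num_candidates": num_candidates,
    }


def build_text_query(query: str, query_field: str = "text") -> Dict[str, Any]:
    """Build the `match` query used for the lexical half of a hybrid search."""
    return {"match": {query_field: {"query": query}}}
```

An index whose embeddings live in a field named, say, `embedding` would pass `vector_query_field="embedding"` instead of relying on the default.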

Fixes https://github.com/hwchase17/langchain/issues/5633

cc: @dev2049 @hwchase17

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

Simply fixing a small typo in the memory page.

Also removed an extra code block at the end of the file.

Along the way, the current outputs seem to have changed in a few places

so left that for posterity, and updated the number of runs which seems

harmless, though I can clean that up if preferred.

Implementation of `similarity_search_with_relevance_scores` for the Qdrant vector store.

As implemented, the method is also compatible with other capabilities such as filtering.

Integration tests updated.

#### Who can review?

Tag maintainers/contributors who might be interested:

VectorStores / Retrievers / Memory

- @dev2049

This PR adds documentation for Shale Protocol's integration with

LangChain.

[Shale Protocol](https://shaleprotocol.com) provides forever-free

production-ready inference APIs to the open-source community. We have

global data centers and plan to support all major open LLMs (estimated

~1,000 by 2025).

The team consists of software and ML engineers, AI researchers, designers, and operators across North America and Asia. Combined, the team has 50+ years of experience in machine learning, cloud infrastructure, software engineering and product development. Team members have worked at places like Google and Microsoft.

#### Who can review?

Tag maintainers/contributors who might be interested:

- @hwchase17

- @agola11

---------

Co-authored-by: Karen Sheng <46656667+karensheng@users.noreply.github.com>

## Changes

- Added the `stop` param to the `_VertexAICommon` class so it can be set

at llm initialization

## Example Usage

```python
VertexAI(
    # ...
    temperature=0.15,
    max_output_tokens=128,
    top_p=1,
    top_k=40,
    stop=["\n```"],
)
```
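Conceptually, a stop sequence cuts the completion at the first place any of the given strings appears. The sketch below illustrates that behavior on a plain string. `enforce_stop` is a hypothetical helper, and whether the truncation happens in the service or on the client side is not stated in this description.

```python
from typing import List


def enforce_stop(text: str, stop: List[str]) -> str:
    """Cut the text at the earliest occurrence of any stop sequence."""
    cut = len(text)
    for sequence in stop:
        if not sequence:
            continue  # an empty stop string would otherwise match at position 0
        position = text.find(sequence)
        if position != -1:
            cut = min(cut, position)
    return text[:cut]
```

With the stop value from the example above, a completion that closes a Markdown code fence would be cut just before the closing fence.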

## Possible Reviewers

- @hwchase17

- @agola11

Add some logging into the powerbi tool so that you can see the queries

being sent to PBI and attempts to correct them.

#### Who can review?

Tag maintainers/contributors who might be interested: @vowelparrot

### Summary

Adds an `UnstructuredCSVLoader` for loading CSVs. One advantage of using

`UnstructuredCSVLoader` relative to the standard `CSVLoader` is that if

you use `UnstructuredCSVLoader` in `"elements"` mode, an HTML

representation of the table will be available in the metadata.

#### Who can review?

@hwchase17

@eyurtsev