
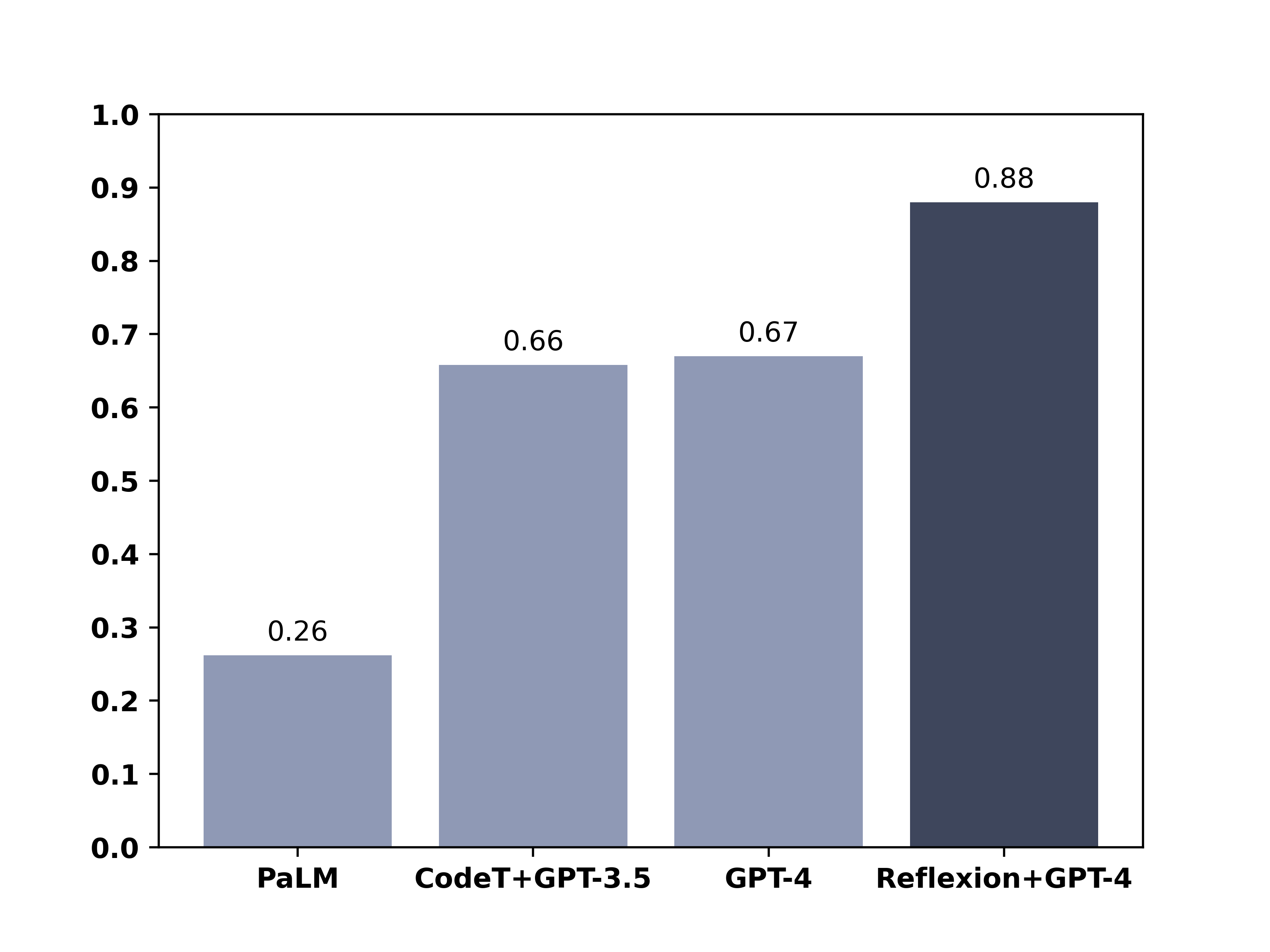

Mastering HumanEval with Reflexion

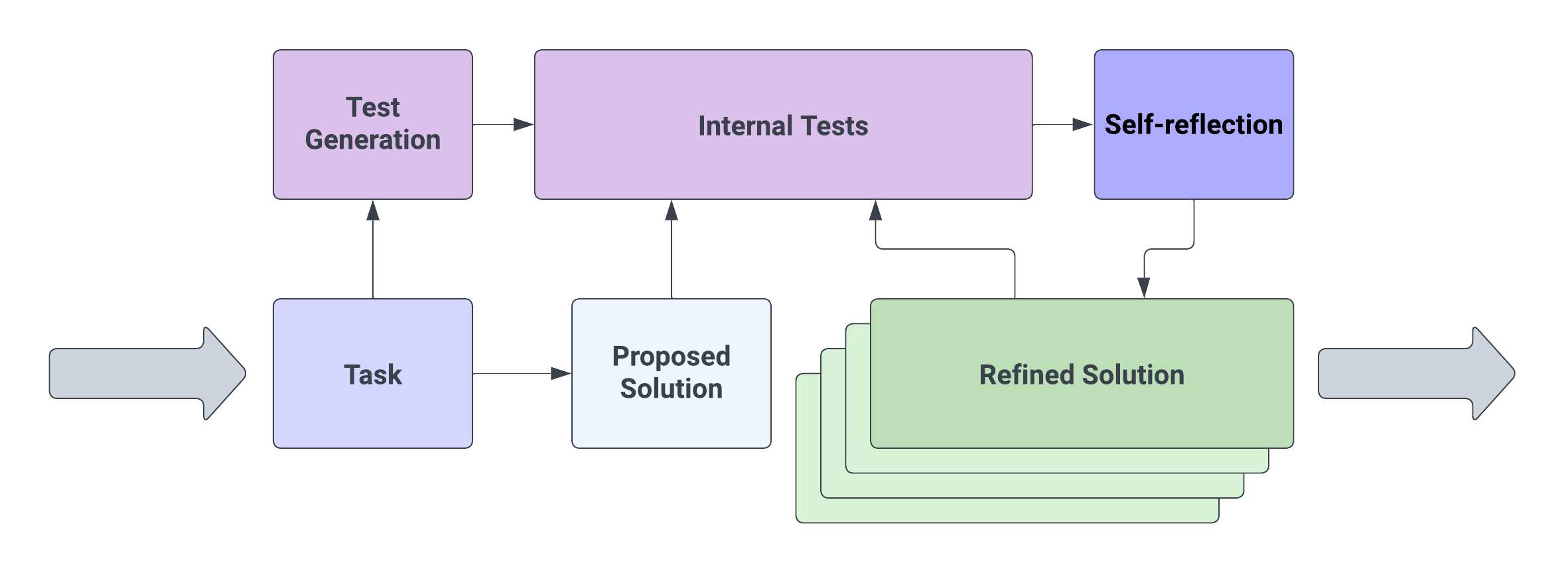

This is a spin-off project inspired by the paper Reflexion: an autonomous agent with dynamic memory and self-reflection (Noah Shinn, Beck Labash, Ashwin Gopinath; preprint, 2023).

Read more about this project in this post.

Check out an interesting type-inference implementation here: OpenTau

Check out the code for the original paper here

If you have any questions, please contact noahshinn024@gmail.com

Cloning The Repository

The repository contains git submodules. To clone the repo with the submodules, run:

git clone --recurse-submodules

Note

Because these experiments rely on GPT-4, rerunning them may not be feasible for individual developers, given limited GPT-4 access and significant API charges. In response to recent requests, both trials have been rerun once more. The results are dumped in ./root, along with a script here that validates the solutions against the unit tests provided by HumanEval.

To validate your own log files or the provided ones, run:

python ./validate_py_results.py <path to jsonlines file>
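For readers who want a rough picture of what such a validation involves, here is a minimal sketch that checks each record of a jsonlines log against its HumanEval unit tests. It assumes every line is a JSON object with a `solution` field (the full generated function), a `test` field (HumanEval's `check(candidate)` harness), and an `entry_point` field. These field names are assumptions and may not match the real log format or the logic of `validate_py_results.py`. Each record runs in a fresh Python process with a timeout.

```python
import json
import subprocess
import sys
import tempfile
from pathlib import Path


def build_program(record: dict) -> str:
    """Concatenate a generated solution with HumanEval's check() harness.

    The field names "solution", "test" and "entry_point" are assumptions about the log format.
    """
    return (
        record["solution"]
        + "\n\n"
        + record["test"]
        + "\n\n"
        + f"check({record['entry_point']})\n"
    )


def passes(record: dict, timeout: float = 10.0) -> bool:
    """Run one solution plus its tests in a fresh interpreter; exit code 0 means pass."""
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "candidate.py"
        script.write_text(build_program(record), encoding="utf-8")
        try:
            result = subprocess.run(
                [sys.executable, str(script)],
                cwd=tmp,
                capture_output=True,
                timeout=timeout,
            )
        except subprocess.TimeoutExpired:
            return False  # treat hangs as failures
    return result.returncode == 0


def validate_jsonl(path: str) -> tuple[int, int]:
    """Return (number of passing records, total records) for a jsonlines log file."""
    passed = total = 0
    with open(path, encoding="utf-8") as f:
        for line in f:
            if not line.strip():
                continue  # skip blank lines
            total += 1
            passed += int(passes(json.loads(line)))
    return passed, total


if __name__ == "__main__" and len(sys.argv) > 1:
    ok, n = validate_jsonl(sys.argv[1])
    print(f"{ok}/{n} solutions passed ({ok / n:.2%})" if n else "no records found")
```

Running each record in its own process keeps a crashing or hanging solution from taking down the whole validation run, at the cost of interpreter start-up time per record.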

Warning

Please do not run the Reflexion agent in an insecure environment, as the generated code is executed without being validated first.
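If you do experiment with the agent, one partial mitigation is to execute each generated program in a separate process with CPU-time, memory, and wall-clock limits. The sketch below is POSIX-only (it relies on the standard `resource` module) and is an illustration, not a sandbox: the child process can still read and write files and open network connections, so a container or VM remains the safer choice. The function `run_limited` and its default limits are hypothetical, not part of this repository.

```python
import resource
import subprocess
import sys


def _limit(kind: int, value: int) -> None:
    """Set soft and hard limits for `kind`, never above the current hard limit."""
    _, hard = resource.getrlimit(kind)
    if hard != resource.RLIM_INFINITY:
        value = min(value, hard)
    resource.setrlimit(kind, (value, value))


def run_limited(
    code: str,
    cpu_seconds: int = 5,
    memory_bytes: int = 1024 * 1024 * 1024,
    wall_timeout: float = 10.0,
) -> subprocess.CompletedProcess:
    """Run untrusted `code` in a separate, resource-limited Python process (POSIX only).

    This is NOT a sandbox: the child can still access the filesystem and network.
    Raises subprocess.TimeoutExpired if `wall_timeout` seconds elapse.
    """
    def set_limits() -> None:
        # Runs in the child process between fork and exec.
        _limit(resource.RLIMIT_CPU, cpu_seconds)   # CPU seconds; the kernel kills the child when exceeded
        _limit(resource.RLIMIT_AS, memory_bytes)   # virtual address space in bytes

    return subprocess.run(
        # -I: isolated mode, ignores PYTHON* environment variables and the user site directory
        [sys.executable, "-I", "-c", code],
        capture_output=True,
        text=True,
        timeout=wall_timeout,
        preexec_fn=set_limits,
    )
```

A negative `returncode` means the child was terminated by a signal (for example, after exceeding its CPU limit), while a hang that burns no CPU is caught by the wall-clock timeout instead.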

Cite

Note: This is a spin-off implementation that relaxes the internal success criteria proposed in the original paper.

```bibtex
@article{shinn2023reflexion,
  title={Reflexion: an autonomous agent with dynamic memory and self-reflection},
  author={Shinn, Noah and Labash, Beck and Gopinath, Ashwin},
  journal={arXiv preprint arXiv:2303.11366},
  year={2023}
}
```
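For orientation, the loop below is a minimal sketch of a Reflexion-style coding agent in the spirit of the paper: generate an implementation, evaluate it against internal (self-generated) unit tests, and on failure produce a verbal self-reflection that is kept in memory and fed into the next attempt. The callables `generate`, `run_tests`, and `reflect` are hypothetical stand-ins for LLM calls and a test runner. The `is_success` predicate marks where an internal success criterion plugs in; its default (every internal test passes) is an assumption and is not necessarily the relaxed criterion this repository uses.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class InternalReport:
    passed: int
    total: int
    feedback: str  # e.g. failing assertions and error messages


def all_pass(report: InternalReport) -> bool:
    """Assumed default internal success criterion: every internal test passes."""
    return report.passed == report.total


@dataclass
class ReflexionResult:
    solution: str
    attempts: int
    reflections: list[str] = field(default_factory=list)


def reflexion_loop(
    task: str,
    generate: Callable[[str, list[str]], str],            # (task, reflections) -> code
    run_tests: Callable[[str], InternalReport],            # code -> internal test report
    reflect: Callable[[str, str, InternalReport], str],    # (task, code, report) -> reflection
    max_attempts: int = 5,
    is_success: Callable[[InternalReport], bool] = all_pass,
) -> ReflexionResult:
    reflections: list[str] = []  # episodic memory of verbal self-reflections
    solution = ""
    for attempt in range(1, max_attempts + 1):
        solution = generate(task, reflections)
        report = run_tests(solution)
        if is_success(report):
            return ReflexionResult(solution, attempt, reflections)
        reflections.append(reflect(task, solution, report))
    # Out of attempts: return the last candidate for the final (hidden-test) evaluation.
    return ReflexionResult(solution, max_attempts, reflections)
```

Keeping the success check as a parameter makes it easy to compare a strict criterion with a looser one without touching the rest of the loop.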