mirror of

https://github.com/openai/openai-cookbook

synced 2024-11-17 15:29:46 +00:00

1356 lines

49 KiB

Plaintext


{

|

|

"cells": [

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "46589cdf-1ab6-4028-b07c-08b75acd98e5",

|

|

"metadata": {},

|

|

"source": [

|

|

"# Philosophy with Vector Embeddings, OpenAI and Cassandra / Astra DB\n",

|

|

"\n",

|

|

"### CQL Version"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "b3496d07-f473-4008-9133-1a54b818c8d3",

|

|

"metadata": {},

|

|

"source": [

|

|

"In this quickstart you will learn how to build a \"philosophy quote finder & generator\" using OpenAI's vector embeddings and DataStax Astra DB (_or a vector-capable Apache Cassandra® cluster, if you prefer_) as the vector store for data persistence.\n",

|

|

"\n",

|

|

"The basic workflow of this notebook is outlined below. You will evaluate and store the vector embeddings for a number of quotes by famous philosophers, use them to build a powerful search engine and, after that, even a generator of new quotes!\n",

|

|

"\n",

|

|

"The notebook exemplifies some of the standard usage patterns of vector search -- while showing how easy it is to get started with the [Vector capabilities of Astra DB](https://docs.datastax.com/en/astra-serverless/docs/vector-search/overview.html).\n",

|

|

"\n",

|

|

"For a background on using vector search and text embeddings to build a question-answering system, please check out this excellent hands-on notebook: [Question answering using embeddings](https://github.com/openai/openai-cookbook/blob/main/examples/Question_answering_using_embeddings.ipynb).\n",

|

|

"\n",

|

|

"#### _Choose-your-framework_\n",

|

|

"\n",

|

|

"Please note that this notebook uses the [Cassandra drivers](https://docs.datastax.com/en/developer/python-driver/latest/) and runs CQL (Cassandra Query Language) statements directly, but we cover other choices of technology to accomplish the same task. Check out this folder's [README](https://github.com/openai/openai-cookbook/tree/main/examples/vector_databases/cassandra_astradb) for other options. This notebook can run either as a Colab notebook or as a regular Jupyter notebook.\n",

|

|

"\n",

|

|

"Table of contents:\n",

|

|

"- Setup\n",

|

|

"- Get DB connection\n",

|

|

"- Connect to OpenAI\n",

|

|

"- Load quotes into the Vector Store\n",

|

|

"- Use case 1: **quote search engine**\n",

|

|

"- Use case 2: **quote generator**\n",

|

|

"- (Optional) exploit partitioning in the Vector Store"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "cddf17cc-eef4-4021-b72a-4d3832a9b4a7",

|

|

"metadata": {},

|

|

"source": [

|

|

"### How it works\n",

|

|

"\n",

|

|

"**Indexing**\n",

|

|

"\n",

|

|

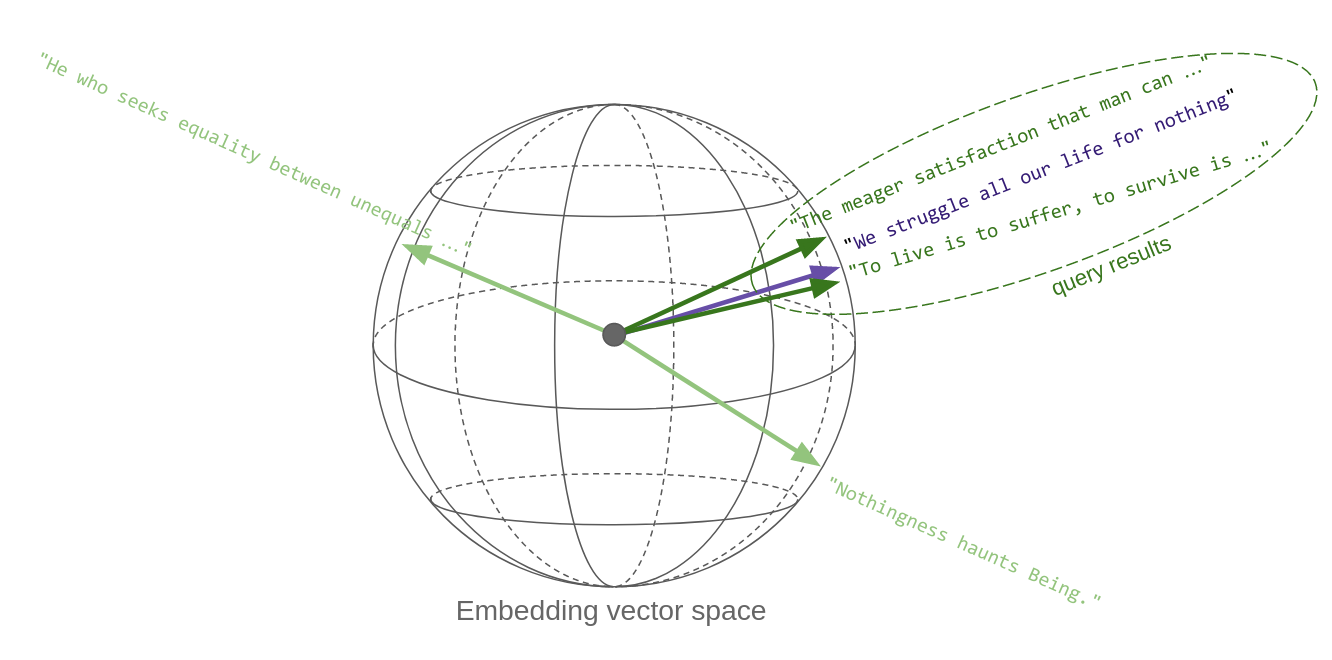

"Each quote is made into an embedding vector with OpenAI's `Embedding`. These are saved in the Vector Store for later use in searching. Some metadata, including the author's name and a few other pre-computed tags, are stored alongside, to allow for search customization.\n",

|

|

"\n",

|

|

"\n",

|

|

"\n",

|

|

"**Search**\n",

|

|

"\n",

|

|

"To find a quote similar to the provided search quote, the latter is made into an embedding vector on the fly, and this vector is used to query the store for similar vectors ... i.e. similar quotes that were previously indexed. The search can optionally be constrained by additional metadata (\"find me quotes by Spinoza similar to this one ...\").\n",

|

|

"\n",

|

|

"\n",

|

|

"\n",

|

|

"The key point here is that \"quotes similar in content\" translates, in vector space, to vectors that are metrically close to each other: thus, vector similarity search effectively implements semantic similarity. _This is the key reason vector embeddings are so powerful._\n",

|

|

"\n",

|

|

"The sketch below tries to convey this idea. Each quote, once it's made into a vector, is a point in space. Well, in this case it's on a sphere, since OpenAI's embedding vectors, as most others, are normalized to _unit length_. Oh, and the sphere is actually not three-dimensional, rather 1536-dimensional!\n",

|

|

"\n",

|

|

"So, in essence, a similarity search in vector space returns the vectors that are closest to the query vector:\n",

|

|

"\n",

|

|

"\n",

|

|

"\n",

|

|

"**Generation**\n",

|

|

"\n",

|

|

"Given a suggestion (a topic or a tentative quote), the search step is performed, and the first returned results (quotes) are fed into an LLM prompt which asks the generative model to invent a new text along the lines of the passed examples _and_ the initial suggestion.\n",

|

|

"\n",

|

|

""

|

|

]

|

|

},
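To make the Search step concrete, here is a minimal brute-force sketch of what "closest vectors, optionally filtered by metadata" means. It is an illustration only: the vector store uses an approximate index instead of a full scan, and the names `normalize`, `dot`, `brute_force_search` and the three-dimensional toy vectors are assumptions made up for this example, not part of the notebook.

```python
import math


def normalize(vector):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def brute_force_search(query_vector, rows, n, author=None):
    """Return the n rows most similar to the query, optionally restricted to one author."""
    candidates = [row for row in rows if author is None or row["author"] == author]
    ranked = sorted(candidates, key=lambda row: dot(query_vector, row["vector"]), reverse=True)
    return ranked[:n]


# Toy 3-dimensional "embeddings" (hypothetical values, not real model output).
toy_rows = [
    {"body": "quote A", "author": "plato", "vector": normalize([1.0, 0.1, 0.0])},
    {"body": "quote B", "author": "kant", "vector": normalize([0.0, 1.0, 0.2])},
    {"body": "quote C", "author": "plato", "vector": normalize([0.7, 0.7, 0.0])},
]
toy_query = normalize([0.9, 0.2, 0.0])

top_matches = brute_force_search(toy_query, toy_rows, 2)
kant_matches = brute_force_search(toy_query, toy_rows, 2, author="kant")
```

A full scan like this costs one dot product per stored row, which is why a real store relies on an index to answer the same question approximately but much faster.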

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "10493f44-565d-4f23-8bfd-1a7335392c2b",

|

|

"metadata": {},

|

|

"source": [

|

|

"## Setup"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "44a14f95-4683-4d0c-a251-0df7b43ca975",

|

|

"metadata": {},

|

|

"source": [

|

|

"First install some required packages:"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 1,

|

|

"id": "39afdb74-56e4-44ff-9c72-ab2669780113",

|

|

"metadata": {

|

|

"scrolled": true

|

|

},

|

|

"outputs": [],

|

|

"source": [

|

|

"!pip install cassandra-driver \"openai<1.0\""

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "9cb99e33-5cb7-416f-8dca-da18e0cb108d",

|

|

"metadata": {},

|

|

"source": [

|

|

"## Get DB connection"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "65a8edc1-4633-491b-9ed3-11163ec24e46",

|

|

"metadata": {},

|

|

"source": [

|

|

"A couple of secrets are required to create a `Session` object (a connection to your Astra DB instance).\n",

|

|

"\n",

|

|

"_(Note: some steps differ slightly between Google Colab and local Jupyter, which is why the notebook detects the runtime type.)_"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 2,

|

|

"id": "a7429ed4-b3fe-44b0-ad00-60883df32070",

|

|

"metadata": {},

|

|

"outputs": [],

|

|

"source": [

|

|

"from cassandra.cluster import Cluster\n",

|

|

"from cassandra.auth import PlainTextAuthProvider"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 3,

|

|

"id": "e4f2eec1-b784-4cea-9006-03cfe7b31e25",

|

|

"metadata": {},

|

|

"outputs": [],

|

|

"source": [

|

|

"import os\n",

|

|

"from getpass import getpass\n",

|

|

"\n",

|

|

"try:\n",
"    from google.colab import files\n",
"    IS_COLAB = True\n",
"except ModuleNotFoundError:\n",
"    IS_COLAB = False"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 4,

|

|

"id": "7615e522-574f-427e-9f7f-87fc721207a4",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"name": "stdin",

|

|

"output_type": "stream",

|

|

"text": [

|

|

"Please provide the full path to your Secure Connect Bundle zipfile: /path/to/secure-connect-DATABASE.zip\n",

|

|

"Please provide your Database Token ('AstraCS:...' string): ········\n",

|

|

"Please provide the Keyspace name for your Database: my_keyspace\n"

|

|

]

|

|

}

|

|

],

|

|

"source": [

|

|

"# Your database's Secure Connect Bundle zip file is needed:\n",

|

|

"if IS_COLAB:\n",
"    print('Please upload your Secure Connect Bundle zipfile: ')\n",
"    uploaded = files.upload()\n",
"    if uploaded:\n",
"        astraBundleFileTitle = list(uploaded.keys())[0]\n",
"        ASTRA_DB_SECURE_BUNDLE_PATH = os.path.join(os.getcwd(), astraBundleFileTitle)\n",
"    else:\n",
"        raise ValueError(\n",
"            'Cannot proceed without Secure Connect Bundle. Please re-run the cell.'\n",
"        )\n",
"else:\n",
"    # you are running a local-jupyter notebook:\n",
"    ASTRA_DB_SECURE_BUNDLE_PATH = input(\"Please provide the full path to your Secure Connect Bundle zipfile: \")\n",

|

|

"\n",

|

|

"ASTRA_DB_APPLICATION_TOKEN = getpass(\"Please provide your Database Token ('AstraCS:...' string): \")\n",

|

|

"ASTRA_DB_KEYSPACE = input(\"Please provide the Keyspace name for your Database: \")"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "f8c4e5ec-2ab2-4d41-b3ec-c946469fed8b",

|

|

"metadata": {},

|

|

"source": [

|

|

"### Creation of the DB connection\n",

|

|

"\n",

|

|

"This is how you create a connection to Astra DB:\n",

|

|

"\n",

|

|

"_(Incidentally, you could also use any Cassandra cluster (as long as it provides Vector capabilities), just by [changing the parameters](https://docs.datastax.com/en/developer/python-driver/latest/getting_started/#connecting-to-cassandra) to the following `Cluster` instantiation.)_"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 6,

|

|

"id": "949ab020-90c8-499b-a139-f69f07af50ed",

|

|

"metadata": {},

|

|

"outputs": [],

|

|

"source": [

|

|

"# Don't mind the \"Closing connection\" error after \"downgrading protocol...\" messages,\n",

|

|

"# it is really just a warning: the connection will work smoothly.\n",

|

|

"cluster = Cluster(\n",

|

|

" cloud={\n",

|

|

" \"secure_connect_bundle\": ASTRA_DB_SECURE_BUNDLE_PATH,\n",

|

|

" },\n",

|

|

" auth_provider=PlainTextAuthProvider(\n",

|

|

" \"token\",\n",

|

|

" ASTRA_DB_APPLICATION_TOKEN,\n",

|

|

" ),\n",

|

|

")\n",

|

|

"\n",

|

|

"session = cluster.connect()\n",

|

|

"keyspace = ASTRA_DB_KEYSPACE"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "60829851-bd48-4461-9243-974f76304933",

|

|

"metadata": {},

|

|

"source": [

|

|

"### Creation of the Vector table in CQL\n",

|

|

"\n",

|

|

"You need a table which supports vectors and is equipped with metadata. Call it \"philosophers_cql\".\n",

|

|

"\n",

|

|

"Each row will store: a quote, its vector embedding, the quote author and a set of \"tags\". You also need a primary key to ensure uniqueness of rows.\n",

|

|

"\n",

|

|

"The following is the full CQL command that creates the table (check out [this page](https://docs.datastax.com/en/dse/6.7/cql/cql/cqlQuickReference.html) for more on the CQL syntax of this and the following statements):"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 7,

|

|

"id": "8db837dc-cd49-41e2-8b5d-edb17ccc470e",

|

|

"metadata": {},

|

|

"outputs": [],

|

|

"source": [

|

|

"create_table_statement = f\"\"\"CREATE TABLE IF NOT EXISTS {keyspace}.philosophers_cql (\n",

|

|

" quote_id UUID PRIMARY KEY,\n",

|

|

" body TEXT,\n",

|

|

" embedding_vector VECTOR<FLOAT, 1536>,\n",

|

|

" author TEXT,\n",

|

|

" tags SET<TEXT>\n",

|

|

");\"\"\""

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "fb1beab1-bbbe-4714-b817-c3ee3db34d91",

|

|

"metadata": {},

|

|

"source": [

|

|

"Pass this statement to your database Session to execute it:"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 8,

|

|

"id": "e9a83507-1ebc-420e-8845-bef55f2b7c64",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"data": {

|

|

"text/plain": [

|

|

"<cassandra.cluster.ResultSet at 0x7f04a77fd1e0>"

|

|

]

|

|

},

|

|

"execution_count": 8,

|

|

"metadata": {},

|

|

"output_type": "execute_result"

|

|

}

|

|

],

|

|

"source": [

|

|

"session.execute(create_table_statement)"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "2c1be40d-bb6f-46d1-b39a-ac51ce11a466",

|

|

"metadata": {},

|

|

"source": [

|

|

"#### Add a vector index for ANN search"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "1761df5b-3d92-47e5-889b-8626d3c80b0a",

|

|

"metadata": {},

|

|

"source": [

|

|

"In order to run ANN (approximate-nearest-neighbor) searches on the vectors in the table, you need to create a specific index on the `embedding_vector` column.\n",

|

|

"\n",

|

|

"_When creating the index, you can optionally choose the \"similarity function\" used to compare vectors: since for unit-length vectors (such as those from OpenAI) the \"cosine similarity\" is the same as the \"dot product\", you'll use the latter, which is computationally less expensive._\n",

|

|

"\n",

|

|

"Run this CQL statement:"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 9,

|

|

"id": "9dd61e12-a7a3-4c99-9ba3-f8d8641ff32a",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"data": {

|

|

"text/plain": [

|

|

"<cassandra.cluster.ResultSet at 0x7f04a77fffd0>"

|

|

]

|

|

},

|

|

"execution_count": 9,

|

|

"metadata": {},

|

|

"output_type": "execute_result"

|

|

}

|

|

],

|

|

"source": [

|

|

"create_vector_index_statement = f\"\"\"CREATE CUSTOM INDEX IF NOT EXISTS idx_embedding_vector\n",

|

|

" ON {keyspace}.philosophers_cql (embedding_vector)\n",

|

|

" USING 'org.apache.cassandra.index.sai.StorageAttachedIndex'\n",

|

|

" WITH OPTIONS = {{'similarity_function' : 'dot_product'}};\n",

|

|

"\"\"\"\n",

|

|

"# Note: the double '{{' and '}}' are just the F-string escape sequence for '{' and '}'\n",

|

|

"\n",

|

|

"session.execute(create_vector_index_statement)"

|

|

]

|

|

},
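The remark about unit-length vectors can be checked numerically. The sketch below uses plain Python and two made-up two-dimensional vectors; the helper names `dot_product`, `cosine_similarity` and `to_unit_length` are assumptions for this illustration and do not appear elsewhere in the notebook.

```python
import math


def dot_product(a, b):
    """Sum of element-wise products."""
    return sum(x * y for x, y in zip(a, b))


def cosine_similarity(a, b):
    """Dot product divided by the product of the two norms."""
    norm_a = math.sqrt(dot_product(a, a))
    norm_b = math.sqrt(dot_product(b, b))
    return dot_product(a, b) / (norm_a * norm_b)


def to_unit_length(vector):
    """Rescale a vector so that its norm is 1."""
    norm = math.sqrt(dot_product(vector, vector))
    return [x / norm for x in vector]


raw_a, raw_b = [3.0, 4.0], [1.0, 2.0]
unit_a, unit_b = to_unit_length(raw_a), to_unit_length(raw_b)

# For unit-length vectors both norms are 1, so the division changes nothing.
same_on_unit_vectors = math.isclose(
    cosine_similarity(unit_a, unit_b), dot_product(unit_a, unit_b)
)
# For vectors of arbitrary length the two measures generally differ.
same_on_raw_vectors = math.isclose(
    cosine_similarity(raw_a, raw_b), dot_product(raw_a, raw_b)
)
```

Skipping the two square roots and the division per comparison is where the saving from choosing the dot product comes from.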

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "637d9946-6ada-48ff-99f7-c91a730e841c",

|

|

"metadata": {},

|

|

"source": [

|

|

"#### Add indexes for author and tag filtering"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "0b1f7e8a-193e-45ad-90dc-2764ad8e16a1",

|

|

"metadata": {},

|

|

"source": [

|

|

"That is enough to run vector searches on the table ... but you want to be able to optionally specify an author and/or some tags to restrict the quote search. Create two other indexes to support this:"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 10,

|

|

"id": "691f1a07-cab4-42a1-baba-f17b561ddd3f",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"data": {

|

|

"text/plain": [

|

|

"<cassandra.cluster.ResultSet at 0x7f047bf455a0>"

|

|

]

|

|

},

|

|

"execution_count": 10,

|

|

"metadata": {},

|

|

"output_type": "execute_result"

|

|

}

|

|

],

|

|

"source": [

|

|

"create_author_index_statement = f\"\"\"CREATE CUSTOM INDEX IF NOT EXISTS idx_author\n",

|

|

" ON {keyspace}.philosophers_cql (author)\n",

|

|

" USING 'org.apache.cassandra.index.sai.StorageAttachedIndex';\n",

|

|

"\"\"\"\n",

|

|

"session.execute(create_author_index_statement)\n",

|

|

"\n",

|

|

"create_tags_index_statement = f\"\"\"CREATE CUSTOM INDEX IF NOT EXISTS idx_tags\n",

|

|

" ON {keyspace}.philosophers_cql (VALUES(tags))\n",

|

|

" USING 'org.apache.cassandra.index.sai.StorageAttachedIndex';\n",

|

|

"\"\"\"\n",

|

|

"session.execute(create_tags_index_statement)"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "da86f91a-88a6-4997-b0f8-9da0816f8ece",

|

|

"metadata": {},

|

|

"source": [

|

|

"## Connect to OpenAI"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "a6b664b5-fd84-492e-a7bd-4dda3863b48a",

|

|

"metadata": {},

|

|

"source": [

|

|

"### Set up your secret key"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 11,

|

|

"id": "37fe7653-dd64-4494-83e1-5702ec41725c",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"name": "stdin",

|

|

"output_type": "stream",

|

|

"text": [

|

|

"Please enter your OpenAI API Key: ········\n"

|

|

]

|

|

}

|

|

],

|

|

"source": [

|

|

"OPENAI_API_KEY = getpass(\"Please enter your OpenAI API Key: \")"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 12,

|

|

"id": "8065a42a-0ece-4453-b771-1dbef6d8a620",

|

|

"metadata": {},

|

|

"outputs": [],

|

|

"source": [

|

|

"import openai\n",

|

|

"\n",

|

|

"openai.api_key = OPENAI_API_KEY"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "847f2821-7f3f-4dcd-8e0c-49aa397e36f4",

|

|

"metadata": {},

|

|

"source": [

|

|

"### A test call for embeddings\n",

|

|

"\n",

|

|

"Quickly check how one can get the embedding vectors for a list of input texts:"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 13,

|

|

"id": "6bf89454-9a55-4202-ab6b-ea15b2048f3d",

|

|

"metadata": {},

|

|

"outputs": [],

|

|

"source": [

|

|

"embedding_model_name = \"text-embedding-ada-002\"\n",

|

|

"\n",

|

|

"result = openai.Embedding.create(\n",

|

|

" input=[\n",

|

|

" \"This is a sentence\",\n",

|

|

" \"A second sentence\"\n",

|

|

" ],\n",

|

|

" engine=embedding_model_name,\n",

|

|

")"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 14,

|

|

"id": "50a8e6f0-0aa7-4ffc-94e9-702b68566815",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"name": "stdout",

|

|

"output_type": "stream",

|

|

"text": [

|

|

"len(result.data) = 2\n",

|

|

"result.data[1].embedding = [-0.01075850147753954, 0.0013505702372640371, 0.0036223...\n",

|

|

"len(result.data[1].embedding) = 1536\n"

|

|

]

|

|

}

|

|

],

|

|

"source": [

|

|

"print(f\"len(result.data) = {len(result.data)}\")\n",

|

|

"print(f\"result.data[1].embedding = {str(result.data[1].embedding)[:55]}...\")\n",

|

|

"print(f\"len(result.data[1].embedding) = {len(result.data[1].embedding)}\")"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "d7f09c42-fff3-4aa2-922b-043739b4b06a",

|

|

"metadata": {},

|

|

"source": [

|

|

"## Load quotes into the Vector Store"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "cf0f3d58-74c2-458b-903d-3d12e61b7846",

|

|

"metadata": {},

|

|

"source": [

|

|

"Get a JSON file containing our quotes. We already prepared this collection and put it into this repo for quick loading.\n",

|

|

"\n",

|

|

"_(Note: we adapted the following from a Kaggle dataset -- which we acknowledge -- and also added a few tags to each quote.)_"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 15,

|

|

"id": "94ff33fb-4b52-4c15-ab74-4af4fe973cbf",

|

|

"metadata": {},

|

|

"outputs": [],

|

|

"source": [

|

|

"import json\n",

|

|

"import requests\n",

|

|

"\n",

|

|

"if IS_COLAB:\n",
"    # load from Web request to (github) repo\n",
"    json_url = \"https://raw.githubusercontent.com/openai/openai-cookbook/main/examples/vector_databases/cassandra_astradb/sources/philo_quotes.json\"\n",
"    quote_dict = json.loads(requests.get(json_url).text)\n",
"else:\n",
"    # load from local repo (the 'with' block closes the file once it is read)\n",
"    with open(\"./sources/philo_quotes.json\") as quotes_file:\n",
"        quote_dict = json.load(quotes_file)"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "ab6b08b1-e3db-4c7c-9d7c-2ada7c8bc71d",

|

|

"metadata": {},

|

|

"source": [

|

|

"A quick inspection of the input data structure:"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 16,

|

|

"id": "6ab84ccb-3363-4bdc-9484-0d68c25a58ff",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"name": "stdout",

|

|

"output_type": "stream",

|

|

"text": [

|

|

"Adapted from this Kaggle dataset: https://www.kaggle.com/datasets/mertbozkurt5/quotes-by-philosophers (License: CC BY-NC-SA 4.0)\n",

|

|

"\n",

|

|

"Quotes loaded: 450.\n",

|

|

"By author:\n",

|

|

" aristotle (50)\n",

|

|

" freud (50)\n",

|

|

" hegel (50)\n",

|

|

" kant (50)\n",

|

|

" nietzsche (50)\n",

|

|

" plato (50)\n",

|

|

" sartre (50)\n",

|

|

" schopenhauer (50)\n",

|

|

" spinoza (50)\n",

|

|

"\n",

|

|

"Some examples:\n",

|

|

" aristotle:\n",

|

|

" True happiness comes from gaining insight and grow ... (tags: knowledge)\n",

|

|

" The roots of education are bitter, but the fruit i ... (tags: education, knowledge)\n",

|

|

" freud:\n",

|

|

" We are what we are because we have been what we ha ... (tags: history)\n",

|

|

" From error to error one discovers the entire truth ... (tags: )\n"

|

|

]

|

|

}

|

|

],

|

|

"source": [

|

|

"print(quote_dict[\"source\"])\n",

|

|

"\n",

|

|

"total_quotes = sum(len(quotes) for quotes in quote_dict[\"quotes\"].values())\n",

|

|

"print(f\"\\nQuotes loaded: {total_quotes}.\\nBy author:\")\n",

|

|

"print(\"\\n\".join(f\" {author} ({len(quotes)})\" for author, quotes in quote_dict[\"quotes\"].items()))\n",

|

|

"\n",

|

|

"print(\"\\nSome examples:\")\n",

|

|

"for author, quotes in list(quote_dict[\"quotes\"].items())[:2]:\n",
"    print(f\" {author}:\")\n",
"    for quote in quotes[:2]:\n",
"        print(f\" {quote['body'][:50]} ... (tags: {', '.join(quote['tags'])})\")"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "15745dc8-e7c1-4781-933b-41eef6ddd657",

|

|

"metadata": {},

|

|

"source": [

|

|

"### Insert quotes into vector store\n",

|

|

"\n",

|

|

"You will compute the embeddings for the quotes and save them into the Vector Store, along with the text itself and the metadata planned for later use.\n",

|

|

"\n",

|

|

"To optimize speed and reduce the number of API calls, you'll make batched calls to the OpenAI embedding service, with one batch per author.\n",

|

|

"\n",

|

|

"The DB write is accomplished with a CQL statement. But since you'll run this particular insertion several times (albeit with different values), it's best to _prepare_ the statement and then just run it over and over.\n",

|

|

"\n",

|

|

"_(Note: for faster execution, the Cassandra drivers would let you do concurrent inserts, which we don't do here to keep the demo code straightforward.)_"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 17,

|

|

"id": "68e80e81-886b-45a4-be61-c33b8028bcfb",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"name": "stdout",

|

|

"output_type": "stream",

|

|

"text": [

|

|

"aristotle: ************************************************** Done (50 quotes inserted).\n",

|

|

"freud: ************************************************** Done (50 quotes inserted).\n",

|

|

"hegel: ************************************************** Done (50 quotes inserted).\n",

|

|

"kant: ************************************************** Done (50 quotes inserted).\n",

|

|

"nietzsche: ************************************************** Done (50 quotes inserted).\n",

|

|

"plato: ************************************************** Done (50 quotes inserted).\n",

|

|

"sartre: ************************************************** Done (50 quotes inserted).\n",

|

|

"schopenhauer: ************************************************** Done (50 quotes inserted).\n",

|

|

"spinoza: ************************************************** Done (50 quotes inserted).\n",

|

|

"Finished inserting.\n"

|

|

]

|

|

}

|

|

],

|

|

"source": [

|

|

"from uuid import uuid4\n",

|

|

"\n",

|

|

"prepared_insertion = session.prepare(\n",

|

|

" f\"INSERT INTO {keyspace}.philosophers_cql (quote_id, author, body, embedding_vector, tags) VALUES (?, ?, ?, ?, ?);\"\n",

|

|

")\n",

|

|

"\n",

|

|

"for philosopher, quotes in quote_dict[\"quotes\"].items():\n",
"    print(f\"{philosopher}: \", end=\"\")\n",
"    result = openai.Embedding.create(\n",
"        input=[quote[\"body\"] for quote in quotes],\n",
"        engine=embedding_model_name,\n",
"    )\n",
"    for quote_idx, (quote, q_data) in enumerate(zip(quotes, result.data)):\n",
"        quote_id = uuid4()  # a new random ID for each quote. In a production app you'll want to have better control...\n",
"        session.execute(\n",
"            prepared_insertion,\n",
"            (quote_id, philosopher, quote[\"body\"], q_data.embedding, set(quote[\"tags\"])),\n",
"        )\n",
"        print(\"*\", end='')\n",
"    print(f\" Done ({len(quotes)} quotes inserted).\")\n",
"print(\"Finished inserting.\")"

|

|

]

|

|

},
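One batch per author works here because each author has only 50 quotes. With a larger collection you would probably want to cap the size of each embedding request. The helper below is a minimal sketch of that idea; the name `chunked` and the batch size are hypothetical, and any real limit depends on the embedding service you call.

```python
def chunked(items, batch_size):
    """Yield consecutive slices of `items`, each at most `batch_size` long."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


# Placeholder strings standing in for quote bodies.
example_batches = list(chunked(["q1", "q2", "q3", "q4", "q5"], 2))
```

Each batch would then be passed as the `input` list of one embedding call, and the returned vectors paired back with their quotes in the same order, as the insertion loop above does with `zip`.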

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "db3ee629-b6b9-4a77-8c58-c3b93403a6a6",

|

|

"metadata": {},

|

|

"source": [

|

|

"## Use case 1: **quote search engine**"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "db3b12b3-2557-4826-af5a-16e6cd9a4531",

|

|

"metadata": {},

|

|

"source": [

|

|

"For the quote-search functionality, you first need to make the input quote into a vector, and then use it to query the store (while also passing any optional metadata filters to the search call).\n",

|

|

"\n",

|

|

"Encapsulate the search-engine functionality into a function for ease of re-use:"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 18,

|

|

"id": "d6fcf182-3ab7-4d28-9472-dce35cc38182",

|

|

"metadata": {},

|

|

"outputs": [],

|

|

"source": [

|

|

"def find_quote_and_author(query_quote, n, author=None, tags=None):\n",
"    query_vector = openai.Embedding.create(\n",
"        input=[query_quote],\n",
"        engine=embedding_model_name,\n",
"    ).data[0].embedding\n",
"    # depending on what conditions are passed, the WHERE clause in the statement may vary.\n",
"    where_clauses = []\n",
"    where_values = []\n",
"    if author:\n",
"        where_clauses += [\"author = %s\"]\n",
"        where_values += [author]\n",
"    if tags:\n",
"        for tag in tags:\n",
"            where_clauses += [\"tags CONTAINS %s\"]\n",
"            where_values += [tag]\n",
"    # The reason for these two lists above is that when running the CQL search statement the values passed\n",
"    # must match the sequence of \"%s\" placeholders in the statement.\n",
"    if where_clauses:\n",
"        search_statement = f\"\"\"SELECT body, author FROM {keyspace}.philosophers_cql\n",
"            WHERE {' AND '.join(where_clauses)}\n",
"            ORDER BY embedding_vector ANN OF %s\n",
"            LIMIT %s;\n",
"        \"\"\"\n",
"    else:\n",
"        search_statement = f\"\"\"SELECT body, author FROM {keyspace}.philosophers_cql\n",
"            ORDER BY embedding_vector ANN OF %s\n",
"            LIMIT %s;\n",
"        \"\"\"\n",
"    # For best performance, one should keep a cache of prepared statements (see the insertion code above)\n",
"    # for the various possible statements used here.\n",
"    # (We'll leave it as an exercise to the reader to avoid making this code too long.\n",
"    # Remember: to prepare a statement you use '?' instead of '%s'.)\n",
"    query_values = tuple(where_values + [query_vector] + [n])\n",
"    result_rows = session.execute(search_statement, query_values)\n",
"    return [\n",
"        (result_row.body, result_row.author)\n",
"        for result_row in result_rows\n",
"    ]"

|

|

]

|

|

},
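The comment inside the search function leaves the prepared-statement cache as an exercise. One possible shape for it is sketched below, under the assumption that the statement text depends only on whether an author is given and on how many tags are passed. The names `build_search_cql` and `PreparedStatementCache` are hypothetical; the `prepare` argument is any callable that turns CQL text into a prepared statement, which in the notebook would be `session.prepare`.

```python
def build_search_cql(keyspace, has_author, tag_count):
    """Build the ANN search statement text, using '?' bind markers for preparation."""
    where_clauses = []
    if has_author:
        where_clauses.append("author = ?")
    where_clauses.extend(["tags CONTAINS ?"] * tag_count)
    where_part = f" WHERE {' AND '.join(where_clauses)}" if where_clauses else ""
    return (
        f"SELECT body, author FROM {keyspace}.philosophers_cql"
        f"{where_part} ORDER BY embedding_vector ANN OF ? LIMIT ?;"
    )


class PreparedStatementCache:
    """Prepare each distinct statement shape once and reuse it afterwards."""

    def __init__(self, prepare, keyspace):
        self._prepare = prepare  # e.g. session.prepare (assumption: one argument, the CQL text)
        self._keyspace = keyspace
        self._cache = {}

    def get(self, has_author, tag_count):
        key = (has_author, tag_count)
        if key not in self._cache:
            cql = build_search_cql(self._keyspace, has_author, tag_count)
            self._cache[key] = self._prepare(cql)
        return self._cache[key]
```

Inside the search function you would then fetch the statement with something like `cache.get(bool(author), len(tags or []))` and execute it with the same `query_values` tuple, since the order of the bind markers matches the order of the `%s` placeholders.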

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "2539262d-100b-4e8d-864d-e9c612a73e91",

|

|

"metadata": {},

|

|

"source": [

|

|

"### Putting search to test"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "3634165c-0882-4281-bc60-ab96261a500d",

|

|

"metadata": {},

|

|

"source": [

|

|

"Passing just a quote:"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 19,

|

|

"id": "6722c2c0-3e54-4738-80ce-4d1149e95414",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"data": {

|

|

"text/plain": [

|

|

"[('Life to the great majority is only a constant struggle for mere existence, with the certainty of losing it at last.',\n",

|

|

" 'schopenhauer'),\n",

|

|

" ('We give up leisure in order that we may have leisure, just as we go to war in order that we may have peace.',\n",

|

|

" 'aristotle'),\n",

|

|

" ('Perhaps the gods are kind to us, by making life more disagreeable as we grow older. In the end death seems less intolerable than the manifold burdens we carry',\n",

|

|

" 'freud')]"

|

|

]

|

|

},

|

|

"execution_count": 19,

|

|

"metadata": {},

|

|

"output_type": "execute_result"

|

|

}

|

|

],

|

|

"source": [

|

|

"find_quote_and_author(\"We struggle all our life for nothing\", 3)"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "50828e4c-9bb5-4489-9fe9-87da5fbe1f18",

|

|

"metadata": {},

|

|

"source": [

|

|

"Search restricted to an author:"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 20,

|

|

"id": "da9c705f-5c12-42b3-a038-202f89a3c6da",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"data": {

|

|

"text/plain": [

|

|

"[('To live is to suffer, to survive is to find some meaning in the suffering.',\n",

|

|

" 'nietzsche'),\n",

|

|

" ('What makes us heroic?--Confronting simultaneously our supreme suffering and our supreme hope.',\n",

|

|

" 'nietzsche')]"

|

|

]

|

|

},

|

|

"execution_count": 20,

|

|

"metadata": {},

|

|

"output_type": "execute_result"

|

|

}

|

|

],

|

|

"source": [

|

|

"find_quote_and_author(\"We struggle all our life for nothing\", 2, author=\"nietzsche\")"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "4a3857ea-6dfe-489a-9b86-4e5e0534960f",

|

|

"metadata": {},

|

|

"source": [

|

|

"Search constrained to a tag (out of those saved earlier with the quotes):"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 21,

|

|

"id": "abcfaec9-8f42-4789-a5ed-1073fa2932c2",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"data": {

|

|

"text/plain": [

|

|

"[('Mankind will never see an end of trouble until lovers of wisdom come to hold political power, or the holders of power become lovers of wisdom',\n",

|

|

" 'plato'),\n",

|

|

" ('Everything the State says is a lie, and everything it has it has stolen.',\n",

|

|

" 'nietzsche')]"

|

|

]

|

|

},

|

|

"execution_count": 21,

|

|

"metadata": {},

|

|

"output_type": "execute_result"

|

|

}

|

|

],

|

|

"source": [

|

|

"find_quote_and_author(\"We struggle all our life for nothing\", 2, tags=[\"politics\"])"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "746fe38f-139f-44a6-a225-a63e40d3ddf5",

|

|

"metadata": {},

|

|

"source": [

|

|

"### Cutting out irrelevant results\n",

|

|

"\n",

|

|

"The vector similarity search generally returns the vectors that are closest to the query, even if that means results that might be somewhat irrelevant if there's nothing better.\n",

|

|

"\n",

|

|

"To keep this issue under control, you can get the actual \"similarity\" between the query and each result, and then set a cutoff on it, effectively discarding results that are beyond that threshold.\n",

|

|

"Tuning this threshold correctly is not an easy problem: here, we'll just show you the way.\n",

|

|

"\n",

|

|

"To get a feeling for how this works, try the following query and play with the choice of quote and threshold to compare the results:\n",

|

|

"\n",

|

|

"_Note (for the mathematically inclined): this \"similarity\" is not exactly the cosine similarity between the vectors (i.e. the scalar product divided by the product of the norms of the two vectors); rather, it is rescaled to fit the [0, 1] interval. Elsewhere (e.g. in the \"CassIO\" version of this example) you will see the actual bare cosine similarity. As a result, if you compare the two notebooks, the numerical values and adequate thresholds will be slightly different._"

|

|

]

|

|

},
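The note above says the similarity is rescaled to the [0, 1] interval but does not spell out how. A minimal sketch, assuming the rescaling is the linear map (1 + cosine) / 2, shows how a threshold on one scale would translate to the other. Both function names are hypothetical, and the formula itself is an assumption you should check against your database's documentation.

```python
def cosine_to_rescaled(cosine):
    """Map a cosine similarity in [-1, 1] onto [0, 1] (assumed linear rescaling)."""
    return (1.0 + cosine) / 2.0


def rescaled_to_cosine(similarity):
    """Inverse of the assumed rescaling: map [0, 1] back onto [-1, 1]."""
    return 2.0 * similarity - 1.0


rescaled_threshold = 0.9
equivalent_cosine_threshold = rescaled_to_cosine(rescaled_threshold)
```

Under this assumption, a cutoff of 0.9 on the rescaled value corresponds to a bare cosine similarity of about 0.8, which is why thresholds cannot be copied unchanged between the two notebook versions.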

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 24,

|

|

"id": "b9b43721-a3b0-4ac4-b730-7a6aeec52e70",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"name": "stdout",

|

|

"output_type": "stream",

|

|

"text": [

|

|

"8 quotes within the threshold:\n",

|

|

" 0. [similarity=0.927] \"The assumption that animals are without rights, and the illusion that ...\"\n",

|

|

" 1. [similarity=0.922] \"Animals are in possession of themselves; their soul is in possession o...\"\n",

|

|

" 2. [similarity=0.920] \"At his best, man is the noblest of all animals; separated from law and...\"\n",

|

|

" 3. [similarity=0.916] \"Man is the only animal that must be encouraged to live....\"\n",

|

|

" 4. [similarity=0.916] \".... we are a part of nature as a whole, whose order we follow....\"\n",

|

|

" 5. [similarity=0.912] \"Every human endeavor, however singular it seems, involves the whole hu...\"\n",

|

|

" 6. [similarity=0.910] \"Because Christian morality leaves animals out of account, they are at ...\"\n",

|

|

" 7. [similarity=0.910] \"A dog has the soul of a philosopher....\"\n"

|

|

]

|

|

}

|

|

],

|

|

"source": [

|

|

"quote = \"Animals are our equals.\"\n",

|

|

"# quote = \"Be good.\"\n",

|

|

"# quote = \"This teapot is strange.\"\n",

|

|

"\n",

|

|

"similarity_threshold = 0.9\n",

|

|

"\n",

|

|

"quote_vector = openai.Embedding.create(\n",

|

|

" input=[quote],\n",

|

|

" engine=embedding_model_name,\n",

|

|

").data[0].embedding\n",

|

|

"\n",

|

|

"# Once more: remember to prepare your statements in production for greater performance...\n",

|

|

"\n",

|

|

"search_statement = f\"\"\"SELECT body, similarity_dot_product(embedding_vector, %s) as similarity\n",

|

|

" FROM {keyspace}.philosophers_cql\n",

|

|

" ORDER BY embedding_vector ANN OF %s\n",

|

|

" LIMIT %s;\n",

|

|

"\"\"\"\n",

|

|

"query_values = (quote_vector, quote_vector, 8)\n",

|

|

"\n",

|

|

"result_rows = session.execute(search_statement, query_values)\n",

|

|

"results = [\n",

|

|

" (result_row.body, result_row.similarity)\n",

|

|

" for result_row in result_rows\n",

|

|

" if result_row.similarity >= similarity_threshold\n",

|

|

"]\n",

|

|

"\n",

|

|

"print(f\"{len(results)} quotes within the threshold:\")\n",

|

|

"for idx, (r_body, r_similarity) in enumerate(results):\n",

|

|

" print(f\" {idx}. [similarity={r_similarity:.3f}] \\\"{r_body[:70]}...\\\"\")"

|

|

]

|

|

},
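{
"cell_type": "markdown",
"id": "sketch-rescaled-similarity-note",
"metadata": {},
"source": [
"To make the note on rescaling more concrete, here is a minimal pure-Python sketch. It _assumes_ that the rescaled similarity is obtained from the dot product of unit-length vectors through the map `(1 + x) / 2`, which sends [-1, 1] onto [0, 1]; check the database documentation for the exact definition. The helper names and the toy two-dimensional vectors are made up for illustration and have nothing to do with the quote dataset.\n",
"\n",
"With these toy vectors, the same-direction candidate scores 1.0, the orthogonal one 0.5 and the opposite one 0.0, so only the first passes a 0.9 cutoff. Under the same assumption, a rescaled threshold `t` corresponds to a bare threshold of `2 * t - 1`."
]
},
{
"cell_type": "code",
"execution_count": null,
"id": "sketch-rescaled-similarity-code",
"metadata": {},
"outputs": [],
"source": [
"import math\n",
"\n",
"\n",
"def dot(u, v):\n",
"    # plain scalar product of two equal-length vectors\n",
"    return sum(a * b for a, b in zip(u, v))\n",
"\n",
"\n",
"def normalize(v):\n",
"    # scale a vector to unit length, so that dot product == cosine similarity\n",
"    norm = math.sqrt(dot(v, v))\n",
"    return [x / norm for x in v]\n",
"\n",
"\n",
"def rescaled_similarity(u, v):\n",
"    # ASSUMPTION: the [0, 1] similarity is (1 + dot product) / 2 for unit vectors\n",
"    return (1.0 + dot(u, v)) / 2.0\n",
"\n",
"\n",
"demo_query = normalize([1.0, 0.0])\n",
"demo_candidates = {\n",
"    \"same direction\": normalize([2.0, 0.0]),\n",
"    \"orthogonal\": normalize([0.0, 3.0]),\n",
"    \"opposite\": normalize([-1.0, 0.0]),\n",
"}\n",
"demo_threshold = 0.9\n",
"\n",
"demo_scores = {\n",
"    name: rescaled_similarity(demo_query, vector)\n",
"    for name, vector in demo_candidates.items()\n",
"}\n",
"demo_kept = [name for name, score in demo_scores.items() if score >= demo_threshold]\n",
"\n",
"print(demo_scores)\n",
"print(\"kept:\", demo_kept)\n",
"print(\"equivalent bare threshold:\", 2 * demo_threshold - 1)"
]
},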

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "71871251-169f-4d3f-a687-65f836a9a8fe",

|

|

"metadata": {},

|

|

"source": [

|

|

"## Use case 2: **quote generator**"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "b0a9cd63-a131-4819-bf41-c8ffa0b1e1ca",

|

|

"metadata": {},

|

|

"source": [

|

|

"For this task you need another component from OpenAI, namely an LLM to generate the quote for us (based on input obtained by querying the Vector Store).\n",

|

|

"\n",

|

|

"You also need a template for the prompt that will be filled for the generate-quote LLM completion task."

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 25,

|

|

"id": "a6dd366d-665a-45fd-917b-b6b5312b0865",

|

|

"metadata": {},

|

|

"outputs": [],

|

|

"source": [

|

|

"completion_model_name = \"gpt-3.5-turbo\"\n",

|

|

"\n",

|

|

"generation_prompt_template = \"\"\"\"Generate a single short philosophical quote on the given topic,\n",

|

|

"similar in spirit and form to the provided actual example quotes.\n",

|

|

"Do not exceed 20-30 words in your quote.\n",

|

|

"\n",

|

|

"REFERENCE TOPIC: \"{topic}\"\n",

|

|

"\n",

|

|

"ACTUAL EXAMPLES:\n",

|

|

"{examples}\n",

|

|

"\"\"\""

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "53073a9e-16de-4e49-9e97-ff31b9b250c2",

|

|

"metadata": {},

|

|

"source": [

|

|

"Like for search, this functionality is best wrapped into a handy function (which internally uses search):"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 26,

|

|

"id": "397e6ebd-b30e-413b-be63-81a62947a7b8",

|

|

"metadata": {},

|

|

"outputs": [],

|

|

"source": [

|

|

"def generate_quote(topic, n=2, author=None, tags=None):\n",

|

|

" quotes = find_quote_and_author(query_quote=topic, n=n, author=author, tags=tags)\n",

|

|

" if quotes:\n",

|

|

" prompt = generation_prompt_template.format(\n",

|

|

" topic=topic,\n",

|

|

" examples=\"\\n\".join(f\" - {quote[0]}\" for quote in quotes),\n",

|

|

" )\n",

|

|

" # a little logging:\n",

|

|

" print(\"** quotes found:\")\n",

|

|

" for q, a in quotes:\n",

|

|

" print(f\"** - {q} ({a})\")\n",

|

|

" print(\"** end of logging\")\n",

|

|

" #\n",

|

|

" response = openai.ChatCompletion.create(\n",

|

|

" model=completion_model_name,\n",

|

|

" messages=[{\"role\": \"user\", \"content\": prompt}],\n",

|

|

" temperature=0.7,\n",

|

|

" max_tokens=320,\n",

|

|

" )\n",

|

|

" return response.choices[0].message.content.replace('\"', '').strip()\n",

|

|

" else:\n",

|

|

" print(\"** no quotes found.\")\n",

|

|

" return None"

|

|

]

|

|

},
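{
"cell_type": "markdown",
"id": "sketch-filled-prompt-note",
"metadata": {},
"source": [
"To see what the LLM actually receives, the sketch below fills a local copy of the prompt template with the two Aristotle quotes that appear in the sample output further down, then prints the result. The `demo_` names are introduced here for illustration only, and nothing is sent to OpenAI or to the database."
]
},
{
"cell_type": "code",
"execution_count": null,
"id": "sketch-filled-prompt-code",
"metadata": {},
"outputs": [],
"source": [
"# local copy of the template, so that this cell runs on its own\n",
"demo_template = \"\"\"Generate a single short philosophical quote on the given topic,\n",
"similar in spirit and form to the provided actual example quotes.\n",
"Do not exceed 20-30 words in your quote.\n",
"\n",
"REFERENCE TOPIC: \"{topic}\"\n",
"\n",
"ACTUAL EXAMPLES:\n",
"{examples}\n",
"\"\"\"\n",
"\n",
"# (quote, author) pairs, in the same shape returned by the search function\n",
"demo_quotes = [\n",
"    (\"Happiness is the reward of virtue.\", \"aristotle\"),\n",
"    (\"It is better for a city to be governed by a good man than by good laws.\", \"aristotle\"),\n",
"]\n",
"\n",
"# one bullet line per example quote; the author is not included in the prompt\n",
"demo_prompt = demo_template.format(\n",
"    topic=\"politics and virtue\",\n",
"    examples=\"\\n\".join(f\" - {body}\" for body, _author in demo_quotes),\n",
")\n",
"print(demo_prompt)"
]
},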

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "63bcc157-e5d4-43ef-8028-d4dcc8a72b9c",

|

|

"metadata": {},

|

|

"source": [

|

|

"#### Putting quote generation to test"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "fe6b3f38-089d-486d-b32c-e665c725faa8",

|

|

"metadata": {},

|

|

"source": [

|

|

"Just passing a text (a \"quote\", but one can actually just suggest a topic since its vector embedding will still end up at the right place in the vector space):"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 27,

|

|

"id": "806ba758-8988-410e-9eeb-b9c6799e6b25",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"name": "stdout",

|

|

"output_type": "stream",

|

|

"text": [

|

|

"** quotes found:\n",

|

|

"** - Happiness is the reward of virtue. (aristotle)\n",

|

|

"** - It is better for a city to be governed by a good man than by good laws. (aristotle)\n",

|

|

"** end of logging\n",

|

|

"\n",

|

|

"A new generated quote:\n",

|

|

"Politics is the battleground where virtue fights for the soul of society.\n"

|

|

]

|

|

}

|

|

],

|

|

"source": [

|

|

"q_topic = generate_quote(\"politics and virtue\")\n",

|

|

"print(\"\\nA new generated quote:\")\n",

|

|

"print(q_topic)"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "ca032d30-4538-4d0b-aea1-731fb32d2d4b",

|

|

"metadata": {},

|

|

"source": [

|

|

"Use inspiration from just a single philosopher:"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 28,

|

|

"id": "7c2e2d4e-865f-4b2d-80cd-a695271415d9",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"name": "stdout",

|

|

"output_type": "stream",

|

|

"text": [

|

|

"** quotes found:\n",

|

|

"** - Because Christian morality leaves animals out of account, they are at once outlawed in philosophical morals; they are mere 'things,' mere means to any ends whatsoever. They can therefore be used for vivisection, hunting, coursing, bullfights, and horse racing, and can be whipped to death as they struggle along with heavy carts of stone. Shame on such a morality that is worthy of pariahs, and that fails to recognize the eternal essence that exists in every living thing, and shines forth with inscrutable significance from all eyes that see the sun! (schopenhauer)\n",

|

|

"** - The assumption that animals are without rights, and the illusion that our treatment of them has no moral significance, is a positively outrageous example of Western crudity and barbarity. Universal compassion is the only guarantee of morality. (schopenhauer)\n",

|

|

"** end of logging\n",

|

|

"\n",

|

|

"A new generated quote:\n",

|

|

"By disregarding animals in our moral framework, we deny their inherent value and perpetuate a barbaric disregard for life.\n"

|

|

]

|

|

}

|

|

],

|

|

"source": [

|

|

"q_topic = generate_quote(\"animals\", author=\"schopenhauer\")\n",

|

|

"print(\"\\nA new generated quote:\")\n",

|

|

"print(q_topic)"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "e86bc6b3-8258-4f26-96df-29deb898d55e",

|

|

"metadata": {},

|

|

"source": [

|

|

"## (Optional) **Partitioning**"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "fb7cff21-973a-4cba-8e9e-d51518275b3c",

|

|

"metadata": {},

|

|

"source": [

|

|

"There's an interesting topic to examine before completing this quickstart. While, generally, tags and quotes can be in any relationship (e.g. a quote having multiple tags), _authors_ are effectively an exact grouping (they define a \"disjoint partitioning\" on the set of quotes): each quote has exactly one author (for us, at least).\n",

|

|

"\n",

|

|

"Now, suppose you know in advance your application will usually (or always) run queries on a _single author_. Then you can take full advantage of the underlying database structure: if you group quotes in **partitions** (one per author), vector queries on just an author will use less resources and return much faster.\n",

|

|

"\n",

|

|

"We'll not dive into the details here, which have to do with the Cassandra storage internals: the important message is that **if your queries are run within a group, consider partitioning accordingly to boost performance**.\n",

|

|

"\n",

|

|

"You'll now see this choice in action."

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "2b7eb294-85e0-4b5f-8a2f-4731361c2bd9",

|

|

"metadata": {},

|

|

"source": [

|

|

"The partitioning per author calls for a new table schema: create a new table called \"philosophers_cql_partitioned\", along with the necessary indexes:"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 29,

|

|

"id": "d849003c-fce8-4bd9-96ee-d826bb4301eb",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"data": {

|

|

"text/plain": [

|

|

"<cassandra.cluster.ResultSet at 0x7f046240c880>"

|

|

]

|

|

},

|

|

"execution_count": 29,

|

|

"metadata": {},

|

|

"output_type": "execute_result"

|

|

}

|

|

],

|

|

"source": [

|

|

"create_table_p_statement = f\"\"\"CREATE TABLE IF NOT EXISTS {keyspace}.philosophers_cql_partitioned (\n",

|

|

" author TEXT,\n",

|

|

" quote_id UUID,\n",

|

|

" body TEXT,\n",

|

|

" embedding_vector VECTOR<FLOAT, 1536>,\n",

|

|

" tags SET<TEXT>,\n",

|

|

" PRIMARY KEY ( (author), quote_id )\n",

|

|

") WITH CLUSTERING ORDER BY (quote_id ASC);\"\"\"\n",

|

|

"\n",

|

|

"session.execute(create_table_p_statement)\n",

|

|

"\n",

|

|

"create_vector_index_p_statement = f\"\"\"CREATE CUSTOM INDEX IF NOT EXISTS idx_embedding_vector_p\n",

|

|

" ON {keyspace}.philosophers_cql_partitioned (embedding_vector)\n",

|

|

" USING 'org.apache.cassandra.index.sai.StorageAttachedIndex'\n",

|

|

" WITH OPTIONS = {{'similarity_function' : 'dot_product'}};\n",

|

|

"\"\"\"\n",

|

|

"\n",

|

|

"session.execute(create_vector_index_p_statement)\n",

|

|

"\n",

|

|

"create_tags_index_p_statement = f\"\"\"CREATE CUSTOM INDEX IF NOT EXISTS idx_tags_p\n",

|

|

" ON {keyspace}.philosophers_cql_partitioned (VALUES(tags))\n",

|

|

" USING 'org.apache.cassandra.index.sai.StorageAttachedIndex';\n",

|

|

"\"\"\"\n",

|

|

"session.execute(create_tags_index_p_statement)"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "6a9bf4a8-3ce7-4542-a636-18c25d1a4426",

|

|

"metadata": {},

|

|

"source": [

|

|

"Now repeat the compute-embeddings-and-insert step on the new table.\n",

|

|

"\n",

|

|

"You could use the very same insertion code as you did earlier, because the differences are hidden \"behind the scenes\": the database will store the inserted rows differently according to the partioning scheme of this new table.\n",

|

|

"\n",

|

|

"However, by way of demonstration, you will take advantage of a handy facility offered by the Cassandra drivers to easily run several queries (in this case, `INSERT`s) concurrently. This is something that Astra DB / Cassandra supports very well and can lead to a significant speedup, with very little changes in the client code.\n",

|

|

"\n",

|

|

"_(Note: one could additionally have cached the embeddings computed previously to save a few API tokens -- here, however, we wanted to keep the code easier to inspect.)_"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 30,

|

|

"id": "424513a6-0a9d-4164-bf30-22d5b7e3bb25",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"name": "stdout",

|

|

"output_type": "stream",

|

|

"text": [

|

|

"aristotle: Done (50 quotes inserted).\n",

|

|

"freud: Done (50 quotes inserted).\n",

|

|

"hegel: Done (50 quotes inserted).\n",

|

|

"kant: Done (50 quotes inserted).\n",

|

|

"nietzsche: Done (50 quotes inserted).\n",

|

|

"plato: Done (50 quotes inserted).\n",

|

|

"sartre: Done (50 quotes inserted).\n",

|

|

"schopenhauer: Done (50 quotes inserted).\n",

|

|

"spinoza: Done (50 quotes inserted).\n",

|

|

"Finished inserting.\n"

|

|

]

|

|

}

|

|

],

|

|

"source": [

|

|

"from cassandra.concurrent import execute_concurrent_with_args\n",

|

|

"\n",

|

|

"prepared_insertion = session.prepare(\n",

|

|

" f\"INSERT INTO {keyspace}.philosophers_cql_partitioned (quote_id, author, body, embedding_vector, tags) VALUES (?, ?, ?, ?, ?);\"\n",

|

|

")\n",

|

|

"\n",

|

|

"for philosopher, quotes in quote_dict[\"quotes\"].items():\n",

|

|

" print(f\"{philosopher}: \", end=\"\")\n",

|

|

" result = openai.Embedding.create(\n",

|

|

" input=[quote[\"body\"] for quote in quotes],\n",

|

|

" engine=embedding_model_name,\n",

|

|

" )\n",

|

|

" tuples_to_insert = []\n",

|

|

" for quote_idx, (quote, q_data) in enumerate(zip(quotes, result.data)):\n",

|

|

" quote_id = uuid4()\n",

|

|

" tuples_to_insert.append( (quote_id, philosopher, quote[\"body\"], q_data.embedding, set(quote[\"tags\"])) )\n",

|

|

" conc_results = execute_concurrent_with_args(\n",

|

|

" session,\n",

|

|

" prepared_insertion,\n",

|

|

" tuples_to_insert,\n",

|

|

" )\n",

|

|

" # check that all insertions succeed (better to always do this):\n",

|

|

" if any([not success for success, _ in conc_results]):\n",

|

|

" print(\"Something failed during the insertions!\")\n",

|

|

" else:\n",

|

|

" print(f\"Done ({len(quotes)} quotes inserted).\")\n",

|

|

"\n",

|

|

"print(\"Finished inserting.\")"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "85a05f57-8a54-4ccf-98a2-f391a0597855",

|

|

"metadata": {},

|

|

"source": [

|

|

"Despite the different table schema, the DB query behind the similarity search is essentially the same:"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 31,

|

|

"id": "a3217a90-c682-4c72-b834-7717ed13a3af",

|

|

"metadata": {},

|

|

"outputs": [],

|

|

"source": [

|

|

"def find_quote_and_author_p(query_quote, n, author=None, tags=None):\n",

|

|

" query_vector = openai.Embedding.create(\n",

|

|

" input=[query_quote],\n",

|

|

" engine=embedding_model_name,\n",

|

|

" ).data[0].embedding\n",

|

|

" # depending on what conditions are passed, the WHERE clause in the statement may vary.\n",

|

|

" where_clauses = []\n",

|

|

" where_values = []\n",

|

|

" if author:\n",

|

|

" where_clauses += [\"author = %s\"]\n",

|

|

" where_values += [author]\n",

|

|

" if tags:\n",

|

|

" for tag in tags:\n",

|

|

" where_clauses += [\"tags CONTAINS %s\"]\n",

|

|

" where_values += [tag]\n",

|

|

" if where_clauses:\n",

|

|

" search_statement = f\"\"\"SELECT body, author FROM {keyspace}.philosophers_cql_partitioned\n",

|

|

" WHERE {' AND '.join(where_clauses)}\n",

|

|

" ORDER BY embedding_vector ANN OF %s\n",

|

|

" LIMIT %s;\n",

|

|

" \"\"\"\n",

|

|

" else:\n",

|

|

" search_statement = f\"\"\"SELECT body, author FROM {keyspace}.philosophers_cql_partitioned\n",

|

|

" ORDER BY embedding_vector ANN OF %s\n",

|

|

" LIMIT %s;\n",

|

|

" \"\"\"\n",

|

|

" query_values = tuple(where_values + [query_vector] + [n])\n",

|

|

" result_rows = session.execute(search_statement, query_values)\n",

|

|

" return [\n",

|

|

" (result_row.body, result_row.author)\n",

|

|

" for result_row in result_rows\n",

|

|

" ]"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "cde7a7b3-5ad5-4d38-8125-d1bf07aaf84f",

|

|

"metadata": {},

|

|

"source": [

|

|

"That's it: the new table still supports the \"generic\" similarity searches all right ..."

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 33,

|

|

"id": "d7343a7a-5a06-47c5-ad96-8b60b6948352",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"data": {

|

|

"text/plain": [

|

|

"[('Life to the great majority is only a constant struggle for mere existence, with the certainty of losing it at last.',\n",

|

|

" 'schopenhauer'),\n",

|

|

" ('We give up leisure in order that we may have leisure, just as we go to war in order that we may have peace.',\n",

|

|

" 'aristotle'),\n",

|

|

" ('Perhaps the gods are kind to us, by making life more disagreeable as we grow older. In the end death seems less intolerable than the manifold burdens we carry',\n",

|

|

" 'freud')]"

|

|

]

|

|

},

|

|

"execution_count": 33,

|

|

"metadata": {},

|

|

"output_type": "execute_result"

|

|

}

|

|

],

|

|

"source": [

|

|

"find_quote_and_author_p(\"We struggle all our life for nothing\", 3)"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "fe7aa840-d17f-44f9-b3d4-786d361a9be0",

|

|

"metadata": {},

|

|

"source": [

|

|

"... but it's when an author is specified that you would notice a _huge_ performance advantage:"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 34,

|

|

"id": "d1abb677-5a8b-48c2-82c5-dbca94ef56f1",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"data": {

|

|

"text/plain": [

|

|

"[('To live is to suffer, to survive is to find some meaning in the suffering.',\n",

|

|

" 'nietzsche'),\n",

|

|

" ('What makes us heroic?--Confronting simultaneously our supreme suffering and our supreme hope.',\n",

|

|

" 'nietzsche')]"

|

|

]

|

|

},

|

|

"execution_count": 34,

|

|

"metadata": {},

|

|

"output_type": "execute_result"

|

|

}

|

|

],

|

|

"source": [

|

|

"find_quote_and_author_p(\"We struggle all our life for nothing\", 2, author=\"nietzsche\")"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "871da950-2a06-4a77-a86b-c528935da3a6",

|

|

"metadata": {},

|

|

"source": [

|

|

"Well, you _would_ notice a performance gain, if you had a realistic-size dataset. In this demo, with a few tens of entries, there's no noticeable difference -- but you get the idea."

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "08435bae-1bb9-4c14-ba21-7b4a7bdee3f5",

|

|

"metadata": {},

|

|

"source": [

|

|

"## Conclusion\n",

|

|

"\n",

|

|

"Congratulations! You have learned how to use OpenAI for vector embeddings and Astra DB / Cassandra for storage in order to build a sophisticated philosophical search engine and quote generator.\n",

|

|

"\n",

|

|

"This example used the [Cassandra drivers](https://docs.datastax.com/en/developer/python-driver/latest/) and runs CQL (Cassandra Query Language) statements directly to interface with the Vector Store - but this is not the only choice. Check the [README](https://github.com/openai/openai-cookbook/tree/main/examples/vector_databases/cassandra_astradb) for other options and integration with popular frameworks.\n",

|

|

"\n",

|

|

"To find out more on how Astra DB's Vector Search capabilities can be a key ingredient in your ML/GenAI applications, visit [Astra DB](https://docs.datastax.com/en/astra-serverless/docs/vector-search/overview.html)'s web page on the topic."

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "markdown",

|

|

"id": "bf7309d1-158c-4425-8f50-30918f4aee43",

|

|

"metadata": {},

|

|

"source": [

|

|

"## Cleanup\n",

|

|

"\n",

|

|

"If you want to remove all resources used for this demo, run this cell (_warning: this will delete the tables and the data inserted in them!_):"

|

|

]

|

|

},

|

|

{

|

|

"cell_type": "code",

|

|

"execution_count": 36,

|

|

"id": "17f2dd55-82df-4c3f-8aef-4ae0a03e7b79",

|

|

"metadata": {},

|

|

"outputs": [

|

|

{

|

|

"data": {

|

|

"text/plain": [

|

|

"<cassandra.cluster.ResultSet at 0x7f0462264eb0>"

|

|

]

|

|

},

|

|

"execution_count": 36,

|

|

"metadata": {},

|

|

"output_type": "execute_result"

|

|

}

|

|

],

|

|

"source": [

|

|

"session.execute(f\"DROP TABLE IF EXISTS {keyspace}.philosophers_cql;\")\n",

|

|

"session.execute(f\"DROP TABLE IF EXISTS {keyspace}.philosophers_cql_partitioned;\")"

|

|

]

|

|

}

|

|

],

|

|

"metadata": {

|

|

"kernelspec": {

|

|

"display_name": "Python 3 (ipykernel)",

|

|

"language": "python",

|

|

"name": "python3"

|

|

},

|

|

"language_info": {

|

|

"codemirror_mode": {

|

|

"name": "ipython",

|

|

"version": 3

|

|

},

|

|

"file_extension": ".py",

|

|

"mimetype": "text/x-python",

|

|

"name": "python",

|

|

"nbconvert_exporter": "python",

|

|

"pygments_lexer": "ipython3",

|

|

"version": "3.10.12"

|

|

}

|

|

},

|

|

"nbformat": 4,

|

|

"nbformat_minor": 5

|

|

}

|