mirror of

https://github.com/openai/openai-cookbook

synced 2024-11-11 13:11:02 +00:00

initial commit for Azure RAG cookbook (#1272)

Co-authored-by: juston <96567547+justonf@users.noreply.github.com>

This commit is contained in:

parent

f6ea13ebed

commit

5f552669f7

1

examples/chatgpt/rag-quickstart/azure/.funcignore

Normal file

1

examples/chatgpt/rag-quickstart/azure/.funcignore

Normal file

@ -0,0 +1 @@

|

||||

|

||||

50

examples/chatgpt/rag-quickstart/azure/.gitignore

vendored

Normal file

50

examples/chatgpt/rag-quickstart/azure/.gitignore

vendored

Normal file

@ -0,0 +1,50 @@

|

||||

bin

|

||||

obj

|

||||

csx

|

||||

.vs

|

||||

edge

|

||||

Publish

|

||||

|

||||

*.user

|

||||

*.suo

|

||||

*.cscfg

|

||||

*.Cache

|

||||

project.lock.json

|

||||

|

||||

/packages

|

||||

/TestResults

|

||||

|

||||

/tools/NuGet.exe

|

||||

/App_Data

|

||||

/secrets

|

||||

/data

|

||||

.secrets

|

||||

appsettings.json

|

||||

local.settings.json

|

||||

|

||||

node_modules

|

||||

dist

|

||||

vector_database_wikipedia_articles_embedded

|

||||

|

||||

# Local python packages

|

||||

.python_packages/

|

||||

|

||||

# Python Environments

|

||||

.env

|

||||

.venv

|

||||

env/

|

||||

venv/

|

||||

ENV/

|

||||

env.bak/

|

||||

venv.bak/

|

||||

|

||||

# Byte-compiled / optimized / DLL files

|

||||

__pycache__/

|

||||

*.py[cod]

|

||||

*$py.class

|

||||

|

||||

# Azurite artifacts

|

||||

__blobstorage__

|

||||

__queuestorage__

|

||||

__azurite_db*__.json

|

||||

vector_database_wikipedia_articles_embedded

|

||||

5

examples/chatgpt/rag-quickstart/azure/.vscode/extensions.json

vendored

Normal file

5

examples/chatgpt/rag-quickstart/azure/.vscode/extensions.json

vendored

Normal file

@ -0,0 +1,5 @@

|

||||

{

|

||||

"recommendations": [

|

||||

"ms-azuretools.vscode-azurefunctions"

|

||||

]

|

||||

}

|

||||

File diff suppressed because it is too large

Load Diff

153

examples/chatgpt/rag-quickstart/azure/function_app.py

Normal file

153

examples/chatgpt/rag-quickstart/azure/function_app.py

Normal file

@ -0,0 +1,153 @@

|

||||

import azure.functions as func

|

||||

import json

|

||||

import logging

|

||||

from azure.search.documents import SearchClient

|

||||

from azure.search.documents.indexes import SearchIndexClient

|

||||

from azure.core.credentials import AzureKeyCredential

|

||||

from openai import OpenAI

|

||||

import os

|

||||

from azure.search.documents.models import (

|

||||

VectorizedQuery

|

||||

)

|

||||

|

||||

# Initialize the Azure Function App

|

||||

app = func.FunctionApp()

|

||||

|

||||

def generate_embeddings(text):

|

||||

# Check if text is provided

|

||||

if not text:

|

||||

logging.error("No text provided in the query string.")

|

||||

return func.HttpResponse(

|

||||

"Please provide text in the query string.",

|

||||

status_code=400

|

||||

)

|

||||

|

||||

try:

|

||||

# Initialize OpenAI client

|

||||

client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

|

||||

logging.info("OpenAI client initialized successfully.")

|

||||

|

||||

# Generate embeddings using OpenAI API

|

||||

response = client.embeddings.create(

|

||||

input=text,

|

||||

model=os.getenv("EMBEDDINGS_MODEL")

|

||||

)

|

||||

logging.info("Embeddings created successfully.")

|

||||

|

||||

# Extract the embedding from the response

|

||||

embedding = response.data[0].embedding

|

||||

logging.debug(f"Generated embedding: {embedding}")

|

||||

|

||||

return embedding

|

||||

except Exception as e:

|

||||

logging.error(f"Error generating embeddings: {str(e)}")

|

||||

return func.HttpResponse(

|

||||

f"Error generating embeddings: {str(e)}",

|

||||

status_code=500

|

||||

)

|

||||

|

||||

|

||||

@app.route(route="vector_similarity_search", auth_level=func.AuthLevel.ANONYMOUS)

|

||||

def vector_similarity_search(req: func.HttpRequest) -> func.HttpResponse:

|

||||

logging.info("Received request for vector similarity search.")

|

||||

try:

|

||||

# Parse the request body as JSON

|

||||

req_body = req.get_json()

|

||||

logging.info("Request body parsed successfully.")

|

||||

except ValueError:

|

||||

logging.error("Invalid JSON in request body.")

|

||||

return func.HttpResponse(

|

||||

"Invalid JSON in request body.",

|

||||

status_code=400

|

||||

)

|

||||

|

||||

# Extract parameters from the request body

|

||||

search_service_endpoint = req_body.get('search_service_endpoint')

|

||||

index_name = req_body.get('index_name')

|

||||

query = req_body.get('query')

|

||||

k_nearest_neighbors = req_body.get('k_nearest_neighbors')

|

||||

search_column = req_body.get('search_column')

|

||||

use_hybrid_query = req_body.get('use_hybrid_query')

|

||||

|

||||

logging.info(f"Parsed request parameters: search_service_endpoint={search_service_endpoint}, index_name={index_name}, query={query}, k_nearest_neighbors={k_nearest_neighbors}, search_column={search_column}, use_hybrid_query={use_hybrid_query}")

|

||||

|

||||

# Generate embeddings for the query

|

||||

embeddings = generate_embeddings(query)

|

||||

logging.info(f"Generated embeddings: {embeddings}")

|

||||

|

||||

# Check for required parameters

|

||||

if not (search_service_endpoint and index_name and query):

|

||||

logging.error("Missing required parameters in request body.")

|

||||

return func.HttpResponse(

|

||||

"Please provide search_service_endpoint, index_name, and query in the request body.",

|

||||

status_code=400

|

||||

)

|

||||

try:

|

||||

# Create a vectorized query

|

||||

vector_query = VectorizedQuery(vector=embeddings, k_nearest_neighbors=float(k_nearest_neighbors), fields=search_column)

|

||||

logging.info("Vector query generated successfully.")

|

||||

except Exception as e:

|

||||

logging.error(f"Error generating vector query: {str(e)}")

|

||||

return func.HttpResponse(

|

||||

f"Error generating vector query: {str(e)}",

|

||||

status_code=500

|

||||

)

|

||||

|

||||

try:

|

||||

# Initialize the search client

|

||||

search_client = SearchClient(

|

||||

endpoint=search_service_endpoint,

|

||||

index_name=index_name,

|

||||

credential=AzureKeyCredential(os.getenv("SEARCH_SERVICE_API_KEY"))

|

||||

)

|

||||

logging.info("Search client created successfully.")

|

||||

|

||||

# Initialize the index client and get the index schema

|

||||

index_client = SearchIndexClient(endpoint=search_service_endpoint, credential=AzureKeyCredential(os.getenv("SEARCH_SERVICE_API_KEY")))

|

||||

index_schema = index_client.get_index(index_name)

|

||||

for field in index_schema.fields:

|

||||

logging.info(f"Field: {field.name}, Type: {field.type}")

|

||||

# Filter out non-vector fields

|

||||

non_vector_fields = [field.name for field in index_schema.fields if field.type not in ["Edm.ComplexType", "Collection(Edm.ComplexType)","Edm.Vector","Collection(Edm.Single)"]]

|

||||

|

||||

logging.info(f"Non-vector fields in the index: {non_vector_fields}")

|

||||

except Exception as e:

|

||||

logging.error(f"Error creating search client: {str(e)}")

|

||||

return func.HttpResponse(

|

||||

f"Error creating search client: {str(e)}",

|

||||

status_code=500

|

||||

)

|

||||

|

||||

# Determine if hybrid query should be used

|

||||

search_text = query if use_hybrid_query else None

|

||||

|

||||

try:

|

||||

# Perform the search

|

||||

results = search_client.search(

|

||||

search_text=search_text,

|

||||

vector_queries=[vector_query],

|

||||

select=non_vector_fields,

|

||||

top=3

|

||||

)

|

||||

logging.info("Search performed successfully.")

|

||||

except Exception as e:

|

||||

logging.error(f"Error performing search: {str(e)}")

|

||||

return func.HttpResponse(

|

||||

f"Error performing search: {str(e)}",

|

||||

status_code=500

|

||||

)

|

||||

|

||||

try:

|

||||

# Extract relevant data from results and put it into a list of dictionaries

|

||||

response_data = [result for result in results]

|

||||

response_data = json.dumps(response_data)

|

||||

logging.info("Search results processed successfully.")

|

||||

except Exception as e:

|

||||

logging.error(f"Error processing search results: {str(e)}")

|

||||

return func.HttpResponse(

|

||||

f"Error processing search results: {str(e)}",

|

||||

status_code=500

|

||||

)

|

||||

|

||||

logging.info("Returning search results.")

|

||||

return func.HttpResponse(response_data, mimetype="application/json")

|

||||

15

examples/chatgpt/rag-quickstart/azure/host.json

Normal file

15

examples/chatgpt/rag-quickstart/azure/host.json

Normal file

@ -0,0 +1,15 @@

|

||||

{

|

||||

"version": "2.0",

|

||||

"logging": {

|

||||

"applicationInsights": {

|

||||

"samplingSettings": {

|

||||

"isEnabled": true,

|

||||

"excludedTypes": "Request"

|

||||

}

|

||||

}

|

||||

},

|

||||

"extensionBundle": {

|

||||

"id": "Microsoft.Azure.Functions.ExtensionBundle",

|

||||

"version": "[4.*, 5.0.0)"

|

||||

}

|

||||

}

|

||||

17

examples/chatgpt/rag-quickstart/azure/requirements.txt

Normal file

17

examples/chatgpt/rag-quickstart/azure/requirements.txt

Normal file

@ -0,0 +1,17 @@

|

||||

# Do not include azure-functions-worker in this file

|

||||

# The Python Worker is managed by the Azure Functions platform

|

||||

# Manually managing azure-functions-worker may cause unexpected issues

|

||||

|

||||

azure-functions

|

||||

azure-search-documents

|

||||

azure-identity

|

||||

openai

|

||||

azure-mgmt-search

|

||||

pandas

|

||||

azure-mgmt-resource

|

||||

azure-mgmt-storage

|

||||

azure-mgmt-web

|

||||

python-dotenv

|

||||

pyperclip

|

||||

PyPDF2

|

||||

tiktoken

|

||||

@ -0,0 +1,19 @@

|

||||

{

|

||||

"scriptFile": "__init__.py",

|

||||

"bindings": [

|

||||

{

|

||||

"authLevel": "Anonymous",

|

||||

"type": "httpTrigger",

|

||||

"direction": "in",

|

||||

"name": "req",

|

||||

"methods": [

|

||||

"post"

|

||||

]

|

||||

},

|

||||

{

|

||||

"type": "http",

|

||||

"direction": "out",

|

||||

"name": "$return"

|

||||

}

|

||||

]

|

||||

}

|

||||

34

examples/data/oai_docs/authentication.txt

Normal file

34

examples/data/oai_docs/authentication.txt

Normal file

@ -0,0 +1,34 @@

|

||||

|

||||

# Action authentication

|

||||

|

||||

Actions offer different authentication schemas to accommodate various use cases. To specify the authentication schema for your action, use the GPT editor and select "None", "API Key", or "OAuth".

|

||||

|

||||

By default, the authentication method for all actions is set to "None", but you can change this and allow different actions to have different authentication methods.

|

||||

|

||||

## No authentication

|

||||

|

||||

We support flows without authentication for applications where users can send requests directly to your API without needing an API key or signing in with OAuth.

|

||||

|

||||

Consider using no authentication for initial user interactions as you might experience a user drop off if they are forced to sign into an application. You can create a "signed out" experience and then move users to a "signed in" experience by enabling a separate action.

|

||||

|

||||

## API key authentication

|

||||

|

||||

Just like how a user might already be using your API, we allow API key authentication through the GPT editor UI. We encrypt the secret key when we store it in our database to keep your API key secure.

|

||||

|

||||

This approach is useful if you have an API that takes slightly more consequential actions than the no authentication flow but does not require an individual user to sign in. Adding API key authentication can protect your API and give you more fine-grained access controls along with visibility into where requests are coming from.

|

||||

|

||||

## OAuth

|

||||

|

||||

Actions allow OAuth sign in for each user. This is the best way to provide personalized experiences and make the most powerful actions available to users. A simple example of the OAuth flow with actions will look like the following:

|

||||

|

||||

- To start, select "Authentication" in the GPT editor UI, and select "OAuth".

|

||||

- You will be prompted to enter the OAuth client ID, client secret, authorization URL, token URL, and scope.

|

||||

- The client ID and secret can be simple text strings but should [follow OAuth best practices](https://www.oauth.com/oauth2-servers/client-registration/client-id-secret/).

|

||||

- We store an encrypted version of the client secret, while the client ID is available to end users.

|

||||

- OAuth requests will include the following information: `request={'grant_type': 'authorization_code', 'client_id': 'YOUR_CLIENT_ID', 'client_secret': 'YOUR_CLIENT_SECRET', 'code': 'abc123', 'redirect_uri': 'https://chatgpt.com/aip/g-some_gpt_id/oauth/callback'}`

|

||||

- In order for someone to use an action with OAuth, they will need to send a message that invokes the action and then the user will be presented with a "Sign in to [domain]" button in the ChatGPT UI.

|

||||

- The `authorization_url` endpoint should return a response that looks like:

|

||||

`{ "access_token": "example_token", "token_type": "bearer", "refresh_token": "example_token", "expires_in": 59 }`

|

||||

- During the user sign in process, ChatGPT makes a request to your `authorization_url` using the specified `authorization_content_type`, we expect to get back an access token and optionally a [refresh token](https://auth0.com/learn/refresh-tokens) which we use to periodically fetch a new access token.

|

||||

- Each time a user makes a request to the action, the user’s token will be passed in the Authorization header: (“Authorization”: “[Bearer/Basic] [user’s token]”).

|

||||

- We require that OAuth applications make use of the [state parameter](https://auth0.com/docs/secure/attack-protection/state-parameters#set-and-compare-state-parameter-values) for security reasons.

|

||||

333

examples/data/oai_docs/batch.txt

Normal file

333

examples/data/oai_docs/batch.txt

Normal file

@ -0,0 +1,333 @@

|

||||

|

||||

# Batch API

|

||||

|

||||

Learn how to use OpenAI's Batch API to send asynchronous groups of requests with 50% lower costs, a separate pool of significantly higher rate limits, and a clear 24-hour turnaround time. The service is ideal for processing jobs that don't require immediate responses. You can also [explore the API reference directly here](/docs/api-reference/batch).

|

||||

|

||||

## Overview

|

||||

|

||||

While some uses of the OpenAI Platform require you to send synchronous requests, there are many cases where requests do not need an immediate response or [rate limits](/docs/guides/rate-limits) prevent you from executing a large number of queries quickly. Batch processing jobs are often helpful in use cases like:

|

||||

|

||||

1. running evaluations

|

||||

2. classifying large datasets

|

||||

3. embedding content repositories

|

||||

|

||||

The Batch API offers a straightforward set of endpoints that allow you to collect a set of requests into a single file, kick off a batch processing job to execute these requests, query for the status of that batch while the underlying requests execute, and eventually retrieve the collected results when the batch is complete.

|

||||

|

||||

Compared to using standard endpoints directly, Batch API has:

|

||||

|

||||

1. **Better cost efficiency:** 50% cost discount compared to synchronous APIs

|

||||

2. **Higher rate limits:** [Substantially more headroom](/settings/organization/limits) compared to the synchronous APIs

|

||||

3. **Fast completion times:** Each batch completes within 24 hours (and often more quickly)

|

||||

|

||||

## Getting Started

|

||||

|

||||

### 1. Preparing Your Batch File

|

||||

|

||||

Batches start with a `.jsonl` file where each line contains the details of an individual request to the API. For now, the available endpoints are `/v1/chat/completions` ([Chat Completions API](/docs/api-reference/chat)) and `/v1/embeddings` ([Embeddings API](/docs/api-reference/embeddings)). For a given input file, the parameters in each line's `body` field are the same as the parameters for the underlying endpoint. Each request must include a unique `custom_id` value, which you can use to reference results after completion. Here's an example of an input file with 2 requests. Note that each input file can only include requests to a single model.

|

||||

|

||||

```jsonl

|

||||

{"custom_id": "request-1", "method": "POST", "url": "/v1/chat/completions", "body": {"model": "gpt-3.5-turbo-0125", "messages": [{"role": "system", "content": "You are a helpful assistant."},{"role": "user", "content": "Hello world!"}],"max_tokens": 1000}}

|

||||

{"custom_id": "request-2", "method": "POST", "url": "/v1/chat/completions", "body": {"model": "gpt-3.5-turbo-0125", "messages": [{"role": "system", "content": "You are an unhelpful assistant."},{"role": "user", "content": "Hello world!"}],"max_tokens": 1000}}

|

||||

```

|

||||

|

||||

### 2. Uploading Your Batch Input File

|

||||

|

||||

Similar to our [Fine-tuning API](/docs/guides/fine-tuning/), you must first upload your input file so that you can reference it correctly when kicking off batches. Upload your `.jsonl` file using the [Files API](/docs/api-reference/files).

|

||||

|

||||

<CodeSample

|

||||

title="Upload files for Batch API"

|

||||

defaultLanguage="python"

|

||||

code={{

|

||||

python: `

|

||||

from openai import OpenAI

|

||||

client = OpenAI()\n

|

||||

batch_input_file = client.files.create(

|

||||

file=open("batchinput.jsonl", "rb"),

|

||||

purpose="batch"

|

||||

)

|

||||

`.trim(),

|

||||

curl: `

|

||||

curl https://api.openai.com/v1/files \\

|

||||

-H "Authorization: Bearer $OPENAI_API_KEY" \\

|

||||

-F purpose="batch" \\

|

||||

-F file="@batchinput.jsonl"

|

||||

`.trim(),

|

||||

node: `

|

||||

import OpenAI from "openai";\n

|

||||

const openai = new OpenAI();\n

|

||||

async function main() {

|

||||

const file = await openai.files.create({

|

||||

file: fs.createReadStream("batchinput.jsonl"),

|

||||

purpose: "batch",

|

||||

});\n

|

||||

console.log(file);

|

||||

}\n

|

||||

main();

|

||||

`.trim(),

|

||||

}}

|

||||

/>

|

||||

|

||||

### 3. Creating the Batch

|

||||

|

||||

Once you've successfully uploaded your input file, you can use the input File object's ID to create a batch. In this case, let's assume the file ID is `file-abc123`. For now, the completion window can only be set to `24h`. You can also provide custom metadata via an optional `metadata` parameter.

|

||||

|

||||

<CodeSample

|

||||

title="Create the Batch"

|

||||

defaultLanguage="python"

|

||||

code={{

|

||||

python: `

|

||||

batch_input_file_id = batch_input_file.id\n

|

||||

client.batches.create(

|

||||

input_file_id=batch_input_file_id,

|

||||

endpoint="/v1/chat/completions",

|

||||

completion_window="24h",

|

||||

metadata={

|

||||

"description": "nightly eval job"

|

||||

}

|

||||

)

|

||||

`.trim(),

|

||||

curl: `

|

||||

curl https://api.openai.com/v1/batches \\

|

||||

-H "Authorization: Bearer $OPENAI_API_KEY" \\

|

||||

-H "Content-Type: application/json" \\

|

||||

-d '{

|

||||

"input_file_id": "file-abc123",

|

||||

"endpoint": "/v1/chat/completions",

|

||||

"completion_window": "24h"

|

||||

}'

|

||||

`.trim(),

|

||||

node: `

|

||||

import OpenAI from "openai";\n

|

||||

const openai = new OpenAI();\n

|

||||

async function main() {

|

||||

const batch = await openai.batches.create({

|

||||

input_file_id: "file-abc123",

|

||||

endpoint: "/v1/chat/completions",

|

||||

completion_window: "24h"

|

||||

});\n

|

||||

console.log(batch);

|

||||

}\n

|

||||

main();

|

||||

`.trim(),

|

||||

}}

|

||||

/>

|

||||

|

||||

This request will return a [Batch object](/docs/api-reference/batch/object) with metadata about your batch:

|

||||

|

||||

```python

|

||||

{

|

||||

"id": "batch_abc123",

|

||||

"object": "batch",

|

||||

"endpoint": "/v1/chat/completions",

|

||||

"errors": null,

|

||||

"input_file_id": "file-abc123",

|

||||

"completion_window": "24h",

|

||||

"status": "validating",

|

||||

"output_file_id": null,

|

||||

"error_file_id": null,

|

||||

"created_at": 1714508499,

|

||||

"in_progress_at": null,

|

||||

"expires_at": 1714536634,

|

||||

"completed_at": null,

|

||||

"failed_at": null,

|

||||

"expired_at": null,

|

||||

"request_counts": {

|

||||

"total": 0,

|

||||

"completed": 0,

|

||||

"failed": 0

|

||||

},

|

||||

"metadata": null

|

||||

}

|

||||

```

|

||||

|

||||

### 4. Checking the Status of a Batch

|

||||

|

||||

You can check the status of a batch at any time, which will also return a Batch object.

|

||||

|

||||

<CodeSample

|

||||

title="Check the status of a batch"

|

||||

defaultLanguage="python"

|

||||

code={{

|

||||

python: `

|

||||

from openai import OpenAI

|

||||

client = OpenAI()\n

|

||||

client.batches.retrieve("batch_abc123")

|

||||

`.trim(),

|

||||

curl: `

|

||||

curl https://api.openai.com/v1/batches/batch_abc123 \\

|

||||

-H "Authorization: Bearer $OPENAI_API_KEY" \\

|

||||

-H "Content-Type: application/json" \\

|

||||

`.trim(),

|

||||

node: `

|

||||

import OpenAI from "openai";\n

|

||||

const openai = new OpenAI();\n

|

||||

async function main() {

|

||||

const batch = await openai.batches.retrieve("batch_abc123");\n

|

||||

console.log(batch);

|

||||

}\n

|

||||

main();

|

||||

`.trim(),

|

||||

}}

|

||||

/>

|

||||

|

||||

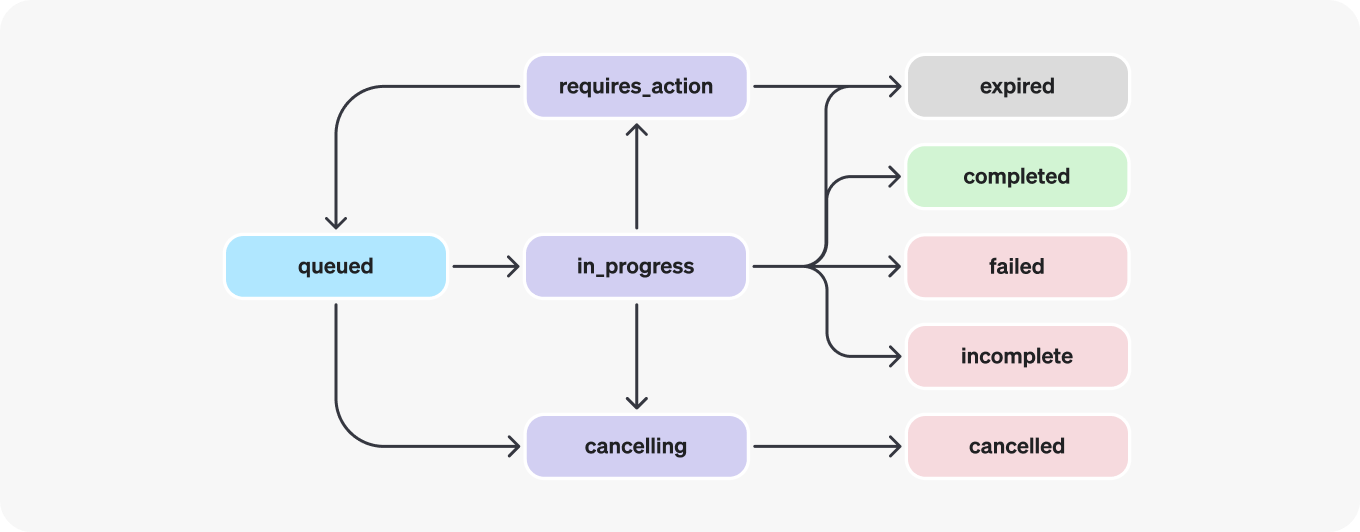

The status of a given Batch object can be any of the following:

|

||||

|

||||

| Status | Description |

|

||||

| ------------- | ------------------------------------------------------------------------------ |

|

||||

| `validating` | the input file is being validated before the batch can begin |

|

||||

| `failed` | the input file has failed the validation process |

|

||||

| `in_progress` | the input file was successfully validated and the batch is currently being run |

|

||||

| `finalizing` | the batch has completed and the results are being prepared |

|

||||

| `completed` | the batch has been completed and the results are ready |

|

||||

| `expired` | the batch was not able to be completed within the 24-hour time window |

|

||||

| `cancelling` | the batch is being cancelled (may take up to 10 minutes) |

|

||||

| `cancelled` | the batch was cancelled |

|

||||

|

||||

### 5. Retrieving the Results

|

||||

|

||||

Once the batch is complete, you can download the output by making a request against the [Files API](/docs/api-reference/files) via the `output_file_id` field from the Batch object and writing it to a file on your machine, in this case `batch_output.jsonl`

|

||||

|

||||

<CodeSample

|

||||

title="Retrieving the batch results"

|

||||

defaultLanguage="python"

|

||||

code={{

|

||||

python: `

|

||||

from openai import OpenAI

|

||||

client = OpenAI()\n

|

||||

content = client.files.content("file-xyz123")

|

||||

`.trim(),

|

||||

curl: `

|

||||

curl https://api.openai.com/v1/files/file-xyz123/content \\

|

||||

-H "Authorization: Bearer $OPENAI_API_KEY" > batch_output.jsonl

|

||||

`.trim(),

|

||||

node: `

|

||||

import OpenAI from "openai";\n

|

||||

const openai = new OpenAI();\n

|

||||

async function main() {

|

||||

const file = await openai.files.content("file-xyz123");\n

|

||||

console.log(file);

|

||||

}\n

|

||||

main();

|

||||

`.trim(),

|

||||

}}

|

||||

/>

|

||||

|

||||

The output `.jsonl` file will have one response line for every successful request line in the input file. Any failed requests in the batch will have their error information written to an error file that can be found via the batch's `error_file_id`.

|

||||

|

||||

|

||||

Note that the output line order may not match the input line order. Instead of

|

||||

relying on order to process your results, use the custom_id field which will be

|

||||

present in each line of your output file and allow you to map requests in your input

|

||||

to results in your output.

|

||||

|

||||

|

||||

```jsonl

|

||||

{"id": "batch_req_123", "custom_id": "request-2", "response": {"status_code": 200, "request_id": "req_123", "body": {"id": "chatcmpl-123", "object": "chat.completion", "created": 1711652795, "model": "gpt-3.5-turbo-0125", "choices": [{"index": 0, "message": {"role": "assistant", "content": "Hello."}, "logprobs": null, "finish_reason": "stop"}], "usage": {"prompt_tokens": 22, "completion_tokens": 2, "total_tokens": 24}, "system_fingerprint": "fp_123"}}, "error": null}

|

||||

{"id": "batch_req_456", "custom_id": "request-1", "response": {"status_code": 200, "request_id": "req_789", "body": {"id": "chatcmpl-abc", "object": "chat.completion", "created": 1711652789, "model": "gpt-3.5-turbo-0125", "choices": [{"index": 0, "message": {"role": "assistant", "content": "Hello! How can I assist you today?"}, "logprobs": null, "finish_reason": "stop"}], "usage": {"prompt_tokens": 20, "completion_tokens": 9, "total_tokens": 29}, "system_fingerprint": "fp_3ba"}}, "error": null}

|

||||

```

|

||||

|

||||

### 6. Cancelling a Batch

|

||||

|

||||

If necessary, you can cancel an ongoing batch. The batch's status will change to `cancelling` until in-flight requests are complete (up to 10 minutes), after which the status will change to `cancelled`.

|

||||

|

||||

<CodeSample

|

||||

title="Cancelling a batch"

|

||||

defaultLanguage="python"

|

||||

code={{

|

||||

python: `

|

||||

from openai import OpenAI

|

||||

client = OpenAI()\n

|

||||

client.batches.cancel("batch_abc123")

|

||||

`.trim(),

|

||||

curl: `

|

||||

curl https://api.openai.com/v1/batches/batch_abc123/cancel \\

|

||||

-H "Authorization: Bearer $OPENAI_API_KEY" \\

|

||||

-H "Content-Type: application/json" \\

|

||||

-X POST

|

||||

`.trim(),

|

||||

node: `

|

||||

import OpenAI from "openai";\n

|

||||

const openai = new OpenAI();\n

|

||||

async function main() {

|

||||

const batch = await openai.batches.cancel("batch_abc123");\n

|

||||

console.log(batch);

|

||||

}\n

|

||||

main();

|

||||

`.trim(),

|

||||

}}

|

||||

/>

|

||||

|

||||

### 7. Getting a List of All Batches

|

||||

|

||||

At any time, you can see all your batches. For users with many batches, you can use the `limit` and `after` parameters to paginate your results.

|

||||

|

||||

<CodeSample

|

||||

title="Getting a list of all batches"

|

||||

defaultLanguage="python"

|

||||

code={{

|

||||

python: `

|

||||

from openai import OpenAI

|

||||

client = OpenAI()\n

|

||||

client.batches.list(limit=10)

|

||||

`.trim(),

|

||||

curl: `

|

||||

curl https://api.openai.com/v1/batches?limit=10 \\

|

||||

-H "Authorization: Bearer $OPENAI_API_KEY" \\

|

||||

-H "Content-Type: application/json"

|

||||

`.trim(),

|

||||

node: `

|

||||

import OpenAI from "openai";\n

|

||||

const openai = new OpenAI();\n

|

||||

async function main() {

|

||||

const list = await openai.batches.list();\n

|

||||

for await (const batch of list) {

|

||||

console.log(batch);

|

||||

}

|

||||

}\n

|

||||

main();

|

||||

`.trim(),

|

||||

}}

|

||||

/>

|

||||

|

||||

## Model Availability

|

||||

|

||||

The Batch API can currently be used to execute queries against the following models. The Batch API supports text and vision inputs in the same format as the endpoints for these models:

|

||||

|

||||

- `gpt-4o`

|

||||

- `gpt-4-turbo`

|

||||

- `gpt-4`

|

||||

- `gpt-4-32k`

|

||||

- `gpt-3.5-turbo`

|

||||

- `gpt-3.5-turbo-16k`

|

||||

- `gpt-4-turbo-preview`

|

||||

- `gpt-4-vision-preview`

|

||||

- `gpt-4-turbo-2024-04-09`

|

||||

- `gpt-4-0314`

|

||||

- `gpt-4-32k-0314`

|

||||

- `gpt-4-32k-0613`

|

||||

- `gpt-3.5-turbo-0301`

|

||||

- `gpt-3.5-turbo-16k-0613`

|

||||

- `gpt-3.5-turbo-1106`

|

||||

- `gpt-3.5-turbo-0613`

|

||||

- `text-embedding-3-large`

|

||||

- `text-embedding-3-small`

|

||||

- `text-embedding-ada-002`

|

||||

|

||||

The Batch API also supports [fine-tuned models](/docs/guides/fine-tuning/what-models-can-be-fine-tuned).

|

||||

|

||||

## Rate Limits

|

||||

|

||||

Batch API rate limits are separate from existing per-model rate limits. The Batch API has two new types of rate limits:

|

||||

|

||||

1. **Per-batch limits:** A single batch may include up to 50,000 requests, and a batch input file can be up to 100 MB in size. Note that `/v1/embeddings` batches are also restricted to a maximum of 50,000 embedding inputs across all requests in the batch.

|

||||

2. **Enqueued prompt tokens per model:** Each model has a maximum number of enqueued prompt tokens allowed for batch processing. You can find these limits on the [Platform Settings page](/settings/organization/limits).

|

||||

|

||||

There are no limits for output tokens or number of submitted requests for the Batch API today. Because Batch API rate limits are a new, separate pool, **using the Batch API will not consume tokens from your standard per-model rate limits**, thereby offering you a convenient way to increase the number of requests and processed tokens you can use when querying our API.

|

||||

|

||||

## Batch Expiration

|

||||

|

||||

Batches that do not complete in time eventually move to an `expired` state; unfinished requests within that batch are cancelled, and any responses to completed requests are made available via the batch's output file. You will be charged for tokens consumed from any completed requests.

|

||||

|

||||

## Other Resources

|

||||

|

||||

For more concrete examples, visit **[the OpenAI Cookbook](https://cookbook.openai.com/examples/batch_processing)**, which contains sample code for use cases like classification, sentiment analysis, and summary generation.

|

||||

393

examples/data/oai_docs/changelog.txt

Normal file

393

examples/data/oai_docs/changelog.txt

Normal file

@ -0,0 +1,393 @@

|

||||

|

||||

# Changelog

|

||||

|

||||

Keep track of changes to the OpenAI API. You can also track changes via our [public OpenAPI specification](https://github.com/openai/openai-openapi) which is used to generate our SDKs, documentation, and more. This changelog is maintained in a best effort fashion and may not reflect all changes

|

||||

being made.

|

||||

|

||||

### Jun 6th, 2024

|

||||

|

||||

-

|

||||

<MarkdownLink

|

||||

href="/docs/guides/function-calling/parallel-function-calling"

|

||||

useSpan

|

||||

>

|

||||

Parallel function calling

|

||||

{" "}

|

||||

can be disabled in Chat Completions and the Assistants API by passing{" "}

|

||||

parallel_tool_calls=false.

|

||||

|

||||

-

|

||||

|

||||

.NET SDK

|

||||

{" "}

|

||||

launched in Beta.

|

||||

|

||||

|

||||

### Jun 3rd, 2024

|

||||

|

||||

-

|

||||

Added support for{" "}

|

||||

<MarkdownLink

|

||||

href="/docs/assistants/tools/file-search/customizing-file-search-settings"

|

||||

useSpan

|

||||

>

|

||||

file search customizations

|

||||

|

||||

.

|

||||

|

||||

|

||||

### May 15th, 2024

|

||||

|

||||

-

|

||||

Added support for{" "}

|

||||

|

||||

archiving projects

|

||||

|

||||

. Only organization owners can access this functionality.

|

||||

|

||||

-

|

||||

Added support for{" "}

|

||||

|

||||

setting cost limits

|

||||

{" "}

|

||||

on a per-project basis for pay as you go customers.

|

||||

|

||||

|

||||

### May 13th, 2024

|

||||

|

||||

-

|

||||

Released{" "}

|

||||

|

||||

GPT-4o

|

||||

{" "}

|

||||

in the API. GPT-4o is our fastest and most affordable flagship model.

|

||||

|

||||

|

||||

### May 9th, 2024

|

||||

|

||||

-

|

||||

Added support for{" "}

|

||||

<MarkdownLink

|

||||

href="/docs/assistants/how-it-works/creating-image-input-content"

|

||||

useSpan

|

||||

>

|

||||

image inputs to the Assistants API.

|

||||

|

||||

|

||||

|

||||

### May 7th, 2024

|

||||

|

||||

-

|

||||

Added support for{" "}

|

||||

|

||||

fine-tuned models to the Batch API

|

||||

|

||||

.

|

||||

|

||||

|

||||

### May 6th, 2024

|

||||

|

||||

-

|

||||

Added{" "}

|

||||

<MarkdownLink

|

||||

href="/docs/api-reference/chat/create#chat-create-stream_options"

|

||||

useSpan

|

||||

>

|

||||

{'`stream_options: {"include_usage": true}`'}

|

||||

{" "}

|

||||

parameter to the Chat Completions and Completions APIs. Setting this gives

|

||||

developers access to usage stats when using streaming.

|

||||

|

||||

|

||||

### May 2nd, 2024

|

||||

|

||||

-

|

||||

Added{" "}

|

||||

|

||||

{"a new endpoint"}

|

||||

{" "}

|

||||

to delete a message from a thread in the Assistants API.

|

||||

|

||||

|

||||

### Apr 29th, 2024

|

||||

|

||||

-

|

||||

Added a new{" "}

|

||||

<MarkdownLink

|

||||

href="/docs/guides/function-calling/function-calling-behavior"

|

||||

useSpan

|

||||

>

|

||||

function calling option `tool_choice: "required"`

|

||||

{" "}

|

||||

to the Chat Completions and Assistants APIs.

|

||||

|

||||

-

|

||||

Added a{" "}

|

||||

|

||||

guide for the Batch API

|

||||

{" "}

|

||||

and Batch API support for{" "}

|

||||

|

||||

embeddings models

|

||||

|

||||

|

||||

|

||||

### Apr 17th, 2024

|

||||

|

||||

-

|

||||

Introduced a{" "}

|

||||

|

||||

series of updates to the Assistants API

|

||||

|

||||

, including a new file search tool allowing up to 10,000 files per assistant, new token

|

||||

controls, and support for tool choice.

|

||||

|

||||

|

||||

### Apr 16th, 2024

|

||||

|

||||

-

|

||||

Introduced{" "}

|

||||

|

||||

project based hierarchy

|

||||

{" "}

|

||||

for organizing work by projects, including the ability to create{" "}

|

||||

API keys {" "}

|

||||

and manage rate and cost limits on a per-project basis (cost limits available only

|

||||

for Enterprise customers).

|

||||

|

||||

|

||||

### Apr 15th, 2024

|

||||

|

||||

-

|

||||

Released{" "}

|

||||

|

||||

Batch API

|

||||

|

||||

|

||||

|

||||

### Apr 9th, 2024

|

||||

|

||||

-

|

||||

Released{" "}

|

||||

|

||||

GPT-4 Turbo with Vision

|

||||

{" "}

|

||||

in general availability in the API

|

||||

|

||||

|

||||

### Apr 4th, 2024

|

||||

|

||||

-

|

||||

Added support for{" "}

|

||||

|

||||

seed

|

||||

{" "}

|

||||

in the fine-tuning API

|

||||

|

||||

-

|

||||

Added support for{" "}

|

||||

|

||||

checkpoints

|

||||

{" "}

|

||||

in the fine-tuning API

|

||||

|

||||

-

|

||||

Added support for{" "}

|

||||

<MarkdownLink

|

||||

href="/docs/api-reference/runs/createRun#runs-createrun-additional_messages"

|

||||

useSpan

|

||||

>

|

||||

adding Messages when creating a Run

|

||||

{" "}

|

||||

in the Assistants API

|

||||

|

||||

|

||||

### Apr 1st, 2024

|

||||

|

||||

-

|

||||

Added support for{" "}

|

||||

<MarkdownLink

|

||||

href="/docs/api-reference/messages/listMessages#messages-listmessages-run_id"

|

||||

useSpan

|

||||

>

|

||||

filtering Messages by run_id

|

||||

{" "}

|

||||

in the Assistants API

|

||||

|

||||

|

||||

### Mar 29th, 2024

|

||||

|

||||

-

|

||||

Added support for{" "}

|

||||

<MarkdownLink

|

||||

href="/docs/api-reference/runs/createRun#runs-createrun-temperature"

|

||||

useSpan

|

||||

>

|

||||

temperature

|

||||

{" "}

|

||||

and{" "}

|

||||

<MarkdownLink

|

||||

href="/docs/api-reference/messages/createMessage#messages-createmessage-role"

|

||||

useSpan

|

||||

>

|

||||

assistant message creation

|

||||

{" "}

|

||||

in the Assistants API

|

||||

|

||||

|

||||

### Mar 14th, 2024

|

||||

|

||||

-

|

||||

Added support for{" "}

|

||||

|

||||

streaming

|

||||

{" "}

|

||||

in the Assistants API

|

||||

|

||||

|

||||

### Feb 9th, 2024

|

||||

|

||||

-

|

||||

Added

|

||||

|

||||

{" "}

|

||||

timestamp_granularities parameter

|

||||

to the Audio API

|

||||

|

||||

|

||||

### Feb 1st, 2024

|

||||

|

||||

-

|

||||

Released

|

||||

|

||||

{" "}

|

||||

gpt-3.5-turbo-0125, an updated GPT-3.5 Turbo model

|

||||

|

||||

|

||||

|

||||

### Jan 25th, 2024

|

||||

|

||||

-

|

||||

Released

|

||||

|

||||

{" "}

|

||||

embedding V3 models and an updated GPT-4 Turbo preview

|

||||

|

||||

|

||||

-

|

||||

Added

|

||||

<MarkdownLink

|

||||

href="/docs/api-reference/embeddings/create#embeddings-create-dimensions"

|

||||

useSpan

|

||||

>

|

||||

{" "}

|

||||

dimensions parameter

|

||||

to the Embeddings API

|

||||

|

||||

|

||||

### Dec 20th, 2023

|

||||

|

||||

-

|

||||

Added

|

||||

<MarkdownLink

|

||||

href="/docs/api-reference/runs/createRun#runs-createrun-additional_instructions"

|

||||

useSpan

|

||||

>

|

||||

{" "}

|

||||

additional_instructions parameter

|

||||

to run creation in the Assistants API

|

||||

|

||||

|

||||

### Dec 15th, 2023

|

||||

|

||||

-

|

||||

Added

|

||||

|

||||

{" "}

|

||||

logprobs and

|

||||

top_logprobs

|

||||

parameters

|

||||

to the Chat Completions API

|

||||

|

||||

|

||||

### Dec 14th, 2023

|

||||

|

||||

-

|

||||

Changed{" "}

|

||||

|

||||

function parameters

|

||||

{" "}

|

||||

argument on a tool call to be optional

|

||||

|

||||

|

||||

### Nov 30th, 2023

|

||||

|

||||

-

|

||||

Released{" "}

|

||||

|

||||

OpenAI Deno SDK

|

||||

|

||||

|

||||

|

||||

### Nov 6th, 2023

|

||||

|

||||

-

|

||||

Released{" "}

|

||||

|

||||

GPT-4 Turbo Preview

|

||||

|

||||

,

|

||||

updated GPT-3.5 Turbo

|

||||

,

|

||||

GPT-4 Turbo with Vision

|

||||

,

|

||||

Assistants API

|

||||

,

|

||||

DALL·E 3 in the API

|

||||

, and

|

||||

text-to-speech API

|

||||

|

||||

|

||||

-

|

||||

Deprecated the Chat Completions functions{" "}

|

||||

parameter{" "}

|

||||

|

||||

in favor of

|

||||

tools

|

||||

{" "}

|

||||

|

||||

-

|

||||

Released{" "}

|

||||

|

||||

OpenAI Python SDK V1.0

|

||||

|

||||

|

||||

|

||||

### Oct 16th, 2023

|

||||

|

||||

-

|

||||

Added

|

||||

<MarkdownLink

|

||||

href="/docs/api-reference/embeddings/create#embeddings-create-encoding_format"

|

||||

useSpan

|

||||

>

|

||||

{" "}

|

||||

encoding_format parameter

|

||||

to the Embeddings API

|

||||

|

||||

-

|

||||

Added max_tokens to the{" "}

|

||||

|

||||

Moderation models

|

||||

|

||||

|

||||

|

||||

### Oct 6th, 2023

|

||||

|

||||

-

|

||||

Added{" "}

|

||||

|

||||

function calling support

|

||||

{" "}

|

||||

to the Fine-tuning API

|

||||

|

||||

560

examples/data/oai_docs/crawl-website-embeddings.txt

Normal file

560

examples/data/oai_docs/crawl-website-embeddings.txt

Normal file

@ -0,0 +1,560 @@

|

||||

|

||||

# How to build an AI that can answer questions about your website

|

||||

|

||||

This tutorial walks through a simple example of crawling a website (in this example, the OpenAI website), turning the crawled pages into embeddings using the [Embeddings API](/docs/guides/embeddings), and then creating a basic search functionality that allows a user to ask questions about the embedded information. This is intended to be a starting point for more sophisticated applications that make use of custom knowledge bases.

|

||||

|

||||

# Getting started

|

||||

|

||||

Some basic knowledge of Python and GitHub is helpful for this tutorial. Before diving in, make sure to [set up an OpenAI API key](/docs/api-reference/introduction) and walk through the [quickstart tutorial](/docs/quickstart). This will give a good intuition on how to use the API to its full potential.

|

||||

|

||||

Python is used as the main programming language along with the OpenAI, Pandas, transformers, NumPy, and other popular packages. If you run into any issues working through this tutorial, please ask a question on the [OpenAI Community Forum](https://community.openai.com).

|

||||

|

||||

To start with the code, clone the [full code for this tutorial on GitHub](https://github.com/openai/web-crawl-q-and-a-example). Alternatively, follow along and copy each section into a Jupyter notebook and run the code step by step, or just read along. A good way to avoid any issues is to set up a new virtual environment and install the required packages by running the following commands:

|

||||

|

||||

```bash

|

||||

python -m venv env

|

||||

|

||||

source env/bin/activate

|

||||

|

||||

pip install -r requirements.txt

|

||||

```

|

||||

|

||||

## Setting up a web crawler

|

||||

|

||||

The primary focus of this tutorial is the OpenAI API so if you prefer, you can skip the context on how to create a web crawler and just [download the source code](https://github.com/openai/web-crawl-q-and-a-example). Otherwise, expand the section below to work through the scraping mechanism implementation.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

<Image

|

||||

png="https://cdn.openai.com/API/docs/images/tutorials/web-qa/DALL-E-coding-a-web-crawling-system-pixel-art.png"

|

||||

webp="https://cdn.openai.com/API/docs/images/tutorials/web-qa/DALL-E-coding-a-web-crawling-system-pixel-art.webp"

|

||||

alt="DALL-E: Coding a web crawling system pixel art"

|

||||

width="1024"

|

||||

height="1024"

|

||||

/>

|

||||

|

||||

|

||||

|

||||

Acquiring data in text form is the first step to use embeddings. This tutorial

|

||||

creates a new set of data by crawling the OpenAI website, a technique that you

|

||||

can also use for your own company or personal website.

|

||||

|

||||

|

||||

<Button

|

||||

size="small"

|

||||

color={ButtonColor.neutral}

|

||||

href="https://github.com/openai/web-crawl-q-and-a-example"

|

||||

target="_blank"

|

||||

>

|

||||

View source code

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

While this crawler is written from scratch, open source packages like [Scrapy](https://github.com/scrapy/scrapy) can also help with these operations.

|

||||

|

||||

This crawler will start from the root URL passed in at the bottom of the code below, visit each page, find additional links, and visit those pages as well (as long as they have the same root domain). To begin, import the required packages, set up the basic URL, and define a HTMLParser class.

|

||||

|

||||

```python

|

||||

import requests

|

||||

import re

|

||||

import urllib.request

|

||||

from bs4 import BeautifulSoup

|

||||

from collections import deque

|

||||

from html.parser import HTMLParser

|

||||

from urllib.parse import urlparse

|

||||

import os

|

||||

|

||||

# Regex pattern to match a URL

|

||||

HTTP_URL_PATTERN = r'^http[s]*://.+'

|

||||

|

||||

domain = "openai.com" # <- put your domain to be crawled

|

||||

full_url = "https://openai.com/" # <- put your domain to be crawled with https or http

|

||||

|

||||

# Create a class to parse the HTML and get the hyperlinks

|

||||

class HyperlinkParser(HTMLParser):

|

||||

def __init__(self):

|

||||

super().__init__()

|

||||

# Create a list to store the hyperlinks

|

||||

self.hyperlinks = []

|

||||

|

||||

# Override the HTMLParser's handle_starttag method to get the hyperlinks

|

||||

def handle_starttag(self, tag, attrs):

|

||||

attrs = dict(attrs)

|

||||

|

||||

# If the tag is an anchor tag and it has an href attribute, add the href attribute to the list of hyperlinks

|

||||

if tag == "a" and "href" in attrs:

|

||||

self.hyperlinks.append(attrs["href"])

|

||||

```

|

||||

|

||||

The next function takes a URL as an argument, opens the URL, and reads the HTML content. Then, it returns all the hyperlinks found on that page.

|

||||

|

||||

```python

|

||||

# Function to get the hyperlinks from a URL

|

||||

def get_hyperlinks(url):

|

||||

|

||||

# Try to open the URL and read the HTML

|

||||

try:

|

||||

# Open the URL and read the HTML

|

||||

with urllib.request.urlopen(url) as response:

|

||||

|

||||

# If the response is not HTML, return an empty list

|

||||

if not response.info().get('Content-Type').startswith("text/html"):

|

||||

return []

|

||||

|

||||

# Decode the HTML

|

||||

html = response.read().decode('utf-8')

|

||||

except Exception as e:

|

||||

print(e)

|

||||

return []

|

||||

|

||||

# Create the HTML Parser and then Parse the HTML to get hyperlinks

|

||||

parser = HyperlinkParser()

|

||||

parser.feed(html)

|

||||

|

||||

return parser.hyperlinks

|

||||

```

|

||||

|

||||

The goal is to crawl through and index only the content that lives under the OpenAI domain. For this purpose, a function that calls the `get_hyperlinks` function but filters out any URLs that are not part of the specified domain is needed.

|

||||

|

||||

```python

|

||||

# Function to get the hyperlinks from a URL that are within the same domain

|

||||

def get_domain_hyperlinks(local_domain, url):

|

||||

clean_links = []

|

||||

for link in set(get_hyperlinks(url)):

|

||||

clean_link = None

|

||||

|

||||

# If the link is a URL, check if it is within the same domain

|

||||

if re.search(HTTP_URL_PATTERN, link):

|

||||

# Parse the URL and check if the domain is the same

|

||||

url_obj = urlparse(link)

|

||||

if url_obj.netloc == local_domain:

|

||||

clean_link = link

|

||||

|

||||

# If the link is not a URL, check if it is a relative link

|

||||

else:

|

||||

if link.startswith("/"):

|

||||

link = link[1:]

|

||||

elif link.startswith("#") or link.startswith("mailto:"):

|

||||

continue

|

||||

clean_link = "https://" + local_domain + "/" + link

|

||||

|

||||

if clean_link is not None:

|

||||

if clean_link.endswith("/"):

|

||||

clean_link = clean_link[:-1]

|

||||

clean_links.append(clean_link)

|

||||

|

||||

# Return the list of hyperlinks that are within the same domain

|

||||

return list(set(clean_links))

|

||||

```

|

||||

|

||||

The `crawl` function is the final step in the web scraping task setup. It keeps track of the visited URLs to avoid repeating the same page, which might be linked across multiple pages on a site. It also extracts the raw text from a page without the HTML tags, and writes the text content into a local .txt file specific to the page.

|

||||

|

||||

```python

|

||||

def crawl(url):

|

||||

# Parse the URL and get the domain

|

||||

local_domain = urlparse(url).netloc

|

||||

|

||||

# Create a queue to store the URLs to crawl

|

||||

queue = deque([url])

|

||||

|

||||

# Create a set to store the URLs that have already been seen (no duplicates)

|

||||

seen = set([url])

|

||||

|

||||

# Create a directory to store the text files

|

||||

if not os.path.exists("text/"):

|

||||

os.mkdir("text/")

|

||||

|

||||

if not os.path.exists("text/"+local_domain+"/"):

|

||||

os.mkdir("text/" + local_domain + "/")

|

||||

|

||||

# Create a directory to store the csv files

|

||||

if not os.path.exists("processed"):

|

||||

os.mkdir("processed")

|

||||

|

||||

# While the queue is not empty, continue crawling

|

||||

while queue:

|

||||

|

||||

# Get the next URL from the queue

|

||||

url = queue.pop()

|

||||

print(url) # for debugging and to see the progress

|

||||

|

||||

# Save text from the url to a .txt file

|

||||

with open('text/'+local_domain+'/'+url[8:].replace("/", "_") + ".txt", "w", encoding="UTF-8") as f:

|

||||

|

||||

# Get the text from the URL using BeautifulSoup

|

||||

soup = BeautifulSoup(requests.get(url).text, "html.parser")

|

||||

|

||||

# Get the text but remove the tags

|

||||

text = soup.get_text()

|

||||

|

||||

# If the crawler gets to a page that requires JavaScript, it will stop the crawl

|

||||

if ("You need to enable JavaScript to run this app." in text):

|

||||

print("Unable to parse page " + url + " due to JavaScript being required")

|

||||

|

||||

# Otherwise, write the text to the file in the text directory

|

||||

f.write(text)

|

||||

|

||||

# Get the hyperlinks from the URL and add them to the queue

|

||||

for link in get_domain_hyperlinks(local_domain, url):

|

||||

if link not in seen:

|

||||

queue.append(link)

|

||||

seen.add(link)

|

||||

|

||||

crawl(full_url)

|

||||

```

|

||||

|

||||

The last line of the above example runs the crawler which goes through all the accessible links and turns those pages into text files. This will take a few minutes to run depending on the size and complexity of your site.

|

||||

|

||||

|

||||

|

||||

## Building an embeddings index

|

||||

|

||||

|

||||

|

||||

<Image

|

||||

png="https://cdn.openai.com/API/docs/images/tutorials/web-qa/DALL-E-woman-turning-a-stack-of-papers-into-numbers-pixel-art.png"

|

||||

webp="https://cdn.openai.com/API/docs/images/tutorials/web-qa/DALL-E-woman-turning-a-stack-of-papers-into-numbers-pixel-art.webp"

|

||||

alt="DALL-E: Woman turning a stack of papers into numbers pixel art"

|

||||

width="1024"

|

||||

height="1024"

|

||||

/>

|

||||

|

||||

|

||||

|

||||

CSV is a common format for storing embeddings. You can use this format with

|

||||

Python by converting the raw text files (which are in the text directory) into

|

||||

Pandas data frames. Pandas is a popular open source library that helps you

|

||||

work with tabular data (data stored in rows and columns).

|

||||

|

||||

|

||||

Blank empty lines can clutter the text files and make them harder to process.

|

||||

A simple function can remove those lines and tidy up the files.

|

||||

|

||||

|

||||

|

||||

|

||||

```python

|

||||

def remove_newlines(serie):

|

||||

serie = serie.str.replace('\n', ' ')

|

||||

serie = serie.str.replace('\\n', ' ')

|

||||

serie = serie.str.replace(' ', ' ')

|

||||

serie = serie.str.replace(' ', ' ')

|

||||

return serie

|

||||

```

|

||||

|

||||

Converting the text to CSV requires looping through the text files in the text directory created earlier. After opening each file, remove the extra spacing and append the modified text to a list. Then, add the text with the new lines removed to an empty Pandas data frame and write the data frame to a CSV file.

|

||||

|

||||

|

||||

Extra spacing and new lines can clutter the text and complicate the embeddings

|

||||

process. The code used here helps to remove some of them but you may find 3rd party

|

||||

libraries or other methods useful to get rid of more unnecessary characters.

|

||||

|

||||

|

||||

```python

|

||||

import pandas as pd

|

||||

|

||||

# Create a list to store the text files

|

||||

texts=[]

|

||||

|

||||

# Get all the text files in the text directory

|

||||

for file in os.listdir("text/" + domain + "/"):

|

||||

|

||||

# Open the file and read the text

|

||||

with open("text/" + domain + "/" + file, "r", encoding="UTF-8") as f:

|

||||

text = f.read()

|

||||

|

||||

# Omit the first 11 lines and the last 4 lines, then replace -, _, and #update with spaces.

|

||||

texts.append((file[11:-4].replace('-',' ').replace('_', ' ').replace('#update',''), text))

|

||||

|

||||

# Create a dataframe from the list of texts

|

||||

df = pd.DataFrame(texts, columns = ['fname', 'text'])

|

||||

|

||||

# Set the text column to be the raw text with the newlines removed

|

||||

df['text'] = df.fname + ". " + remove_newlines(df.text)

|

||||

df.to_csv('processed/scraped.csv')

|

||||

df.head()

|

||||

```

|

||||

|

||||

Tokenization is the next step after saving the raw text into a CSV file. This process splits the input text into tokens by breaking down the sentences and words. A visual demonstration of this can be seen by [checking out our Tokenizer](/tokenizer) in the docs.

|

||||

|

||||

> A helpful rule of thumb is that one token generally corresponds to ~4 characters of text for common English text. This translates to roughly ¾ of a word (so 100 tokens ~= 75 words).

|

||||

|

||||

The API has a limit on the maximum number of input tokens for embeddings. To stay below the limit, the text in the CSV file needs to be broken down into multiple rows. The existing length of each row will be recorded first to identify which rows need to be split.

|

||||

|

||||

```python

|

||||

import tiktoken

|

||||

|

||||

# Load the cl100k_base tokenizer which is designed to work with the ada-002 model

|

||||

tokenizer = tiktoken.get_encoding("cl100k_base")

|

||||

|

||||

df = pd.read_csv('processed/scraped.csv', index_col=0)

|

||||

df.columns = ['title', 'text']

|

||||

|

||||

# Tokenize the text and save the number of tokens to a new column

|

||||

df['n_tokens'] = df.text.apply(lambda x: len(tokenizer.encode(x)))

|

||||

|

||||

# Visualize the distribution of the number of tokens per row using a histogram

|

||||

df.n_tokens.hist()

|

||||

```

|

||||

|

||||

|

||||

|

||||

<img

|

||||

src="https://cdn.openai.com/API/docs/images/tutorials/web-qa/embeddings-initial-histrogram.png"

|

||||

alt="Embeddings histogram"

|

||||

width="553"

|

||||

height="413"

|

||||

/>

|

||||

|

||||

|

||||

|

||||

The newest embeddings model can handle inputs with up to 8191 input tokens so most of the rows would not need any chunking, but this may not be the case for every subpage scraped so the next code chunk will split the longer lines into smaller chunks.

|

||||

|

||||

```Python

|

||||

max_tokens = 500

|

||||

|

||||

# Function to split the text into chunks of a maximum number of tokens

|

||||

def split_into_many(text, max_tokens = max_tokens):

|

||||

|

||||

# Split the text into sentences

|

||||

sentences = text.split('. ')

|

||||

|

||||

# Get the number of tokens for each sentence

|

||||

n_tokens = [len(tokenizer.encode(" " + sentence)) for sentence in sentences]

|

||||

|

||||

chunks = []

|

||||

tokens_so_far = 0

|

||||

chunk = []

|

||||

|

||||

# Loop through the sentences and tokens joined together in a tuple

|

||||

for sentence, token in zip(sentences, n_tokens):

|

||||

|

||||

# If the number of tokens so far plus the number of tokens in the current sentence is greater

|

||||

# than the max number of tokens, then add the chunk to the list of chunks and reset

|

||||

# the chunk and tokens so far

|

||||

if tokens_so_far + token > max_tokens:

|

||||

chunks.append(". ".join(chunk) + ".")

|

||||

chunk = []

|

||||

tokens_so_far = 0

|

||||

|

||||

# If the number of tokens in the current sentence is greater than the max number of

|

||||

# tokens, go to the next sentence

|

||||

if token > max_tokens:

|

||||

continue

|

||||

|

||||

# Otherwise, add the sentence to the chunk and add the number of tokens to the total

|

||||

chunk.append(sentence)

|

||||

tokens_so_far += token + 1

|

||||

|

||||

return chunks

|

||||

|

||||

|

||||

shortened = []

|

||||

|

||||

# Loop through the dataframe

|

||||

for row in df.iterrows():

|

||||

|

||||

# If the text is None, go to the next row

|

||||

if row[1]['text'] is None:

|

||||

continue

|

||||

|

||||

# If the number of tokens is greater than the max number of tokens, split the text into chunks

|

||||

if row[1]['n_tokens'] > max_tokens:

|

||||

shortened += split_into_many(row[1]['text'])

|

||||

|

||||

# Otherwise, add the text to the list of shortened texts

|

||||

else:

|

||||

shortened.append( row[1]['text'] )

|

||||

```

|

||||

|

||||

Visualizing the updated histogram again can help to confirm if the rows were successfully split into shortened sections.

|

||||

|

||||

```python

|

||||

df = pd.DataFrame(shortened, columns = ['text'])

|

||||

df['n_tokens'] = df.text.apply(lambda x: len(tokenizer.encode(x)))

|

||||

df.n_tokens.hist()

|

||||

```

|

||||

|

||||

|

||||

|

||||

<img

|

||||

src="https://cdn.openai.com/API/docs/images/tutorials/web-qa/embeddings-tokenized-output.png"

|

||||