Mirror of https://github.com/hwchase17/langchain (synced 2024-11-10 01:10:59 +00:00)

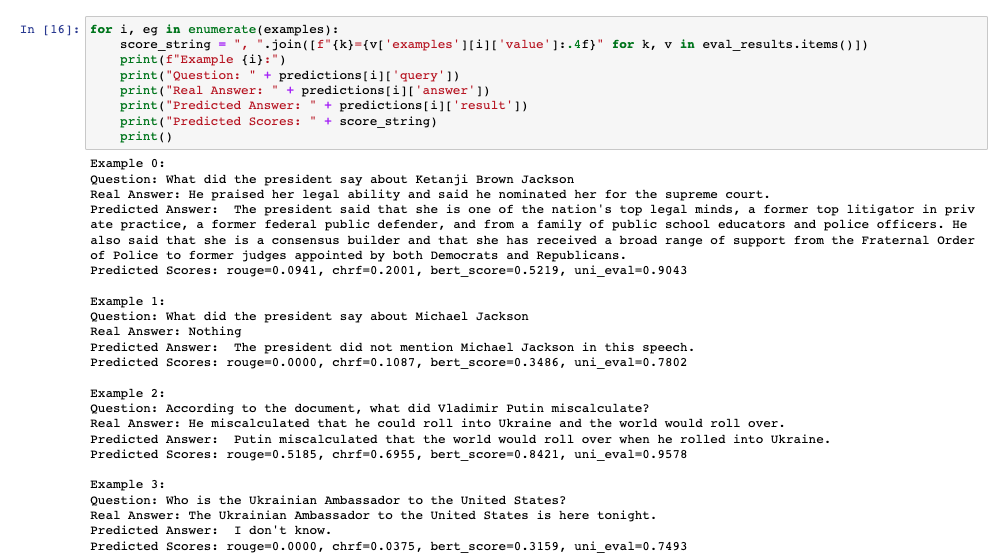

This PR adds additional evaluation metrics for data-augmented QA, producing a summary report of the scores at the end of the notebook. The score calculation is based on the [Critique](https://docs.inspiredco.ai/critique/) toolkit, an API-based toolkit (like OpenAI) with minimal dependencies, so it should be easy for people to run if they choose. The code could be simplified further by adding a chain that calls Critique directly, but that is probably best saved for another PR if necessary. Any comments or change requests are welcome!

| File |
|---|
| data_augmented_question_answering.ipynb |
| huggingface_datasets.ipynb |
| question_answering.ipynb |
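The kind of end-of-notebook report the PR description mentions can be sketched roughly as follows. This is a minimal illustration only, not the PR's code: it does not call Critique, and it substitutes two simple local stand-in metrics (exact match and token-overlap F1). The names `token_f1`, `exact_match`, and `build_report`, and the sample data, are all hypothetical.

```python
from collections import Counter
from statistics import mean


def exact_match(prediction: str, reference: str) -> float:
    """Return 1.0 if the answers match after trimming and lowercasing, else 0.0."""
    return float(prediction.strip().lower() == reference.strip().lower())


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between a predicted and a reference answer.

    A stand-in metric for illustration; the PR itself obtains its scores
    from the Critique API rather than computing them locally.
    """
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match; exactly one empty counts as a miss.
        return float(pred_tokens == ref_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def build_report(predictions, references, metrics):
    """Average each metric over all (prediction, reference) pairs."""
    return {
        name: mean(fn(p, r) for p, r in zip(predictions, references))
        for name, fn in metrics.items()
    }


# Hypothetical model outputs and gold answers.
predictions = ["Paris", "the blue whale"]
references = ["Paris", "blue whale"]

report = build_report(
    predictions,
    references,
    {"exact_match": exact_match, "token_f1": token_f1},
)
for name, score in report.items():
    print(f"{name}: {score:.3f}")
```

Keeping the metrics in a dictionary of callables means an API-backed scorer could be swapped in later without changing how the report is assembled.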