Mirror of https://github.com/hwchase17/langchain (synced 2024-11-08 07:10:35 +00:00)

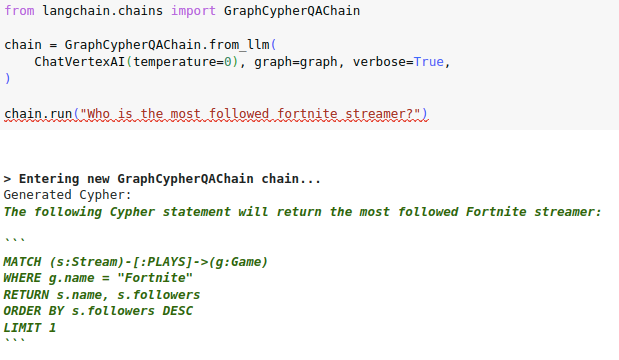

# Check if generated Cypher code is wrapped in backticks

Some LLMs, such as VertexAI, like to explain how they generated the Cypher statement and wrap the actual code in three backticks. I have observed a similar pattern with OpenAI chat models in conversational settings, where multiple user and assistant messages are provided to the LLM to generate Cypher statements and the LLM then apologizes for previous steps or explains its reasoning. Interestingly, both OpenAI and VertexAI wrap the code in three backticks whenever they explain or apologize. Checking whether the generated Cypher is wrapped in backticks seems like low-hanging fruit for extending the Cypher search to other LLMs and conversational settings.
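The check described above can be illustrated with a small regular-expression helper. This is only a sketch under stated assumptions: the name `extract_cypher_sketch` and the constant `FENCE` are hypothetical, and the code is not claimed to match the library's own `extract_cypher` line for line. It returns the content of the first triple-backtick block if one exists, and otherwise returns the text unchanged.

```python
import re

# Three backticks, built programmatically so this example does not
# contain a literal fence of its own.
FENCE = "`" * 3

# Non-greedy match of anything between two fences; DOTALL lets the
# query span multiple lines.
_FENCED_BLOCK = re.compile(rf"{FENCE}(.*?){FENCE}", re.DOTALL)


def extract_cypher_sketch(text: str) -> str:
    """Hypothetical helper: return the first fenced block, else the text as-is."""
    match = _FENCED_BLOCK.search(text)
    return match.group(1) if match else text


# No backticks: the input is returned unchanged.
plain = extract_cypher_sketch("MATCH (n) RETURN n")

# An apology plus a fenced query: only the query is returned.
wrapped = extract_cypher_sketch(
    f"Sorry about the earlier mistake. Try: {FENCE}MATCH (n) RETURN n{FENCE}"
)
```

Returning the input untouched when no fence is found keeps the behaviour unchanged for models that already emit bare Cypher.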

16 lines

541 B

Python


from langchain.chains.graph_qa.cypher import extract_cypher


def test_no_backticks() -> None:
    """Test if there are no backticks, so the original text should be returned."""
    query = "MATCH (n) RETURN n"
    output = extract_cypher(query)
    assert output == query


def test_backticks() -> None:
    """Test if there are backticks. Query from within backticks should be returned."""
    query = "You can use the following query: ```MATCH (n) RETURN n```"
    output = extract_cypher(query)
    assert output == "MATCH (n) RETURN n"