### Description

This PR moves the Elasticsearch classes to a partners package.

Note that we will not move `ElasticKnnSearch`, which was previously deprecated and will be removed later.

`ElasticVectorSearch` is going to stay in the community package since it is still widely used.

Also note that I left the `ElasticsearchTranslator` for self-query untouched because it resides in the main `langchain` package.

### Dependencies

There will be another PR that updates the notebooks (potentially pulling them into the partners package) and the templates, and removes the classes from the community package. See https://github.com/langchain-ai/langchain/pull/17468

#### Open question

How do we make the transition smooth for users? Do we move the import aliases and require people to install `langchain-elasticsearch`? Or do we remove the import aliases from the `langchain` package altogether?

What has worked well for other partner packages?
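For reference, the first option (keeping an alias that prefers the partner package) could look roughly like the sketch below: try the partner package, and if it is not installed, warn and fall back to the community class. This is a minimal sketch under assumptions; `import_with_fallback` is a hypothetical helper, not an existing LangChain API, and the warning text is a placeholder.

```python
import importlib
import warnings
from typing import Any


def import_with_fallback(name: str, preferred: str, fallback: str) -> Any:
    """Return attribute `name` from module `preferred`, else from `fallback`.

    Hypothetical helper: when the preferred (partner) package is missing,
    emit a DeprecationWarning nudging users to install it, then fall back.
    """
    try:
        module = importlib.import_module(preferred)
    except ImportError:
        warnings.warn(
            f"{name} will be imported from {preferred!r} in the future; "
            f"falling back to {fallback!r} for now.",
            DeprecationWarning,
            stacklevel=2,
        )
        module = importlib.import_module(fallback)
    return getattr(module, name)
```

A call such as `import_with_fallback("ElasticsearchStore", "langchain_elasticsearch", "langchain_community.vectorstores")` would then keep old imports working while pointing users at the new package.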

---------

Co-authored-by: Erick Friis <erick@langchain.dev>

**Description**

Adding different threshold types to the semantic chunker. I’ve had much better and more predictable performance when using standard deviations instead of percentiles.

For all the documents I’ve tried, the distribution of distances looks similar to the above: a positively skewed normal distribution. All skews I’ve seen are less than 1, which explains why standard deviations perform well, but I’ve included IQR if anyone wants something more robust.
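The three threshold types can be sketched as different ways of turning the list of sentence-to-sentence distances into a cutoff. This is a standalone illustration, not the chunker's code: the function names are hypothetical, `distances[i]` is assumed to be the distance between sentence `i` and `i + 1`, and the default amounts (95th percentile, 3 standard deviations, 1.5 × IQR) are assumptions.

```python
import statistics
from typing import List, Optional

# Assumed default amount per threshold type.
DEFAULT_AMOUNTS = {"percentile": 95.0, "standard_deviation": 3.0, "interquartile": 1.5}


def percentile(values: List[float], q: float) -> float:
    """Percentile with linear interpolation (NumPy's default convention)."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100
    lower = int(pos)
    upper = min(lower + 1, len(ordered) - 1)
    return ordered[lower] + (ordered[upper] - ordered[lower]) * (pos - lower)


def breakpoint_threshold(
    distances: List[float], kind: str = "percentile", amount: Optional[float] = None
) -> float:
    """Distance above which a new chunk starts."""
    if kind not in DEFAULT_AMOUNTS:
        raise ValueError(f"unknown threshold type: {kind!r}")
    if amount is None:
        amount = DEFAULT_AMOUNTS[kind]
    if kind == "percentile":
        return percentile(distances, amount)
    if kind == "standard_deviation":
        # mean + k * population standard deviation
        return statistics.mean(distances) + amount * statistics.pstdev(distances)
    # interquartile: mean + k * (Q3 - Q1); the IQR is a spread estimate
    # that is less sensitive to outliers than the standard deviation
    iqr = percentile(distances, 75) - percentile(distances, 25)
    return statistics.mean(distances) + amount * iqr


def breakpoints(
    distances: List[float], kind: str = "percentile", amount: Optional[float] = None
) -> List[int]:
    """Indices i where the gap between sentence i and i + 1 exceeds the threshold."""
    threshold = breakpoint_threshold(distances, kind, amount)
    return [i for i, d in enumerate(distances) if d > threshold]
```

With a skew below 1, `mean + k * std` behaves much like a fixed percentile, which is consistent with the observation above that standard deviations work well.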

Also, by using the percentile method in reverse, you can declare the number of clusters and use semantic chunking to get an ‘optimal’ split.
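Running the percentile method in reverse amounts to choosing the cutoff so that exactly `n_chunks - 1` distances lie above it. Rather than searching for the matching percentile, the sketch below picks the `n_chunks - 1` largest distances directly. It assumes `distances[i]` is the distance between sentence `i` and `i + 1`; both function names are hypothetical.

```python
from typing import List, Sequence


def breakpoints_for_n_chunks(distances: Sequence[float], n_chunks: int) -> List[int]:
    """Indices of the n_chunks - 1 largest distances, in document order."""
    if not 1 <= n_chunks <= len(distances) + 1:
        raise ValueError("n_chunks must be between 1 and len(distances) + 1")
    by_distance = sorted(range(len(distances)), key=lambda i: distances[i], reverse=True)
    return sorted(by_distance[: n_chunks - 1])


def split_at(sentences: Sequence[str], breakpoints: List[int]) -> List[List[str]]:
    """Close a chunk after each breakpoint sentence index."""
    chunks, start = [], 0
    for i in breakpoints:
        chunks.append(list(sentences[start : i + 1]))
        start = i + 1
    chunks.append(list(sentences[start:]))
    return chunks


sentences = ["a", "b", "c", "d", "e", "f"]
distances = [0.1, 0.9, 0.2, 0.8, 0.3]
print(split_at(sentences, breakpoints_for_n_chunks(distances, 3)))
# [['a', 'b'], ['c', 'd'], ['e', 'f']]
```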

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

- **Description:** By default it expects a list, but that is not the case in corner scenarios where no documents have been ingested yet (use case: application bootstrap). Hence I added a check: if the instance is a pandas DataFrame instead of a list, it returns immediately.

- **Issue:** NA

- **Dependencies:** NA

- **Twitter handle:** jaskiratsingh1

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

## Description & Issue

While following the official docs to use ClickHouse as a vector store, I found that only the default `annoy` index is properly supported. I wanted to try another engine, `usearch`, because `annoy` is not properly supported on ARM platforms.

Here are the settings I prefer:

```python
from langchain_community.vectorstores import ClickhouseSettings

settings = ClickhouseSettings(
    table="wiki_Ethereum",
    index_type="usearch",  # annoy by default
    index_param=[],
)
```

The above settings do not work because the command `set allow_experimental_annoy_index=1` is hard-coded.

This PR makes the experimental-feature setting follow `index_type`, which is also consistent with ClickHouse's naming conventions.
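The idea can be sketched as deriving the setting name from `index_type` instead of hard-coding `annoy`, following ClickHouse's `allow_experimental_<type>_index` naming (e.g. `allow_experimental_usearch_index`, assuming your ClickHouse version ships that index type). `experimental_index_setting` is a hypothetical helper, not the code from this PR.

```python
def experimental_index_setting(index_type: str) -> str:
    """Build the SET statement that enables an experimental ClickHouse index.

    Hypothetical helper: derives the setting from index_type so that
    "usearch" no longer receives the hard-coded annoy setting.
    """
    # Reject anything that is not a plain name before interpolating into SQL.
    if not index_type.isidentifier():
        raise ValueError(f"unexpected index_type: {index_type!r}")
    return f"SET allow_experimental_{index_type}_index=1"
```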

**Description:** Update the example Fiddler notebook to use the community path instead of langchain.callback.

**Dependencies:** None

**Twitter handle:** @bhalder

Co-authored-by: Barun Halder <barun@fiddler.ai>

h/t @hinthornw

Thank you for contributing to LangChain!

- [ ] **PR title**: "package: description"
  - Where "package" is whichever of langchain, community, core, experimental, etc. is being modified. Use "docs: ..." for purely docs changes, "templates: ..." for template changes, "infra: ..." for CI changes.
  - Example: "community: add foobar LLM"

- [ ] **PR message**: ***Delete this entire checklist*** and replace with
  - **Description:** a description of the change
  - **Issue:** the issue # it fixes, if applicable
  - **Dependencies:** any dependencies required for this change
  - **Twitter handle:** if your PR gets announced, and you'd like a mention, we'll gladly shout you out!

- [ ] **Add tests and docs**: If you're adding a new integration, please include
  1. a test for the integration, preferably unit tests that do not rely on network access,
  2. an example notebook showing its use. It lives in `docs/docs/integrations` directory.

- [ ] **Lint and test**: Run `make format`, `make lint` and `make test` from the root of the package(s) you've modified. See contribution guidelines for more: https://python.langchain.com/docs/contributing/

Additional guidelines:

- Make sure optional dependencies are imported within a function.
- Please do not add dependencies to pyproject.toml files (even optional ones) unless they are required for unit tests.
- Most PRs should not touch more than one package.
- Changes should be backwards compatible.
- If you are adding something to community, do not re-import it in langchain.

If no one reviews your PR within a few days, please @-mention one of baskaryan, efriis, eyurtsev, hwchase17.


Avoids a deprecation warning that was triggered at import time, e.g. with `python -c 'import langchain.smith'`:

```
/opt/venv/lib/python3.12/site-packages/langchain/callbacks/__init__.py:37: LangChainDeprecationWarning: Importing this callback from langchain is deprecated. Importing it from langchain will no longer be supported as of langchain==0.2.0. Please import from langchain-community instead:

`from langchain_community.callbacks import base`.

To install langchain-community run `pip install -U langchain-community`.
```
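To check that an import stays quiet, one option is to record warnings while importing the module. This is a minimal standard-library sketch; `import_warnings` is a hypothetical helper. Run it in a fresh interpreter (as with `python -c`), because a module already in `sys.modules` is not re-executed and will not warn again.

```python
import importlib
import warnings
from typing import List, Type


def import_warnings(module_name: str) -> List[Type[Warning]]:
    """Import `module_name` and return the categories of warnings it emitted."""
    with warnings.catch_warnings(record=True) as caught:
        # Record every warning, including ones Python hides by default
        # (e.g. DeprecationWarning triggered outside __main__).
        warnings.simplefilter("always")
        importlib.import_module(module_name)
    return [w.category for w in caught]
```

Assuming `LangChainDeprecationWarning` subclasses `DeprecationWarning`, a check like `not any(issubclass(c, DeprecationWarning) for c in import_warnings("langchain.smith"))` should hold after this change.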

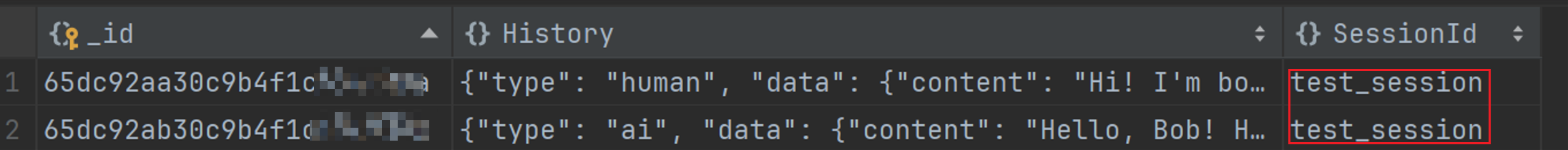

I tried to configure `MongoDBChatMessageHistory` with the code from the original documentation so that messages would be stored in MongoDB under the passed `session_id`. However, this configuration did not take effect, and the session ID in the database remained `test_session`. To resolve this, I found that `MongoDBChatMessageHistory` must be configured with `session_id=session_id` instead of `session_id="test_session"`.

Issue: DOC: Ineffective Configuration of MongoDBChatMessageHistory for Custom session_id Storage
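The root cause is that `RunnableWithMessageHistory` calls the history factory with the `session_id` taken from `config["configurable"]`, so a factory that ignores its argument always returns the same history. The dependency-free sketch below shows just that mechanism; `FakeHistory` and `get_history` are hypothetical stand-ins for `MongoDBChatMessageHistory` and the runnable's internal lookup, not LangChain code.

```python
from typing import Callable, Dict


class FakeHistory:
    """Stand-in for MongoDBChatMessageHistory: just remembers its session id."""

    def __init__(self, session_id: str) -> None:
        self.session_id = session_id


def get_history(factory: Callable[[str], FakeHistory], config: Dict) -> FakeHistory:
    # Mimics handing the configured session id to the factory.
    return factory(config["configurable"]["session_id"])


def buggy_factory(session_id: str) -> FakeHistory:
    return FakeHistory(session_id="test_session")  # ignores its argument


def fixed_factory(session_id: str) -> FakeHistory:
    return FakeHistory(session_id=session_id)


config = {"configurable": {"session_id": "mmm"}}
print(get_history(buggy_factory, config).session_id)  # test_session
print(get_history(fixed_factory, config).session_id)  # mmm
```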

Previous code:

```python
chain_with_history = RunnableWithMessageHistory(
    chain,
    lambda session_id: MongoDBChatMessageHistory(
        session_id="test_session",  # bug: ignores the session_id argument
        connection_string="mongodb://localhost:27017",  # replace with your MongoDB URI
        database_name="my_db",
        collection_name="chat_histories",
    ),
    input_messages_key="question",
    history_messages_key="history",
)

config = {"configurable": {"session_id": "mmm"}}
chain_with_history.invoke({"question": "Hi! I'm bob"}, config)
```

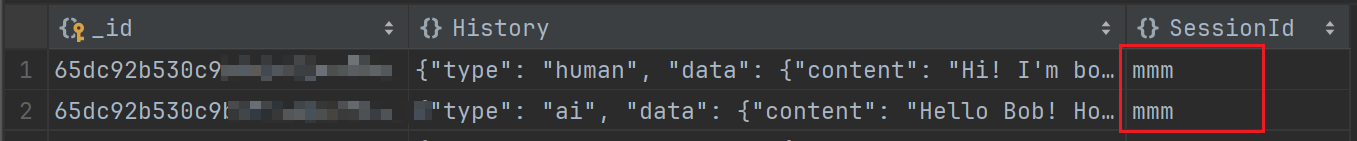

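Modified code:

```python
from langchain_community.chat_message_histories import MongoDBChatMessageHistory
from langchain_core.runnables.history import RunnableWithMessageHistory

# `chain` is assumed to be defined earlier (e.g. prompt | model), as in the docs.
chain_with_history = RunnableWithMessageHistory(
    chain,
    lambda session_id: MongoDBChatMessageHistory(
        session_id=session_id,  # use the session_id passed in via the config
        connection_string="mongodb://localhost:27017",  # replace with your MongoDB URI
        database_name="my_db",
        collection_name="chat_histories",
    ),
    input_messages_key="question",
    history_messages_key="history",
)

config = {"configurable": {"session_id": "mmm"}}
chain_with_history.invoke({"question": "Hi! I'm bob"}, config)
```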

Effect after modification (it works):

These packages have moved to https://github.com/langchain-ai/langchain-google

Left tombstone READMEs in case anyone ends up at the "Source Code" link from old PyPI releases. We can keep these around for a few months.

- **Description:** Introduce a new parameter `graph_kwargs` to `RdfGraph`: parameters used to initialize the `rdflib.Graph` if `query_endpoint` is set. Also, do not set `rdflib.graph.DATASET_DEFAULT_GRAPH_ID` as the default value for the `rdflib.Graph` `identifier` if `query_endpoint` is set.

- **Issue:** N/A

- **Dependencies:** N/A

- **Twitter handle:** N/A

- **Description:** I encountered this error when I tried to use `LLMChainFilter`. If the model's answer differs even slightly from the expected output, e.g. `Not relevant (NO)`, it results in an error. It has already been reported here: https://github.com/langchain-ai/langchain/issues/. This change hopefully makes the parsing more robust.

- **Issue:** #11408

- **Dependencies:** No

- **Twitter handle:** dokatox
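One way to make the parsing tolerant is to search the reply for whole-word `YES`/`NO` tokens instead of requiring an exact match. This is a sketch under that assumption, not necessarily the exact patch; `parse_relevance` is a hypothetical name and `YES`/`NO` are assumed to be the expected answers. The word boundaries keep `Not` from being read as `NO`.

```python
import re


def parse_relevance(text: str, true_val: str = "YES", false_val: str = "NO") -> bool:
    """Map a free-form reply such as "Not relevant (NO)" to a boolean."""
    pattern = rf"\b({re.escape(true_val)}|{re.escape(false_val)})\b"
    found = {m.upper() for m in re.findall(pattern, text, flags=re.IGNORECASE)}
    if true_val.upper() in found and false_val.upper() in found:
        raise ValueError(f"Ambiguous response containing both {true_val} and {false_val}: {text!r}")
    if true_val.upper() in found:
        return True
    if false_val.upper() in found:
        return False
    raise ValueError(f"Expected {true_val} or {false_val}, got: {text!r}")
```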

**Description:** Update the Azure Search notebook to have more descriptive comments and an option to choose between OpenAI and AzureOpenAI embeddings.

---------

Co-authored-by: Matt Gotteiner <[email protected]>

Co-authored-by: Bagatur <baskaryan@gmail.com>

**Description:** Llama Guard has been deprecated on the Anyscale public endpoint.

**Issue:** Change the default model and remove the limitation of only using Llama Guard with Anyscale LLMs. The Anyscale LLM also works with all other chat models hosted on Anyscale.

Also added `async_client` for the Anyscale LLM.

**Description:** Callback handler to integrate Fiddler with LangChain.

This PR adds the following:

1. `FiddlerCallbackHandler` implementation into langchain/community

2. Example notebook `fiddler.ipynb` for usage documentation

[Internal Tracker : FDL-14305]

**Issue:**

NA

**Dependencies:**

- Installation of langchain-community is unaffected.

- Usage of `FiddlerCallbackHandler` requires installing the latest fiddler-client (2.5+).

**Twitter handle:** @fiddlerlabs @behalder

Co-authored-by: Barun Halder <barun@fiddler.ai>

- **Description:** Fix outdated imports after the llama index v0.10 update, and update metadata and source text access.

- **Issue:** #17860

- **Twitter handle:** @maximeperrin_

---------

Co-authored-by: Maxime Perrin <mperrin@doing.fr>

- **Description:**
  - Add a `DocumentManager` class, which is a NoSQL record manager.
  - To use `index` and `aindex` in `libs/langchain/langchain/indexes/_api.py`, `DocumentManager` inherits from `RecordManager`.
  - Also added a MongoDB implementation of `DocumentManager`.

- **Dependencies:** pymongo, motor
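For context, a record manager tracks which document keys were written, when, and under which group (source) ID; `index`/`aindex` rely on that bookkeeping to skip unchanged documents and clean up stale ones. The sketch below is an in-memory, dict-backed illustration only. The method names echo `RecordManager` (`get_time`, `update`, `exists`, `list_keys`, `delete_keys`), but the signatures are simplified, and `InMemoryDocumentRecords` is hypothetical, not the `DocumentManager` added in this PR.

```python
import time
from typing import Dict, List, Optional, Sequence, Tuple


class InMemoryDocumentRecords:
    """Hypothetical dict-backed record store: key -> (updated_at, group_id)."""

    def __init__(self) -> None:
        self._records: Dict[str, Tuple[float, Optional[str]]] = {}

    def get_time(self) -> float:
        return time.time()

    def update(
        self, keys: Sequence[str], group_ids: Optional[Sequence[Optional[str]]] = None
    ) -> None:
        """Upsert keys, stamping them with the current time and their group."""
        if group_ids is None:
            group_ids = [None] * len(keys)
        if len(group_ids) != len(keys):
            raise ValueError("keys and group_ids must have the same length")
        now = self.get_time()
        for key, group_id in zip(keys, group_ids):
            self._records[key] = (now, group_id)

    def exists(self, keys: Sequence[str]) -> List[bool]:
        return [key in self._records for key in keys]

    def list_keys(
        self, before: Optional[float] = None, group_ids: Optional[Sequence[str]] = None
    ) -> List[str]:
        """Keys updated before `before` and/or belonging to `group_ids`."""
        return [
            key
            for key, (updated_at, group_id) in self._records.items()
            if (before is None or updated_at < before)
            and (group_ids is None or group_id in group_ids)
        ]

    def delete_keys(self, keys: Sequence[str]) -> None:
        for key in keys:
            self._records.pop(key, None)
```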


---------

Co-authored-by: Eugene Yurtsev <eyurtsev@gmail.com>

This PR updates `RunnableWithMessageHistory` to use `add_messages`, which will save round-trips for any chat history abstractions that implement the optimization. If the optimization isn't implemented, `add_messages` automatically invokes `add_message` serially.
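The fallback can be sketched with two toy history classes: the base class's `add_messages` loops over `add_message` (one round-trip per message), while a subclass that overrides `add_messages` writes the whole batch at once. The classes and the `round_trips` counter are hypothetical; they only mirror the shape of the chat-history interface described above.

```python
from typing import List


class ToyHistory:
    """Base class: add_messages falls back to calling add_message serially."""

    def __init__(self) -> None:
        self.messages: List[str] = []
        self.round_trips = 0  # simulated calls to a remote store

    def add_message(self, message: str) -> None:
        self.round_trips += 1
        self.messages.append(message)

    def add_messages(self, messages: List[str]) -> None:
        for message in messages:
            self.add_message(message)


class BatchedToyHistory(ToyHistory):
    """Implements the optimization: one round-trip for the whole batch."""

    def add_messages(self, messages: List[str]) -> None:
        self.round_trips += 1
        self.messages.extend(messages)
```

Saving a turn (the user input plus the model output) then costs two simulated round-trips with `ToyHistory` and one with `BatchedToyHistory`.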