```python
from typing import List

from langchain.llms import OpenAI
from langchain.output_parsers import PydanticOutputParser
from langchain.prompts import PromptTemplate
from pydantic import BaseModel, Field


# Structure the LLM's answer must conform to.
class Joke(BaseModel):
    setup: str = Field(description="question to set up a joke")
    punchline: str = Field(description="answer to resolve the joke")


joke_query = "Tell me a joke."

# Or, an example with compound type fields.
# class FloatArray(BaseModel):
#     values: List[float] = Field(description="list of floats")
#
# float_array_query = "Write out a few terms of Fibonacci."

model = OpenAI(model_name="text-davinci-003", temperature=0.0)
parser = PydanticOutputParser(pydantic_object=Joke)

# Inject the parser's JSON-schema format instructions into the prompt.
prompt = PromptTemplate(
    template="Answer the user query.\n{format_instructions}\n{query}\n",
    input_variables=["query"],
    partial_variables={"format_instructions": parser.get_format_instructions()},
)

_input = prompt.format_prompt(query=joke_query)
print("Prompt:\n", _input.to_string())

output = model(_input.to_string())
print("Completion:\n", output)

# Validate the raw completion against the Joke schema.
parsed_output = parser.parse(output)
print("Parsed completion:\n", parsed_output)
```

```

Prompt:

Answer the user query.

The output should be formatted as a JSON instance that conforms to the JSON schema below. For example, the object {"foo": ["bar", "baz"]} conforms to the schema {"foo": {"description": "a list of strings field", "type": "string"}}.

Here is the output schema:

---

{"setup": {"description": "question to set up a joke", "type": "string"}, "punchline": {"description": "answer to resolve the joke", "type": "string"}}

---

Tell me a joke.

Completion:

{"setup": "Why don't scientists trust atoms?", "punchline": "Because they make up everything!"}

Parsed completion:

setup="Why don't scientists trust atoms?" punchline='Because they make up everything!'

```

Of course, this only works with LMs of sufficient capacity. DaVinci is usually reliable, but not always.
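Because parsing depends on the model actually emitting well-formed JSON, it can help to see the failure mode in isolation. The following is a minimal stdlib-only sketch, not `PydanticOutputParser`'s implementation: it assumes the completion contains a single JSON object, extracts it, and checks the required keys before building a dataclass. `JokeSketch` and `parse_joke` are hypothetical names.

```python
import json
import re
from dataclasses import dataclass


@dataclass
class JokeSketch:
    # Hypothetical stand-in for the Pydantic `Joke` model.
    setup: str
    punchline: str


def parse_joke(completion: str) -> JokeSketch:
    """Extract the span from the first '{' to the last '}' and validate it.

    Raises ValueError (json.JSONDecodeError is a subclass) when no JSON object
    is found, the JSON is malformed, or required fields are missing, which is
    what happens when a weaker model ignores the format instructions.
    """
    match = re.search(r"\{.*\}", completion, re.DOTALL)
    if match is None:
        raise ValueError(f"No JSON object found in completion: {completion!r}")
    data = json.loads(match.group(0))
    missing = [name for name in ("setup", "punchline") if name not in data]
    if missing:
        raise ValueError(f"Completion is missing fields: {missing}")
    return JokeSketch(setup=str(data["setup"]), punchline=str(data["punchline"]))


good = '{"setup": "Why don\'t scientists trust atoms?", "punchline": "Because they make up everything!"}'
print(parse_joke(good))

try:
    parse_joke("Sure! Here's a joke: why did the chicken cross the road?")
except ValueError as err:
    print("Parse failed:", err)
```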

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

PromptLayer now supports [several different tracking features](https://magniv.notion.site/Track-4deee1b1f7a34c1680d085f82567dab9). To use any of these features, you need the request ID associated with the request.

In this PR we add a boolean argument called `return_pl_id`, which adds `pl_request_id` to the `generation_info` dictionary associated with a generation.

We also updated the relevant documentation.
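The mechanism can be illustrated with a stdlib-only sketch. It does not call PromptLayer or LangChain: `Generation`, `track_request`, and `generate` are hypothetical stand-ins, the "LLM call" is a placeholder, and the request ID is faked with `uuid`. It only shows the idea that opting in with `return_pl_id=True` copies the tracking request ID into each generation's `generation_info` dictionary so that later tracking calls can reference it.

```python
import uuid
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass
class Generation:
    # Hypothetical stand-in for a LangChain generation object.
    text: str
    generation_info: Optional[Dict[str, Any]] = None


def track_request(prompt: str, completion: str) -> str:
    """Hypothetical stand-in for logging a request to PromptLayer.

    Returns a fake request ID; the real service assigns its own.
    """
    return uuid.uuid4().hex


def generate(prompts: List[str], return_pl_id: bool = False) -> List[Generation]:
    generations = []
    for prompt in prompts:
        completion = prompt.upper()  # placeholder for the actual LLM call
        pl_request_id = track_request(prompt, completion)
        gen = Generation(text=completion)
        if return_pl_id:
            # Attach the ID only when the caller opts in.
            gen.generation_info = dict(gen.generation_info or {})
            gen.generation_info["pl_request_id"] = pl_request_id
        generations.append(gen)
    return generations


for gen in generate(["tell me a joke"], return_pl_id=True):
    print(gen.text, gen.generation_info["pl_request_id"])
```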

Add the `state_of_the_union.txt` file so that it's easier to follow along with the example.

---------

Co-authored-by: Jithin James <jjmachan@pop-os.localdomain>

* Zapier Wrapper and Tools (implemented by Zapier Team)

* Zapier Toolkit, examples with mrkl agent

---------

Co-authored-by: Mike Knoop <mikeknoop@gmail.com>

Co-authored-by: Robert Lewis <robert.lewis@zapier.com>

### Summary

Allows users to pass `**unstructured_kwargs` to Unstructured document loaders. This was implemented with the `strategy` kwarg in mind, but other kwargs such as `include_page_breaks` are passed through as well. The two currently supported strategies are `"hi_res"`, which is more accurate but slower, and `"fast"`, which is faster but less accurate. `"hi_res"` is the default. For PDFs, if `detectron2` is not available and the user selects `"hi_res"`, the loader falls back to the `"fast"` strategy.
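The kwargs pass-through and the PDF fallback rule can be sketched as follows. This is an illustration under assumptions, not the loader's actual code: `resolve_strategy` and `build_partition_kwargs` are hypothetical helpers, and `detectron2` availability is detected with `importlib.util.find_spec`.

```python
import importlib.util
from typing import Any, Dict


def resolve_strategy(file_path: str, strategy: str = "hi_res") -> str:
    """Pick the partitioning strategy, falling back for PDFs without detectron2."""
    is_pdf = file_path.lower().endswith(".pdf")
    has_detectron2 = importlib.util.find_spec("detectron2") is not None
    if is_pdf and strategy == "hi_res" and not has_detectron2:
        return "fast"
    return strategy


def build_partition_kwargs(file_path: str, **unstructured_kwargs: Any) -> Dict[str, Any]:
    """Forward user kwargs (e.g. include_page_breaks) unchanged, resolving `strategy`."""
    kwargs = dict(unstructured_kwargs)
    kwargs["strategy"] = resolve_strategy(file_path, kwargs.get("strategy", "hi_res"))
    return kwargs


print(build_partition_kwargs("layout-parser-paper-fast.pdf", include_page_breaks=True))
```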

### Testing

#### Make sure the `strategy` kwarg works

Run the following in IPython to verify that the `"fast"` strategy is indeed faster.

```python

from langchain.document_loaders import UnstructuredFileLoader

loader = UnstructuredFileLoader("layout-parser-paper-fast.pdf", strategy="fast", mode="elements")

%timeit loader.load()

loader = UnstructuredFileLoader("layout-parser-paper-fast.pdf", mode="elements")

%timeit loader.load()

```

On my system I get:

```python

In [3]: from langchain.document_loaders import UnstructuredFileLoader

In [4]: loader = UnstructuredFileLoader("layout-parser-paper-fast.pdf", strategy="fast", mode="elements")

In [5]: %timeit loader.load()

247 ms ± 369 µs per loop (mean ± std. dev. of 7 runs, 1 loop each)

In [6]: loader = UnstructuredFileLoader("layout-parser-paper-fast.pdf", mode="elements")

In [7]: %timeit loader.load()

2.45 s ± 31 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

```

#### Make sure older versions of `unstructured` still work

Run `pip install unstructured==0.5.3` and then verify that the following runs without error:

```python

from langchain.document_loaders import UnstructuredFileLoader

loader = UnstructuredFileLoader("layout-parser-paper-fast.pdf", mode="elements")

loader.load()

```

# Description

Add `RediSearch` vectorstore for LangChain

RediSearch: [RediSearch quick start](https://redis.io/docs/stack/search/quick_start/)

# How to use

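```python
from langchain.vectorstores.redisearch import RediSearch

# `docs` (a list of Documents) and `embeddings` (an Embeddings instance)
# must already be defined.
rds = RediSearch.from_documents(docs, embeddings, redisearch_url="redis://localhost:6379")
```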

Seeing a lot of issues in Discord in which the LLM does not use the correct LIMIT clause for the SQL dialect, e.g., it uses `LIMIT` instead of `TOP` for MSSQL, or instead of `ROWNUM` for Oracle.

I think this could be due to us specifying the `LIMIT` statement in the example-rows portion of `table_info`, so the LLM sees the `LIMIT` statement used in the prompt.

Since we can't specify each dialect's method here, I think it's fine to just replace the `SELECT... LIMIT 3;` statement with `3 rows from table_name table:` and wrap everything in a block comment directly following the `CREATE` statement. The Rajkumar et al. paper wrapped the example rows and `SELECT` statement in a block comment as well anyway.
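A minimal sketch of the proposed `table_info` layout, using Python's built-in `sqlite3` and a hypothetical `users` table: the `CREATE` statement is followed by a block comment holding the sample rows under a `3 rows from users table:` label, so no dialect-specific row-limiting syntax reaches the prompt. The helper name `table_info_for` and the tab-separated row format are assumptions, not the exact output of LangChain's `SQLDatabase`.

```python
import sqlite3


def table_info_for(conn: sqlite3.Connection, table: str, sample_rows: int = 3) -> str:
    """Return the CREATE statement plus sample rows wrapped in a block comment."""
    # SQLite keeps the original CREATE statement text in sqlite_master.
    (create_sql,) = conn.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = ?", (table,)
    ).fetchone()
    # fetchmany() limits rows on the Python side, so no LIMIT/TOP/ROWNUM is needed.
    # (The table name is interpolated directly; fine for a trusted sketch only.)
    cursor = conn.execute(f'SELECT * FROM "{table}"')
    columns = [col[0] for col in cursor.description]
    rows = cursor.fetchmany(sample_rows)
    lines = [f"{sample_rows} rows from {table} table:", "\t".join(columns)]
    lines += ["\t".join(str(value) for value in row) for row in rows]
    return f"{create_sql}\n\n/*\n" + "\n".join(lines) + "\n*/"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("ada",), ("bob",), ("cy",), ("dee",)])
print(table_info_for(conn, "users"))
```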

Thoughts @fpingham?

`OnlinePDFLoader` and `PagedPDFSplitter` lived separately from the rest of the PDF loaders.

Because they're all similar, I propose moving them all to `pdf.py` and the same docs/examples page.

Additionally, the name `PagedPDFSplitter` doesn't match the pattern the rest of the loaders follow, so I renamed it to `PyPDFLoader` and had it inherit from `BasePDFLoader` so it can now load from remote file sources.
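One way a shared base class can let every PDF loader accept either a local path or a URL is sketched below with the standard library only. The class names `BasePDFLoaderSketch` and `PyPDFLoaderSketch` and the download-to-a-temporary-file approach are assumptions for illustration; the real `BasePDFLoader` may differ, and actual PDF parsing is left out.

```python
import os
import tempfile
import urllib.request
from urllib.parse import urlparse


class BasePDFLoaderSketch:
    """Resolve `file_path` to a local file, downloading it first if it is a URL."""

    def __init__(self, file_path: str) -> None:
        self.web_path = None
        if os.path.isfile(file_path):
            self.file_path = file_path
        elif self._is_valid_url(file_path):
            # Download the remote PDF into a temporary directory.
            self._temp_dir = tempfile.TemporaryDirectory()
            self.web_path = file_path
            local_path = os.path.join(self._temp_dir.name, "downloaded.pdf")
            urllib.request.urlretrieve(file_path, local_path)
            self.file_path = local_path
        else:
            raise ValueError(f"{file_path!r} is neither a local file nor a valid URL")

    @staticmethod
    def _is_valid_url(url: str) -> bool:
        parsed = urlparse(url)
        return bool(parsed.scheme) and bool(parsed.netloc)


class PyPDFLoaderSketch(BasePDFLoaderSketch):
    """Subclasses inherit remote-file support and only implement loading."""

    def load(self) -> bytes:
        # Placeholder: a real loader would parse pages here (e.g. with pypdf).
        with open(self.file_path, "rb") as f:
            return f.read()
```

With this split, adding remote support to a new loader only requires subclassing the base class.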

Provide shared memory capability for the Agent.

Inspired by #1293.

## Problem

If both the Agent and its Tools (i.e., an LLMChain) use the same memory, both of them will save the context, which can be annoying in some cases.

## Solution

Create a memory wrapper that ignores save and clear calls, thereby preventing updates from the Agent or Tools that hold it.
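The wrapper idea can be sketched without LangChain as a small delegating class: reads go through to the shared memory, while `save_context` and `clear` become no-ops. `SimpleMemory` and `ReadOnlyMemory` are hypothetical stand-ins, not the classes added in this PR.

```python
from typing import Dict, List


class SimpleMemory:
    """Hypothetical shared memory that stores conversation turns."""

    def __init__(self) -> None:
        self.turns: List[str] = []

    def load_memory_variables(self) -> Dict[str, str]:
        return {"history": "\n".join(self.turns)}

    def save_context(self, inputs: Dict[str, str], outputs: Dict[str, str]) -> None:
        self.turns.append(f"Human: {inputs['input']}\nAI: {outputs['output']}")

    def clear(self) -> None:
        self.turns.clear()


class ReadOnlyMemory:
    """Wraps a memory so holders can read it but never modify it."""

    def __init__(self, memory: SimpleMemory) -> None:
        self.memory = memory

    def load_memory_variables(self) -> Dict[str, str]:
        return self.memory.load_memory_variables()

    def save_context(self, inputs: Dict[str, str], outputs: Dict[str, str]) -> None:
        pass  # Intentionally ignored: only the owner of `memory` writes to it.

    def clear(self) -> None:
        pass  # Intentionally ignored as well.


shared = SimpleMemory()
tool_memory = ReadOnlyMemory(shared)  # e.g. handed to a tool's chain
shared.save_context({"input": "hi"}, {"output": "hello"})  # the agent writes
tool_memory.save_context({"input": "x"}, {"output": "y"})  # the tool's write is dropped
print(tool_memory.load_memory_variables()["history"])
```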

Simple CSV document loader that wraps the `csv` reader and prepares the file as a single `Document` per row.

The column header is prepended to each value, which gives useful context for embeddings and semantic search.
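A stdlib-only sketch of the row-to-document idea, assuming a well-formed CSV with a header row: each row becomes one text blob in which every value is prefixed by its column header. A plain dict with `page_content` and `metadata` keys stands in for LangChain's `Document`, and the `source`/`row` metadata keys are assumptions.

```python
import csv
import io
from typing import Dict, List, TextIO


def load_csv_rows(f: TextIO, source: str = "") -> List[Dict[str, object]]:
    """Return one document-like dict per CSV row, with 'header: value' lines."""
    docs = []
    for i, row in enumerate(csv.DictReader(f)):
        # Prepend each column header so the text is self-describing for embeddings.
        content = "\n".join(
            f"{header.strip()}: {(value or '').strip()}" for header, value in row.items()
        )
        docs.append({"page_content": content, "metadata": {"source": source, "row": i}})
    return docs


sample = io.StringIO("name,team\nAda,Engineering\nBob,Sales\n")
for doc in load_csv_rows(sample, source="people.csv"):
    print(doc["page_content"])
```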

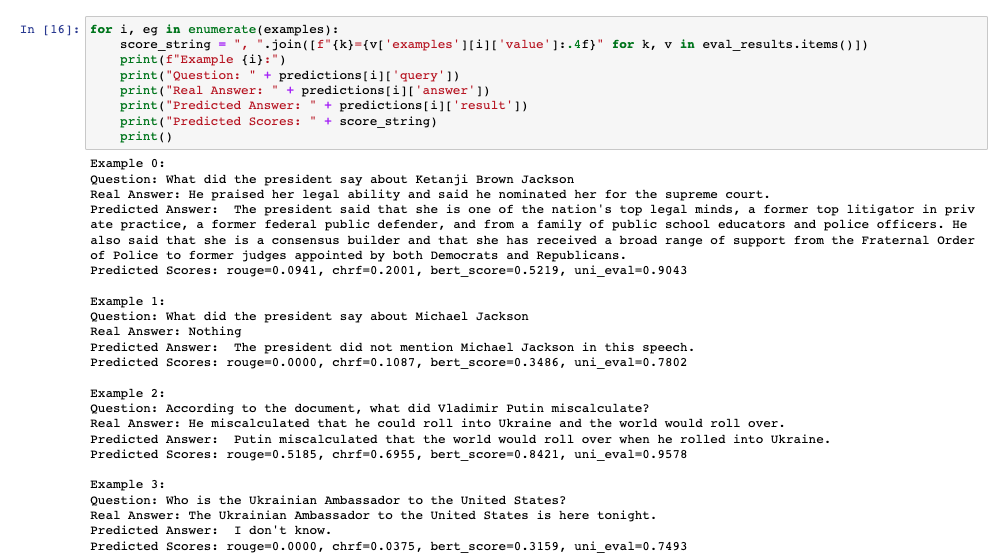

This PR adds additional evaluation metrics for data-augmented QA, resulting in a report like this at the end of the notebook:

The score calculation is based on the [Critique](https://docs.inspiredco.ai/critique/) toolkit, an API-based toolkit (like OpenAI) that has minimal dependencies, so it should be easy for people to run if they choose.

The code could be simplified further by adding a chain that calls Critique directly, but that should probably be saved for another PR if necessary. Any comments or change requests are welcome!

This pull request proposes an update to the Lightweight wrapper library's documentation. The current documentation gives the following example of how to use the library's `requests.run` method: `requests.run("https://www.google.com")`. However, this example does not work with version 0.0.102 of the library.

Testing:

The changes have been tested locally to ensure they work as intended.

Thank you for considering this pull request.