# Unstructured Excel Loader

Adds an `UnstructuredExcelLoader` class for `.xlsx` and `.xls` files.

Works with `unstructured>=0.6.7`. A plain text representation of the
Excel file will be available under the `page_content` attribute in the
doc. If you use the loader in `"elements"` mode, an HTML representation
of the Excel file will be available under the `text_as_html` metadata
key. Each sheet in the Excel document is its own document.

### Testing

```python
from langchain.document_loaders import UnstructuredExcelLoader

loader = UnstructuredExcelLoader(
    "example_data/stanley-cups.xlsx",
    mode="elements"
)
docs = loader.load()
```

## Who can review?

@hwchase17

@eyurtsev

# Chroma update_document full document embeddings bugfix

Chroma `update_document` takes a single document, but treats the
`page_content` string of that document as a list when getting the new
document embedding.

This is a two-fold problem: the resulting embedding for the updated
document is incorrect (it's only an embedding of the first character in
the new `page_content`), and the embedding function is called for every
character in the new `page_content` string, using many tokens in the
process.
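The failure mode can be reproduced without Chroma. The sketch below uses a hypothetical `embed_documents` stand-in (not the real embedding function) that records each text it receives, to show how a bare string passed where a list of texts is expected ends up embedded character by character.

```python
from typing import List

calls = []  # records every text the fake embedder is asked to embed


def embed_documents(texts: List[str]) -> List[List[float]]:
    """Hypothetical stand-in for an embedding function: one vector per text."""
    vectors = []
    for text in texts:
        calls.append(text)
        vectors.append([float(len(text))])  # dummy one-dimensional "embedding"
    return vectors


page_content = "hello world"

# Buggy pattern: the string is iterated as if it were a list of texts,
# so every character is embedded and [0] is the vector for "h" only.
buggy = embed_documents(page_content)[0]
buggy_calls = len(calls)

calls.clear()

# Fixed pattern: wrap the single text in a list before embedding.
fixed = embed_documents([page_content])[0]
fixed_calls = len(calls)

print(buggy_calls, buggy)  # 11 [1.0]
print(fixed_calls, fixed)  # 1 [11.0]
```

With a paid embedding API, the buggy pattern costs one request item per character instead of one per document.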

Fixes #5582

Co-authored-by: Caleb Ellington <calebellington@Calebs-MBP.hsd1.ca.comcast.net>

# Fix Qdrant ids creation

There has been a bug in how the ids were created in the Qdrant vector
store. They were previously calculated based on the texts. However,
there are some scenarios in which two documents may have the same piece
of text but different metadata, and that's a valid case. Deduplication
should be done outside of insertion.

It has been fixed and covered with the integration tests.
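To illustrate why text-derived ids are a problem, here is a minimal sketch. The hashing scheme is an assumption made for illustration (the description only says the ids were calculated based on the texts), and `uuid4` is just one way to generate ids that do not depend on the text. Neither is claimed to be the exact code in the Qdrant wrapper.

```python
import hashlib
import uuid

texts = ["same text", "same text"]
metadatas = [{"source": "a.txt"}, {"source": "b.txt"}]


def text_based_id(text: str) -> str:
    """Illustrative only: derive an id from the text content."""
    return hashlib.md5(text.encode("utf-8")).hexdigest()


# Ids derived from the text collide, so the second point would overwrite
# the first even though its metadata differs.
text_ids = [text_based_id(t) for t in texts]

# Random ids keep both documents.
random_ids = [uuid.uuid4().hex for _ in texts]

print(len(set(text_ids)))    # 1
print(len(set(random_ids)))  # 2
```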

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Fixes SQLAlchemy truncating the result if you have a big/text column with many chars.

SQLAlchemy truncates columns if you try to convert a Row or Sequence to
a string directly.

For comparison:

- Before:

```[('Harrison', 'That is my Bio That is my Bio That is my Bio That is my Bio That is my Bio That is my Bio That is my Bio That is my Bio That is my Bio That is my Bio ... (2 characters truncated) ... hat is my Bio That is my Bio That is my Bio That is my Bio That is my Bio That is my Bio That is my Bio That is my Bio That is my Bio That is my Bio ')]```

- After:

```[('Harrison', 'That is my Bio That is my Bio That is my Bio That is

my Bio That is my Bio That is my Bio That is my Bio That is my Bio That

is my Bio That is my Bio That is my Bio That is my Bio That is my Bio

That is my Bio That is my Bio That is my Bio That is my Bio That is my

Bio That is my Bio That is my Bio ')]```

## Who can review?

Community members can review the PR once tests pass. Tag
maintainers/contributors who might be interested:

I'm not sure who to tag for chains, maybe @vowelparrot?

Similar to #1813 for FAISS, this PR extends the PGVector wrapper so that
text and vector pairs can be passed to initialize it and to add
embeddings to it.

Community members can review the PR once tests pass. Tag
maintainers/contributors who might be interested:

- @dev2049

# Support Qdrant filters

Qdrant has an [extensive filtering
system](https://qdrant.tech/documentation/concepts/filtering/) with rich
type support. This PR makes it possible to use the filters in LangChain
by passing an additional param to both the
`similarity_search_with_score` and `similarity_search` methods.

## Who can review?

@dev2049 @hwchase17

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

When the LLM outputs 'yes|no', `BooleanOutputParser` can now parse it to
'True|False'. This fixes the ValueError in `parse()`.

<!--
When BooleanOutputParser is used in chain_filter.py and the LLM outputs
'yes|no', the function 'parse' throws a ValueError.
-->

Fixes #5396 (https://github.com/hwchase17/langchain/issues/5396)
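A minimal sketch of the intended behaviour follows. It is a standalone function, not the actual `BooleanOutputParser` class, and it assumes the ValueError came from outputs such as `yes` not matching the expected values because of case or whitespace. The names `parse_boolean`, `true_val` and `false_val` are illustrative.

```python
def parse_boolean(text: str, true_val: str = "YES", false_val: str = "NO") -> bool:
    """Map a yes/no style answer to a bool, ignoring case and outer whitespace."""
    cleaned = text.strip().upper()
    if cleaned == true_val.upper():
        return True
    if cleaned == false_val.upper():
        return False
    # Anything else is still an error, so unexpected LLM output is not hidden.
    raise ValueError(
        f"Expected output value to be either {true_val} or {false_val}, got {text!r}."
    )


print(parse_boolean("yes"))    # True
print(parse_boolean(" No\n"))  # False
```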

---------

Co-authored-by: gaofeng27692 <gaofeng27692@hundsun.com>

# Add maximal relevance search to SKLearnVectorStore

This PR implements the maximum relevance search in SKLearnVectorStore.
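For readers unfamiliar with the idea, the sketch below assumes that "maximal relevance search" here refers to maximal marginal relevance (MMR), which balances similarity to the query against redundancy with results already selected. It is a plain-Python illustration with hypothetical names (`cosine`, `mmr`, `lambda_mult`), not the code added to `SKLearnVectorStore`.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mmr(
    query: Sequence[float],
    candidates: List[Sequence[float]],
    k: int = 2,
    lambda_mult: float = 0.5,
) -> List[int]:
    """Return indices of up to k candidates chosen by maximal marginal relevance."""
    selected: List[int] = []
    remaining = list(range(len(candidates)))
    while remaining and len(selected) < k:
        best_idx, best_score = remaining[0], -math.inf
        for i in remaining:
            relevance = cosine(query, candidates[i])
            # Redundancy: highest similarity to anything already selected.
            redundancy = max(
                (cosine(candidates[i], candidates[j]) for j in selected),
                default=0.0,
            )
            score = lambda_mult * relevance - (1 - lambda_mult) * redundancy
            if score > best_score:
                best_idx, best_score = i, score
        selected.append(best_idx)
        remaining.remove(best_idx)
    return selected


query = [1.0, 1.0]
candidates = [[1.0, 0.9], [1.0, 0.8], [0.2, 1.0]]

print(mmr(query, candidates, k=2, lambda_mult=0.5))  # [0, 2]
print(mmr(query, candidates, k=2, lambda_mult=1.0))  # [0, 1]
```

With `lambda_mult=1.0` the score reduces to plain similarity, so the two near-duplicate vectors are returned. With `0.5` the second pick is the more diverse candidate.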

Twitter handle: jtolgyesi (I also submitted the original implementation
of SKLearnVectorStore)

## Before submitting

Unit tests are included.

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Add batching to Qdrant

Several people requested a batching mechanism while uploading data to
Qdrant. It is important, as there are some limits for the maximum size
of the request payload, and without batching implemented in LangChain,
users need to implement it on their own. This PR exposes a new optional
`batch_size` parameter, so all the documents/texts are loaded in batches
of the expected size (64, by default).

The integration tests of Qdrant are extended to cover two cases:

1. Documents are sent in separate batches.

2. All the documents are sent in a single request.
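The two tested cases can be pictured with a small, generic batching helper. This is a sketch of the general technique, not the code in the Qdrant wrapper. The helper name `batched` is hypothetical, and only the default of 64 is taken from the description above.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], batch_size: int = 64) -> Iterator[List[T]]:
    """Yield consecutive lists of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


texts = [f"doc {i}" for i in range(150)]

# Case 1: documents are split across several requests.
separate = [len(b) for b in batched(texts, batch_size=64)]
# Case 2: the batch size exceeds the input, so everything goes in one request.
single = [len(b) for b in batched(texts, batch_size=200)]

print(separate)  # [64, 64, 22]
print(single)    # [150]
```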

# Handles the edge scenario in which the action input is a well formed SQL query which ends with a quoted column

There may be a cleaner option here (or indeed other edge scenarios), but
this seems to robustly determine whether the action input is likely to
be a well-formed SQL query in which we don't want to arbitrarily trim
off `"` characters.
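One way to picture such a check is sketched below. The function name `clean_action_input` and the `SELECT` prefix test are assumptions made for illustration. The description does not spell out the exact condition used, so this should not be read as the PR's implementation.

```python
def clean_action_input(action_input: str) -> str:
    """Strip wrapping double quotes unless the input looks like a SQL query."""
    stripped = action_input.strip(" ")
    # Assumption for this sketch: an input starting with SELECT is a SQL query,
    # where a trailing '"' may close a quoted column name and must be kept.
    if stripped.upper().startswith("SELECT "):
        return stripped
    return stripped.strip('"')


print(clean_action_input('"latest news"'))
print(clean_action_input('SELECT "id" FROM users ORDER BY "name"'))
```

The first call drops the wrapping quotes, and the second returns the query unchanged.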

Fixes #5423

## Who can review?

Community members can review the PR once tests pass. Tag

maintainers/contributors who might be interested:

For a quicker response, figure out the right person to tag with @

@hwchase17 - project lead

Agents / Tools / Toolkits

- @vowelparrot

# What does this PR do?

Adds support for `encode_kwargs` to `HuggingFaceInstructEmbeddings`,
changes the docstring example, and adds a test to illustrate it with
`normalize_embeddings`.

Fixes #3605

(Similar to #3914)

Use case:

```python
from langchain.embeddings import HuggingFaceInstructEmbeddings

model_name = "hkunlp/instructor-large"
model_kwargs = {'device': 'cpu'}
encode_kwargs = {'normalize_embeddings': True}
hf = HuggingFaceInstructEmbeddings(
    model_name=model_name,
    model_kwargs=model_kwargs,
    encode_kwargs=encode_kwargs
)
```

As the title says, I added more code splitters.
The implementation is trivial, so I didn't add separate tests for each
splitter.
Let me know if you have any concerns.

Fixes https://github.com/hwchase17/langchain/issues/5170

## Who can review?

Community members can review the PR once tests pass. Tag

maintainers/contributors who might be interested:

@eyurtsev @hwchase17

---------

Signed-off-by: byhsu <byhsu@linkedin.com>

Co-authored-by: byhsu <byhsu@linkedin.com>

# Creates GitHubLoader (#5257)

GitHubLoader is a DocumentLoader that loads issues and PRs from GitHub.

Fixes #5257

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Added New Trello loader class and documentation

A simple loader on top of the py-trello wrapper.
Given a board name, you can pull its cards and tweak some field
parameters during the load operation.
I included documentation and examples.
Unit test cases are included, using patch and a fixture for the
py-trello client class.

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

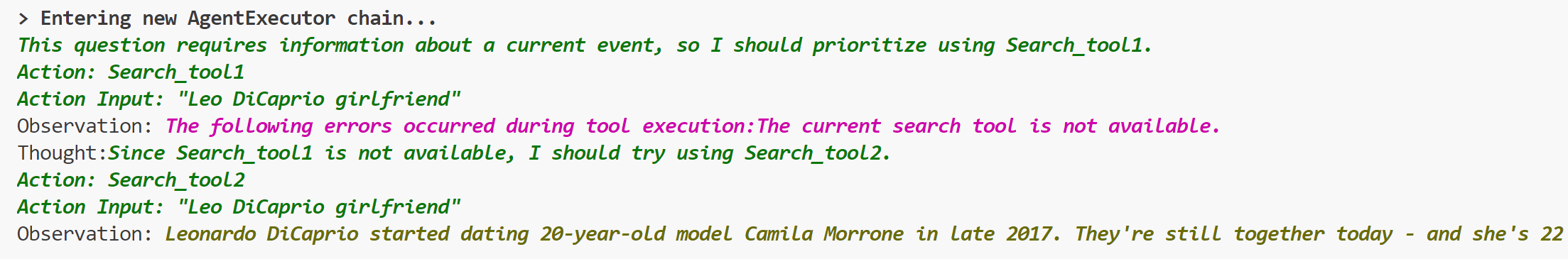

# Add ToolException that a tool can throw

This is an optional exception that a tool throws when an execution
error occurs.
When this exception is thrown, the agent will not stop working, but will
handle the exception according to the `handle_tool_error` variable of
the tool. The processing result will be returned to the agent as an
observation and printed in pink on the console. It can be used like this:

```python
from langchain.schema import ToolException
from langchain import LLMMathChain, SerpAPIWrapper, OpenAI
from langchain.agents import AgentType, initialize_agent
from langchain.chat_models import ChatOpenAI
from langchain.tools import BaseTool, StructuredTool, Tool, tool

llm = ChatOpenAI(temperature=0)
llm_math_chain = LLMMathChain(llm=llm, verbose=True)


class Error_tool:
    def run(self, s: str):
        # Always fails, to demonstrate how a ToolException is handled.
        raise ToolException('The current search tool is not available.')


def handle_tool_error(error) -> str:
    # Turn the exception into an observation string for the agent.
    return "The following errors occurred during tool execution:" + str(error)


search_tool1 = Error_tool()
search_tool2 = SerpAPIWrapper()
tools = [
    Tool.from_function(
        func=search_tool1.run,
        name="Search_tool1",
        description="useful for when you need to answer questions about current events.You should give priority to using it.",
        handle_tool_error=handle_tool_error,
    ),
    Tool.from_function(
        func=search_tool2.run,
        name="Search_tool2",
        description="useful for when you need to answer questions about current events",
        return_direct=True,
    )
]
agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True,
                         handle_tool_errors=handle_tool_error)
agent.run("Who is Leo DiCaprio's girlfriend? What is her current age raised to the 0.43 power?")
```

## Who can review?

- @vowelparrot

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Implemented appending arbitrary messages to the base chat message history, the in-memory and cosmos ones.


As discussed, this is an alternative to #4480: it adds an `add_message`
method that takes a `BaseMessage` as input, so that the user can control
what is in the base message, such as kwargs.
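The shape of this design can be sketched as follows. `Message` and `InMemoryHistory` are hypothetical stand-ins for `BaseMessage` and the in-memory chat message history, and the field names are assumptions. Only the `add_message` method name comes from the description above.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Message:
    """Hypothetical stand-in for BaseMessage."""
    content: str
    role: str
    additional_kwargs: Dict[str, Any] = field(default_factory=dict)


class InMemoryHistory:
    """Hypothetical in-memory history exposing a generic add_message."""

    def __init__(self) -> None:
        self.messages: List[Message] = []

    def add_message(self, message: Message) -> None:
        # The caller builds the message, so it controls every field.
        self.messages.append(message)

    def add_user_message(self, content: str) -> None:
        # Convenience wrapper built on top of add_message.
        self.add_message(Message(content=content, role="human"))


history = InMemoryHistory()
history.add_user_message("hi")
history.add_message(
    Message(content="hello", role="ai", additional_kwargs={"source": "demo"})
)

print([m.role for m in history.messages])     # ['human', 'ai']
print(history.messages[1].additional_kwargs)  # {'source': 'demo'}
```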


## Who can review?

Community members can review the PR once tests pass. Tag

maintainers/contributors who might be interested:

@hwchase17

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

# Fix for `update_document` Function in Chroma

## Summary

This pull request addresses an issue with the `update_document` function
in the Chroma class, as described in
[#5031](https://github.com/hwchase17/langchain/issues/5031#issuecomment-1562577947).
The issue was identified as an `AttributeError` raised when calling
`update_document` due to a missing corresponding method in the
`Collection` object. This fix refactors the `update_document` method in
`Chroma` to correctly interact with the `Collection` object.

## Changes

1. Fixed the `update_document` method in the `Chroma` class to correctly
   call methods on the `Collection` object.
2. Added the corresponding test `test_chroma_update_document` in
   `tests/integration_tests/vectorstores/test_chroma.py` to reflect the
   updated method call.
3. Added an example and explanation of how to use the `update_document`
   function in the Jupyter notebook tutorial for Chroma.

## Test Plan

All existing tests pass after this change. In addition, the
`test_chroma_update_document` test case now correctly checks the
functionality of `update_document`, ensuring that the function works as
expected and updates the content of documents correctly.

## Reviewers

@dev2049

This fix will ensure that users are able to use the `update_document`
function as expected, without encountering the previous
`AttributeError`. This will enhance the usability and reliability of the
Chroma class for all users.

Thank you for considering this pull request. I look forward to your
feedback and suggestions.

# Fix lost mimetype when using Blob.from_data method

The mimetype is lost due to a typo in the class attribute name.

Fixes # - (no issue opened but I can open one if needed)

## Changes

* Fixed typo in name

* Added unit-tests to validate the output Blob

## Review

@eyurtsev

# Add path validation to DirectoryLoader

This PR introduces a minor adjustment to the DirectoryLoader by adding
validation for the path argument. Previously, if the provided path
didn't exist or wasn't a directory, DirectoryLoader would return an
empty document list due to the behavior of the `glob` method. This could
potentially cause confusion for users, as they might expect a
file-loading error instead.

So, I've added two validations to the load method of the
DirectoryLoader:

- Raise a FileNotFoundError if the provided path does not exist

- Raise a ValueError if the provided path is not a directory
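The two checks can be sketched with `pathlib`. This is an illustration under assumptions rather than the loader's code: `list_directory_files` is a hypothetical helper, and the error messages are made up for the example.

```python
import tempfile
from pathlib import Path
from typing import List


def list_directory_files(path: str, glob: str = "**/*") -> List[Path]:
    """Validate the path first, then collect the files matching the pattern."""
    p = Path(path)
    if not p.exists():
        raise FileNotFoundError(f"Directory not found: '{path}'")
    if not p.is_dir():
        raise ValueError(f"Expected directory, got file: '{path}'")
    return [f for f in p.glob(glob) if f.is_file()]


with tempfile.TemporaryDirectory() as tmp:
    file_path = Path(tmp) / "notes.txt"
    file_path.write_text("hello")

    found = [p.name for p in list_directory_files(tmp)]

    # A missing path and a file path now fail loudly instead of
    # silently producing an empty result.
    errors = []
    for bad_path in (str(Path(tmp) / "missing"), str(file_path)):
        try:
            list_directory_files(bad_path)
        except (FileNotFoundError, ValueError) as exc:
            errors.append(type(exc).__name__)

print(found)   # ['notes.txt']
print(errors)  # ['FileNotFoundError', 'ValueError']
```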

Due to the relatively small scope of these changes, a new issue was not
created.


## Who can review?

Community members can review the PR once tests pass. Tag

maintainers/contributors who might be interested:

@eyurtsev

# Add SKLearnVectorStore

This PR adds SKLearnVectorStore, a simple vector store based on the
NearestNeighbors implementations in the scikit-learn package. This
provides a simple drop-in vector store implementation with minimal
dependencies (scikit-learn is typically installed in a data scientist /
ml engineer environment). The vector store can be persisted to and
loaded from JSON, BSON and Parquet formats.

SKLearnVectorStore has a soft (dynamic) dependency on the scikit-learn,
numpy and pandas packages. Persisting to BSON requires the bson package;
persisting to Parquet requires the pyarrow package.

## Before submitting

Integration tests are provided under
`tests/integration_tests/vectorstores/test_sklearn.py`

Sample usage notebook is provided under
`docs/modules/indexes/vectorstores/examples/sklear.ipynb`

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Add Momento as a standard cache and chat message history provider

This PR adds Momento as a standard caching provider. It implements the
interface and adds integration tests and documentation. It also adds
Momento as a chat message history provider, along with integration
tests and documentation.

[Momento](https://www.gomomento.com/) is a fully serverless cache.
Similar to S3 or DynamoDB, it requires zero configuration and no
infrastructure management, and is instantly available. Users sign up for
free and get 50GB of data in/out for free every month.

## Before submitting

✅ We have added documentation, notebooks, and integration tests
demonstrating usage.

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Add Multi-CSV/DF support in CSV and DataFrame Toolkits

* CSV and DataFrame toolkits now accept a list of CSVs/DFs
* Add default prompts for many dataframes in `pandas_dataframe` toolkit

Fixes #1958

Potentially fixes #4423

## Testing

* Add single and multi-dataframe integration tests for
  `pandas_dataframe` toolkit with permutations of `include_df_in_prompt`
* Add single and multi-CSV integration tests for csv toolkit

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

# Add C Transformers for GGML Models

I created Python bindings for the GGML models:
https://github.com/marella/ctransformers

Currently it supports GPT-2, GPT-J, GPT-NeoX, LLaMA, MPT, etc. See
[Supported
Models](https://github.com/marella/ctransformers#supported-models).

It provides a unified interface for all models:

```python
from langchain.llms import CTransformers

llm = CTransformers(model='/path/to/ggml-gpt-2.bin', model_type='gpt2')

print(llm('AI is going to'))
```

It can be used with models hosted on the Hugging Face Hub:

```py
llm = CTransformers(model='marella/gpt-2-ggml')
```

It supports streaming:

```py
from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler

llm = CTransformers(model='marella/gpt-2-ggml', callbacks=[StreamingStdOutCallbackHandler()])
```

Please see [README](https://github.com/marella/ctransformers#readme) for
more details.

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

zep-python's sync methods no longer need an asyncio wrapper. This was

causing issues with FastAPI deployment.

Zep also now supports putting and getting of arbitrary message metadata.

Bump zep-python version to v0.30

Remove nest-asyncio from Zep example notebooks.

Modify tests to include metadata.

---------

Co-authored-by: Daniel Chalef <daniel.chalef@private.org>

Co-authored-by: Daniel Chalef <131175+danielchalef@users.noreply.github.com>

# Bibtex integration

Wrap bibtexparser to retrieve a list of docs from a bibtex file.

* Get the metadata from the bibtex entries
* `page_content` is read from the local PDF referenced in the `file`
  field of the bibtex entry using `pymupdf`
* If there is no valid PDF file, `page_content` is set to the `abstract`
  field of the bibtex entry
* Support the Zotero flavour, using a regex to get the file path
* Added usage example in
  `docs/modules/indexes/document_loaders/examples/bibtex.ipynb`

---------

Co-authored-by: Sébastien M. Popoff <sebastien.popoff@espci.fr>

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Add Joplin document loader

[Joplin](https://joplinapp.org/) is an open source note-taking app.

Joplin has a [REST API](https://joplinapp.org/api/references/rest_api/)
for accessing its local database. The proposed `JoplinLoader` uses the
API to retrieve all notes in the database and their metadata. Joplin
needs to be installed and running locally, and an access token is
required.

- The PR includes an integration test.

- The PR includes an example notebook.

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

## Description

The HTML structure of readthedocs sites can differ. Currently, the HTML
tag is hardcoded in the reader, which does not fit some cases. This PR
includes the following changes:

1. Replace `find_all` with `find` because we just want one tag.
2. Provide `custom_html_tag` to the loader.
3. Add tests for the readthedocs loader.
4. Refactor code.

## Issues

See more in https://github.com/hwchase17/langchain/pull/2609. The
problem was not completely fixed in that PR.

---------

Signed-off-by: byhsu <byhsu@linkedin.com>

Co-authored-by: byhsu <byhsu@linkedin.com>

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# OpenAI finetuned model giving zero tokens cost

A very simple fix to the previously committed solution for allowing
fine-tuned OpenAI models.

Improves #5127

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>