# Creates GitHubLoader (#5257)

GitHubLoader is a DocumentLoader that loads issues and PRs from GitHub.

Fixes #5257

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Added New Trello loader class and documentation

A simple loader on top of the py-trello wrapper.

Given a board name, you can pull its cards and tweak some field parameters of the load operation.

I included documentation and examples.

Included unit test cases using patch and a fixture for the py-trello client class.

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

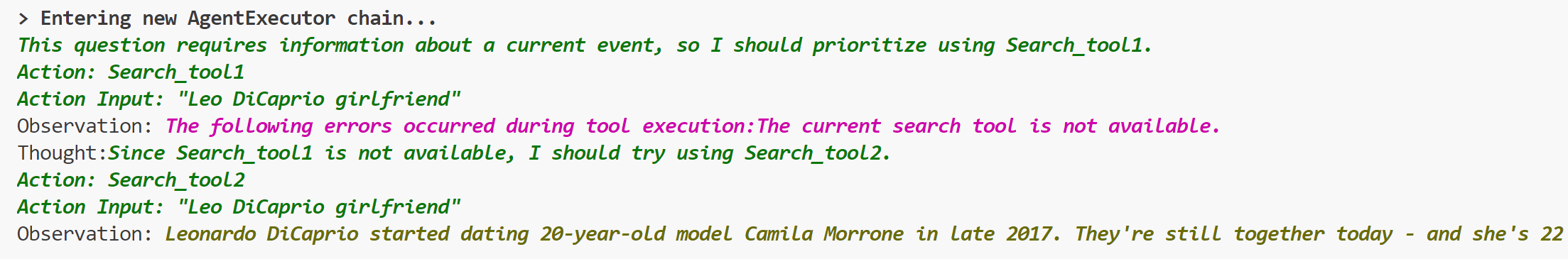

# Add ToolException that a tool can throw

This is an optional exception that a tool can throw when an execution error occurs.

When this exception is thrown, the agent does not stop working. Instead, it handles the exception according to the tool's `handle_tool_error` variable, and the processing result is returned to the agent as an observation and printed in pink on the console. It can be used like this:

```python
from langchain.schema import ToolException
from langchain import LLMMathChain, SerpAPIWrapper, OpenAI
from langchain.agents import AgentType, initialize_agent
from langchain.chat_models import ChatOpenAI
from langchain.tools import BaseTool, StructuredTool, Tool, tool

llm = ChatOpenAI(temperature=0)
llm_math_chain = LLMMathChain(llm=llm, verbose=True)


class Error_tool:
    # A tool that always fails, to demonstrate the error handling path.
    def run(self, s: str):
        raise ToolException('The current search tool is not available.')


def handle_tool_error(error) -> str:
    # The returned string is passed back to the agent as the observation.
    return "The following errors occurred during tool execution:" + str(error)


search_tool1 = Error_tool()
search_tool2 = SerpAPIWrapper()

tools = [
    Tool.from_function(
        func=search_tool1.run,
        name="Search_tool1",
        description="useful for when you need to answer questions about current events.You should give priority to using it.",
        handle_tool_error=handle_tool_error,
    ),
    Tool.from_function(
        func=search_tool2.run,
        name="Search_tool2",
        description="useful for when you need to answer questions about current events",
        return_direct=True,
    ),
]

agent = initialize_agent(
    tools,
    llm,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True,
    handle_tool_errors=handle_tool_error,
)

agent.run("Who is Leo DiCaprio's girlfriend? What is her current age raised to the 0.43 power?")
```
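The control flow described above can be sketched without any LangChain dependency. This is a minimal illustration, not LangChain's implementation: the `ToolException` class here is a local stand-in, and `run_tool`, `failing_search` and `describe_error` are hypothetical names introduced only for this example.

```python
from typing import Callable, Optional


class ToolException(Exception):
    """Local stand-in for the exception a tool raises on an execution error."""


def run_tool(
    func: Callable[[str], str],
    tool_input: str,
    handle_tool_error: Optional[Callable[[ToolException], str]] = None,
) -> str:
    """Run a tool; turn a ToolException into an observation if a handler is set."""
    try:
        return func(tool_input)
    except ToolException as error:
        if handle_tool_error is None:
            # No handler configured: let the error propagate to the caller.
            raise
        # Handler configured: its return value becomes the observation.
        return handle_tool_error(error)


def failing_search(query: str) -> str:
    raise ToolException("The current search tool is not available.")


def describe_error(error: ToolException) -> str:
    return "The following errors occurred during tool execution: " + str(error)


observation = run_tool(failing_search, "latest news", describe_error)
print(observation)
```

The point of the design is that a failing tool produces text the agent can reason about, so it can fall back to another tool instead of aborting the run.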

## Who can review?

- @vowelparrot

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# docs: ecosystem/integrations update

This is the first in a series of `ecosystem/integrations` updates.

The ecosystem/integrations list is missing many integrations.

I'm adding the missing integrations in a consistent format:

1. description of the integrated system

2. `Installation and Setup` section with `pip install ...`, key setup, and other necessary settings

3. Sections like `LLM`, `Text Embedding Models`, `Chat Models`... with links to the corresponding examples and imports of the used classes.

This PR covers the new docs that are present in `docs/modules/models/text_embedding/examples` but missing from `ecosystem/integrations`. The next PRs will cover the next example sections.

Also updated `integrations.rst`: added the `Dependencies` section with a

link to the packages used in LangChain.

## Who can review?

@hwchase17

@eyurtsev

@dev2049

# docs: ecosystem/integrations update 2

#5219 - part 1

The second part of this update (parts are independent of each other! no

overlap):

- added diffbot.md

- updated confluence.ipynb; added confluence.md

- updated college_confidential.md

- updated openai.md

- added blackboard.md

- added bilibili.md

- added azure_blob_storage.md

- added azlyrics.md

- added aws_s3.md

## Who can review?

@hwchase17

@agola11

@vowelparrot

@dev2049

# Update llamacpp demonstration notebook

Add instructions to install with BLAS backend, and update the example of

model usage.

Fixes #5071. However, this is more a prevention of similar issues in the future than a fix, since there was no problem in the framework functionality.

## Who can review?

Community members can review the PR once tests pass. Tag

maintainers/contributors who might be interested:

- @hwchase17

- @agola11

# Fix for `update_document` Function in Chroma

## Summary

This pull request addresses an issue with the `update_document` function

in the Chroma class, as described in

[#5031](https://github.com/hwchase17/langchain/issues/5031#issuecomment-1562577947).

The issue was identified as an `AttributeError` raised when calling

`update_document` due to a missing corresponding method in the

`Collection` object. This fix refactors the `update_document` method in

`Chroma` to correctly interact with the `Collection` object.
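The failure mode and the fix can be illustrated with stand-in classes. This is a sketch under stated assumptions, not the code in this PR: `FakeCollection` and `FakeStore` are hypothetical, and the only assumption about the real collection object is that it exposes an `update` method rather than an `update_document` method.

```python
from typing import Dict, List


class FakeCollection:
    """Hypothetical stand-in for a collection that exposes `update` only."""

    def __init__(self) -> None:
        self.documents: Dict[str, str] = {}

    def update(self, ids: List[str], documents: List[str]) -> None:
        for doc_id, text in zip(ids, documents):
            self.documents[doc_id] = text


class FakeStore:
    """Hypothetical stand-in for a vector store wrapping a collection."""

    def __init__(self, collection: FakeCollection) -> None:
        self._collection = collection

    def update_document(self, document_id: str, text: str) -> None:
        # Delegate to a method the collection really has.
        self._collection.update(ids=[document_id], documents=[text])


collection = FakeCollection()
collection.documents["doc-1"] = "old text"
store = FakeStore(collection)
store.update_document("doc-1", "new text")

# Calling a method the collection lacks is what raises AttributeError.
has_update_document = hasattr(collection, "update_document")
print(collection.documents["doc-1"], has_update_document)
```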

## Changes

1. Fixed the `update_document` method in the `Chroma` class to correctly

call methods on the `Collection` object.

2. Added the corresponding test `test_chroma_update_document` in

`tests/integration_tests/vectorstores/test_chroma.py` to reflect the

updated method call.

3. Added an example and explanation of how to use the `update_document`

function in the Jupyter notebook tutorial for Chroma.

## Test Plan

All existing tests pass after this change. In addition, the

`test_chroma_update_document` test case now correctly checks the

functionality of `update_document`, ensuring that the function works as

expected and updates the content of documents correctly.

## Reviewers

@dev2049

This fix will ensure that users are able to use the `update_document`

function as expected, without encountering the previous

`AttributeError`. This will enhance the usability and reliability of the

Chroma class for all users.

Thank you for considering this pull request. I look forward to your

feedback and suggestions.

# Add SKLearnVectorStore

This PR adds SKLearnVectorStore, a simple vector store based on the NearestNeighbors implementations in the scikit-learn package. This provides a simple drop-in vector store implementation with minimal dependencies (scikit-learn is typically installed in a data scientist / ML engineer environment). The vector store can be persisted to and loaded from JSON, BSON and Parquet formats.

SKLearnVectorStore has a soft (dynamic) dependency on the scikit-learn, numpy and pandas packages. Persisting to BSON requires the bson package; persisting to Parquet requires the pyarrow package.
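A soft dependency is usually implemented by importing the package only when it is needed and raising a helpful error if it is absent. The sketch below shows that general pattern; it is not the code from this PR, and `guard_import` and the missing package name are hypothetical.

```python
import importlib
from types import ModuleType


def guard_import(module_name: str, pip_name: str) -> ModuleType:
    """Import a module lazily, with an install hint if it is missing."""
    try:
        return importlib.import_module(module_name)
    except ImportError as error:
        raise ImportError(
            f"Could not import {module_name}. "
            f"Install it with `pip install {pip_name}`."
        ) from error


# A standard-library module stands in for an installed dependency.
json_module = guard_import("json", "json")

try:
    guard_import("hypothetical_missing_package", "hypothetical-missing-package")
    message = ""
except ImportError as error:
    message = str(error)
print(message)
```

With this pattern, users who never persist to BSON or Parquet are not forced to install the extra packages.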

## Before submitting

Integration tests are provided under

`tests/integration_tests/vectorstores/test_sklearn.py`

Sample usage notebook is provided under

`docs/modules/indexes/vectorstores/examples/sklearn.ipynb`

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Sample Notebook for DynamoDB Chat Message History

@dev2049

Adding a sample notebook for the DynamoDB Chat Message History class.


# Add Chainlit to deployment options

Add [Chainlit](https://github.com/Chainlit/chainlit) as a deployment option.

Used links to the GitHub examples and the Chainlit doc on the LangChain integration.

Co-authored-by: Dan Constantini <danconstantini@Dan-Constantini-MacBook.local>

# docs: improve flow of llm caching notebook

The notebook `llm_caching` demos various caching providers. In the

previous version, there was setup common to all examples but under the

`In Memory Caching` heading.

If a user comes and only wants to try a particular example, they will

run the common setup, then the cells for the specific provider they are

interested in. Then they will get import and variable reference errors.

This commit moves the common setup to the top to avoid this.

## Who can review?

Community members can review the PR once tests pass. Tag

maintainers/contributors who might be interested:

@dev2049

# Better docs for weaviate hybrid search


Fixes: NA


## Who can review?

Community members can review the PR once tests pass. Tag

maintainers/contributors who might be interested:


@dev2049

This PR adds an LLM wrapper for Databricks. It supports two endpoint types:

* serving endpoint

* cluster driver proxy app

An integration notebook is included to show how it works.

Co-authored-by: Davis Chase <130488702+dev2049@users.noreply.github.com>

Co-authored-by: Gengliang Wang <gengliang@apache.org>

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Fixed typo: 'ouput' to 'output' in all documentation

In this instance, the typo 'ouput' was amended to 'output' in all

occurrences within the documentation. There are no dependencies required

for this change.

# Add Momento as a standard cache and chat message history provider

This PR adds Momento as a standard caching provider: it implements the interface and adds integration tests and documentation. We also add Momento as a chat message history provider, along with integration tests and documentation.
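To make "implements the interface" concrete, here is a minimal sketch of a cache with that shape. It assumes the interface consists of a `lookup` and an `update` method keyed by the prompt and a string identifying the LLM configuration; the class name is hypothetical, and a plain dictionary stands in for the remote Momento cache.

```python
from typing import Dict, Optional, Tuple


class InMemoryCache:
    """Hypothetical cache keyed by (prompt, llm_string)."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[str, str], str] = {}

    def lookup(self, prompt: str, llm_string: str) -> Optional[str]:
        # Return the cached value, or None on a cache miss.
        return self._store.get((prompt, llm_string))

    def update(self, prompt: str, llm_string: str, return_val: str) -> None:
        # Store the value under the prompt and LLM configuration.
        self._store[(prompt, llm_string)] = return_val


cache = InMemoryCache()
miss = cache.lookup("Tell me a joke", "model=a")
cache.update("Tell me a joke", "model=a", "Why did the chicken...")
hit = cache.lookup("Tell me a joke", "model=a")
other_model = cache.lookup("Tell me a joke", "model=b")
print(miss, hit, other_model)
```

Including the LLM configuration in the key keeps a response cached for one model from being served for another.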

[Momento](https://www.gomomento.com/) is a fully serverless cache.

Similar to S3 or DynamoDB, it requires zero configuration or infrastructure management and is instantly available. Users sign up for free and get 50GB of data in/out for free every month.

## Before submitting

✅ We have added documentation, notebooks, and integration tests

demonstrating usage.

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Add Multi-CSV/DF support in CSV and DataFrame Toolkits

* CSV and DataFrame toolkits now accept a list of CSVs/DFs

* Add default prompts for many dataframes in `pandas_dataframe` toolkit
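Accepting either one input or a list of inputs typically comes down to normalizing the argument first. The sketch below illustrates that idea with the standard library only; it is not the toolkit code from this PR, the function names are hypothetical, and CSV text is used in place of file paths to keep the example self-contained.

```python
import csv
import io
from typing import Dict, List, Sequence, Union

Rows = List[Dict[str, str]]


def as_list(source: Union[str, Sequence[str]]) -> List[str]:
    """Normalize a single CSV text or a sequence of them into a list."""
    if isinstance(source, str):
        return [source]
    return list(source)


def load_tables(source: Union[str, Sequence[str]]) -> List[Rows]:
    """Parse each CSV text into a list of row dictionaries."""
    return [list(csv.DictReader(io.StringIO(text))) for text in as_list(source)]


single = load_tables("name,age\nAda,36\n")
multiple = load_tables(["name,age\nAda,36\n", "city\nParis\nRome\n"])
print(len(single), len(multiple))
```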

Fixes #1958

Potentially fixes #4423

## Testing

* Add single and multi-dataframe integration tests for

`pandas_dataframe` toolkit with permutations of `include_df_in_prompt`

* Add single and multi-CSV integration tests for csv toolkit

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

# Add C Transformers for GGML Models

I created Python bindings for the GGML models:

https://github.com/marella/ctransformers

Currently it supports GPT-2, GPT-J, GPT-NeoX, LLaMA, MPT, etc. See

[Supported

Models](https://github.com/marella/ctransformers#supported-models).

It provides a unified interface for all models:

```python

from langchain.llms import CTransformers

llm = CTransformers(model='/path/to/ggml-gpt-2.bin', model_type='gpt2')

print(llm('AI is going to'))

```

It can be used with models hosted on the Hugging Face Hub:

```py

llm = CTransformers(model='marella/gpt-2-ggml')

```

It supports streaming:

```py

from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler

llm = CTransformers(model='marella/gpt-2-ggml', callbacks=[StreamingStdOutCallbackHandler()])

```

Please see [README](https://github.com/marella/ctransformers#readme) for

more details.

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

zep-python's sync methods no longer need an asyncio wrapper. This was

causing issues with FastAPI deployment.

Zep also now supports putting and getting of arbitrary message metadata.

Bump zep-python version to v0.30

Remove nest-asyncio from Zep example notebooks.

Modify tests to include metadata.

---------

Co-authored-by: Daniel Chalef <daniel.chalef@private.org>

Co-authored-by: Daniel Chalef <131175+danielchalef@users.noreply.github.com>

For most queries, it's the `size` parameter that determines the final number of documents to return. Since our abstractions refer to this as `k`, set this to be `k` everywhere instead of expecting a separate param. It would be great to have someone more familiar with OpenSearch validate that this is reasonable (e.g. that having `size` and what OpenSearch calls `k` be the same won't lead to any strange behavior). cc @naveentatikonda

Closes #5212
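As an illustration of the change, an approximate k-NN search body with `size` tied to `k` might look like the sketch below. The helper name `knn_query` and the default field name `vector_field` are assumptions made for this example, not the code in this PR.

```python
from typing import Any, Dict, List


def knn_query(
    vector: List[float], k: int, vector_field: str = "vector_field"
) -> Dict[str, Any]:
    """Build an approximate k-NN search body where `size` mirrors `k`."""
    return {
        "size": k,
        "query": {"knn": {vector_field: {"vector": vector, "k": k}}},
    }


body = knn_query([0.1, 0.2, 0.3], k=4)
print(body["size"], body["query"]["knn"]["vector_field"]["k"])
```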

# Resolve error in StructuredOutputParser docs

The documentation for `StructuredOutputParser` is currently not reproducible: `output_parser.parse(output)` raises an error because the LLM returns a response with an invalid format:

```python

_input = prompt.format_prompt(question="what's the capital of france")

output = model(_input.to_string())

output

# ?

#

# ```json

# {

# "answer": "Paris",

# "source": "https://www.worldatlas.com/articles/what-is-the-capital-of-france.html"

# }

# ```

```

This was fixed by adding a question mark to the prompt.

# Add QnA with sources example


Fixes: see

https://stackoverflow.com/questions/76207160/langchain-doesnt-work-with-weaviate-vector-database-getting-valueerror/76210017#76210017


## Who can review?

Community members can review the PR once tests pass. Tag

maintainers/contributors who might be interested:


@dev2049

# Bibtex integration

Wrap bibtexparser to retrieve a list of docs from a bibtex file.

* Get the metadata from the bibtex entries

* `page_content` is taken from the local PDF referenced in the `file` field of the bibtex entry, using `pymupdf`

* If there is no valid PDF file, `page_content` is set to the `abstract` field of the bibtex entry

* Supports the Zotero flavour, using a regex to get the file path

* Added usage example in

`docs/modules/indexes/document_loaders/examples/bibtex.ipynb`
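The fallback rule in the list above can be sketched as follows. This is an illustration, not the loader's code: `choose_page_content` is a hypothetical helper, the PDF reader is passed in as a callable so the example does not depend on `pymupdf`, and the file path is made up.

```python
import os
from typing import Callable, Dict


def choose_page_content(entry: Dict[str, str], read_pdf: Callable[[str], str]) -> str:
    """Return PDF text when the entry's file exists, else the abstract."""
    path = entry.get("file", "")
    if path.lower().endswith(".pdf") and os.path.isfile(path):
        return read_pdf(path)
    # No valid PDF: fall back to the abstract (empty string if absent).
    return entry.get("abstract", "")


entry = {
    "title": "An example entry",
    "file": "/hypothetical/missing/paper.pdf",
    "abstract": "A short abstract.",
}
content = choose_page_content(entry, read_pdf=lambda path: "text from " + path)
print(content)
```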

---------

Co-authored-by: Sébastien M. Popoff <sebastien.popoff@espci.fr>

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

I found an API key for `serpapi_api_key` while reading the docs. It

seems to have been modified very recently. Removed it in this PR

@hwchase17 - project lead

# fix a mistake in concepts.md

## Who can review?

Community members can review the PR once tests pass. Tag

maintainers/contributors who might be interested:

# Add Joplin document loader

[Joplin](https://joplinapp.org/) is an open source note-taking app.

Joplin has a [REST API](https://joplinapp.org/api/references/rest_api/)

for accessing its local database. The proposed `JoplinLoader` uses the

API to retrieve all notes in the database and their metadata. Joplin

needs to be installed and running locally, and an access token is

required.
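For orientation, a request for one page of notes could be built as in the sketch below. It only constructs the URL and makes no network call. The helper name, the token value, the field list and the port `41184` are assumptions for this example and are not taken from the `JoplinLoader` code.

```python
from urllib.parse import urlencode


def notes_url(
    token: str, page: int = 1, base_url: str = "http://localhost:41184"
) -> str:
    """Build the URL for one page of notes from a local Joplin instance."""
    query = urlencode(
        {
            "token": token,
            "fields": "id,title,body,created_time,updated_time",
            "page": page,
        }
    )
    return f"{base_url}/notes?{query}"


url = notes_url("hypothetical-token", page=2)
print(url)
```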

- The PR includes an integration test.

- The PR includes an example notebook.

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Beam

Calls the Beam API wrapper to deploy and make subsequent calls to an

instance of the gpt2 LLM in a cloud deployment. Requires installation of

the Beam library and registration of Beam Client ID and Client Secret.

Additional calls can then be made through the instance of the large

language model in your code or by calling the Beam API.

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Vectara Integration

This PR provides integration with Vectara. Implemented here are:

* langchain/vectorstore/vectara.py

* tests/integration_tests/vectorstores/test_vectara.py

* langchain/retrievers/vectara_retriever.py

And two IPYNB notebooks to do more testing:

* docs/modules/chains/index_examples/vectara_text_generation.ipynb

* docs/modules/indexes/vectorstores/examples/vectara.ipynb

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# DOCS added missed document_loader examples

Added missing examples: `JSON`, `Open Document Format (ODT)`,

`Wikipedia`, `tomarkdown`.

Updated them to a consistent format.

## Who can review?

@hwchase17

@dev2049

# Clarification of the reference to the "get_text_length" function in getting_started.md

The reference to the function "get_text_length" in the documentation did not make sense. A comment was added for clarification.

@hwchase17