Provide shared memory capability for the Agent.

Inspired by #1293.

## Problem

If the Agent and its Tools (e.g., an LLMChain) share the same memory, both of them save the context to it, which can clutter the shared history in some cases.

## Solution

Create a memory wrapper that ignores save and clear operations, so that the Agent or Tools using it can read the shared memory without updating it.
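A minimal sketch of the wrapper idea, assuming a memory object with `load_memory_variables`, `save_context`, and `clear` methods. These method names are modeled on LangChain's memory interface. `SimpleSharedMemory` and `ReadOnlyMemoryWrapper` are hypothetical stand-ins, not the classes added by this PR. Reads pass through to the shared memory, and writes are dropped.

```python
from typing import Any, Dict, List


class SimpleSharedMemory:
    """Hypothetical stand-in for a memory shared by an Agent and its Tools."""

    def __init__(self) -> None:
        self.history: List[str] = []

    def load_memory_variables(self, inputs: Dict[str, Any]) -> Dict[str, str]:
        # Expose the accumulated history to whoever reads the memory.
        return {"history": "\n".join(self.history)}

    def save_context(self, inputs: Dict[str, Any], outputs: Dict[str, str]) -> None:
        # Record one exchange.
        self.history.append(f"Human: {inputs['input']}")
        self.history.append(f"AI: {outputs['output']}")

    def clear(self) -> None:
        self.history.clear()


class ReadOnlyMemoryWrapper:
    """Hypothetical wrapper: reads pass through, save and clear do nothing."""

    def __init__(self, memory: SimpleSharedMemory) -> None:
        self.memory = memory

    def load_memory_variables(self, inputs: Dict[str, Any]) -> Dict[str, str]:
        # Reads are delegated to the underlying shared memory.
        return self.memory.load_memory_variables(inputs)

    def save_context(self, inputs: Dict[str, Any], outputs: Dict[str, str]) -> None:
        # Intentionally ignored: the wrapped component must not write.
        pass

    def clear(self) -> None:
        # Intentionally ignored: the wrapped component must not wipe shared state.
        pass


if __name__ == "__main__":
    shared = SimpleSharedMemory()                # the Agent writes here
    tool_memory = ReadOnlyMemoryWrapper(shared)  # a Tool only reads
    shared.save_context({"input": "hi"}, {"output": "hello"})
    tool_memory.save_context({"input": "ignored"}, {"output": "ignored"})
    tool_memory.clear()
    print(tool_memory.load_memory_variables({})["history"])
```

The Agent keeps the writable memory and each Tool receives the wrapper. Both see the same history, but only the Agent's exchanges are recorded.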

A simple CSV document loader that wraps the `csv` reader and produces a single `Document` per row.

Each value is prefixed with its column header. This gives useful context for embedding and semantic search.
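A minimal sketch of the row-to-document approach using the standard library's `csv.DictReader`. The `Document` dataclass and `load_csv_rows` function are hypothetical stand-ins, not the loader's actual API. Each row becomes one document, and every value is written as a `header: value` line.

```python
import csv
import io
from dataclasses import dataclass, field
from typing import Dict, List, TextIO


@dataclass
class Document:
    """Hypothetical stand-in for a loader's Document type."""
    page_content: str
    metadata: Dict[str, object] = field(default_factory=dict)


def load_csv_rows(f: TextIO, source: str = "") -> List[Document]:
    """Return one Document per CSV row, prefixing each value with its header."""
    docs: List[Document] = []
    reader = csv.DictReader(f)  # the first row supplies the column headers
    for i, row in enumerate(reader):
        # "header: value" lines keep the column meaning next to the value.
        content = "\n".join(f"{header}: {value}" for header, value in row.items())
        docs.append(Document(page_content=content, metadata={"source": source, "row": i}))
    return docs


if __name__ == "__main__":
    sample = io.StringIO("name,city\nAda,London\nGrace,Arlington\n")
    for doc in load_csv_rows(sample, source="people.csv"):
        print(doc.page_content)
        print("---")
```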

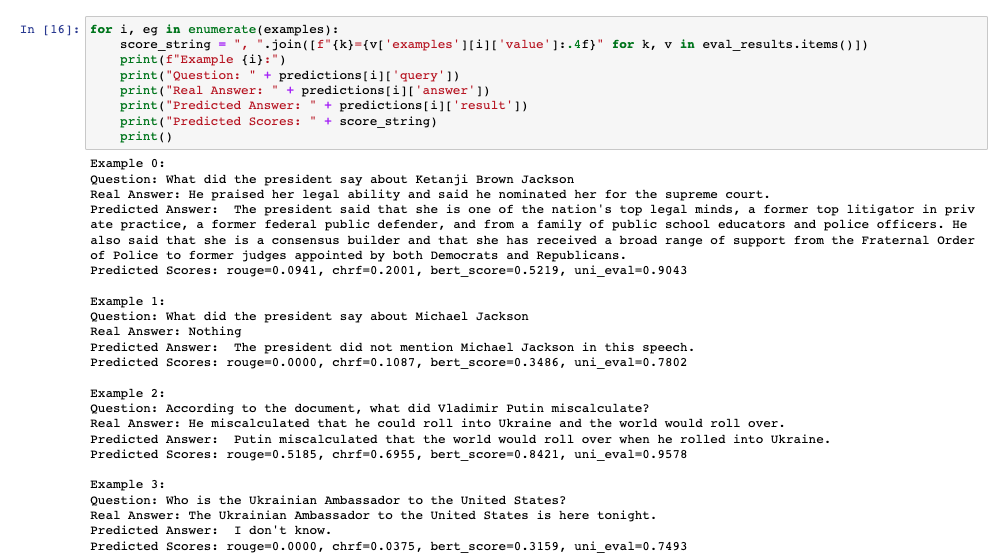

This PR adds additional evaluation metrics for data-augmented QA,

resulting in a report like this at the end of the notebook:

The score calculation is based on the [Critique](https://docs.inspiredco.ai/critique/) toolkit, an API-based toolkit (like OpenAI) with minimal dependencies, so it should be easy for people to run if they choose.

The code could be simplified further by adding a chain that calls Critique directly. That is probably better saved for a separate PR if needed. Any comments or change requests are welcome!

This pull request proposes an update to the Lightweight wrapper library's documentation. The current documentation gives the following example of how to use the library's `requests.run` method: `requests.run("https://www.google.com")`. However, this example does not work with version 0.0.102 of the library.

Testing:

The changes have been tested locally to ensure they work as intended.

Thank you for considering this pull request.

Different PDF libraries have different strengths and weaknesses. PyMuPDF does a good job of extracting the most content from a document regardless of the source quality. It is also extremely fast, especially compared to Unstructured.

https://pymupdf.readthedocs.io/en/latest/index.html

- Added instructions on setting up a self-hosted searx instance
- Added a notebook example with an agent
- Used `localhost:8888` as the example URL for consistency, since public instances are not really usable.

Co-authored-by: blob42 <spike@w530>

The YAML and JSON examples of prompt serialization now produce a strange `No '_type' key found, defaulting to 'prompt'` message when you run them yourself or copy the format of the files. This harmless warning appears because the `_type` key was missing from the config files, so they are parsed as a standard prompt.

This could confuse new users. It confused me after upgrading from 0.0.85 to 0.0.86+, when my few_shot prompts needed a `_type` added to the example_prompt config. For clarity, this update adds the `_type` key to the examples.

Obviously this is not critical because the warning is harmless. However, it could be confusing to track down, or a new user could mistake it for an error, so this update should resolve that.
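A minimal sketch of the defaulting behavior described above. `resolve_prompt_type` is a hypothetical helper, not the library's loader, and the real code may log the message rather than raise a Python warning. A config without `_type` triggers the message and falls back to `"prompt"`. Including the key explicitly, as the updated examples do, keeps the load silent.

```python
import json
import warnings
from typing import Any, Dict, Tuple


def resolve_prompt_type(config: Dict[str, Any]) -> Tuple[str, Dict[str, Any]]:
    """Hypothetical sketch of how a loader might pick the prompt type."""
    config = dict(config)  # work on a copy so the caller's dict is untouched
    if "_type" not in config:
        # Harmless, but easy to mistake for an error.
        warnings.warn("No '_type' key found, defaulting to 'prompt'")
    prompt_type = config.pop("_type", "prompt")
    return prompt_type, config


# A JSON prompt file that states its type explicitly, so no warning is emitted.
EXPLICIT_PROMPT = json.loads(
    """
    {
      "_type": "prompt",
      "input_variables": ["adjective", "content"],
      "template": "Tell me a {adjective} joke about {content}."
    }
    """
)

if __name__ == "__main__":
    prompt_type, rest = resolve_prompt_type(EXPLICIT_PROMPT)
    print(prompt_type, rest["input_variables"])
```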

This PR:

- Bumps `qdrant-client` to version 1.0.4
- Introduces custom content and metadata keys (as requested in #1087)
- Moves all the `QdrantClient` parameters into the method parameters to simplify code completion
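A minimal sketch of what custom content and metadata keys allow: the text and metadata of a document are stored under caller-chosen payload keys instead of fixed ones. This helps with collections created outside the library. The helper functions and the `content_payload_key` / `metadata_payload_key` parameter names are hypothetical here and may not match the PR's actual signatures.

```python
from dataclasses import dataclass, field
from typing import Any, Dict


@dataclass
class Document:
    """Hypothetical stand-in for a Document with text and metadata."""
    page_content: str
    metadata: Dict[str, Any] = field(default_factory=dict)


def document_to_payload(
    doc: Document,
    content_payload_key: str = "page_content",
    metadata_payload_key: str = "metadata",
) -> Dict[str, Any]:
    # Store text and metadata under configurable keys.
    return {content_payload_key: doc.page_content, metadata_payload_key: doc.metadata}


def payload_to_document(
    payload: Dict[str, Any],
    content_payload_key: str = "page_content",
    metadata_payload_key: str = "metadata",
) -> Document:
    # Read back with the same keys; missing metadata becomes an empty dict.
    return Document(
        page_content=payload[content_payload_key],
        metadata=payload.get(metadata_payload_key) or {},
    )


if __name__ == "__main__":
    # e.g. an existing collection whose points store the text under "text"
    payload = {"text": "Qdrant stores vectors.", "meta": {"source": "notes.md"}}
    print(payload_to_document(payload, "text", "meta"))
```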

This PR adds:

* `ZeroShotAgent.as_sql_agent`, which returns an agent for interacting with a SQL database. This builds on `SQLDatabaseChain`. The main advantages are 1) answering general questions about the database, 2) access to a tool for double-checking queries, and 3) recovering from errors.
* `ZeroShotAgent.as_json_agent`, which returns an agent for interacting with JSON blobs.
* Several notebook examples

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

Currently, table information is gathered through SQLAlchemy as the complete table DDL plus a user-selected number of sample rows from each table.

This PR adds the option to supply user-defined table information instead of collecting it automatically. The provided information is used where available. For tables without user-provided information, the automatic gathering is used as a fallback.
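A minimal sketch of the fallback logic, using the standard library's `sqlite3` in place of SQLAlchemy for the automatic part. `build_table_info` and `sqlite_auto_info` are hypothetical helpers, and the output layout (DDL followed by sample rows in a comment) is an assumption, not the PR's exact prompt format.

```python
import sqlite3
from typing import Callable, Dict, Iterable, Optional


def sqlite_auto_info(conn: sqlite3.Connection, table: str, sample_rows: int = 3) -> str:
    """Stand-in for automatic gathering: table DDL plus the first n rows."""
    ddl = conn.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = ?", (table,)
    ).fetchone()[0]
    # Identifiers can't be bound as parameters; assumes trusted table names.
    rows = conn.execute(f'SELECT * FROM "{table}" LIMIT ?', (sample_rows,)).fetchall()
    sample = "\n".join("\t".join(str(v) for v in row) for row in rows)
    return f"{ddl}\n/*\n{sample}\n*/"


def build_table_info(
    tables: Iterable[str],
    auto_info: Callable[[str], str],
    custom_table_info: Optional[Dict[str, str]] = None,
) -> str:
    """Use user-provided info where it exists; fall back to auto_info otherwise."""
    custom = custom_table_info or {}
    return "\n\n".join(custom[t] if t in custom else auto_info(t) for t in tables)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER, name TEXT)")
    conn.execute("CREATE TABLE orders (id INTEGER, total REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 9.5), (2, 20.0)])
    # Hand-written info for "users"; "orders" falls back to the automatic path.
    custom = {"users": "CREATE TABLE users (id INTEGER)\n/* hand-picked rows */"}
    print(build_table_info(["users", "orders"], lambda t: sqlite_auto_info(conn, t), custom))
```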

Off the top of my head, there are a few cases where this can be quite useful:

- The first n rows of a table are uninformative or very similar to one another. In this case, hand-crafting example rows that provide good, diverse information can be very helpful. Another approach to consider later is sampling n random rows instead of taking the first n, but that has performance considerations. Even so, hand-crafting the sample rows is useful and can guarantee the model sees informative data.
- The user doesn't want every column to be available to the model. This is not an elegant way to meet that need, since the user has to provide the full table definition rather than a simple list of columns to include or ignore, but it does work.
- For developers, this makes it much easier to compare and benchmark different prompting structures for presenting table information in the prompt.

These are cases I've run into myself (particularly cases 1 and 3), and I've found these changes useful. Personally, I keep custom table info for a few tables in a YAML file for versioning and easy loading.

Definitely open to other opinions/approaches, though!

iFixit is a Wikipedia-like site with a huge amount of open content on how to fix things. This includes questions and answers for common troubleshooting, and other "things"-related content that is more technical in nature. All content is licensed under CC BY-NC-SA 3.0.

Adding docs from iFixit as context for user questions like "I dropped my phone in water, what do I do?" or "My MacBook Pro is making a whining noise, what's wrong with it?" can yield significantly better responses than context-free responses from LLMs.