The Python `wikipedia` package provides easy access to searching and fetching pages from Wikipedia; see https://pypi.org/project/wikipedia/.

It can serve as an additional search and retrieval tool, like the existing Google and SerpAPI helpers, for both chains and agents.
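As a rough illustration, the sketch below wraps the package's `wikipedia.search` and `wikipedia.summary` functions in a small helper with a single `run(query)` method. The class name `WikipediaSearchTool`, the `top_k` parameter, and the output format are hypothetical, not the wrapper added by this PR. The lookup functions can be swapped for stubs, which keeps the example testable offline.

```python
from typing import Callable, List, Optional


class WikipediaSearchTool:
    """Hypothetical search-and-retrieve helper built on the `wikipedia` package."""

    def __init__(
        self,
        top_k: int = 3,
        search_fn: Optional[Callable[[str], List[str]]] = None,
        summary_fn: Optional[Callable[[str], str]] = None,
    ) -> None:
        if search_fn is None or summary_fn is None:
            # Lazy import, so the package is only required for real lookups.
            import wikipedia

            search_fn = search_fn or wikipedia.search
            summary_fn = summary_fn or wikipedia.summary
        self.top_k = top_k
        self.search_fn = search_fn
        self.summary_fn = summary_fn

    def run(self, query: str) -> str:
        """Return summaries of the top matching pages as one string."""
        snippets = []
        for title in self.search_fn(query)[: self.top_k]:
            try:
                summary = self.summary_fn(title)
            except Exception:
                # Skip titles that fail to resolve, e.g. a
                # wikipedia.exceptions.DisambiguationError or PageError.
                continue
            snippets.append(f"Page: {title}\nSummary: {summary}")
        return "\n\n".join(snippets) or "No good Wikipedia search result was found"
```

With the package installed, `WikipediaSearchTool().run("Alan Turing")` would query the live Wikipedia API; an agent tool would simply call `run` with the model's query string.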

First of all, big kudos on what you guys are doing! LangChain enables some really amazing use cases, and I'm having lots of fun playing around with it. It's really cool how many data sources it supports out of the box.

However, I noticed some limitations of the current `GitbookLoader`, which this PR addresses:

The main change is that I added an optional `base_url` argument to `GitbookLoader`. This enables use cases where one wants to crawl docs from a start page other than the index page. For example, the following call would scrape all pages that are reachable via nav bar links from "https://docs.zenml.io/v/0.35.0":

```python
from langchain.document_loaders import GitbookLoader

loader = GitbookLoader(
    web_page="https://docs.zenml.io/v/0.35.0",
    load_all_paths=True,
    base_url="https://docs.zenml.io",
)
docs = loader.load()
```

Previously, this would fail because relative links would be of the form `/v/0.35.0/...`, so the full link URLs would become `docs.zenml.io/v/0.35.0/v/0.35.0/...`.

I also fixed another issue in `GitbookLoader` where link URLs were constructed incorrectly as `website//relative_url` if the provided `web_page` had a trailing slash.
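Both URL problems can be illustrated with a small helper. `build_page_url` is a hypothetical function, not the loader's actual code: it joins a root-relative nav-bar link onto `base_url` (falling back to `web_page`) and strips any trailing slash, so `//` cannot appear.

```python
from typing import Optional


def build_page_url(web_page: str, relative_path: str, base_url: Optional[str] = None) -> str:
    """Hypothetical helper: join a root-relative nav-bar link onto the site root."""
    # Without an explicit base_url, the start page is treated as the root,
    # which duplicates path prefixes such as /v/0.35.0.
    root = (base_url or web_page).rstrip("/")
    # Normalize the slash between root and path so "//" never appears.
    return root + "/" + relative_path.lstrip("/")


print(build_page_url("https://docs.zenml.io/v/0.35.0", "/v/0.35.0/intro", base_url="https://docs.zenml.io"))
# https://docs.zenml.io/v/0.35.0/intro
```

Calling it without `base_url` reproduces the old duplicated `/v/0.35.0/v/0.35.0/` prefix, which is why the start page and the site root need to be configurable separately.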

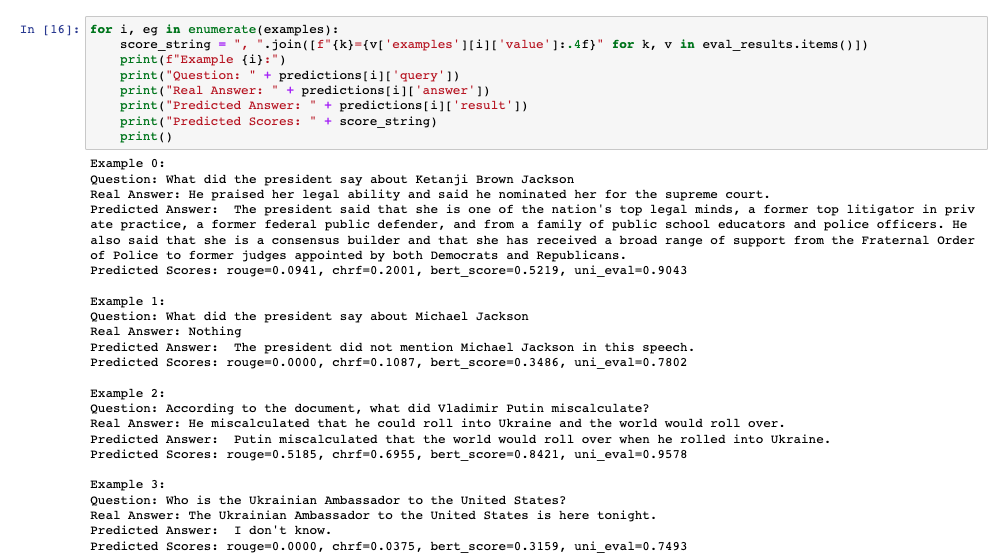

This PR adds additional evaluation metrics for data-augmented QA, resulting in a report like this at the end of the notebook:

The score calculation is based on the [Critique](https://docs.inspiredco.ai/critique/) toolkit, an API-based toolkit (like OpenAI) that has minimal dependencies, so it should be easy for people to run if they choose.

The code could be simplified further by adding a chain that calls Critique directly, but that should probably be saved for another PR if necessary. Any comments or change requests are welcome!

# Problem

The ChromaDB vector store only supported local connections; there was no way to use a Chroma server.

# Fix

Added `client_settings` as a `Chroma` attribute.

# Usage

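```python
from chromadb.config import Settings
from langchain.vectorstores import Chroma

# Connect to a running Chroma server over REST instead of local storage.
chroma_settings = Settings(
    chroma_api_impl="rest",
    chroma_server_host="localhost",
    chroma_server_http_port="80",
)

# `chunks` (a list of Documents), `embeddings`, and COLLECTION_NAME are defined
# elsewhere; each Document already carries its own metadata.
docsearch = Chroma.from_documents(
    chunks, embeddings, client_settings=chroma_settings, collection_name=COLLECTION_NAME
)
```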

Resolves https://github.com/hwchase17/langchain/issues/1510

### Problem

When loading S3 objects with `/` in the object key (e.g. `folder/some-document.txt`) using `S3FileLoader`, the objects are downloaded into a temporary directory and saved as files.

This errors out when the parent directory does not exist within the temporary directory.

See https://github.com/hwchase17/langchain/issues/1510#issuecomment-1459583696 for how to reproduce this bug.

### What this PR does

Creates parent directories based on the object key. This also works with deeply nested keys such as `folder/subfolder/some-document.txt`.
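The fix amounts to creating the key's parent folders before writing the file. A minimal sketch, assuming a hypothetical helper `local_path_for_key` that maps a key to a path inside the temporary directory; in a loader, the returned path would then be handed to boto3's `download_file(bucket, key, path)`.

```python
import os
import tempfile


def local_path_for_key(temp_dir: str, key: str) -> str:
    """Hypothetical helper: map an S3 object key to a file path under temp_dir."""
    file_path = os.path.join(temp_dir, key)
    # Keys such as "folder/subfolder/some-document.txt" need their parent
    # folders to exist before the object can be written; exist_ok=True makes
    # the call a no-op for flat keys or folders created earlier.
    os.makedirs(os.path.dirname(file_path), exist_ok=True)
    return file_path


with tempfile.TemporaryDirectory() as temp_dir:
    path = local_path_for_key(temp_dir, "folder/subfolder/some-document.txt")
    # Stand-in for the actual download step.
    with open(path, "w") as f:
        f.write("example contents")
    print(os.path.exists(path))  # True
```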

This pull request proposes an update to the Lightweight wrapper documentation. The current documentation shows how to use the `requests.run` method as follows: `requests.run("https://www.google.com")`. However, this example does not work in version 0.0.102 of the library.

Testing:

The changes have been tested locally to ensure they work as intended.

Thank you for considering this pull request.

Solves https://github.com/hwchase17/langchain/issues/1412

Currently, `OpenAIChat` inherits its token-counting method, `get_num_tokens`, from `BaseLLM`. On the other hand, `OpenAI` inherits it from `BaseOpenAI`. `BaseOpenAI` and `BaseLLM` use different methods for this: the first relies on `tiktoken`, while the second relies on `GPT2TokenizerFast`.

The motivations for this PR are:

1. Make token counting (`get_num_tokens`) consistent across the `OpenAI` family, regardless of chat vs. non-chat scenarios.
2. Prefer the `tiktoken` method because it is serverless friendly: it doesn't require downloading models, which might be incompatible with read-only filesystems.

Fix an issue that occurs when using `OpenAIChat` with `llm_math`, following the code style used for "Final Answer:" in MRKL. I found the issue while trying `OpenAIChat` with `llm_math`: when I asked a question in Chinese, the model generated output in the format "\n\nQuestion: What is the square of 29?\nAnswer: 841", translating the question first and then answering. Below is my snapshot:

<img width="945" alt="snapshot" src="https://user-images.githubusercontent.com/82029664/222642193-10ecca77-db7b-4759-bc46-32a8f8ddc48f.png">
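The parsing idea, borrowed from how MRKL handles "Final Answer:", is to look for the `Answer:` marker anywhere in the output instead of only at the start. A minimal sketch; `extract_answer` is a hypothetical name, not the chain's actual method.

```python
def extract_answer(llm_output: str) -> str:
    """Return the final 'Answer: ...' text, even if the model restated the question first."""
    marker = "Answer:"
    text = llm_output.strip()
    if marker in text:
        # Keep only what follows the last marker, as MRKL does for "Final Answer:".
        return f"{marker} {text.split(marker)[-1].strip()}"
    raise ValueError(f"unknown format from LLM: {llm_output!r}")


print(extract_answer("\n\nQuestion: What is the square of 29?\nAnswer: 841"))  # Answer: 841
```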

Hello! Thank you for the amazing library you've created!

While following the [Using an example selector](https://langchain.readthedocs.io/en/latest/modules/prompts/examples/few_shot_examples.html#using-an-example-selector) tutorial, I noticed that passing `Chroma` as an argument to `from_examples` results in a type hint error.

Error message (mypy):

```
Argument 3 to "from_examples" of "SemanticSimilarityExampleSelector" has incompatible type "Type[Chroma]"; expected "VectorStore" [arg-type]mypy(error)
```

This pull request fixes the type hint so that a `VectorStore` class (such as `Chroma`) can be passed as an argument.
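The difference between the two annotations is whether the function receives an instance or the class itself. The stand-ins below (`VectorStore`, `InMemoryStore`, `from_examples`) are simplified hypothetical versions, not LangChain's classes; they only show why `Type[VectorStore]` is the right hint when a class such as `Chroma` is passed and its constructor classmethod is called.

```python
from abc import ABC, abstractmethod
from typing import List, Type


class VectorStore(ABC):
    """Simplified stand-in for an abstract vector store."""

    @classmethod
    @abstractmethod
    def from_texts(cls, texts: List[str]) -> "VectorStore":
        """Build a store from raw texts."""


class InMemoryStore(VectorStore):
    """Hypothetical concrete store, playing the role of Chroma."""

    def __init__(self, texts: List[str]) -> None:
        self.texts = texts

    @classmethod
    def from_texts(cls, texts: List[str]) -> "InMemoryStore":
        return cls(list(texts))


def from_examples(examples: List[str], vectorstore_cls: Type[VectorStore]) -> VectorStore:
    # Annotated as Type[VectorStore]: the caller passes the class itself, and
    # mypy accepts any subclass such as InMemoryStore. A bare `VectorStore`
    # annotation would demand an instance and reject the class.
    return vectorstore_cls.from_texts(examples)


store = from_examples(["2 + 2 = 4", "3 + 3 = 6"], InMemoryStore)
print(type(store).__name__)  # InMemoryStore
```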

Different PDF libraries have different strengths and weaknesses. PyMuPDF does a good job of extracting the most content from a document regardless of source quality, and it is extremely fast (especially compared to Unstructured).

https://pymupdf.readthedocs.io/en/latest/index.html

- Added instructions on setting up a self-hosted searx instance
- Added a notebook example with an agent
- Used `localhost:8888` as the example URL for consistency, since public instances are not really usable
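Once an instance is running on `localhost:8888`, it can be queried over HTTP. A minimal sketch using only the standard library, assuming the instance has the JSON output format enabled (some installs require adding `json` to the allowed formats in `settings.yml`); the helper names are hypothetical.

```python
import json
import urllib.request
from typing import List, Tuple
from urllib.parse import urlencode


def build_search_url(host: str, query: str) -> str:
    """Build a searx query URL that asks for JSON output."""
    return f"{host.rstrip('/')}/search?" + urlencode({"q": query, "format": "json"})


def searx_search(host: str, query: str) -> List[Tuple[str, str]]:
    """Return (title, url) pairs from a searx instance's JSON response."""
    with urllib.request.urlopen(build_search_url(host, query), timeout=10) as resp:
        data = json.load(resp)
    return [(r.get("title", ""), r.get("url", "")) for r in data.get("results", [])]


print(build_search_url("http://localhost:8888", "langchain agents"))
# http://localhost:8888/search?q=langchain+agents&format=json
```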

Co-authored-by: blob42 <spike@w530>