# Add instructions to pyproject.toml

* Add instructions to pyproject.toml about how to handle optional dependencies.


---------

Co-authored-by: Davis Chase <130488702+dev2049@users.noreply.github.com>

Co-authored-by: Zander Chase <130414180+vowelparrot@users.noreply.github.com>

# Better docs for weaviate hybrid search


Fixes: NA


## Who can review?

Community members can review the PR once tests pass. Tag maintainers/contributors who might be interested:


@dev2049

# Fixed passing creds to VertexAI LLM

Fixes #5279

It looks like we should drop a type annotation for Credentials.

Co-authored-by: Leonid Kuligin <kuligin@google.com>

# Update contribution guidelines and PR template

This PR updates the contribution guidelines to include more information on how to handle optional dependencies.

The PR template is updated to include a link to the contribution guidelines document.

# Add example to LLMMath to help with power operator

Add an example to LLMMath that helps the model interpret `^` as the power operator rather than the Python XOR operator.
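The ambiguity is easy to reproduce in plain Python, where `^` is bitwise XOR and `**` is exponentiation. The snippet below only illustrates that difference; the `caret_to_power` helper is hypothetical and is not part of LLMMath.

```python
# In Python, `^` is bitwise XOR, while `**` is the power operator.
xor_result = 2 ^ 3     # 0b10 XOR 0b11 == 0b01
power_result = 2 ** 3  # two to the third power


def caret_to_power(expression: str) -> str:
    """Hypothetical helper: rewrite a math-style `^` as Python's `**`."""
    return expression.replace("^", "**")


print(xor_result)                      # 1
print(power_result)                    # 8
print(caret_to_power("37593^(1/5)"))   # 37593**(1/5)
```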

This PR adds an LLM wrapper for Databricks. It supports two endpoint types:

* serving endpoint

* cluster driver proxy app

An integration notebook is included to show how it works.

Co-authored-by: Davis Chase <130488702+dev2049@users.noreply.github.com>

Co-authored-by: Gengliang Wang <gengliang@apache.org>

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Fixed typo: 'ouput' to 'output' in all documentation

This PR corrects the typo 'ouput' to 'output' everywhere it occurs in the documentation. No dependencies are required for this change.

# Add Momento as a standard cache and chat message history provider

This PR adds Momento as a standard caching provider: it implements the interface and adds integration tests and documentation. It also adds Momento as a chat message history provider, along with integration tests and documentation.

[Momento](https://www.gomomento.com/) is a fully serverless cache. Similar to S3 or DynamoDB, it requires zero configuration or infrastructure management and is instantly available. Users sign up for free and get 50GB of data in/out for free every month.

## Before submitting

✅ We have added documentation, notebooks, and integration tests demonstrating usage.

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Handle the BigQuery dialect when setting the schema

Adds an `if` statement to handle the BigQuery SQL dialect. Previously, using the BigQuery dialect failed on `SET search_path TO`. This PR adds a condition that sets the dataset as the schema parameter, which is equivalent to `SET search_path TO`. I have tested it and it works.

## Who can review?

Community members can review the PR once tests pass. Tag maintainers/contributors who might be interested:

@dev2049

The current `HuggingFacePipeline.from_model_id` does not allow passing pipeline arguments to the transformers pipeline. This PR enables passing important pipeline parameters, for example `max_new_tokens`.

Prior to this PR, it was necessary to create the pipeline manually through Hugging Face transformers and then hand it to LangChain.

For example, instead of this:

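```py
from langchain.llms import HuggingFacePipeline
from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

model_id = "gpt2"
tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_pretrained(model_id)
# Build the transformers pipeline by hand so that max_new_tokens can be set.
pipe = pipeline(
    "text-generation", model=model, tokenizer=tokenizer, max_new_tokens=10
)
hf = HuggingFacePipeline(pipeline=pipe)
```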

you can write this:

```py
hf = HuggingFacePipeline.from_model_id(
    model_id="gpt2", task="text-generation", pipeline_kwargs={"max_new_tokens": 10}
)
```

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Add Multi-CSV/DF support in CSV and DataFrame Toolkits

* CSV and DataFrame toolkits now accept list of CSVs/DFs

* Add default prompts for many dataframes in `pandas_dataframe` toolkit

Fixes #1958

Potentially fixes #4423

## Testing

* Add single and multi-dataframe integration tests for `pandas_dataframe` toolkit with permutations of `include_df_in_prompt`

* Add single and multi-CSV integration tests for csv toolkit

---------

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

# Add C Transformers for GGML Models

I created Python bindings for the GGML models:

https://github.com/marella/ctransformers

Currently it supports GPT-2, GPT-J, GPT-NeoX, LLaMA, MPT, etc. See [Supported Models](https://github.com/marella/ctransformers#supported-models).

It provides a unified interface for all models:

```python
from langchain.llms import CTransformers

llm = CTransformers(model='/path/to/ggml-gpt-2.bin', model_type='gpt2')

print(llm('AI is going to'))
```

It can be used with models hosted on the Hugging Face Hub:

```py
llm = CTransformers(model='marella/gpt-2-ggml')
```

It supports streaming:

```py
from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler

llm = CTransformers(model='marella/gpt-2-ggml', callbacks=[StreamingStdOutCallbackHandler()])
```

Please see the [README](https://github.com/marella/ctransformers#readme) for more details.

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

zep-python's sync methods no longer need an asyncio wrapper, which was causing issues with FastAPI deployment.

Zep also now supports putting and getting arbitrary message metadata.

* Bump zep-python version to v0.30.
* Remove nest-asyncio from Zep example notebooks.
* Modify tests to include metadata.

---------

Co-authored-by: Daniel Chalef <daniel.chalef@private.org>

Co-authored-by: Daniel Chalef <131175+danielchalef@users.noreply.github.com>

Fixes a regression in JoplinLoader that was introduced during code review (a bad `page` wildcard in `_get_note_url`).

## Who can review?

Community members can review the PR once tests pass. Tag maintainers/contributors who might be interested:

@dev2049

@leo-gan

For most queries it's the `size` parameter that determines final number

of documents to return. Since our abstractions refer to this as `k`, set

this to be `k` everywhere instead of expecting a separate param. Would

be great to have someone more familiar with OpenSearch validate that

this is reasonable (e.g. that having `size` and what OpenSearch calls

`k` be the same won't lead to any strange behavior). cc @naveentatikonda
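As a rough sketch of what "set `size` to `k`" means, the helper below builds an approximate k-NN query body in which both values come from the same argument. The function name, its defaults, and the `vector_field` name are assumptions made for illustration; the body shape follows the OpenSearch k-NN query format as I understand it and is not taken from this PR.

```python
from typing import Any, Dict, List


def approximate_search_query(
    query_vector: List[float], k: int = 4, vector_field: str = "vector_field"
) -> Dict[str, Any]:
    """Hypothetical helper: build a k-NN query body where `size` follows `k`."""
    return {
        "size": k,  # number of hits returned in the response
        "query": {"knn": {vector_field: {"vector": query_vector, "k": k}}},
    }


body = approximate_search_query([0.1, 0.2, 0.3], k=2)
print(body["size"], body["query"]["knn"]["vector_field"]["k"])  # 2 2
```

With a single argument driving both fields, a caller cannot ask for `k` neighbours and then receive a different number of documents because `size` was left at its default.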

Closes #5212

# Resolve error in StructuredOutputParser docs

The documentation for `StructuredOutputParser` is currently not reproducible: `output_parser.parse(output)` raises an error because the LLM returns a response with an invalid format:

```python
_input = prompt.format_prompt(question="what's the capital of france")
output = model(_input.to_string())
output
# ?
#
# ```json
# {
#     "answer": "Paris",
#     "source": "https://www.worldatlas.com/articles/what-is-the-capital-of-france.html"
# }
# ```
```

This was fixed by adding a question mark to the prompt.

# Add QnA with sources example


Fixes: see

https://stackoverflow.com/questions/76207160/langchain-doesnt-work-with-weaviate-vector-database-getting-valueerror/76210017#76210017


## Who can review?

Community members can review the PR once tests pass. Tag maintainers/contributors who might be interested:


@dev2049

# Bibtex integration

Wrap bibtexparser to retrieve a list of docs from a bibtex file.

* Get the metadata from the bibtex entries
* Get `page_content` from the local PDF referenced in the `file` field of the bibtex entry, using `pymupdf`
* If there is no valid PDF file, set `page_content` to the `abstract` field of the bibtex entry
* Support the Zotero flavour, using a regex to get the file path
* Add a usage example in `docs/modules/indexes/document_loaders/examples/bibtex.ipynb`

---------

Co-authored-by: Sébastien M. Popoff <sebastien.popoff@espci.fr>

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Allow to specify ID when adding to the FAISS vectorstore

This change allows unique IDs to be specified when adding documents / embeddings to a FAISS vectorstore.

- This reflects the current approach with the Chroma vectorstore.
- It allows rejection of inserts on duplicate IDs.
- It will allow deletion / update by searching on a deterministic ID (such as a hash).
- If not specified, a random UUID is generated (as per previous behaviour, so non-breaking).
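The ID semantics listed above can be sketched with a small in-memory stand-in. `TinyStore` and `content_id` are hypothetical names invented for this illustration; they are not the FAISS vectorstore's API and only mirror the behaviour described: caller-supplied IDs, rejection of duplicates, and a random UUID fallback.

```python
import hashlib
import uuid
from typing import Dict, List, Optional


class TinyStore:
    """Hypothetical in-memory stand-in for a vector store's ID bookkeeping."""

    def __init__(self) -> None:
        self.docs: Dict[str, str] = {}

    def add_texts(self, texts: List[str], ids: Optional[List[str]] = None) -> List[str]:
        # Fall back to random UUIDs when the caller supplies no IDs.
        if ids is None:
            ids = [str(uuid.uuid4()) for _ in texts]
        if len(ids) != len(texts):
            raise ValueError("ids and texts must have the same length")
        # Reject the whole insert if any ID is repeated or already stored.
        if len(set(ids)) != len(ids) or any(i in self.docs for i in ids):
            raise ValueError("duplicate IDs are not allowed")
        self.docs.update(zip(ids, texts))
        return ids


def content_id(text: str) -> str:
    """Deterministic ID derived from the text itself."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


store = TinyStore()
store.add_texts(["hello"], ids=[content_id("hello")])
try:
    store.add_texts(["hello"], ids=[content_id("hello")])
except ValueError as err:
    print(err)  # duplicate IDs are not allowed
```

Because the ID is a hash of the content, the same document always maps to the same key, which is what makes later lookup, update, or deletion by ID possible.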

This commit fixes #5065 and #3896 and should fix #2699 indirectly. I've tested adding and merging.

Kindly tagging @Xmaster6y @dev2049 for review.

---------

Co-authored-by: Ati Sharma <ati@agalmic.ltd>

Co-authored-by: Harrison Chase <hw.chase.17@gmail.com>

# Change Default GoogleDriveLoader Behavior to not Load Trashed Files (issue #5104)

Fixes #5104

If you need the previous behavior of loading files that used to live in the folder but are now trashed, you can use the `load_trashed_files` parameter:

```py
loader = GoogleDriveLoader(
    folder_id="1yucgL9WGgWZdM1TOuKkeghlPizuzMYb5",
    recursive=False,
    load_trashed_files=True
)
```

Since not loading trashed files should be the expected behavior, should we:

1. even provide the `load_trashed_files` parameter?
2. add documentation? It feels like most users will stick with the default behavior.

## Who can review?

Community members can review the PR once tests pass. Tag maintainers/contributors who might be interested:

DataLoaders

- @eyurtsev

Twitter: [@nicholasliu77](https://twitter.com/nicholasliu77)

I found an API key for `serpapi_api_key` while reading the docs. It seems to have been modified very recently. This PR removes it.

@hwchase17 - project lead

Copies `GraphIndexCreator.from_text()` to make an async version called

`GraphIndexCreator.afrom_text()`.

This is (should be) a trivial change: it just adds a copy of

`GraphIndexCreator.from_text()` which is async and awaits a call to

`chain.apredict()` instead of `chain.predict()`. There is no unit test

for GraphIndexCreator, and I did not create one, but this code works for

me locally.
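The pattern described here, a sync method plus an async twin that awaits the chain's async call, can be sketched as follows. `EchoChain` and `IndexCreator` are hypothetical stand-ins written for this illustration; they are not the real `GraphIndexCreator` or LLM chain, and the upper-casing is a placeholder for the actual prediction.

```python
import asyncio


class EchoChain:
    """Hypothetical stand-in for a chain with sync and async predict methods."""

    def predict(self, text: str) -> str:
        return text.upper()

    async def apredict(self, text: str) -> str:
        await asyncio.sleep(0)  # yield to the event loop, as real I/O would
        return text.upper()


class IndexCreator:
    """Hypothetical creator showing a sync method and its async twin."""

    def __init__(self, chain: EchoChain) -> None:
        self.chain = chain

    def from_text(self, text: str) -> str:
        return self.chain.predict(text=text)

    async def afrom_text(self, text: str) -> str:
        # Same body as from_text, but awaiting the async call.
        return await self.chain.apredict(text=text)


creator = IndexCreator(EchoChain())
sync_result = creator.from_text("a knows b")
async_result = asyncio.run(creator.afrom_text("a knows b"))
print(sync_result == async_result)  # True
```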

@agola11 @hwchase17

# Fix a mistake in concepts.md

## Who can review?

Community members can review the PR once tests pass. Tag maintainers/contributors who might be interested:

Example:

```
$ langchain plus start --expose
...
$ langchain plus status
The LangChainPlus server is currently running.

Service             Status         Published Ports
langchain-backend   Up 40 seconds  1984
langchain-db        Up 41 seconds  5433
langchain-frontend  Up 40 seconds  80
ngrok               Up 41 seconds  4040

To connect, set the following environment variables in your LangChain application:
LANGCHAIN_TRACING_V2=true
LANGCHAIN_ENDPOINT=https://5cef-70-23-89-158.ngrok.io

$ langchain plus stop
$ langchain plus status
The LangChainPlus server is not running.
$ langchain plus start
The LangChainPlus server is currently running.

Service             Status        Published Ports
langchain-backend   Up 5 seconds  1984
langchain-db        Up 6 seconds  5433
langchain-frontend  Up 5 seconds  80

To connect, set the following environment variables in your LangChain application:
LANGCHAIN_TRACING_V2=true
LANGCHAIN_ENDPOINT=http://localhost:1984
```

# Add Joplin document loader

[Joplin](https://joplinapp.org/) is an open source note-taking app.

Joplin has a [REST API](https://joplinapp.org/api/references/rest_api/) for accessing its local database. The proposed `JoplinLoader` uses the API to retrieve all notes in the database and their metadata. Joplin needs to be installed and running locally, and an access token is required.

- The PR includes an integration test.

- The PR includes an example notebook.

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

## Description

The HTML structure of readthedocs sites can differ. Currently, the HTML tag is hardcoded in the reader, so it does not fit some cases. This PR includes the following changes:

1. Replace `find_all` with `find` because we just want one tag.
2. Provide `custom_html_tag` to the loader.
3. Add tests for the readthedocs loader.
4. Refactor code.

## Issues

See more in https://github.com/hwchase17/langchain/pull/2609. The problem was not completely fixed in that PR.

---------

Signed-off-by: byhsu <byhsu@linkedin.com>

Co-authored-by: byhsu <byhsu@linkedin.com>

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# Output parsing variation allowance for self-ask with search

This change makes self-ask with search easier for Llama models to

follow, as they tend toward returning 'Followup:' instead of 'Follow

up:' despite an otherwise valid remaining output.
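A minimal sketch of this kind of tolerance is shown below: the last line of the model output is checked against both spellings. The `extract_followup` helper and the `FOLLOWUP_PREFIXES` tuple are hypothetical names for illustration and are not the actual self-ask output parser.

```python
from typing import Optional

# Both spellings are accepted; the real parser's accepted list may differ.
FOLLOWUP_PREFIXES = ("Follow up:", "Followup:")


def extract_followup(llm_output: str) -> Optional[str]:
    """Hypothetical helper: return the follow-up question on the last line, if any."""
    last_line = llm_output.strip().split("\n")[-1]
    for prefix in FOLLOWUP_PREFIXES:
        if last_line.startswith(prefix):
            return last_line[len(prefix):].strip()
    return None


print(extract_followup("Yes.\nFollowup: Who directed Jaws?"))   # Who directed Jaws?
print(extract_followup("Yes.\nFollow up: Who directed Jaws?"))  # Who directed Jaws?
```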

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

`vectorstore.PGVector`: The transactional boundary should be increased to cover the query itself

Currently, within `similarity_search_with_score_by_vector`, the transactional boundary (created via the `Session` call) does not include the select query being made.

This can result in unintended consequences when interacting with the PGVector instance methods directly.

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

# OpenAI finetuned model giving zero tokens cost

Very simple fix to the previously committed solution for allowing finetuned OpenAI models.

Improves #5127

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

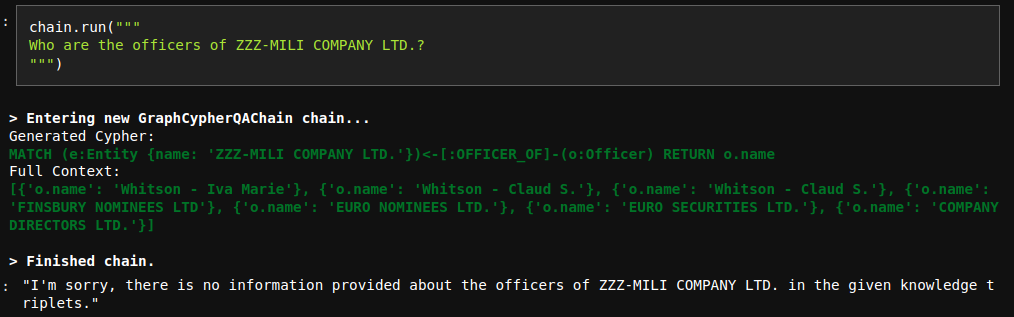

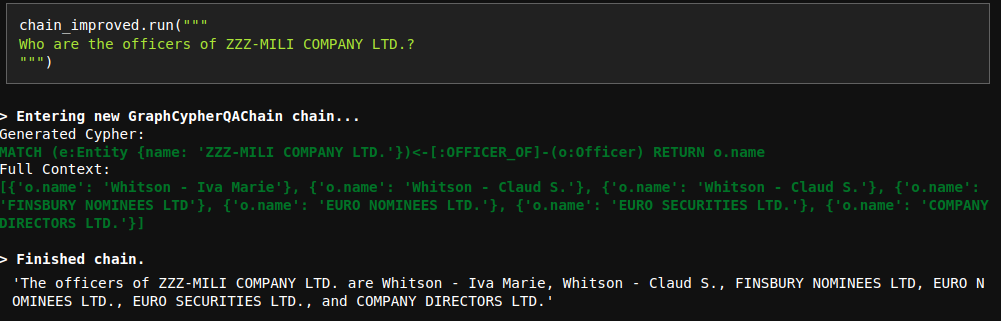

# Improve Cypher QA prompt

The current QA prompt is optimized for networkX answer generation, which returns all the possible triples. However, Cypher search is a bit more focused and doesn't necessarily return all the context information. For that reason, the model sometimes refuses to generate an answer even though the information is provided.

To fix this issue, I have updated the prompt. Interestingly, I tried many variations with fewer instructions and they didn't work properly. However, the current fix works nicely.

# Reuse `length_func` in `MapReduceDocumentsChain`

Pretty straightforward refactor in `MapReduceDocumentsChain`: reuse the local variable `length_func` instead of the longer alternative `self.combine_document_chain.prompt_length`.

@hwchase17