2022-12-20 01:32:45 +00:00

{

"cells": [

{

2023-05-02 02:07:26 +00:00

"attachments": {},

2022-12-20 01:32:45 +00:00

"cell_type": "markdown",

"id": "5436020b",

"metadata": {},

"source": [

"# Defining Custom Tools\n",

"\n",

2023-02-18 21:40:43 +00:00

"When constructing your own agent, you will need to provide it with a list of Tools that it can use. Besides the actual function that is called, the Tool consists of several components:\n",

2022-12-20 01:32:45 +00:00

"\n",

2023-04-18 04:35:29 +00:00

"- name (str): required; must be unique within the set of tools provided to an agent\n",

"- description (str): optional but recommended, as the agent uses it to decide when to use the tool\n",

2023-02-18 21:40:43 +00:00

"- return_direct (bool): defaults to False\n",

2023-05-02 02:07:26 +00:00

"- args_schema (Pydantic BaseModel): optional but recommended; can be used to provide more information (e.g., few-shot examples) or validation for expected parameters.\n",

2022-12-20 01:32:45 +00:00

"\n",

"\n",

2023-05-02 02:07:26 +00:00

"There are two main ways to define a tool; we will cover both in the examples below."

2022-12-20 01:32:45 +00:00

]

},

{

"cell_type": "code",

2023-04-18 04:35:29 +00:00

"execution_count": 1,

2022-12-20 01:32:45 +00:00

"id": "1aaba18c",

2023-04-18 04:35:29 +00:00

"metadata": {

"tags": []

},

2022-12-20 01:32:45 +00:00

"outputs": [],

"source": [

"# Import things that are needed generically\n",

2023-04-18 04:35:29 +00:00

"from langchain import LLMMathChain, SerpAPIWrapper\n",

2023-05-02 02:07:26 +00:00

"from langchain.agents import AgentType, initialize_agent\n",

2023-04-18 04:35:29 +00:00

"from langchain.chat_models import ChatOpenAI\n",

2023-05-02 02:07:26 +00:00

"from langchain.tools import BaseTool, StructuredTool, Tool, tool"

2022-12-20 01:32:45 +00:00

]

},

{

"cell_type": "markdown",

"id": "8e2c3874",

"metadata": {},

"source": [

"Initialize the LLM to use for the agent."

]

},

{

"cell_type": "code",

2023-04-18 04:35:29 +00:00

"execution_count": 2,

2022-12-20 01:32:45 +00:00

"id": "36ed392e",

2023-04-18 04:35:29 +00:00

"metadata": {

"tags": []

},

2022-12-20 01:32:45 +00:00

"outputs": [],

"source": [

2023-04-18 04:35:29 +00:00

"llm = ChatOpenAI(temperature=0)"

2022-12-20 01:32:45 +00:00

]

},

2022-12-28 14:03:16 +00:00

{

2023-05-02 02:07:26 +00:00

"attachments": {},

2022-12-28 14:03:16 +00:00

"cell_type": "markdown",

"id": "f8bc72c2",

"metadata": {},

"source": [

2023-05-02 02:07:26 +00:00

"## Completely New Tools - String Input and Output\n",

"\n",

"The simplest tools accept a single query string and return a string output. If your tool function requires multiple arguments, you might want to skip down to the `StructuredTool` section below.\n",

2023-02-18 21:40:43 +00:00

"\n",

"There are two ways to do this: either by using the Tool dataclass, or by subclassing the BaseTool class."

]

},

{

2023-05-02 02:07:26 +00:00

"attachments": {},

2023-02-18 21:40:43 +00:00

"cell_type": "markdown",

"id": "b63fcc3b",

"metadata": {},

"source": [

2023-05-02 02:07:26 +00:00

"### Tool dataclass\n",

"\n",

"The `Tool` dataclass wraps functions that accept a single string input and return a string output."

2022-12-28 14:03:16 +00:00

]

},

2022-12-20 01:32:45 +00:00

{

"cell_type": "code",

2023-01-06 14:40:32 +00:00

"execution_count": 3,

2022-12-20 01:32:45 +00:00

"id": "56ff7670",

2023-04-18 04:35:29 +00:00

"metadata": {

"tags": []

},

2023-05-02 02:07:26 +00:00

"outputs": [

{

"name": "stderr",

"output_type": "stream",

"text": [

"/Users/wfh/code/lc/lckg/langchain/chains/llm_math/base.py:50: UserWarning: Directly instantiating an LLMMathChain with an llm is deprecated. Please instantiate with llm_chain argument or using the from_llm class method.\n",

" warnings.warn(\n"

]

}

],

2022-12-20 01:32:45 +00:00

"source": [

"# Load the tool configs that are needed.\n",

"search = SerpAPIWrapper()\n",

"llm_math_chain = LLMMathChain(llm=llm, verbose=True)\n",

"tools = [\n",

2023-05-02 02:07:26 +00:00

" Tool.from_function(\n",

2022-12-20 01:32:45 +00:00

" func=search.run,\n",

2023-06-16 18:52:56 +00:00

" name=\"Search\",\n",

2022-12-20 01:32:45 +00:00

" description=\"useful for when you need to answer questions about current events\"\n",

2023-05-02 02:07:26 +00:00

" # coroutine= ... <- you can specify an async method if desired as well\n",

2022-12-20 01:32:45 +00:00

" ),\n",

2023-05-02 02:07:26 +00:00

"]"

]

},

{

"attachments": {},

"cell_type": "markdown",

"id": "e9b560f7",

"metadata": {},

"source": [

"You can also define a custom `args_schema` to provide more information about inputs."

]

},

{

"cell_type": "code",

"execution_count": 4,

"id": "631361e7",

"metadata": {},

"outputs": [],

"source": [

2023-04-19 01:18:33 +00:00

"from pydantic import BaseModel, Field\n",

"\n",

2023-06-16 18:52:56 +00:00

"\n",

2023-04-19 01:18:33 +00:00

"class CalculatorInput(BaseModel):\n",

2023-04-19 05:43:52 +00:00

" question: str = Field()\n",

2023-06-16 18:52:56 +00:00

"\n",

2023-04-19 01:18:33 +00:00

"\n",

"tools.append(\n",

2023-05-02 02:07:26 +00:00

" Tool.from_function(\n",

2022-12-20 01:32:45 +00:00

" func=llm_math_chain.run,\n",

2023-05-02 02:07:26 +00:00

" name=\"Calculator\",\n",

2023-04-19 01:18:33 +00:00

" description=\"useful for when you need to answer questions about math\",\n",

" args_schema=CalculatorInput\n",

2023-05-02 02:07:26 +00:00

" # coroutine= ... <- you can specify an async method if desired as well\n",

2022-12-20 01:32:45 +00:00

" )\n",

2023-04-19 01:18:33 +00:00

")"

2022-12-20 01:32:45 +00:00

]

},

{

"cell_type": "code",

2023-05-02 02:07:26 +00:00

"execution_count": 5,

2022-12-20 01:32:45 +00:00

"id": "5b93047d",

2023-04-18 04:35:29 +00:00

"metadata": {

"tags": []

},

2022-12-20 01:32:45 +00:00

"outputs": [],

"source": [

"# Construct the agent. We will use the default agent type here.\n",

"# See documentation for a full list of options.\n",

2023-06-16 18:52:56 +00:00

"agent = initialize_agent(\n",

" tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True\n",

")"

2022-12-20 01:32:45 +00:00

]

},

{

"cell_type": "code",

2023-05-02 02:07:26 +00:00

"execution_count": 6,

2022-12-20 01:32:45 +00:00

"id": "6f96a891",

2023-04-18 04:35:29 +00:00

"metadata": {

"tags": []

},

2022-12-20 01:32:45 +00:00

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"\n",

"\n",

"\u001b[1m> Entering new AgentExecutor chain...\u001b[0m\n",

2023-04-18 04:35:29 +00:00

"\u001b[32;1m\u001b[1;3mI need to find out Leo DiCaprio's girlfriend's name and her age\n",

"Action: Search\n",

2023-05-02 02:07:26 +00:00

"Action Input: \"Leo DiCaprio girlfriend\"\u001b[0m\n",

"Observation: \u001b[36;1m\u001b[1;3mAfter rumours of a romance with Gigi Hadid, the Oscar winner has seemingly moved on. First being linked to the television personality in September 2022, it appears as if his \"age bracket\" has moved up. This follows his rumoured relationship with mere 19-year-old Eden Polani.\u001b[0m\n",

"Thought:\u001b[32;1m\u001b[1;3mI still need to find out his current girlfriend's name and age\n",

"Action: Search\n",

"Action Input: \"Leo DiCaprio current girlfriend\"\u001b[0m\n",

"Observation: \u001b[36;1m\u001b[1;3mJust Jared on Instagram: “Leonardo DiCaprio & girlfriend Camila Morrone couple up for a lunch date!\u001b[0m\n",

"Thought:\u001b[32;1m\u001b[1;3mNow that I know his girlfriend's name is Camila Morrone, I need to find her current age\n",

"Action: Search\n",

"Action Input: \"Camila Morrone age\"\u001b[0m\n",

"Observation: \u001b[36;1m\u001b[1;3m25 years\u001b[0m\n",

"Thought:\u001b[32;1m\u001b[1;3mNow that I have her age, I need to calculate her age raised to the 0.43 power\n",

2022-12-20 01:32:45 +00:00

"Action: Calculator\n",

2023-04-19 01:18:33 +00:00

"Action Input: 25^(0.43)\u001b[0m\n",

2022-12-20 01:32:45 +00:00

"\n",

"\u001b[1m> Entering new LLMMathChain chain...\u001b[0m\n",

2023-04-19 01:18:33 +00:00

"25^(0.43)\u001b[32;1m\u001b[1;3m```text\n",

"25**(0.43)\n",

2022-12-20 01:32:45 +00:00

"```\n",

2023-04-19 01:18:33 +00:00

"...numexpr.evaluate(\"25**(0.43)\")...\n",

2022-12-20 01:32:45 +00:00

"\u001b[0m\n",

2023-04-19 01:18:33 +00:00

"Answer: \u001b[33;1m\u001b[1;3m3.991298452658078\u001b[0m\n",

2023-02-18 21:40:43 +00:00

"\u001b[1m> Finished chain.\u001b[0m\n",

2023-05-02 02:07:26 +00:00

"\n",

"Observation: \u001b[33;1m\u001b[1;3mAnswer: 3.991298452658078\u001b[0m\n",

"Thought:\u001b[32;1m\u001b[1;3mI now know the final answer\n",

"Final Answer: Camila Morrone's current age raised to the 0.43 power is approximately 3.99.\u001b[0m\n",

2023-02-18 21:40:43 +00:00

"\n",

"\u001b[1m> Finished chain.\u001b[0m\n"

2022-12-28 14:03:16 +00:00

]

},

{

"data": {

"text/plain": [

2023-05-02 02:07:26 +00:00

"\"Camila Morrone's current age raised to the 0.43 power is approximately 3.99.\""

2022-12-28 14:03:16 +00:00

]

},

2023-05-02 02:07:26 +00:00

"execution_count": 6,

2022-12-28 14:03:16 +00:00

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

2023-06-16 18:52:56 +00:00

"agent.run(\n",

" \"Who is Leo DiCaprio's girlfriend? What is her current age raised to the 0.43 power?\"\n",

")"

2023-02-18 21:40:43 +00:00

]

},

{

2023-05-02 02:07:26 +00:00

"attachments": {},

2023-02-18 21:40:43 +00:00

"cell_type": "markdown",

"id": "6f12eaf0",

"metadata": {},

"source": [

2023-05-02 02:07:26 +00:00

"### Subclassing the BaseTool class\n",

"\n",

"You can also directly subclass `BaseTool`. This is useful if you want more control over the instance variables or if you want to propagate callbacks to nested chains or other tools."

2023-02-18 21:40:43 +00:00

]

},

{

"cell_type": "code",

2023-05-02 02:07:26 +00:00

"execution_count": 7,

2023-02-18 21:40:43 +00:00

"id": "c58a7c40",

2023-04-18 04:35:29 +00:00

"metadata": {

"tags": []

},

2023-02-18 21:40:43 +00:00

"outputs": [],

"source": [

2023-05-02 02:07:26 +00:00

"from typing import Optional, Type\n",

"\n",

2023-06-16 18:52:56 +00:00

"from langchain.callbacks.manager import (\n",

" AsyncCallbackManagerForToolRun,\n",

" CallbackManagerForToolRun,\n",

")\n",

"\n",

2023-04-21 22:51:13 +00:00

"\n",

2023-02-18 21:40:43 +00:00

"class CustomSearchTool(BaseTool):\n",

2023-05-02 02:07:26 +00:00

" name = \"custom_search\"\n",

2023-02-18 21:40:43 +00:00

" description = \"useful for when you need to answer questions about current events\"\n",

"\n",

2023-06-16 18:52:56 +00:00

" def _run(\n",

" self, query: str, run_manager: Optional[CallbackManagerForToolRun] = None\n",

" ) -> str:\n",

2023-02-18 21:40:43 +00:00

" \"\"\"Use the tool.\"\"\"\n",

" return search.run(query)\n",

2023-06-16 18:52:56 +00:00

"\n",

" async def _arun(\n",

" self, query: str, run_manager: Optional[AsyncCallbackManagerForToolRun] = None\n",

" ) -> str:\n",

2023-02-18 21:40:43 +00:00

" \"\"\"Use the tool asynchronously.\"\"\"\n",

2023-05-02 02:07:26 +00:00

" raise NotImplementedError(\"custom_search does not support async\")\n",

2023-06-16 18:52:56 +00:00

"\n",

"\n",

2023-02-18 21:40:43 +00:00

"class CustomCalculatorTool(BaseTool):\n",

" name = \"Calculator\"\n",

" description = \"useful for when you need to answer questions about math\"\n",

2023-04-21 22:51:13 +00:00

" args_schema: Type[BaseModel] = CalculatorInput\n",

2023-02-18 21:40:43 +00:00

"\n",

2023-06-16 18:52:56 +00:00

" def _run(\n",

" self, query: str, run_manager: Optional[CallbackManagerForToolRun] = None\n",

" ) -> str:\n",

2023-02-18 21:40:43 +00:00

" \"\"\"Use the tool.\"\"\"\n",

" return llm_math_chain.run(query)\n",

2023-06-16 18:52:56 +00:00

"\n",

" async def _arun(\n",

" self, query: str, run_manager: Optional[AsyncCallbackManagerForToolRun] = None\n",

" ) -> str:\n",

2023-02-18 21:40:43 +00:00

" \"\"\"Use the tool asynchronously.\"\"\"\n",

2023-05-02 02:07:26 +00:00

" raise NotImplementedError(\"Calculator does not support async\")"

2023-02-18 21:40:43 +00:00

]

},

{

"cell_type": "code",

2023-04-18 04:35:29 +00:00

"execution_count": 8,

2023-05-02 02:07:26 +00:00

"id": "3318a46f",

2023-04-18 04:35:29 +00:00

"metadata": {

"tags": []

},

2023-02-18 21:40:43 +00:00

"outputs": [],

"source": [

2023-05-02 02:07:26 +00:00

"tools = [CustomSearchTool(), CustomCalculatorTool()]\n",

2023-06-16 18:52:56 +00:00

"agent = initialize_agent(\n",

" tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True\n",

")"

2023-02-18 21:40:43 +00:00

]

},

{

"cell_type": "code",

2023-04-18 04:35:29 +00:00

"execution_count": 9,

2023-02-18 21:40:43 +00:00

"id": "6a2cebbf",

2023-04-18 04:35:29 +00:00

"metadata": {

"tags": []

},

2023-02-18 21:40:43 +00:00

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"\n",

"\n",

"\u001b[1m> Entering new AgentExecutor chain...\u001b[0m\n",

2023-05-02 02:07:26 +00:00

"\u001b[32;1m\u001b[1;3mI need to use custom_search to find out who Leo DiCaprio's girlfriend is, and then use the Calculator to raise her age to the 0.43 power.\n",

"Action: custom_search\n",

"Action Input: \"Leo DiCaprio girlfriend\"\u001b[0m\n",

"Observation: \u001b[36;1m\u001b[1;3mAfter rumours of a romance with Gigi Hadid, the Oscar winner has seemingly moved on. First being linked to the television personality in September 2022, it appears as if his \"age bracket\" has moved up. This follows his rumoured relationship with mere 19-year-old Eden Polani.\u001b[0m\n",

"Thought:\u001b[32;1m\u001b[1;3mI need to find out the current age of Eden Polani.\n",

"Action: custom_search\n",

"Action Input: \"Eden Polani age\"\u001b[0m\n",

"Observation: \u001b[36;1m\u001b[1;3m19 years old\u001b[0m\n",

"Thought:\u001b[32;1m\u001b[1;3mNow I can use the Calculator to raise her age to the 0.43 power.\n",

2023-02-18 21:40:43 +00:00

"Action: Calculator\n",

2023-05-02 02:07:26 +00:00

"Action Input: 19 ^ 0.43\u001b[0m\n",

2023-02-18 21:40:43 +00:00

"\n",

"\u001b[1m> Entering new LLMMathChain chain...\u001b[0m\n",

2023-05-02 02:07:26 +00:00

"19 ^ 0.43\u001b[32;1m\u001b[1;3m```text\n",

"19 ** 0.43\n",

2023-02-18 21:40:43 +00:00

"```\n",

2023-05-02 02:07:26 +00:00

"...numexpr.evaluate(\"19 ** 0.43\")...\n",

2023-02-18 21:40:43 +00:00

"\u001b[0m\n",

2023-05-02 02:07:26 +00:00

"Answer: \u001b[33;1m\u001b[1;3m3.547023357958959\u001b[0m\n",

2023-02-18 21:40:43 +00:00

"\u001b[1m> Finished chain.\u001b[0m\n",

2023-05-02 02:07:26 +00:00

"\n",

"Observation: \u001b[33;1m\u001b[1;3mAnswer: 3.547023357958959\u001b[0m\n",

"Thought:\u001b[32;1m\u001b[1;3mI now know the final answer.\n",

"Final Answer: 3.547023357958959\u001b[0m\n",

2023-02-18 21:40:43 +00:00

"\n",

"\u001b[1m> Finished chain.\u001b[0m\n"

]

},

{

"data": {

"text/plain": [

2023-05-02 02:07:26 +00:00

"'3.547023357958959'"

2023-02-18 21:40:43 +00:00

]

},

2023-04-18 04:35:29 +00:00

"execution_count": 9,

2023-02-18 21:40:43 +00:00

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

2023-06-16 18:52:56 +00:00

"agent.run(\n",

" \"Who is Leo DiCaprio's girlfriend? What is her current age raised to the 0.43 power?\"\n",

")"

2022-12-28 14:03:16 +00:00

]

},

2023-01-29 02:26:24 +00:00

{

"cell_type": "markdown",

"id": "824eaf74",

"metadata": {},

"source": [

"### Using the `tool` decorator\n",

"\n",

"To make it easier to define custom tools, a `@tool` decorator is provided. This decorator can be used to quickly create a `Tool` from a simple function. The decorator uses the function name as the tool name by default, but this can be overridden by passing a string as the first argument. Additionally, the decorator will use the function's docstring as the tool's description."

]

},

{

"cell_type": "code",

2023-04-18 04:35:29 +00:00

"execution_count": 10,

2023-01-29 02:26:24 +00:00

"id": "8f15307d",

2023-04-18 04:35:29 +00:00

"metadata": {

"tags": []

},

2023-01-29 02:26:24 +00:00

"outputs": [],

"source": [

2023-05-02 02:07:26 +00:00

"from langchain.tools import tool\n",

2023-01-29 02:26:24 +00:00

"\n",

2023-06-16 18:52:56 +00:00

"\n",

2023-01-29 02:26:24 +00:00

"@tool\n",

"def search_api(query: str) -> str:\n",

" \"\"\"Searches the API for the query.\"\"\"\n",

2023-05-02 02:07:26 +00:00

" return f\"Results for query {query}\"\n",

"\n",

2023-06-16 18:52:56 +00:00

"\n",

2023-01-29 02:26:24 +00:00

"search_api"

]

},

{

"cell_type": "markdown",

"id": "cc6ee8c1",

"metadata": {},

"source": [

"You can also provide arguments like the tool name and whether to return directly."

]

},

{

"cell_type": "code",

2023-04-18 04:35:29 +00:00

"execution_count": 12,

2023-01-29 02:26:24 +00:00

"id": "28cdf04d",

2023-04-18 04:35:29 +00:00

"metadata": {

"tags": []

},

2023-01-29 02:26:24 +00:00

"outputs": [],

"source": [

"@tool(\"search\", return_direct=True)\n",

"def search_api(query: str) -> str:\n",

" \"\"\"Searches the API for the query.\"\"\"\n",

" return \"Results\""

]

},

{

"cell_type": "code",

2023-04-18 04:35:29 +00:00

"execution_count": 13,

2023-01-29 02:26:24 +00:00

"id": "1085a4bd",

"metadata": {},

"outputs": [

{

"data": {

"text/plain": [

2023-04-19 01:18:33 +00:00

"Tool(name='search', description='search(query: str) -> str - Searches the API for the query.', args_schema=<class 'pydantic.main.SearchApi'>, return_direct=True, verbose=False, callback_manager=<langchain.callbacks.shared.SharedCallbackManager object at 0x12748c4c0>, func=<function search_api at 0x16bd66310>, coroutine=None)"

2023-01-29 02:26:24 +00:00

]

},

2023-04-18 04:35:29 +00:00

"execution_count": 13,

2023-01-29 02:26:24 +00:00

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"search_api"

]

},

2023-04-19 01:18:33 +00:00

{

"cell_type": "markdown",

"id": "de34a6a3",

"metadata": {},

"source": [

"You can also pass `args_schema` to give more information about the argument."

]

},

{

"cell_type": "code",

"execution_count": 14,

"id": "f3a5c106",

"metadata": {},

"outputs": [],

"source": [

"class SearchInput(BaseModel):\n",

" query: str = Field(description=\"should be a search query\")\n",

2023-06-16 18:52:56 +00:00

"\n",

"\n",

2023-04-19 01:18:33 +00:00

"@tool(\"search\", return_direct=True, args_schema=SearchInput)\n",

"def search_api(query: str) -> str:\n",

" \"\"\"Searches the API for the query.\"\"\"\n",

" return \"Results\""

]

},

{

"cell_type": "code",

"execution_count": 15,

"id": "7914ba6b",

"metadata": {},

"outputs": [

{

"data": {

"text/plain": [

"Tool(name='search', description='search(query: str) -> str - Searches the API for the query.', args_schema=<class '__main__.SearchInput'>, return_direct=True, verbose=False, callback_manager=<langchain.callbacks.shared.SharedCallbackManager object at 0x12748c4c0>, func=<function search_api at 0x16bcf0ee0>, coroutine=None)"

]

},

"execution_count": 15,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"search_api"

]

},

2022-12-28 14:03:16 +00:00

{

2023-05-02 02:07:26 +00:00

"attachments": {},

"cell_type": "markdown",

"id": "61d2e80b",

"metadata": {},

"source": [

"## Custom Structured Tools\n",

"\n",

"If your functions require more structured arguments, you can use the `StructuredTool` class directly, or still subclass the `BaseTool` class."

]

},

{

"attachments": {},

"cell_type": "markdown",

"id": "5be41722",

"metadata": {},

"source": [

"### StructuredTool dataclass\n",

"\n",

"To dynamically generate a structured tool from a given function, the fastest way to get started is with `StructuredTool.from_function()`."

]

},

{

"cell_type": "code",

"execution_count": 10,

"id": "3c070216",

"metadata": {},

"outputs": [],

"source": [

"import requests\n",

"from langchain.tools import StructuredTool\n",

"\n",

2023-06-16 18:52:56 +00:00

"\n",

2023-05-02 02:07:26 +00:00

"def post_message(url: str, body: dict, parameters: Optional[dict] = None) -> str:\n",

" \"\"\"Sends a POST request to the given url with the given body and parameters.\"\"\"\n",

" result = requests.post(url, json=body, params=parameters)\n",

" return f\"Status: {result.status_code} - {result.text}\"\n",

"\n",

2023-06-16 18:52:56 +00:00

"\n",

2023-05-02 02:07:26 +00:00

"tool = StructuredTool.from_function(post_message)"

]

},

{

"attachments": {},

"cell_type": "markdown",

"id": "fb0a38eb",

"metadata": {},

"source": [

"### Subclassing the BaseTool\n",

"\n",

"The `BaseTool` automatically infers the schema from the `_run` method's signature."

]

},

{

"cell_type": "code",

"execution_count": 11,

"id": "7505c9c5",

"metadata": {},

"outputs": [],

"source": [

"from typing import Optional, Type\n",

"\n",

2023-06-16 18:52:56 +00:00

"from langchain.callbacks.manager import (\n",

" AsyncCallbackManagerForToolRun,\n",

" CallbackManagerForToolRun,\n",

")\n",

"\n",

"\n",

2023-05-02 02:07:26 +00:00

"class CustomSearchTool(BaseTool):\n",

" name = \"custom_search\"\n",

" description = \"useful for when you need to answer questions about current events\"\n",

"\n",

2023-06-16 18:52:56 +00:00

" def _run(\n",

" self,\n",

" query: str,\n",

" engine: str = \"google\",\n",

" gl: str = \"us\",\n",

" hl: str = \"en\",\n",

" run_manager: Optional[CallbackManagerForToolRun] = None,\n",

" ) -> str:\n",

2023-05-02 02:07:26 +00:00

" \"\"\"Use the tool.\"\"\"\n",

" search_wrapper = SerpAPIWrapper(params={\"engine\": engine, \"gl\": gl, \"hl\": hl})\n",

" return search_wrapper.run(query)\n",

2023-06-16 18:52:56 +00:00

"\n",

" async def _arun(\n",

" self,\n",

" query: str,\n",

" engine: str = \"google\",\n",

" gl: str = \"us\",\n",

" hl: str = \"en\",\n",

" run_manager: Optional[AsyncCallbackManagerForToolRun] = None,\n",

" ) -> str:\n",

2023-05-02 02:07:26 +00:00

" \"\"\"Use the tool asynchronously.\"\"\"\n",

" raise NotImplementedError(\"custom_search does not support async\")\n",

"\n",

"\n",

"# You can provide a custom args schema to add descriptions or custom validation\n",

"\n",

2023-06-16 18:52:56 +00:00

"\n",

2023-05-02 02:07:26 +00:00

"class SearchSchema(BaseModel):\n",

" query: str = Field(description=\"should be a search query\")\n",

" engine: str = Field(description=\"should be a search engine\")\n",

" gl: str = Field(description=\"should be a country code\")\n",

" hl: str = Field(description=\"should be a language code\")\n",

"\n",

2023-06-16 18:52:56 +00:00

"\n",

2023-05-02 02:07:26 +00:00

"class CustomSearchTool(BaseTool):\n",

" name = \"custom_search\"\n",

" description = \"useful for when you need to answer questions about current events\"\n",

" args_schema: Type[SearchSchema] = SearchSchema\n",

"\n",

2023-06-16 18:52:56 +00:00

" def _run(\n",

" self,\n",

" query: str,\n",

" engine: str = \"google\",\n",

" gl: str = \"us\",\n",

" hl: str = \"en\",\n",

" run_manager: Optional[CallbackManagerForToolRun] = None,\n",

" ) -> str:\n",

2023-05-02 02:07:26 +00:00

" \"\"\"Use the tool.\"\"\"\n",

" search_wrapper = SerpAPIWrapper(params={\"engine\": engine, \"gl\": gl, \"hl\": hl})\n",

" return search_wrapper.run(query)\n",

2023-06-16 18:52:56 +00:00

"\n",

" async def _arun(\n",

" self,\n",

" query: str,\n",

" engine: str = \"google\",\n",

" gl: str = \"us\",\n",

" hl: str = \"en\",\n",

" run_manager: Optional[AsyncCallbackManagerForToolRun] = None,\n",

" ) -> str:\n",

2023-05-02 02:07:26 +00:00

" \"\"\"Use the tool asynchronously.\"\"\"\n",

2023-06-16 18:52:56 +00:00

" raise NotImplementedError(\"custom_search does not support async\")"

2023-05-02 02:07:26 +00:00

]

},

{

"attachments": {},

"cell_type": "markdown",

"id": "7d68b0ac",

"metadata": {},

"source": [

"### Using the decorator\n",

"\n",

"The `tool` decorator creates a structured tool automatically if the signature has multiple arguments."

]

},

{

"cell_type": "code",

"execution_count": 12,

"id": "38d11416",

"metadata": {},

"outputs": [],

"source": [

"import requests\n",

"from langchain.tools import tool\n",

"\n",

2023-06-16 18:52:56 +00:00

"\n",

2023-05-02 02:07:26 +00:00

"@tool\n",

"def post_message(url: str, body: dict, parameters: Optional[dict] = None) -> str:\n",

" \"\"\"Sends a POST request to the given url with the given body and parameters.\"\"\"\n",

" result = requests.post(url, json=body, params=parameters)\n",

" return f\"Status: {result.status_code} - {result.text}\""

]

},

{

"attachments": {},

2022-12-28 14:03:16 +00:00

"cell_type": "markdown",

"id": "1d0430d6",

"metadata": {},

"source": [

"## Modify existing tools\n",

"\n",

2023-05-02 02:07:26 +00:00

"Now, we show how to load existing tools and modify them directly. In the example below, we do something really simple and change the Search tool to have the name `Google Search`."

2022-12-28 14:03:16 +00:00

]

},

{

"cell_type": "code",

2023-05-02 02:07:26 +00:00

"execution_count": 13,

2022-12-28 14:03:16 +00:00

"id": "79213f40",

"metadata": {},

"outputs": [],

"source": [

"from langchain.agents import load_tools"

]

},

{

"cell_type": "code",

2023-05-02 02:07:26 +00:00

"execution_count": 14,

2022-12-28 14:03:16 +00:00

"id": "e1067dcb",

"metadata": {},

"outputs": [],

"source": [

"tools = load_tools([\"serpapi\", \"llm-math\"], llm=llm)"

]

},

{

"cell_type": "code",

2023-05-02 02:07:26 +00:00

"execution_count": 15,

2022-12-28 14:03:16 +00:00

"id": "6c66ffe8",

"metadata": {},

"outputs": [],

"source": [

"tools[0].name = \"Google Search\""

]

},

{

"cell_type": "code",

2023-05-02 02:07:26 +00:00

"execution_count": 16,

2022-12-28 14:03:16 +00:00

"id": "f45b5bc3",

"metadata": {},

"outputs": [],

"source": [

2023-06-16 18:52:56 +00:00

"agent = initialize_agent(\n",

" tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True\n",

")"

2022-12-28 14:03:16 +00:00

]

},

{

"cell_type": "code",

2023-05-02 02:07:26 +00:00

"execution_count": 17,

2022-12-28 14:03:16 +00:00

"id": "565e2b9b",

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"\n",

"\n",

"\u001b[1m> Entering new AgentExecutor chain...\u001b[0m\n",

2023-04-18 04:35:29 +00:00

"\u001b[32;1m\u001b[1;3mI need to find out Leo DiCaprio's girlfriend's name and her age.\n",

2022-12-28 14:03:16 +00:00

"Action: Google Search\n",

2023-05-02 02:07:26 +00:00

"Action Input: \"Leo DiCaprio girlfriend\"\u001b[0m\n",

"Observation: \u001b[36;1m\u001b[1;3mAfter rumours of a romance with Gigi Hadid, the Oscar winner has seemingly moved on. First being linked to the television personality in September 2022, it appears as if his \"age bracket\" has moved up. This follows his rumoured relationship with mere 19-year-old Eden Polani.\u001b[0m\n",

"Thought:\u001b[32;1m\u001b[1;3mI still need to find out his current girlfriend's name and her age.\n",

"Action: Google Search\n",

"Action Input: \"Leo DiCaprio current girlfriend age\"\u001b[0m\n",

"Observation: \u001b[36;1m\u001b[1;3mLeonardo DiCaprio has been linked with 19-year-old model Eden Polani, continuing the rumour that he doesn't date any women over the age of ...\u001b[0m\n",

"Thought:\u001b[32;1m\u001b[1;3mI need to find out the age of Eden Polani.\n",

2022-12-28 14:03:16 +00:00

"Action: Calculator\n",

2023-05-02 02:07:26 +00:00

"Action Input: 19^(0.43)\u001b[0m\n",

"Observation: \u001b[33;1m\u001b[1;3mAnswer: 3.547023357958959\u001b[0m\n",

"Thought:\u001b[32;1m\u001b[1;3mI now know the final answer.\n",

"Final Answer: The age of Leo DiCaprio's girlfriend raised to the 0.43 power is approximately 3.55.\u001b[0m\n",

2023-02-18 21:40:43 +00:00

"\n",

"\u001b[1m> Finished chain.\u001b[0m\n"

2022-12-20 01:32:45 +00:00

]

},

{

"data": {

"text/plain": [

2023-05-02 02:07:26 +00:00

"\"The age of Leo DiCaprio's girlfriend raised to the 0.43 power is approximately 3.55.\""

2022-12-20 01:32:45 +00:00

]

},

2023-05-02 02:07:26 +00:00

"execution_count": 17,

2022-12-20 01:32:45 +00:00

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

2023-06-16 18:52:56 +00:00

"agent.run(\n",

" \"Who is Leo DiCaprio's girlfriend? What is her current age raised to the 0.43 power?\"\n",

")"

2022-12-20 01:32:45 +00:00

]

},

2023-01-06 14:40:32 +00:00

{

"cell_type": "markdown",

2023-01-06 14:56:11 +00:00

"id": "376813ed",

2023-01-06 14:40:32 +00:00

"metadata": {},

"source": [

2023-01-06 14:56:11 +00:00

"## Defining the priorities among Tools\n",

"When you create a custom tool, you may want the Agent to prefer it over the general-purpose tools.\n",

"\n",

"For example, suppose you have built a custom tool that retrieves information about music from your database. When a user asks about songs, you want the Agent to use the custom tool rather than the normal `Search` tool, but the Agent might still prioritize the normal Search tool.\n",

"\n",

"You can steer the Agent by adding a statement such as `Use this more than the normal search if the question is about Music, like 'who is the singer of yesterday?' or 'what is the most popular song in 2022?'` to the tool's description.\n",

"\n",

"An example is below."

]

},

{

"cell_type": "code",

2023-05-02 02:07:26 +00:00

"execution_count": 18,

2023-01-06 14:56:11 +00:00

"id": "3450512e",

"metadata": {},

"outputs": [],

"source": [

"# Import things that are needed generically\n",

"from langchain.agents import initialize_agent, Tool\n",

2023-04-04 14:21:50 +00:00

"from langchain.agents import AgentType\n",

2023-01-06 14:56:11 +00:00

"from langchain.llms import OpenAI\n",

"from langchain import LLMMathChain, SerpAPIWrapper\n",

2023-06-16 18:52:56 +00:00

"\n",

2023-01-06 14:56:11 +00:00

"search = SerpAPIWrapper()\n",

"tools = [\n",

" Tool(\n",

2023-06-16 18:52:56 +00:00

" name=\"Search\",\n",

2023-01-06 14:56:11 +00:00

" func=search.run,\n",

2023-06-16 18:52:56 +00:00

" description=\"useful for when you need to answer questions about current events\",\n",

2023-01-06 14:56:11 +00:00

" ),\n",

" Tool(\n",

" name=\"Music Search\",\n",

2023-06-16 18:52:56 +00:00

" func=lambda x: \"'All I Want For Christmas Is You' by Mariah Carey.\", # Mock Function\n",

2023-01-06 14:56:11 +00:00

" description=\"A Music search engine. Use this more than the normal search if the question is about Music, like 'who is the singer of yesterday?' or 'what is the most popular song in 2022?'\",\n",

2023-06-16 18:52:56 +00:00

" ),\n",

2023-01-06 14:56:11 +00:00

"]\n",

2023-01-06 14:40:32 +00:00

"\n",

2023-06-16 18:52:56 +00:00

"agent = initialize_agent(\n",

" tools,\n",

" OpenAI(temperature=0),\n",

" agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,\n",

" verbose=True,\n",

")"

2023-01-06 14:56:11 +00:00

]

},

{

"cell_type": "code",

2023-04-18 04:35:29 +00:00

"execution_count": 20,

2023-01-06 14:56:11 +00:00

"id": "4b9a7849",

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"\n",

"\n",

"\u001b[1m> Entering new AgentExecutor chain...\u001b[0m\n",

"\u001b[32;1m\u001b[1;3m I should use a music search engine to find the answer\n",

"Action: Music Search\n",

2023-04-18 04:35:29 +00:00

"Action Input: most famous song of christmas\u001b[0m\u001b[33;1m\u001b[1;3m'All I Want For Christmas Is You' by Mariah Carey.\u001b[0m\u001b[32;1m\u001b[1;3m I now know the final answer\n",

2023-01-06 14:56:11 +00:00

"Final Answer: 'All I Want For Christmas Is You' by Mariah Carey.\u001b[0m\n",

"\n",

"\u001b[1m> Finished chain.\u001b[0m\n"

]

},

{

"data": {

"text/plain": [

"\"'All I Want For Christmas Is You' by Mariah Carey.\""

]

},

2023-04-18 04:35:29 +00:00

"execution_count": 20,

2023-01-06 14:56:11 +00:00

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"agent.run(\"what is the most famous song of christmas\")"

]

},

{

"cell_type": "markdown",

"id": "bc477d43",

"metadata": {},

"source": [

"## Using tools to return directly\n",

"Often, it can be desirable to have a tool output returned directly to the user, if it’

2023-01-06 14:40:32 +00:00

]

},
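The effect of `return_direct` can be illustrated with a small, self-contained sketch. This is not LangChain's agent loop: `SimpleTool`, `run_scripted_agent`, and `fake_calculator` are hypothetical names, and the list of scripted steps stands in for the decisions an LLM would make. It only shows the control flow: when a tool marked `return_direct=True` is called, its output is returned as is, with no further reasoning step.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class SimpleTool:
    """Hypothetical stand-in for a tool: a name, a function, and the flag."""

    name: str
    func: Callable[[str], str]
    return_direct: bool = False


def run_scripted_agent(
    tools: List[SimpleTool],
    steps: List[Tuple[str, str]],
    final_answer: str,
) -> str:
    """Run scripted (tool_name, tool_input) steps in place of LLM decisions."""
    tools_by_name = {tool.name: tool for tool in tools}
    for tool_name, tool_input in steps:
        tool = tools_by_name[tool_name]
        observation = tool.func(tool_input)
        if tool.return_direct:
            # Skip any further reasoning and hand the raw tool output back.
            return observation
    # Without return_direct, the agent composes its own final answer.
    return final_answer


def fake_calculator(expression: str) -> str:
    # Mock function: echoes the expression instead of evaluating it.
    return "Answer: " + expression


steps = [("Calculator", "2**.12")]
direct = run_scripted_agent(
    [SimpleTool("Calculator", fake_calculator, return_direct=True)],
    steps,
    "The result is about 1.09.",
)
indirect = run_scripted_agent(
    [SimpleTool("Calculator", fake_calculator)],
    steps,
    "The result is about 1.09.",
)
print(direct)
print(indirect)
```

In the first call the tool's own string comes back unchanged; in the second the scripted final answer is returned instead.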

{

"cell_type": "code",

2023-04-18 04:35:29 +00:00

"execution_count": 21,

2023-01-06 14:56:11 +00:00

"id": "3bb6185f",

2023-01-06 14:40:32 +00:00

"metadata": {},

"outputs": [],

"source": [

"llm_math_chain = LLMMathChain(llm=llm)\n",

"tools = [\n",

" Tool(\n",

" name=\"Calculator\",\n",

" func=llm_math_chain.run,\n",

" description=\"useful for when you need to answer questions about math\",\n",

2023-06-16 18:52:56 +00:00

" return_direct=True,\n",

2023-01-06 14:40:32 +00:00

" )\n",

"]"

]

},

{

"cell_type": "code",

2023-04-18 04:35:29 +00:00

"execution_count": 22,

2023-01-06 14:56:11 +00:00

"id": "113ddb84",

2023-01-06 14:40:32 +00:00

"metadata": {},

"outputs": [],

"source": [

"llm = OpenAI(temperature=0)\n",

2023-06-16 18:52:56 +00:00

"agent = initialize_agent(\n",

" tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True\n",

")"

2023-01-06 14:40:32 +00:00

]

},

{

"cell_type": "code",

2023-04-18 04:35:29 +00:00

"execution_count": 23,

2023-01-06 14:56:11 +00:00

"id": "582439a6",

2023-04-18 04:35:29 +00:00

"metadata": {

"tags": []

},

2023-01-06 14:40:32 +00:00

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"\n",

"\n",

"\u001b[1m> Entering new AgentExecutor chain...\u001b[0m\n",

"\u001b[32;1m\u001b[1;3m I need to calculate this\n",

"Action: Calculator\n",

2023-04-18 04:35:29 +00:00

"Action Input: 2**.12\u001b[0m\u001b[36;1m\u001b[1;3mAnswer: 1.086734862526058\u001b[0m\u001b[32;1m\u001b[1;3m\u001b[0m\n",

2023-01-06 14:40:32 +00:00

"\n",

"\u001b[1m> Finished chain.\u001b[0m\n"

]

},

{

"data": {

"text/plain": [

2023-04-18 04:35:29 +00:00

"'Answer: 1.086734862526058'"

2023-01-06 14:40:32 +00:00

]

},

2023-04-18 04:35:29 +00:00

"execution_count": 23,

2023-01-06 14:40:32 +00:00

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"agent.run(\"whats 2**.12\")"

]

Add ToolException that a tool can throw. (#5050)

# Add ToolException that a tool can throw

This is an optional exception that a tool can throw when an execution error

occurs.

When this exception is thrown, the agent will not stop working, but will

handle the exception according to the handle_tool_error variable of the

tool, and the processing result will be returned to the agent as an

observation and printed in pink on the console. It can be used like this:

```python

from langchain.schema import ToolException

from langchain import LLMMathChain, SerpAPIWrapper, OpenAI

from langchain.agents import AgentType, initialize_agent

from langchain.chat_models import ChatOpenAI

from langchain.tools import BaseTool, StructuredTool, Tool, tool

from langchain.chat_models import ChatOpenAI

llm = ChatOpenAI(temperature=0)

llm_math_chain = LLMMathChain(llm=llm, verbose=True)

class Error_tool:

    def run(self, s: str):

        raise ToolException('The current search tool is not available.')

def handle_tool_error(error) -> str:

    return "The following errors occurred during tool execution:"+str(error)

search_tool1 = Error_tool()

search_tool2 = SerpAPIWrapper()

tools = [

    Tool.from_function(

        func=search_tool1.run,

        name="Search_tool1",

        description="useful for when you need to answer questions about current events.You should give priority to using it.",

        handle_tool_error=handle_tool_error,

    ),

    Tool.from_function(

        func=search_tool2.run,

        name="Search_tool2",

        description="useful for when you need to answer questions about current events",

        return_direct=True,

    )

]

agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True,

                         handle_tool_errors=handle_tool_error)

agent.run("Who is Leo DiCaprio's girlfriend? What is her current age raised to the 0.43 power?")

```

## Who can review?

- @vowelparrot

---------

Co-authored-by: Dev 2049 <dev.dev2049@gmail.com>

2023-05-29 20:05:58 +00:00

},

{

"attachments": {},

"cell_type": "markdown",

"id": "f1da459d",

"metadata": {},

"source": [

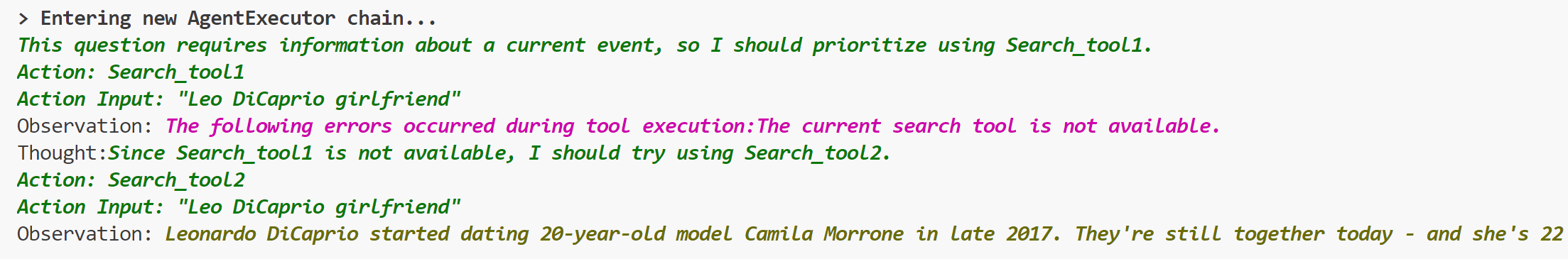

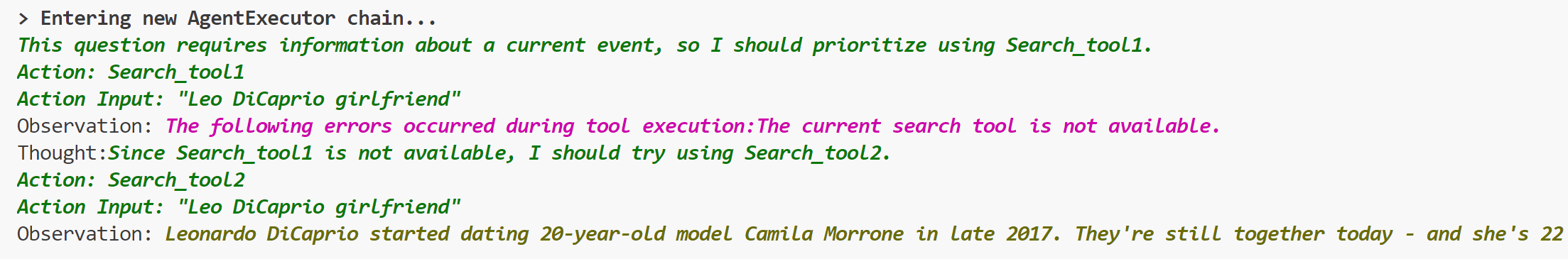

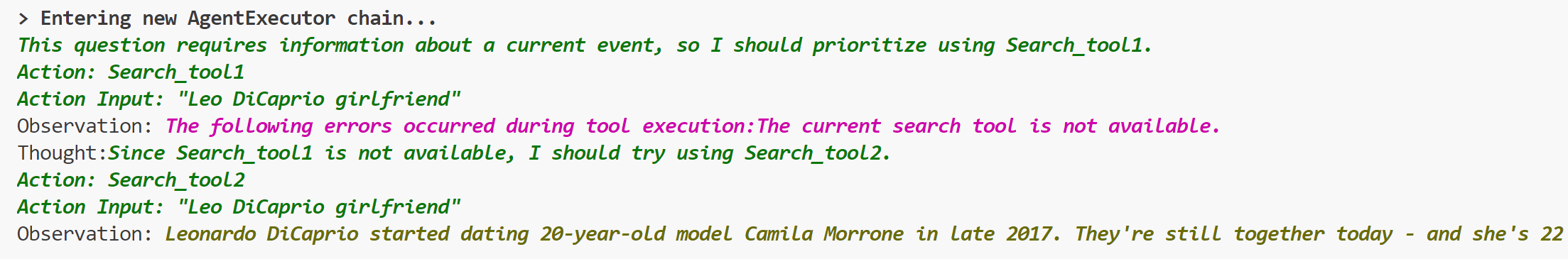

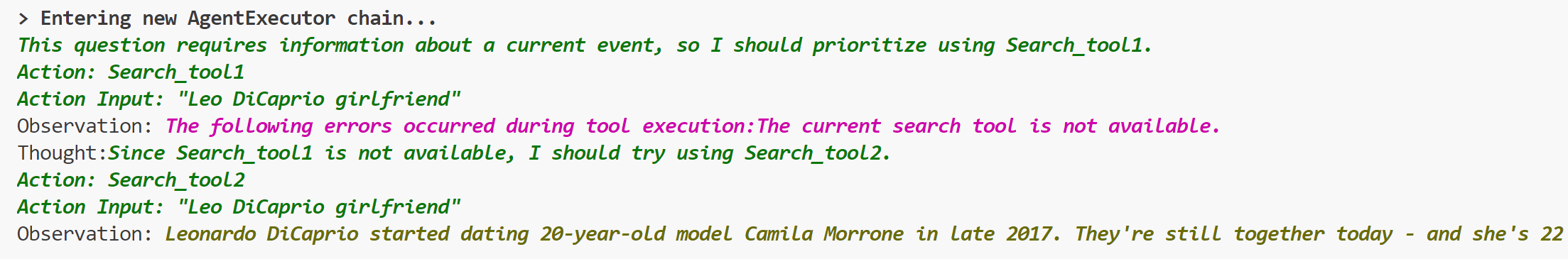

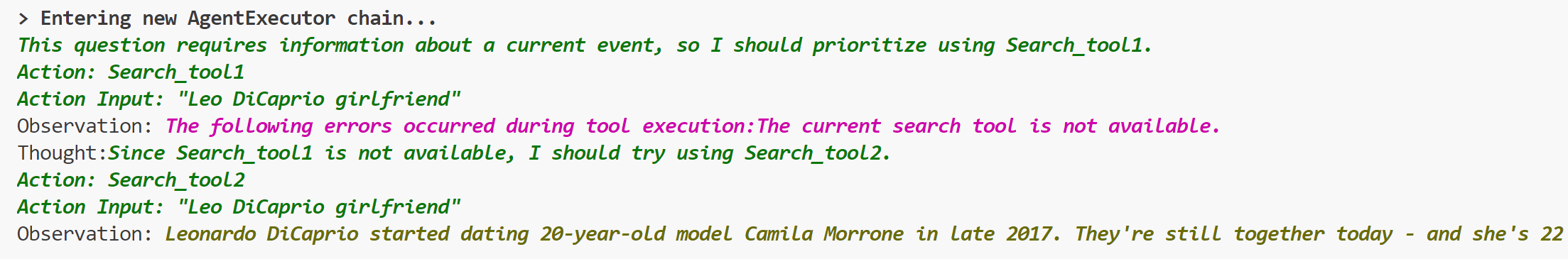

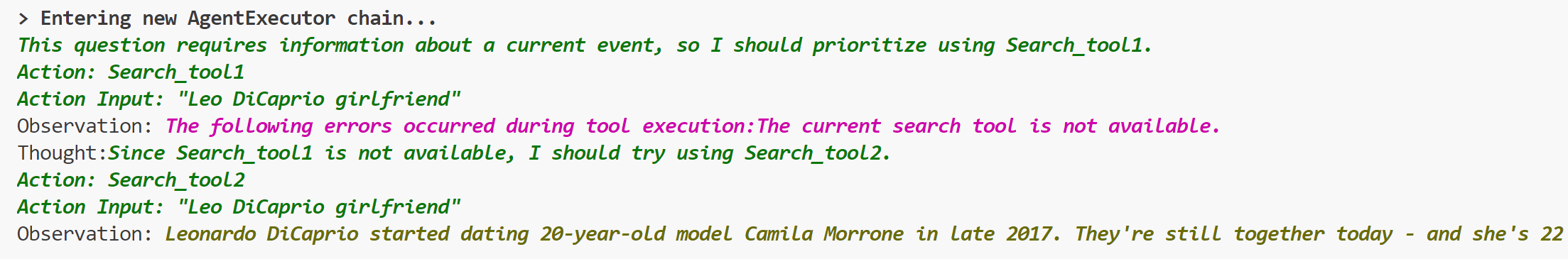

"## Handling Tool Errors \n",

"When a tool encounters an error and the exception is not caught, the agent will stop executing. If you want the agent to continue execution, you can raise a `ToolException` and set `handle_tool_error` accordingly. \n",

"\n",

"When `ToolException` is thrown, the agent will not stop working, but will handle the exception according to the `handle_tool_error` variable of the tool, and the processing result will be returned to the agent as observation, and printed in red.\n",

"\n",

"You can set `handle_tool_error` to `True`, set it a unified string value, or set it as a function. If it's set as a function, the function should take a `ToolException` as a parameter and return a `str` value.\n",

"\n",

"Please note that only raising a `ToolException` won't be effective. You need to first set the `handle_tool_error` of the tool because its default value is `False`."

]

},
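The three settings described above can be summarized in a small, self-contained sketch. It does not use LangChain: the `ToolException` class here is a local stand-in so the example runs on its own, and `run_tool` and `broken_search` are hypothetical names. The fallback message used when `handle_tool_error` is `True` is likewise an assumption, not a statement about the library's internals.

```python
from typing import Callable, Union


class ToolException(Exception):
    """Local stand-in for LangChain's ToolException, so the sketch runs alone."""


# The three documented settings: a bool, a fixed string, or a handler function.
ErrorHandler = Union[bool, str, Callable[[ToolException], str]]


def run_tool(
    func: Callable[[str], str],
    tool_input: str,
    handle_tool_error: ErrorHandler = False,
) -> str:
    """Hypothetical helper: call func and turn a ToolException into an observation."""
    try:
        return func(tool_input)
    except ToolException as error:
        if handle_tool_error is False:
            # Default: the exception propagates and the agent would stop.
            raise
        if handle_tool_error is True:
            # Assumption: the exception's own message becomes the observation.
            return str(error.args[0]) if error.args else "Tool execution error"
        if isinstance(handle_tool_error, str):
            # A fixed string replaces whatever the exception said.
            return handle_tool_error
        # Otherwise treat the setting as a function that takes the exception.
        return handle_tool_error(error)


def broken_search(query: str) -> str:
    raise ToolException("The search tool is not available.")


print(run_tool(broken_search, "query", handle_tool_error=True))
print(run_tool(broken_search, "query", handle_tool_error="Please try another tool."))
print(run_tool(broken_search, "query", handle_tool_error=lambda e: "Error: " + e.args[0]))
```

Calling `run_tool(broken_search, "query")` with the default `False` re-raises the exception, which mirrors the note that raising a `ToolException` alone does not keep the agent running.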

{

"cell_type": "code",

"execution_count": 9,

"id": "ad16fbcf",

"metadata": {},

"outputs": [],

"source": [

2023-07-15 14:25:34 +00:00

"from langchain.tools.base import ToolException\n",

"\n",

"from langchain import SerpAPIWrapper\n",

"from langchain.agents import AgentType, initialize_agent\n",

"from langchain.chat_models import ChatOpenAI\n",

"from langchain.tools import Tool\n",

"\n",

2023-06-16 18:52:56 +00:00

"\n",

"def _handle_error(error: ToolException) -> str:\n",

" return (\n",

" \"The following errors occurred during tool execution:\"\n",

" + error.args[0]\n",

" + \"Please try another tool.\"\n",

" )\n",

"\n",

"\n",

"def search_tool1(s: str):\n",

" raise ToolException(\"The search tool1 is not available.\")\n",

"\n",

"\n",

"def search_tool2(s: str):\n",

" raise ToolException(\"The search tool2 is not available.\")\n",

"\n",

"\n",

"search_tool3 = SerpAPIWrapper()"

]

},

{

"cell_type": "code",

"execution_count": 10,

"id": "c05aa75b",

"metadata": {},

"outputs": [],

"source": [

2023-06-16 18:52:56 +00:00

"description = \"useful for when you need to answer questions about current events. You should give priority to using it.\"\n",

"tools = [\n",

" Tool.from_function(\n",

" func=search_tool1,\n",

" name=\"Search_tool1\",\n",

" description=description,\n",

" handle_tool_error=True,\n",

" ),\n",

" Tool.from_function(\n",

" func=search_tool2,\n",

" name=\"Search_tool2\",\n",

" description=description,\n",

" handle_tool_error=_handle_error,\n",

" ),\n",

" Tool.from_function(\n",

" func=search_tool3.run,\n",

" name=\"Search_tool3\",\n",

" description=\"useful for when you need to answer questions about current events\",\n",

" ),\n",

"]\n",

"\n",

"agent = initialize_agent(\n",

" tools,\n",

" ChatOpenAI(temperature=0),\n",

" agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,\n",

" verbose=True,\n",

2023-06-16 18:52:56 +00:00

")"

]

},

{

"cell_type": "code",

"execution_count": 15,

"id": "cff8b4b5",

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"\n",

"\n",

"\u001b[1m> Entering new AgentExecutor chain...\u001b[0m\n",

"\u001b[32;1m\u001b[1;3mI should use Search_tool1 to find recent news articles about Leo DiCaprio's personal life.\n",

"Action: Search_tool1\n",

"Action Input: \"Leo DiCaprio girlfriend\"\u001b[0m\n",

"Observation: \u001b[31;1m\u001b[1;3mThe search tool1 is not available.\u001b[0m\n",

"Thought:\u001b[32;1m\u001b[1;3mI should try using Search_tool2 instead.\n",

"Action: Search_tool2\n",

"Action Input: \"Leo DiCaprio girlfriend\"\u001b[0m\n",

"Observation: \u001b[31;1m\u001b[1;3mThe following errors occurred during tool execution:The search tool2 is not available.Please try another tool.\u001b[0m\n",

"Thought:\u001b[32;1m\u001b[1;3mI should try using Search_tool3 as a last resort.\n",

"Action: Search_tool3\n",

"Action Input: \"Leo DiCaprio girlfriend\"\u001b[0m\n",

"Observation: \u001b[38;5;200m\u001b[1;3mLeonardo DiCaprio and Gigi Hadid were recently spotted at a pre-Oscars party, sparking interest once again in their rumored romance. The Revenant actor and the model first made headlines when they were spotted together at a New York Fashion Week afterparty in September 2022.\u001b[0m\n",

"Thought:\u001b[32;1m\u001b[1;3mBased on the information from Search_tool3, it seems that Gigi Hadid is currently rumored to be Leo DiCaprio's girlfriend.\n",

"Final Answer: Gigi Hadid is currently rumored to be Leo DiCaprio's girlfriend.\u001b[0m\n",

"\n",

"\u001b[1m> Finished chain.\u001b[0m\n"

]

},

{

"data": {

"text/plain": [

"\"Gigi Hadid is currently rumored to be Leo DiCaprio's girlfriend.\""

]

},

"execution_count": 15,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"agent.run(\"Who is Leo DiCaprio's girlfriend?\")"

]

2022-12-20 01:32:45 +00:00

}

],

"metadata": {

"kernelspec": {

2023-01-06 14:40:32 +00:00

"display_name": "Python 3 (ipykernel)",

2022-12-20 01:32:45 +00:00

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.11.3"

Docs refactor (#480)

Big docs refactor! Motivation is to make it easier for people to find

resources they are looking for. To accomplish this, there are now three

main sections:

- Getting Started: steps for getting started, walking through most core

functionality

- Modules: these are different modules of functionality that langchain

provides. Each part here has a "getting started", "how to", "key

concepts" and "reference" section (except in a few select cases where it

didnt easily fit).

- Use Cases: this is to separate use cases (like summarization, question

answering, evaluation, etc) from the modules, and provide a different

entry point to the code base.

There is also a full reference section, as well as extra resources

(glossary, gallery, etc)

Co-authored-by: Shreya Rajpal <ShreyaR@users.noreply.github.com>

2023-01-02 16:24:09 +00:00

},

"vscode": {

"interpreter": {

2023-01-29 02:26:24 +00:00

"hash": "e90c8aa204a57276aa905271aff2d11799d0acb3547adabc5892e639a5e45e34"

}

2022-12-20 01:32:45 +00:00

}

},

"nbformat": 4,

"nbformat_minor": 5

}