FileCallbackHandler (#5589)

# like [StdOutCallbackHandler](https://github.com/hwchase17/langchain/blob/master/langchain/callbacks/stdout.py), but writes to a file

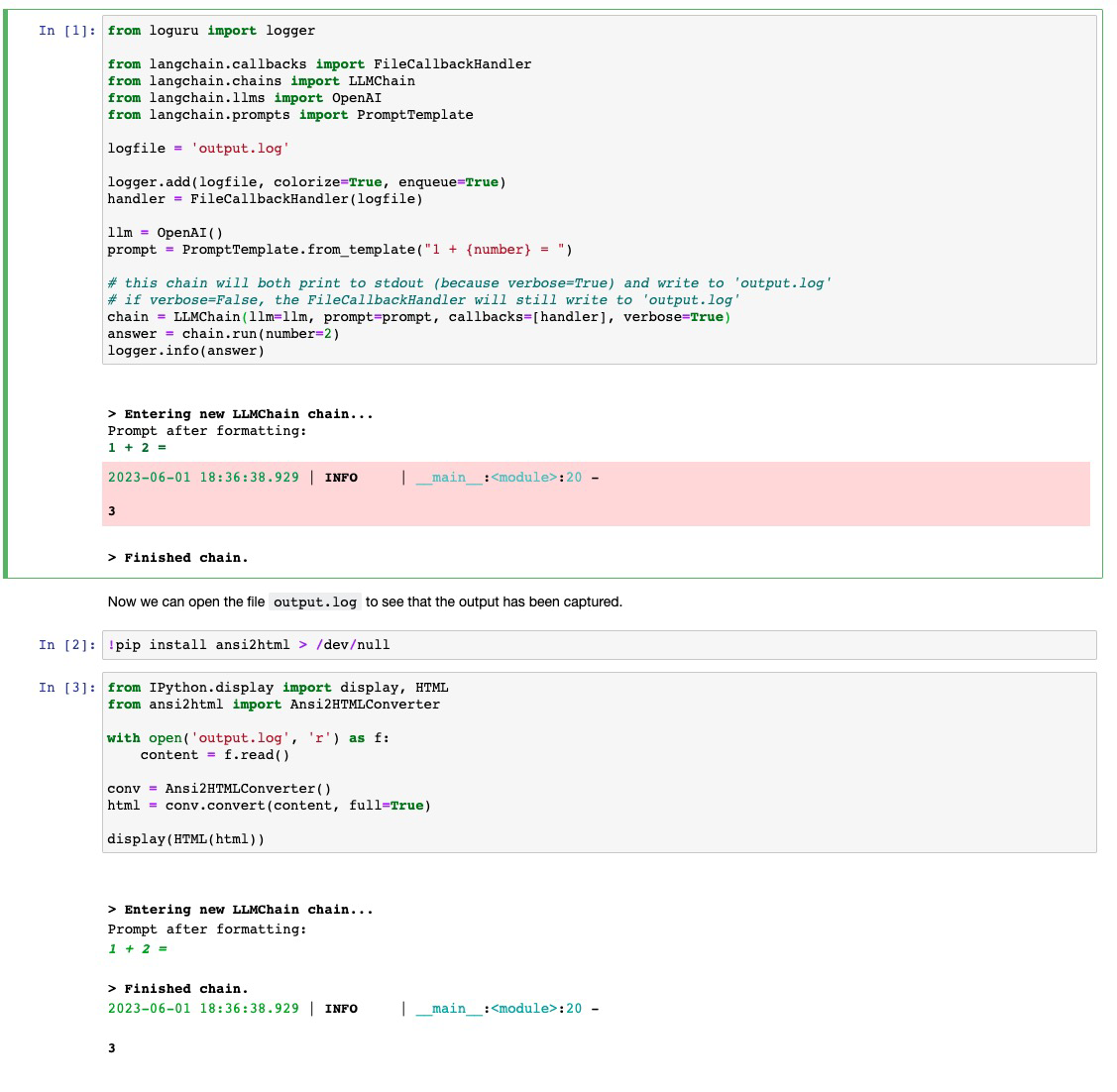

When running experiments, I have found myself wanting to log the outputs of my chains in a more lightweight way than using WandB tracing. This PR contributes a callback handler that writes to a file what `StdOutCallbackHandler` would print.
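To make the idea concrete, here is a minimal sketch of a handler that appends chain events to a file. It is an illustration under stated assumptions, not the implementation in this PR: `SketchFileHandler` and `demo` are hypothetical names, the class does not subclass any LangChain base class, and the method names only mirror the kind of callback hooks a chain would invoke.

```python
import os
import tempfile
from typing import Any, Dict


class SketchFileHandler:
    """Hypothetical stand-in for a file-writing callback handler.

    It is deliberately independent of LangChain so it runs on its own.
    """

    def __init__(self, filename: str, mode: str = "a") -> None:
        # Keep the file open for the handler's lifetime so each event appends.
        self.file = open(filename, mode, encoding="utf-8")

    def on_chain_start(
        self, serialized: Dict[str, Any], inputs: Dict[str, Any], **kwargs: Any
    ) -> None:
        # Assumption: the chain's display name is available under "name".
        name = serialized.get("name", "<unknown>")
        self.file.write(f"\n\n> Entering new {name} chain...\n")
        self.file.flush()

    def on_text(self, text: str, **kwargs: Any) -> None:
        # Write intermediate text (for example, the formatted prompt).
        self.file.write(text + "\n")
        self.file.flush()

    def on_chain_end(self, outputs: Dict[str, Any], **kwargs: Any) -> None:
        self.file.write("\n> Finished chain.\n")
        self.file.flush()

    def close(self) -> None:
        self.file.close()


def demo() -> str:
    """Drive the handler by hand and return what ended up in the file."""
    path = os.path.join(tempfile.mkdtemp(), "output.log")
    handler = SketchFileHandler(path)
    handler.on_chain_start({"name": "LLMChain"}, {"number": 2})
    handler.on_text("Prompt after formatting:\n1 + 2 = ")
    handler.on_chain_end({"text": "3"})
    handler.close()
    with open(path, encoding="utf-8") as f:
        return f.read()
```

Flushing after every event keeps the log readable while a long experiment is still running, at the cost of more frequent writes.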

## Example Notebook

See the included `filecallbackhandler.ipynb` notebook for usage. Would it be better to include this notebook under `modules/callbacks` or under `integrations/`?

## Who can review?

Community members can review the PR once tests pass. Tag maintainers/contributors who might be interested:

@agola11

2023-06-03 23:48:48 +00:00

{

"cells": [

{

"cell_type": "markdown",

"id": "63b87b91",

"metadata": {},

"source": [

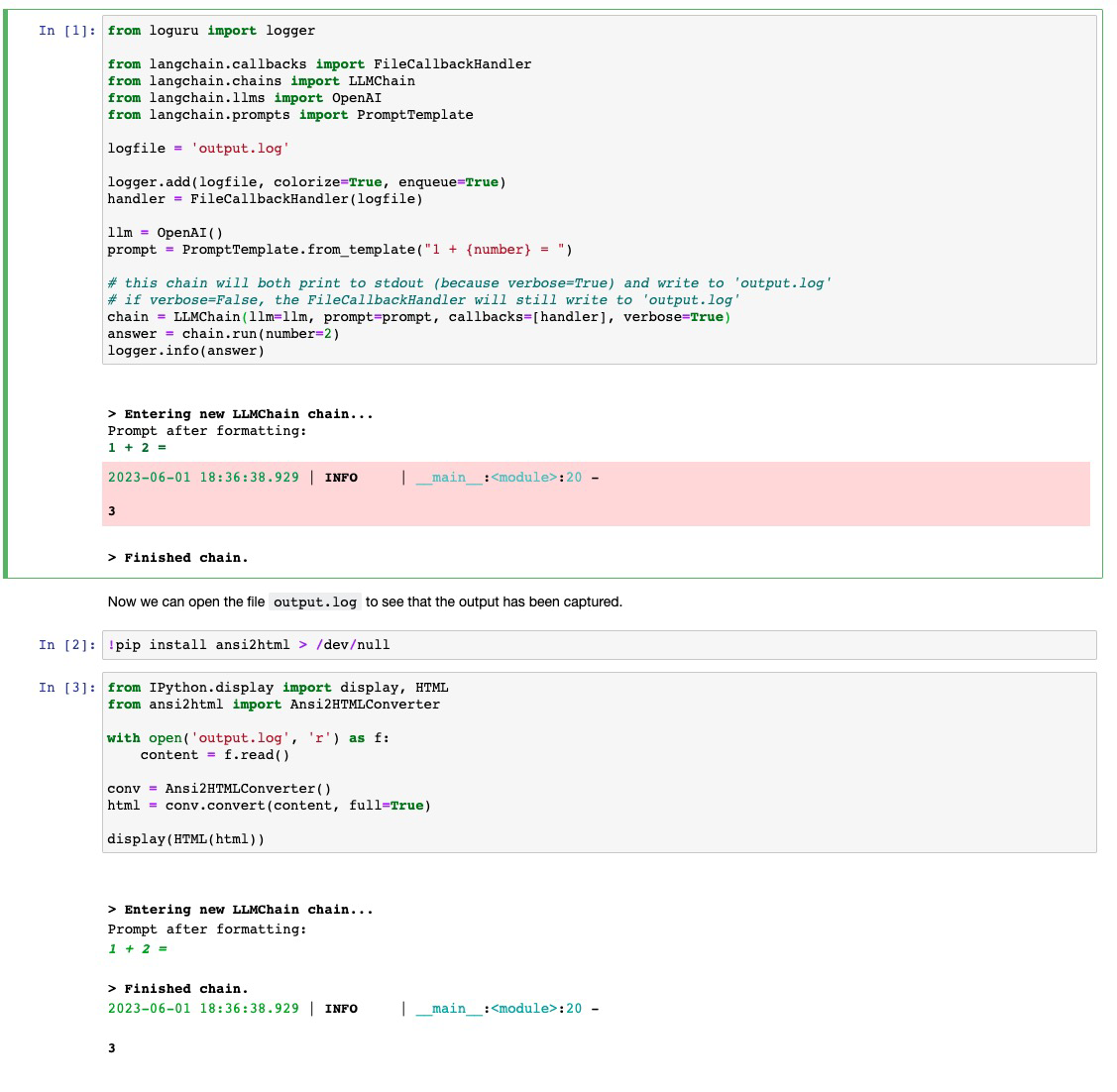

"# Logging to file\n",

"This example shows how to write logs to a file. It uses the `FileCallbackHandler`, which does the same thing as [`StdOutCallbackHandler`](https://python.langchain.com/en/latest/modules/callbacks/getting_started.html#using-an-existing-handler) but writes the output to a file instead. It also uses the `loguru` library to log other outputs that are not captured by the handler."

]

},

{

"cell_type": "code",

"execution_count": 1,

"id": "6cb156cc",

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"\n",

"\n",

"\u001b[1m> Entering new LLMChain chain...\u001b[0m\n",

"Prompt after formatting:\n",

"\u001b[32;1m\u001b[1;3m1 + 2 = \u001b[0m\n"

]

},

{

"name": "stderr",

"output_type": "stream",

"text": [

"\u001b[32m2023-06-01 18:36:38.929\u001b[0m | \u001b[1mINFO \u001b[0m | \u001b[36m__main__\u001b[0m:\u001b[36m<module>\u001b[0m:\u001b[36m20\u001b[0m - \u001b[1m\n",

"\n",

"3\u001b[0m\n"

]

},

{

"name": "stdout",

"output_type": "stream",

"text": [

"\n",

"\u001b[1m> Finished chain.\u001b[0m\n"

]

}

],

"source": [

"from loguru import logger\n",

"\n",

"from langchain.callbacks import FileCallbackHandler\n",

"from langchain.chains import LLMChain\n",

"from langchain.llms import OpenAI\n",

"from langchain.prompts import PromptTemplate\n",

"\n",

2023-06-16 18:52:56 +00:00

"logfile = \"output.log\"\n",

"\n",

"logger.add(logfile, colorize=True, enqueue=True)\n",

"handler = FileCallbackHandler(logfile)\n",

"\n",

"llm = OpenAI()\n",

"prompt = PromptTemplate.from_template(\"1 + {number} = \")\n",

"\n",

"# this chain will both print to stdout (because verbose=True) and write to 'output.log'\n",

"# if verbose=False, the FileCallbackHandler will still write to 'output.log'\n",

"chain = LLMChain(llm=llm, prompt=prompt, callbacks=[handler], verbose=True)\n",

"answer = chain.run(number=2)\n",

"logger.info(answer)"

]

},

{

"cell_type": "markdown",

"id": "9c50d54f",

"metadata": {},

"source": [

"Now we can open the file `output.log` to see that the output has been captured."

]

},

{

"cell_type": "code",

"execution_count": 2,

"id": "aa32dc0a",

"metadata": {},

"outputs": [],

"source": [

"!pip install ansi2html > /dev/null"

]

},

{

"cell_type": "code",

"execution_count": 3,

"id": "4af00719",

"metadata": {},

"outputs": [

{

"data": {

"text/html": [

"<!DOCTYPE HTML PUBLIC \"-//W3C//DTD HTML 4.01 Transitional//EN\" \"http://www.w3.org/TR/html4/loose.dtd\">\n",

"<html>\n",

"<head>\n",

"<meta http-equiv=\"Content-Type\" content=\"text/html; charset=utf-8\">\n",

"<title></title>\n",

"<style type=\"text/css\">\n",

".ansi2html-content { display: inline; white-space: pre-wrap; word-wrap: break-word; }\n",

".body_foreground { color: #AAAAAA; }\n",

".body_background { background-color: #000000; }\n",

".inv_foreground { color: #000000; }\n",

".inv_background { background-color: #AAAAAA; }\n",

".ansi1 { font-weight: bold; }\n",

".ansi3 { font-style: italic; }\n",

".ansi32 { color: #00aa00; }\n",

".ansi36 { color: #00aaaa; }\n",

"</style>\n",

"</head>\n",

"<body class=\"body_foreground body_background\" style=\"font-size: normal;\" >\n",

"<pre class=\"ansi2html-content\">\n",

"\n",

"\n",

"<span class=\"ansi1\">> Entering new LLMChain chain...</span>\n",

"Prompt after formatting:\n",

"<span class=\"ansi1 ansi32\"></span><span class=\"ansi1 ansi3 ansi32\">1 + 2 = </span>\n",

"\n",

"<span class=\"ansi1\">> Finished chain.</span>\n",

"<span class=\"ansi32\">2023-06-01 18:36:38.929</span> | <span class=\"ansi1\">INFO </span> | <span class=\"ansi36\">__main__</span>:<span class=\"ansi36\">&lt;module&gt;</span>:<span class=\"ansi36\">20</span> - <span class=\"ansi1\">\n",

"\n",

"3</span>\n",

"\n",

"</pre>\n",

"</body>\n",

"\n",

"</html>\n"

],

"text/plain": [

"<IPython.core.display.HTML object>"

]

},

"metadata": {},

"output_type": "display_data"

}

],

"source": [

"from IPython.display import display, HTML\n",

"from ansi2html import Ansi2HTMLConverter\n",

"\n",

"with open(\"output.log\", \"r\") as f:\n",

" content = f.read()\n",

"\n",

"conv = Ansi2HTMLConverter()\n",

"html = conv.convert(content, full=True)\n",

"\n",

"display(HTML(html))"

]

}

],

"metadata": {

"kernelspec": {

"display_name": "Python 3 (ipykernel)",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.9.16"

}

},

"nbformat": 4,

"nbformat_minor": 5

}
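The notebook renders the colored log with `ansi2html`. When only plain text is needed, the ANSI color codes can be stripped with the standard library instead. The following is a small sketch under that assumption: `strip_ansi` and `ANSI_SGR` are hypothetical helper names, and the pattern only covers the color/style sequences that appear in the output above, not every possible escape sequence.

```python
import re

# Matches SGR color/style sequences such as "\x1b[1m" or "\x1b[32;1m".
ANSI_SGR = re.compile(r"\x1b\[[0-9;]*m")


def strip_ansi(text: str) -> str:
    """Return the text with ANSI color/style sequences removed."""
    return ANSI_SGR.sub("", text)


# Sample taken from the stdout captured in the notebook's first code cell.
sample = (
    "\x1b[1m> Entering new LLMChain chain...\x1b[0m\n"
    "Prompt after formatting:\n"
    "\x1b[32;1m\x1b[1;3m1 + 2 = \x1b[0m\n"
)
plain = strip_ansi(sample)
print(plain)
```

The same function can be applied to the contents of `output.log` after reading the file, which is convenient for searching the log with ordinary text tools.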